Trace Compass/News/NewIn20

Contents

- 1 Added Extension points

- 2 Integration with LTTng-Analyses

- 3 Data-driven pattern detection

- 4 Time graph view improvements

- 5 System call latency analysis

- 6 Critical flow view

- 7 Virtual CPU view

- 8 Bookmarks and custom markers

- 9 Resources view improvements

- 10 Control Flow view Improvements

- 11 Support of vertical zooming in time graph views

- 12 Kernel memory usage analysis

- 13 Linux Input/Output analysis and views

- 14 Manage XML analysis files

- 15 Display of analysis properties

- 16 Importing traces as experiment

- 17 Importing LTTng traces as experiment from the Control view

- 18 Events Table filtering UI improvement

- 19 Add support for unit of seconds in TmfTimestampFormat

- 20 Symbol provider for Call stack view

- 21 Per CPU thread 0

- 22 Speed-up import of large trace packages

- 23 Improve analysis requirement implementations

- 24 Pie charts in Statistics view

- 25 Support for LTTng 2.8+ Debug Info

- 26 LTTng Live trace reading support disabled

- 27 Bugs fixed in this release

- 28 Bugs fixed in the 2.0.1 release

Added Extension points

In this release 2 new extension points have been added:

- org.eclipse.tracecompass.tmf.core.analysis.ondemand

This extension point is used to provide on-demand analyses. Unlike regular analyses, on-demand analyses are only executed when the user explicitly requests them.

- org.eclipse.tracecompass.tmf.ui.symbolProvider

This extension point can be used to translate symbol addresses found inside a TmfTrace into human-readable text, for example function names (as used by the Call Stack view).

Integration with LTTng-Analyses

The LTTng-Analyses project provides analysis scripts that can run on LTTng kernel traces to provide various metrics and statistics. If the LTTng-Analyses scripts are installed on the system (and accessible on the PATH), it is now possible to execute them directly from Trace Compass.

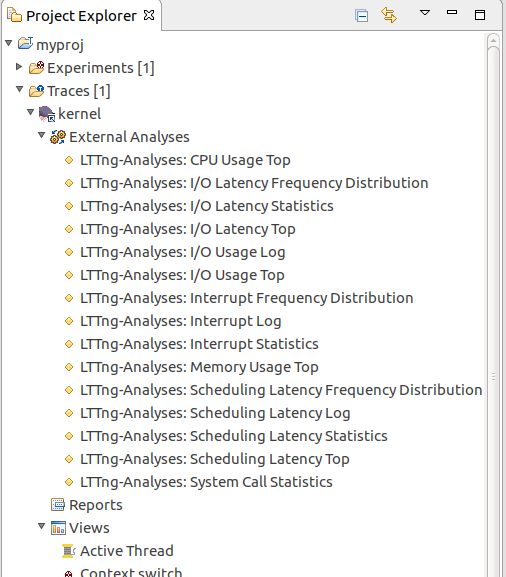

Project View additions

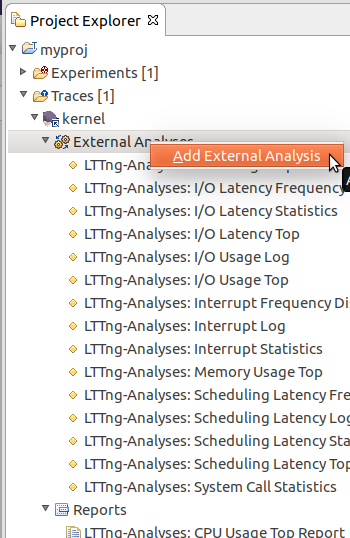

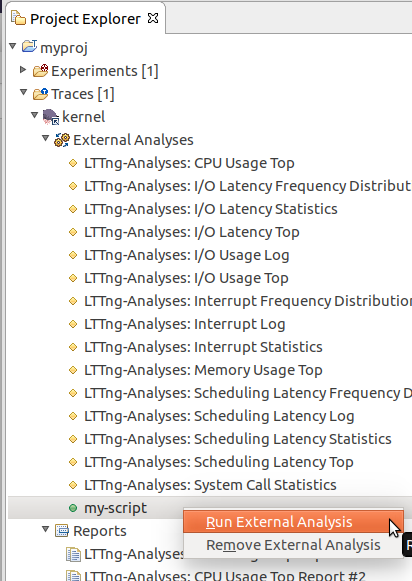

A new External Analyses tree item under the trace in the Project View will list the available analyses:

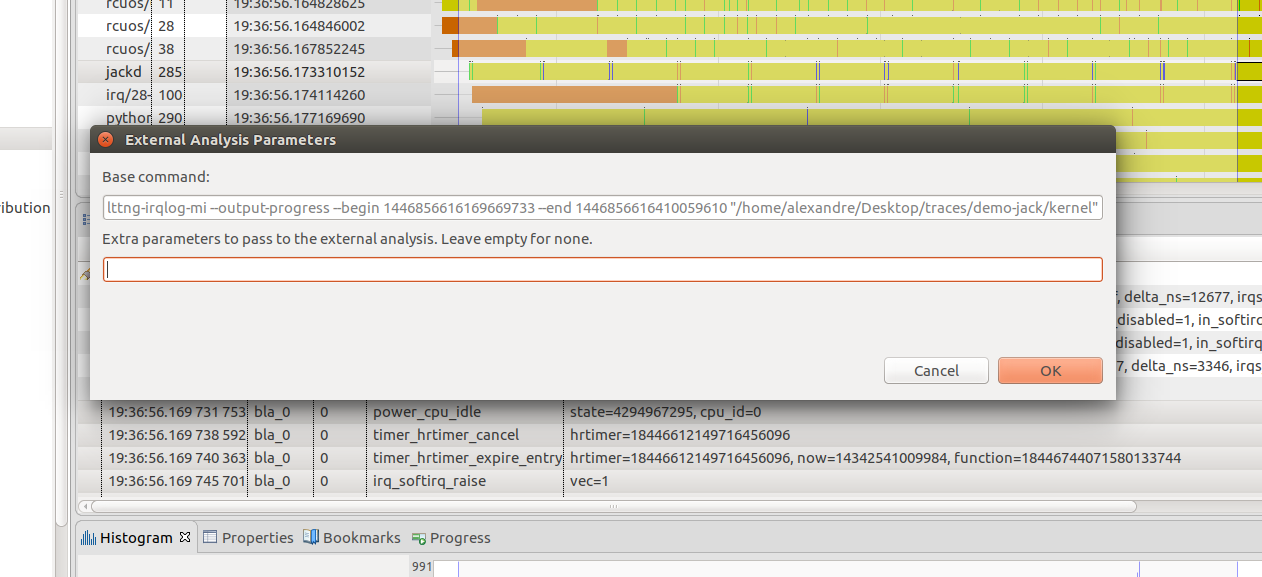

Running an analysis

Double-clicking, or right-clicking and choosing Run Analysis will bring up a dialog showing the command that is about to be executed. If a time range is selected in any of the time-aware views, the analysis will run only on this time range. If there is no time range selection, it will run on the whole trace.

The analysis execution dialog. We see in the background the selected time range, and the corresponding --begin and --end parameters passed to the script.

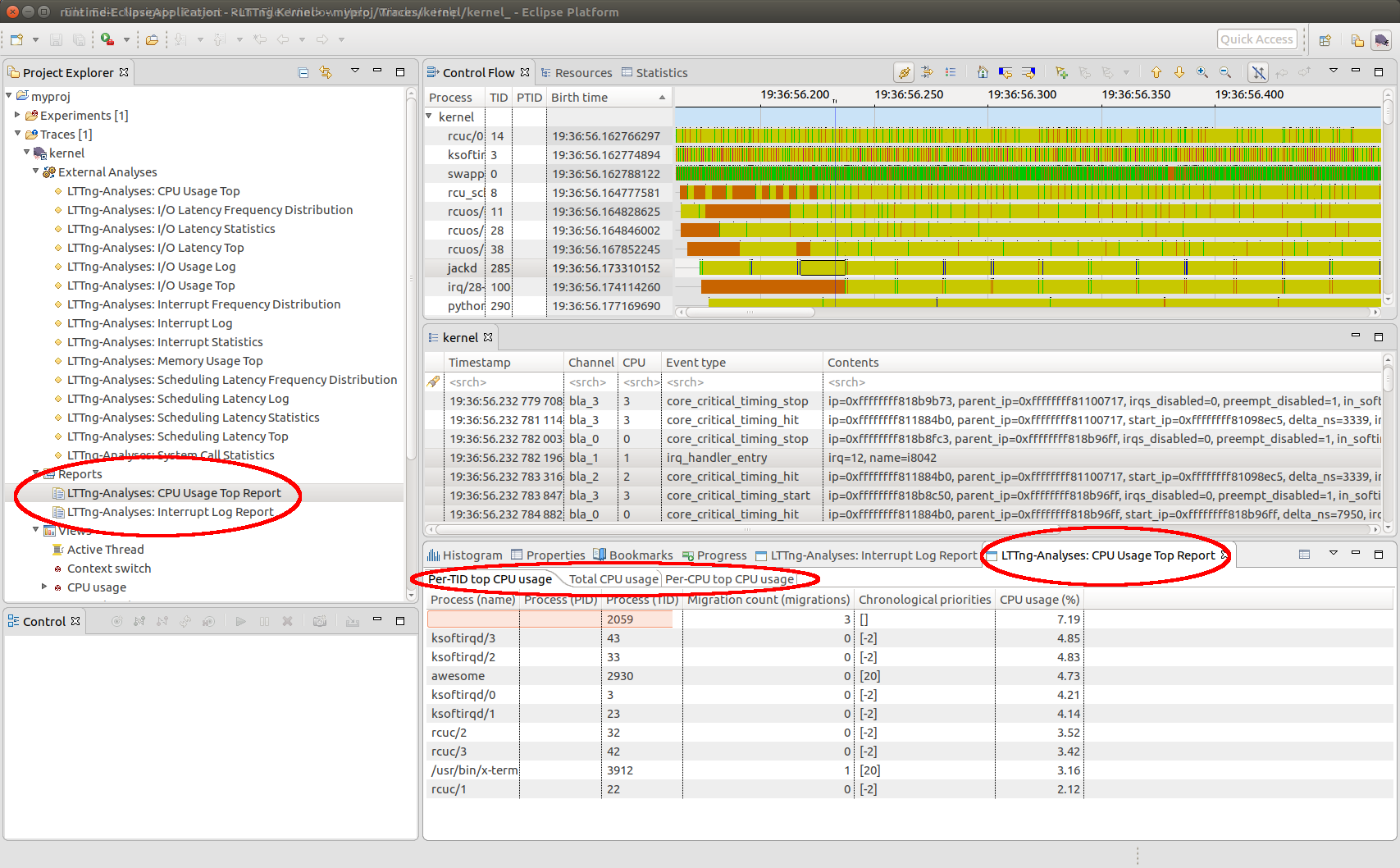
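
The rule described above (pass --begin and --end only when a time range is selected) can be sketched as follows. This is a minimal illustration, not the Trace Compass implementation: the function name, the script name `my-analysis`, the use of integer nanosecond timestamps and the position of the trace path in the command are all assumptions.

```python
from typing import List, Optional


def build_analysis_command(
    script: str,
    trace_path: str,
    begin_ns: Optional[int] = None,
    end_ns: Optional[int] = None,
) -> List[str]:
    """Build the argument list for one external analysis run (hypothetical helper)."""
    command = [script]
    # Restrict the run only when both ends of a time range are known;
    # otherwise the analysis covers the whole trace.
    if begin_ns is not None and end_ns is not None:
        if end_ns < begin_ns:
            # A selection dragged right-to-left still describes a valid range.
            begin_ns, end_ns = end_ns, begin_ns
        command += ["--begin", str(begin_ns), "--end", str(end_ns)]
    command.append(trace_path)
    return command
```

Calling `build_analysis_command("my-analysis", "/tmp/trace", 10, 20)` yields a command that contains both range parameters, while omitting the timestamps yields a command for the whole trace.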

Result reports

Upon completion, a report containing the results will be opened, and placed under the Reports sub-tree item in the Project View. From there they can be re-opened or deleted. Each subsequent run of an analysis generates a new report.

One report can contain one or more result tables, representing the output of the analysis.

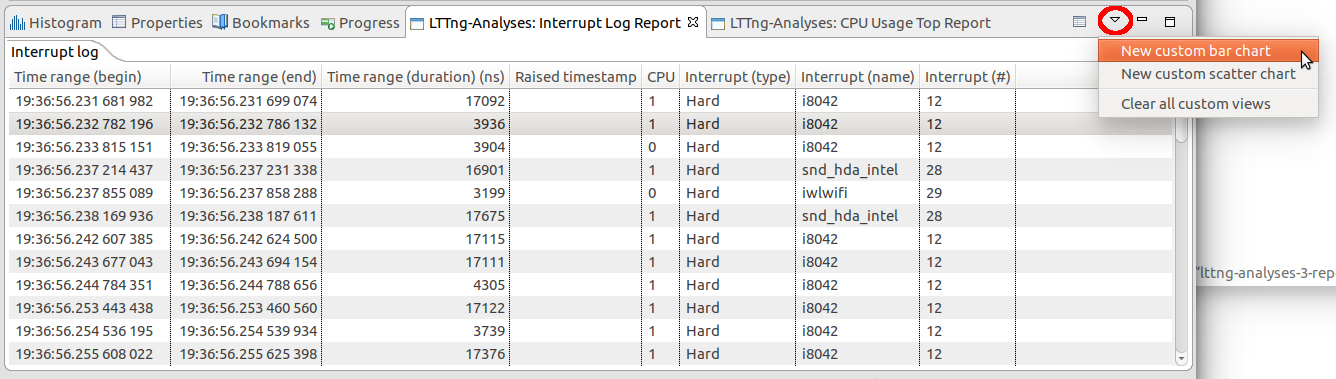

Creating custom charts

The result tables can carry a lot of information, so it is often more meaningful to display this information as charts. The view menu has options to create such charts:

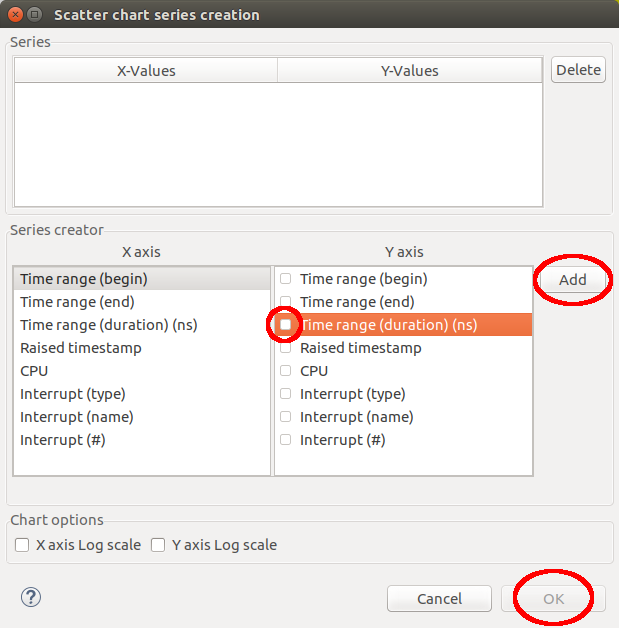

This action will bring up the chart configuration dialog, where the user can decide which columns should be used for the X and Y axis of each series:

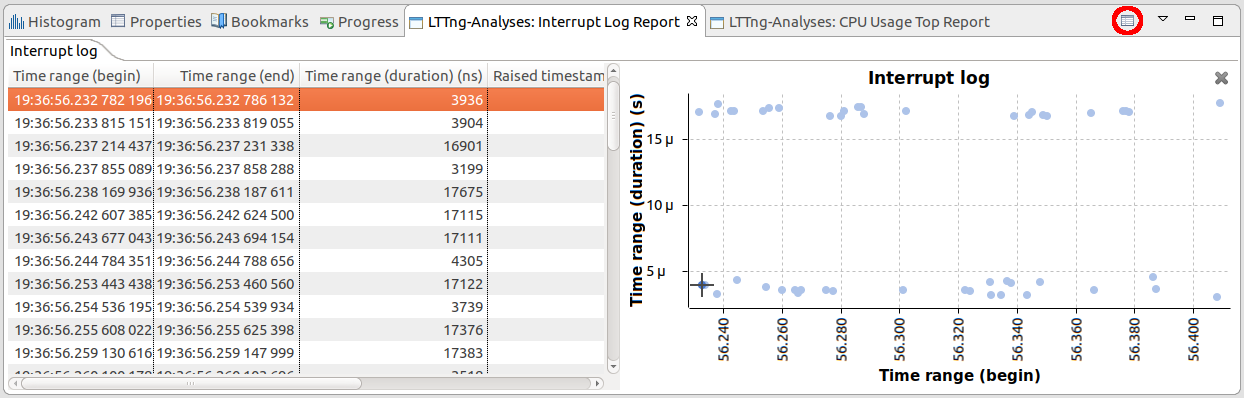

This will insert the corresponding chart next to the table in the view. The indicated button can be used to hide the result table, to give more space to the chart itself.

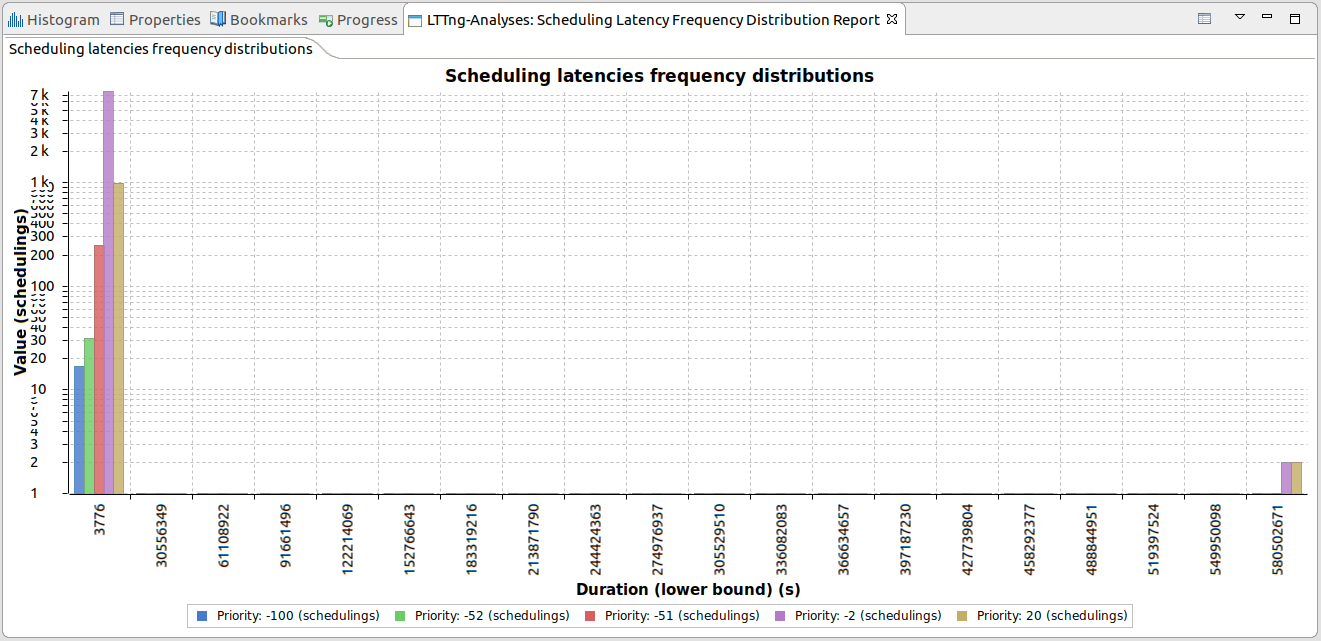

Bar charts can be created the same way:

Chart of the "Scheduling Latency Frequency Distribution" analysis. We can see some scheduling latencies on the right took much more time than the rest of them.

Importing custom analyses

The LTTng-Analyses mentioned above communicate with Trace Compass using the documented LAMI protocol. Any script implementing this protocol can be imported by the user and launched the same way.

Right-clicking on the External Analysis tree element brings up the option to import a new external analysis:

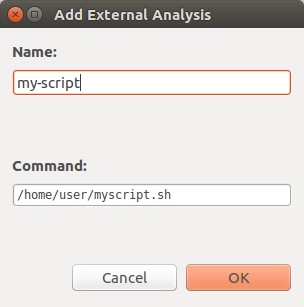

Clicking this option will open the script configuration dialog, where the user has to provide a name and the command line for the script:

If the script is found, and it correctly implements the LAMI protocol (i.e. responds to the --metadata command, among other things), it will be added and made available in the list of external analyses:

From there, it can be executed like any other built-in script, and the same features (reports, charts, etc.) are available.
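
The acceptance check described above (the script is found and responds to --metadata) could be approximated as shown here. This is only a sketch: it assumes the metadata is printed as JSON on standard output, it does not validate the metadata content against the LAMI specification, and the function name and timeout are made up for illustration.

```python
import json
import shutil
import subprocess
from typing import List


def responds_to_metadata(command: List[str], timeout_s: float = 10.0) -> bool:
    """Return True if the command is found and prints parseable JSON for --metadata."""
    if not command or shutil.which(command[0]) is None:
        return False  # executable not found (neither on the PATH nor as a path)
    try:
        result = subprocess.run(
            command + ["--metadata"],
            capture_output=True,
            text=True,
            timeout=timeout_s,
            check=False,
        )
    except (OSError, subprocess.TimeoutExpired):
        return False
    if result.returncode != 0:
        return False
    try:
        # Assumption: the metadata is a JSON document; its fields are not checked here.
        json.loads(result.stdout)
    except json.JSONDecodeError:
        return False
    return True
```

A real importer would also need to inspect the metadata fields; this sketch only covers the "found and answers" part of the check.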

Data-driven pattern detection

The data-driven analysis and view support of Trace Compass has been extended with data-driven pattern analysis. With this, it is possible to define a pattern matching algorithm and populate Timing Analysis views (similar to the views described in the System call latency table section). Other types of latency or timing analyses can now be defined using this approach. For more information about this feature, refer to the Trace Compass User Guide.

Time graph view improvements

Gridlines in time graph views

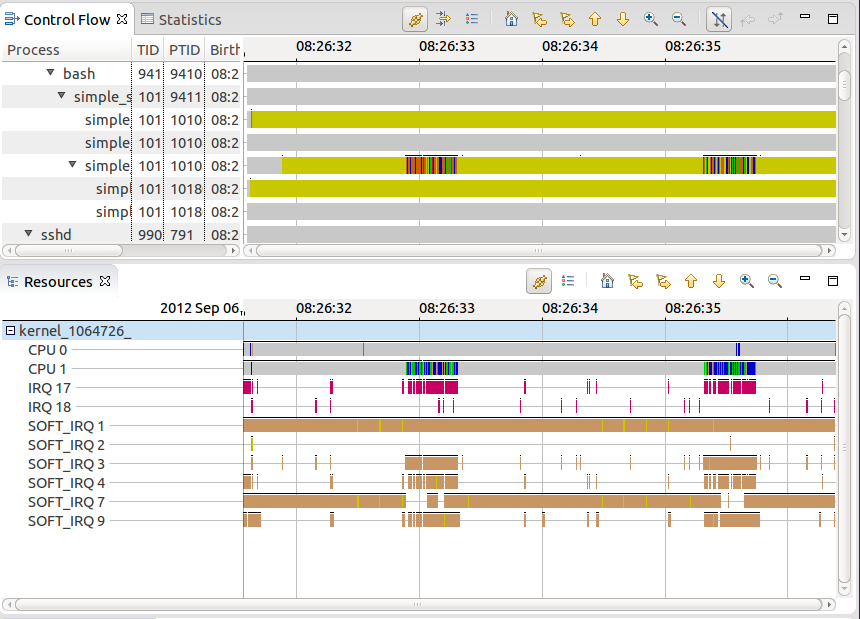

Grid lines have been added to the time graph views such as the Control Flow view.

Persist settings for open traces

All views based on the time graph widgets (e.g. Control Flow view) now persist the current row selection (e.g. threads), filters and sort order (where applicable) for all open traces. Switching traces won't erase the settings.

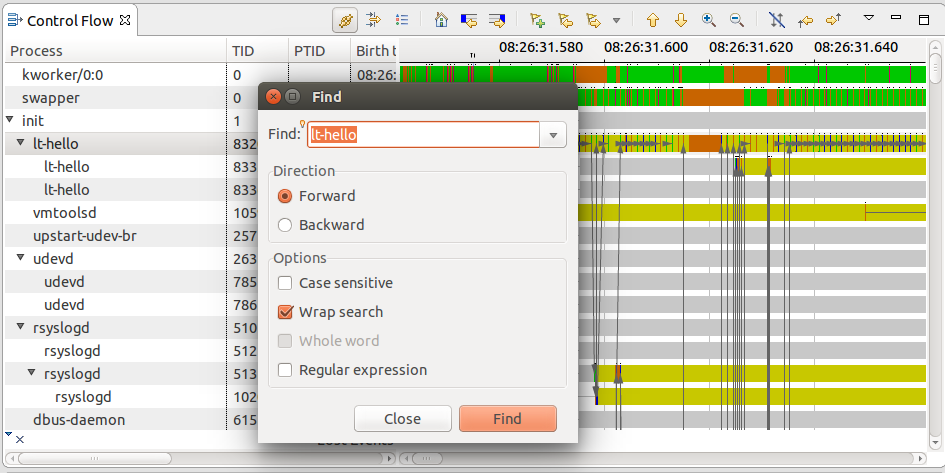

Support for searching within time graph views

It is now possible to search in views based on the time graph widget using the CTRL+F keyboard shortcut. For example, users can search for a process, TID, PTID, etc. within the Control Flow view, or for a CPU, IRQ, etc. within the Resources view.

Horizontal scrolling using Shift+MouseWheel

It is possible to scroll horizontally within time graph views using Shift+MouseWheel on the horizontal scroll bar and within the time graph area.

Bookmarks and custom markers in time graph views

See the section Bookmarks and custom markers.

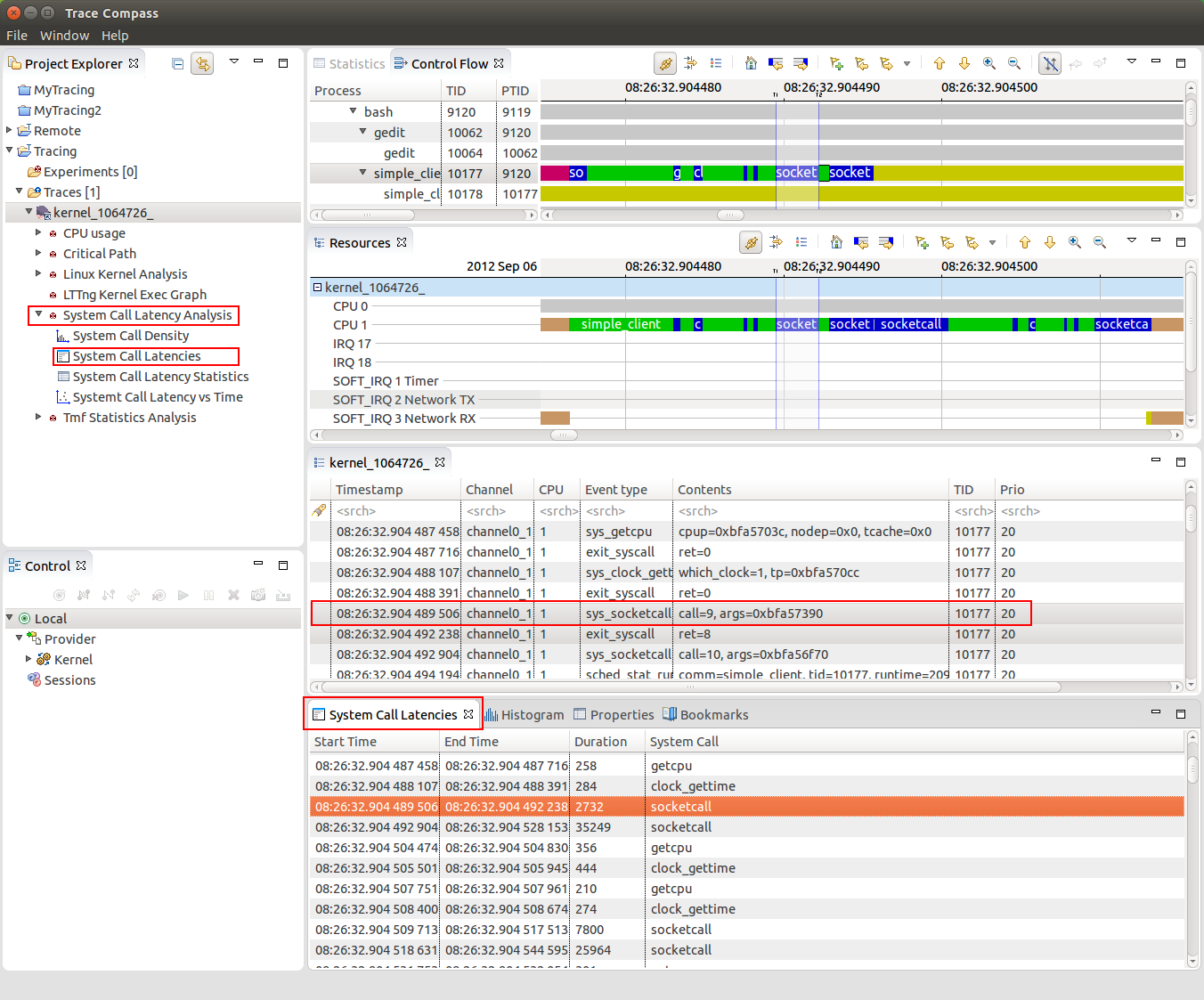

System call latency analysis

The System Call Latency Analysis has been added for analyzing system call latencies in the Linux Kernel using LTTng kernel traces. Various views now display the results of the analysis in different ways.

System call latency table

The System Call Latency table lists, per system call type, all latencies that occurred within the currently open trace.

System call latency scatter graph

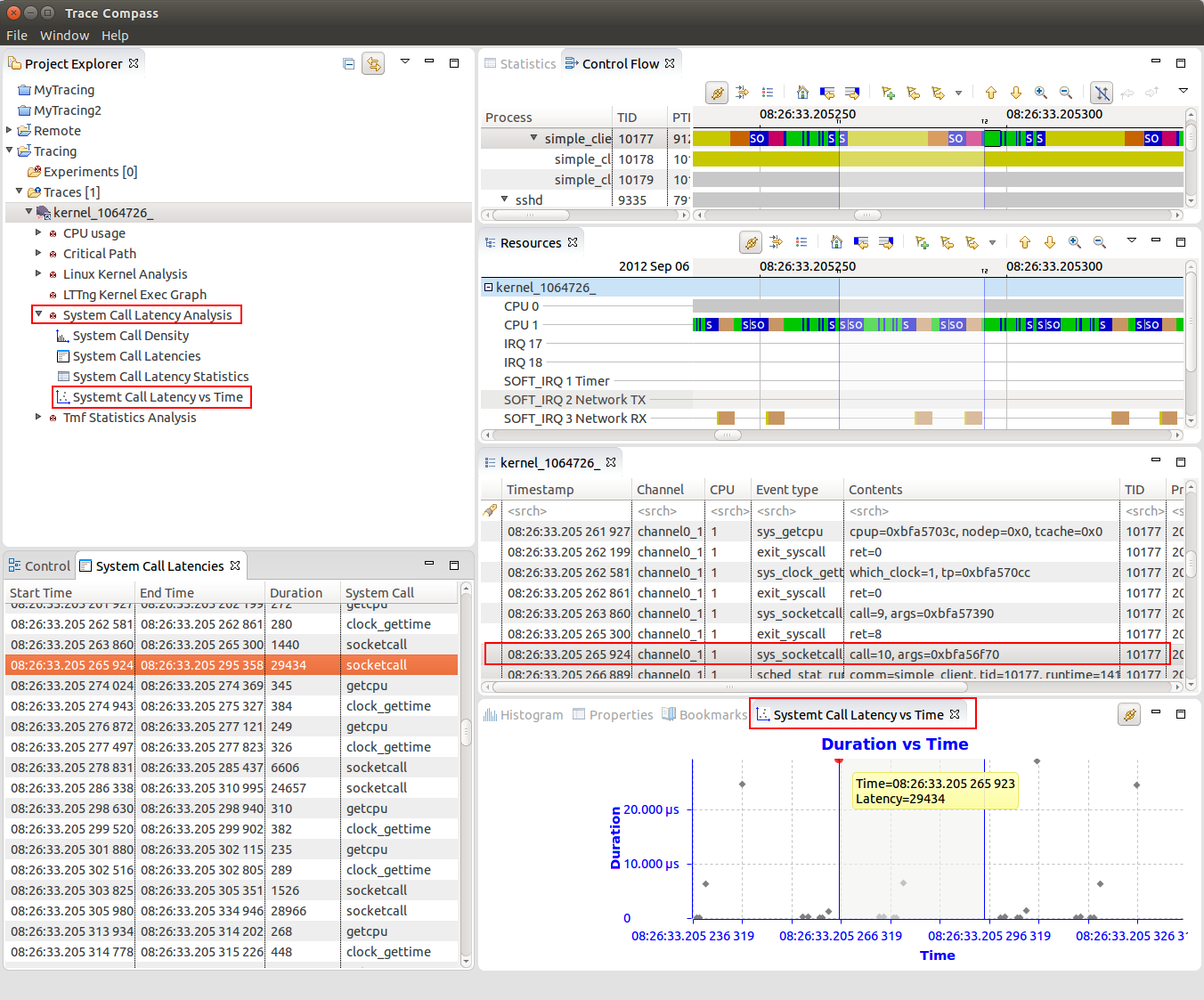

The System Call Latency vs Time view visualizes system call latencies in the Linux Kernel over time.

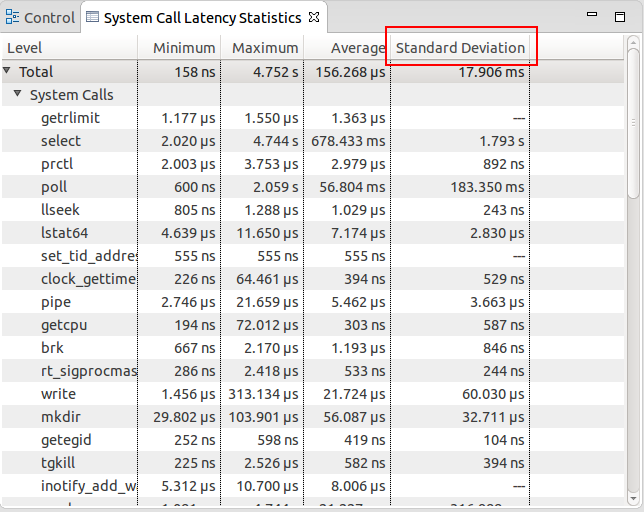

System call latency statistics

The System Call Latency Statistics view shows statistics about system call latencies. It lists the minimum, maximum and average latency as well as the standard deviation for a given system call type.

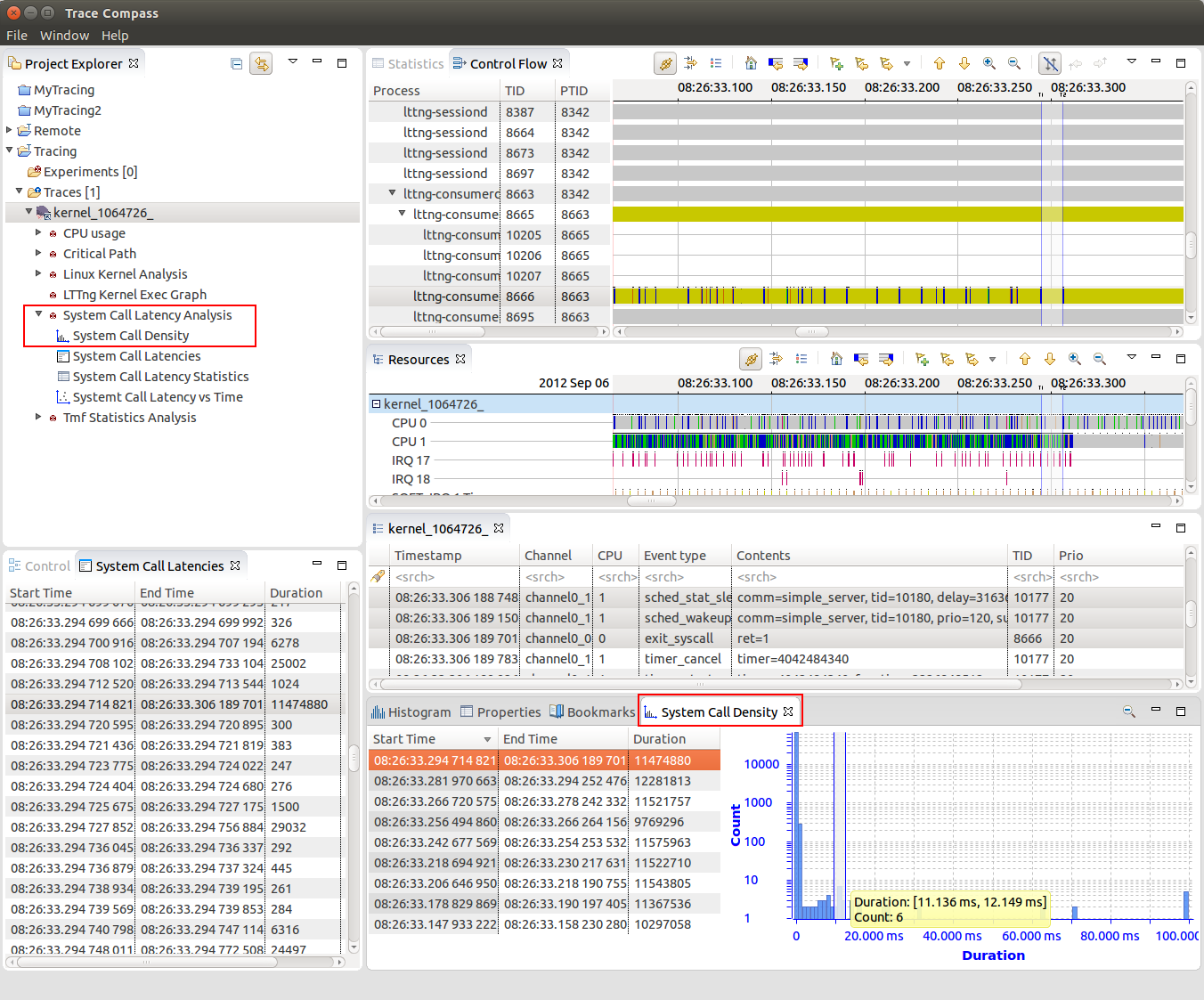
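
As an illustration of the per-type statistics listed by this view, the following sketch groups latency samples by system call name and computes the four values. It is not the Trace Compass implementation: the input shape (name, duration pairs) is hypothetical, and the use of the population standard deviation is an assumption, since the notes do not say which variant the view uses.

```python
import statistics
from collections import defaultdict
from typing import Dict, Iterable, List, NamedTuple, Tuple


class LatencyStats(NamedTuple):
    minimum: int
    maximum: int
    average: float
    std_dev: float


def latency_statistics(samples: Iterable[Tuple[str, int]]) -> Dict[str, LatencyStats]:
    """Compute min, max, average and standard deviation per system call type."""
    grouped: Dict[str, List[int]] = defaultdict(list)
    for name, duration in samples:
        grouped[name].append(duration)
    return {
        name: LatencyStats(
            minimum=min(values),
            maximum=max(values),
            average=statistics.fmean(values),
            # Assumption: population standard deviation (0.0 for a single sample).
            std_dev=statistics.pstdev(values),
        )
        for name, values in grouped.items()
    }
```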

System call latency density

The System Call Density view shows the distribution of the latencies for the currently selected time window of the trace. Right-clicking opens a context-sensitive menu with two menu items for navigating to the minimum or maximum duration.

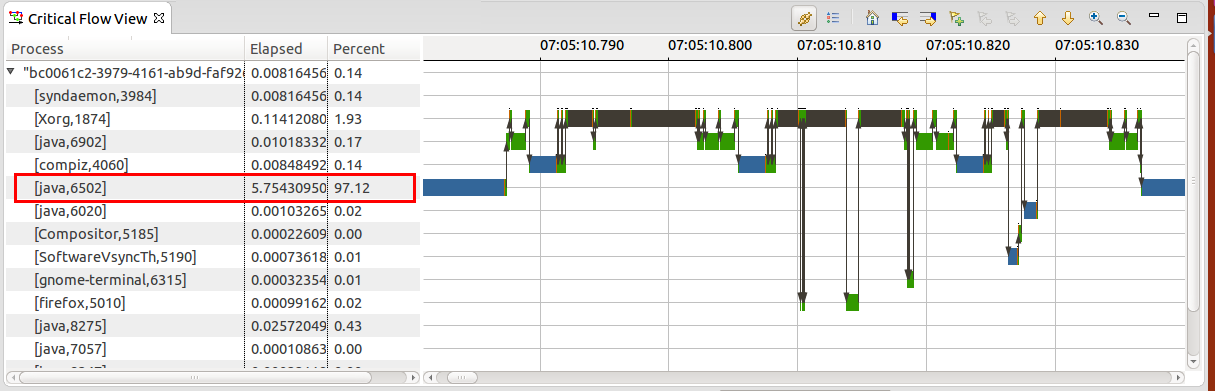

Critical flow view

A critical flow analysis and view have been added to show dependency chains for a given process. Process priority information is part of the state tooltip. This makes it possible to detect if a higher priority process was blocked by a lower priority process.

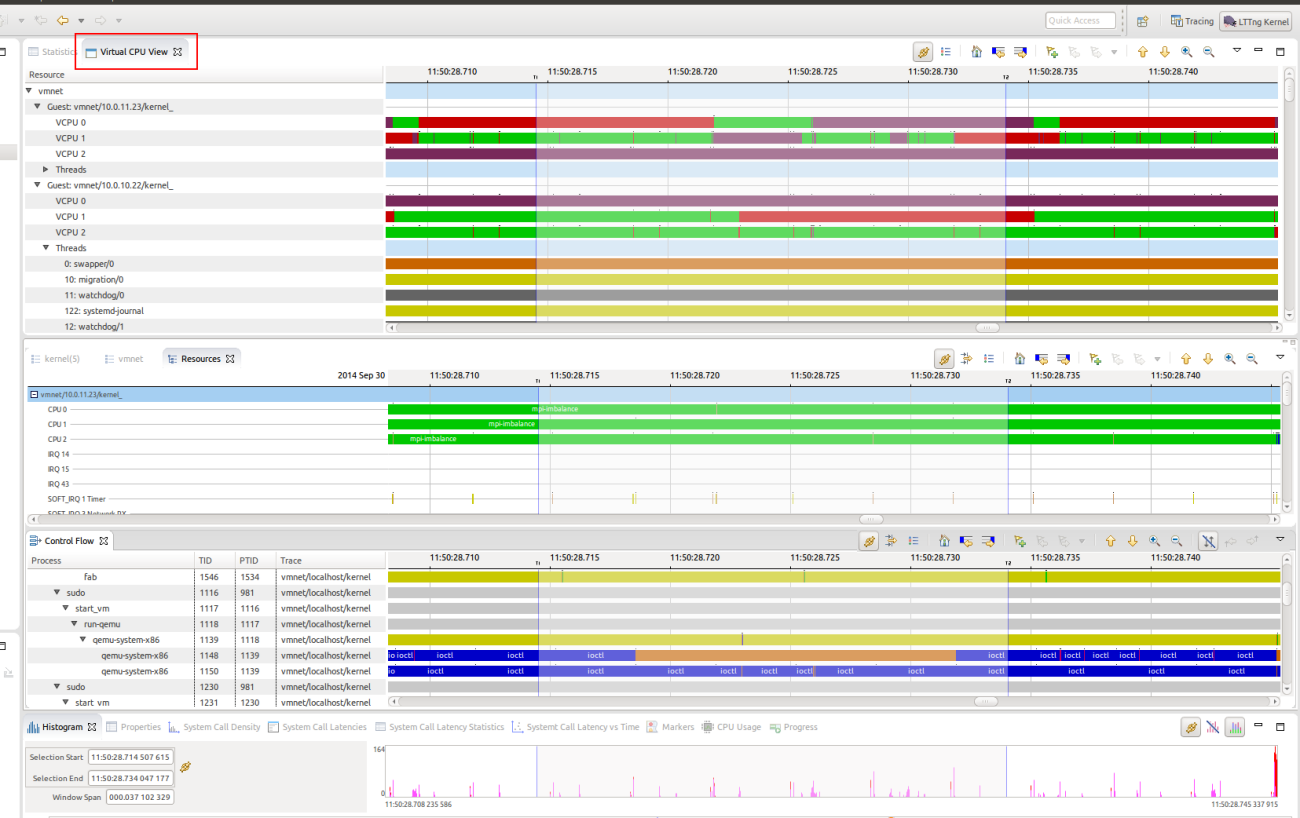

Virtual CPU view

To better understand what is happening in a virtualized environment, it is necessary to trace all the machines involved, guests and hosts, and to correlate this information in an experiment that displays a complete view of the virtualized environment. The Virtual CPU view has been added for that purpose.

To correlate data from the guest and host traces, each hypervisor supported by Trace Compass requires some specific events, which are sometimes not available in the default installation of the tracer. For QEMU/KVM, special LTTng kernel modules exist, which need to be installed on the traced system. For more information, please follow the instructions in the Trace Compass User Guide.

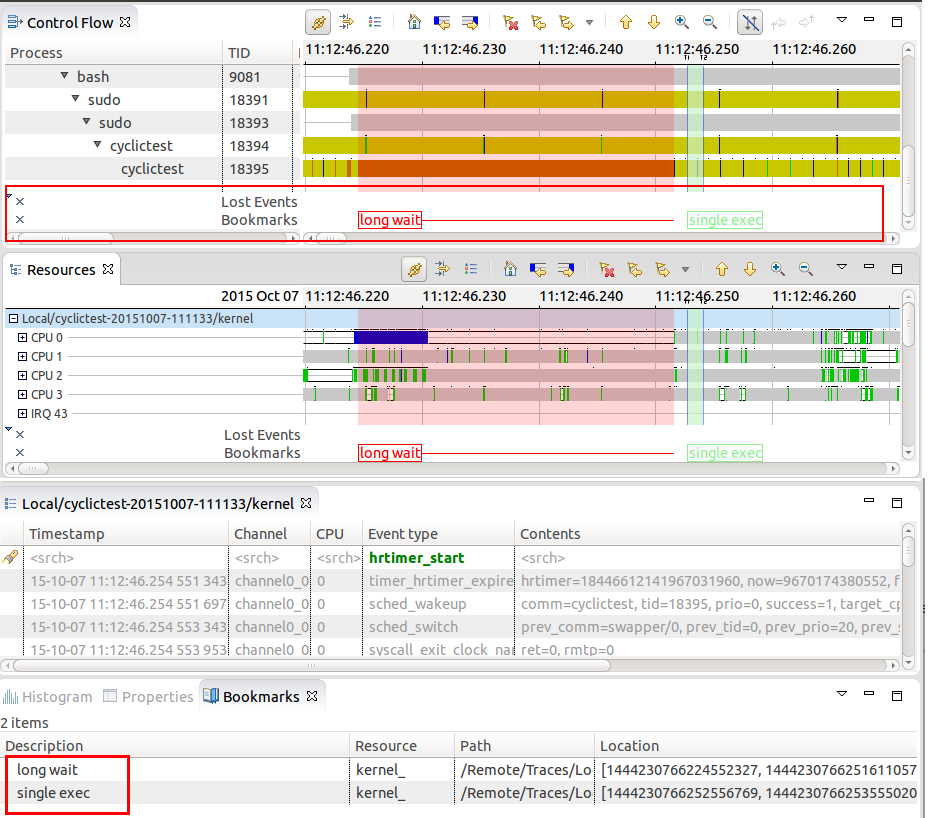

Bookmarks and custom markers

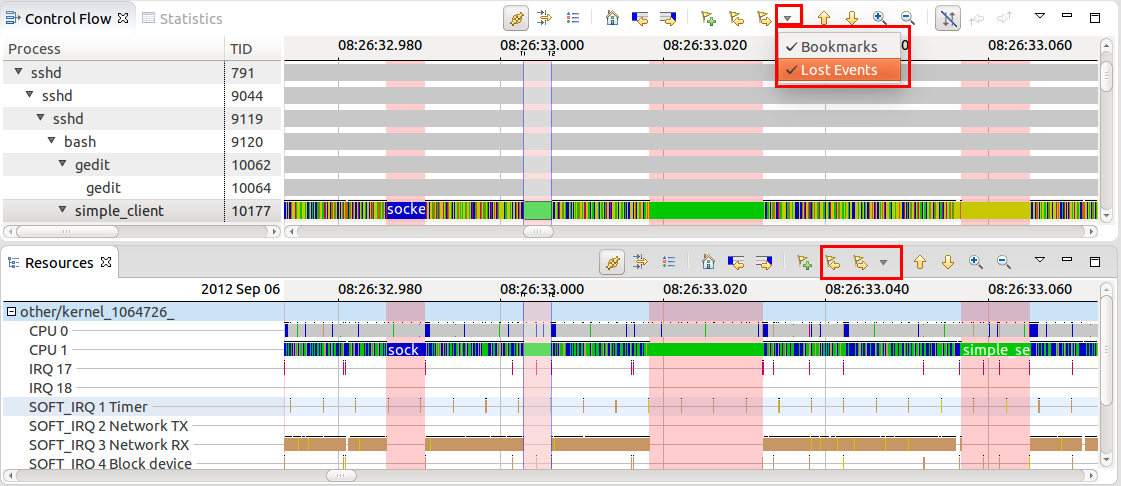

Support for user bookmarks in time graph views

It is now possible to create bookmarks in time graph views for a single time or a time range selection. This allows users to annotate their traces and easily navigate to regions of interest. The bookmarks are shown in the newly added marker axis at the bottom of the time graph views. It is possible to minimize the marker axis as well as to hide marker categories.

Lost event markers in time graph views

Time graph views such as the Control Flow or Resources view now highlight the durations where lost events occurred.

It is possible to navigate forward and backward from one marker to the next in time graph views, and to configure which marker types are included in the navigation. It is also possible to hide markers of a given type from the view menu.
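
The marker navigation described above can be pictured with the following sketch, which finds the next or previous marker relative to the current time while skipping marker types that are excluded from navigation. The `Marker` type, its fields and the category names are hypothetical and are not part of the Trace Compass API.

```python
from typing import Iterable, NamedTuple, Optional, Set


class Marker(NamedTuple):
    time: int       # marker start time (assumed to be in nanoseconds)
    category: str   # e.g. "Bookmarks" or "Lost Events" (illustrative names)


def next_marker(markers: Iterable[Marker], current_time: int, enabled: Set[str]) -> Optional[Marker]:
    """Return the earliest enabled marker strictly after current_time, if any."""
    candidates = [m for m in markers if m.category in enabled and m.time > current_time]
    return min(candidates, key=lambda m: m.time, default=None)


def previous_marker(markers: Iterable[Marker], current_time: int, enabled: Set[str]) -> Optional[Marker]:
    """Return the latest enabled marker strictly before current_time, if any."""
    candidates = [m for m in markers if m.category in enabled and m.time < current_time]
    return max(candidates, key=lambda m: m.time, default=None)
```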

API for trace specific markers

A new API is provided to define trace-specific markers programmatically. This can be used, for example, to visualize time periods in the time graph views.

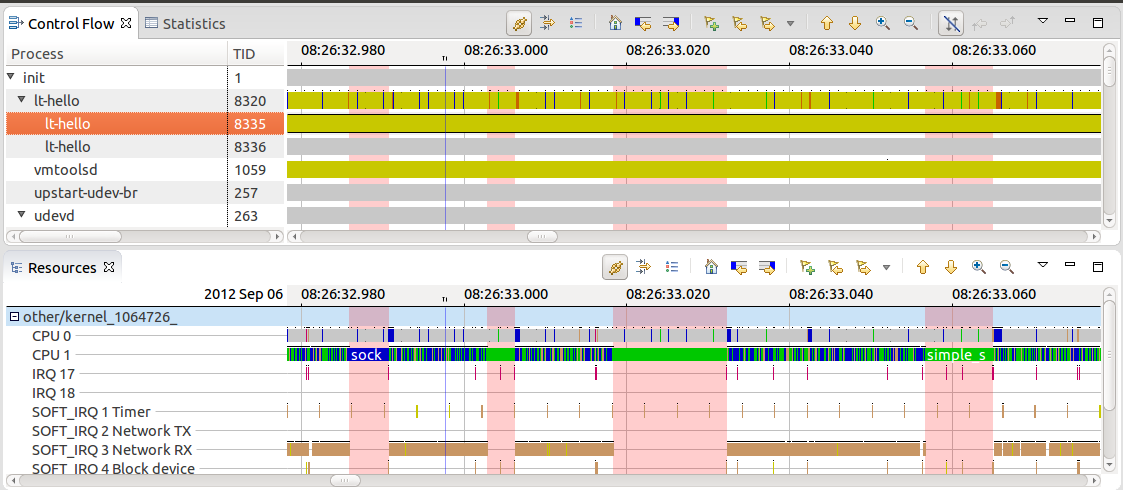

Resources view improvements

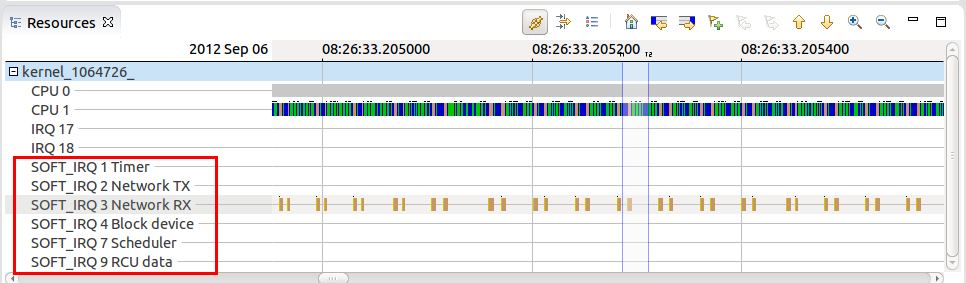

Display of soft IRQ names in the Resources view

The Resources view now displays the soft IRQ names together with the Soft IRQ numbers.

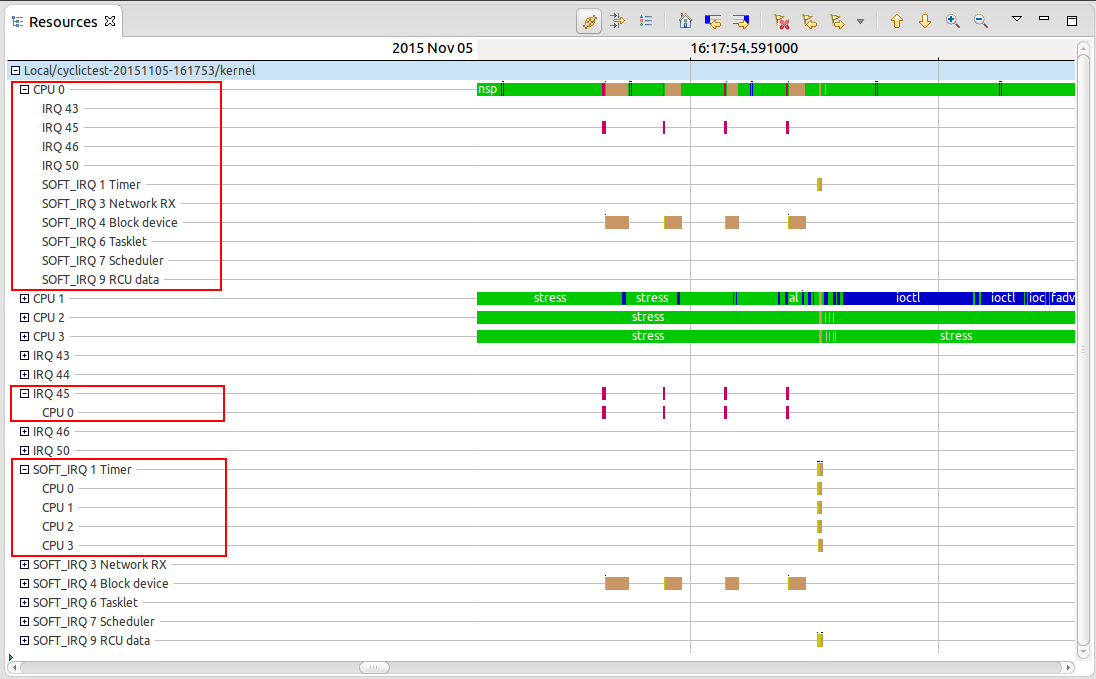

Execution context and state aggregation

The Resources view now shows the execution contexts and adds the CPU states under the IRQ and SoftIRQ entries.

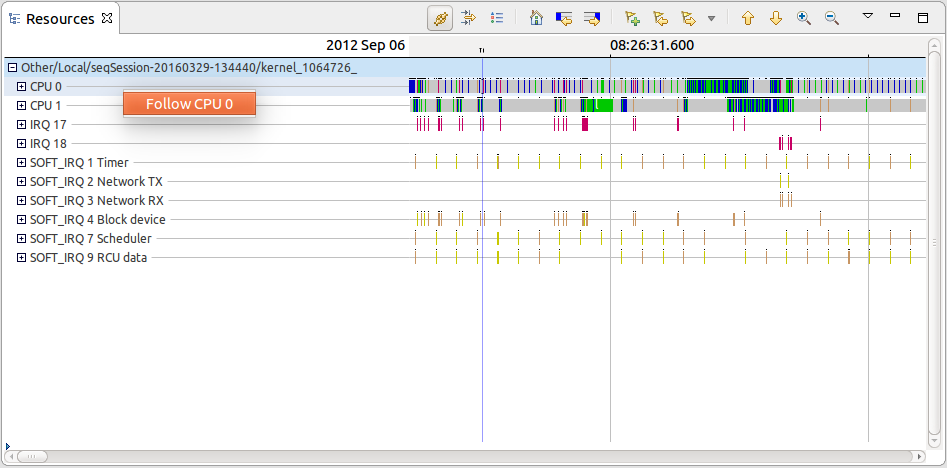

Following a single CPU across views

It is now possible to follow a single CPU from the Resources view using the context-sensitive menu. The CPU Usage view is the first view that uses this information to filter the CPU Usage per CPU.

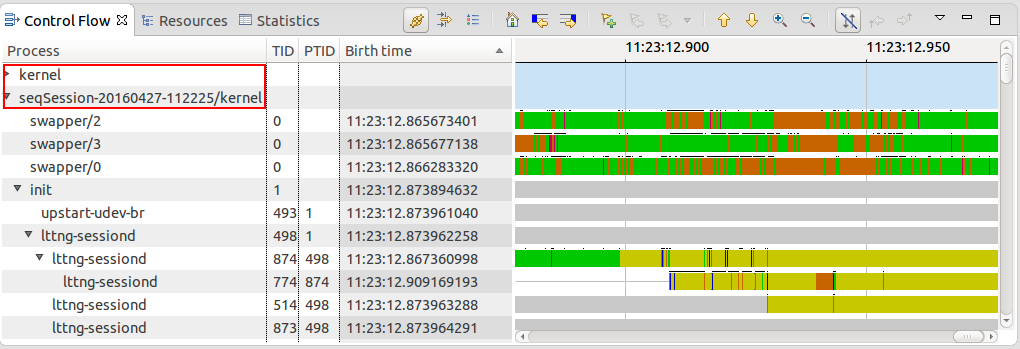

Control Flow view Improvements

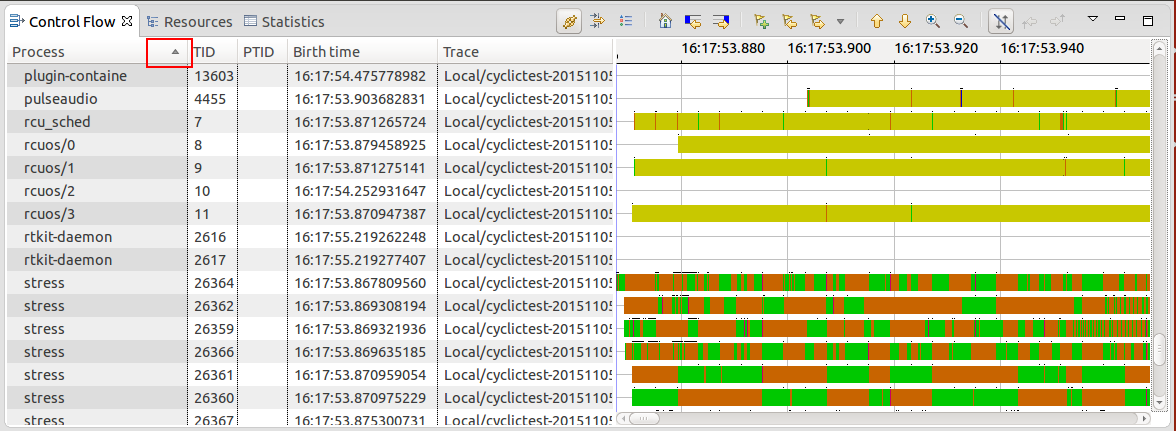

Support for sorting in Control Flow view

It is possible to sort the tree by a column by clicking on the column header. Clicking the header again changes the sort order. The hierarchy, i.e. the parent-child relationship, is kept. When opening a trace for the first time, the processes are sorted by birth time. The sort order and column are preserved when switching between open traces. Note that when opening an experiment, the processes are sorted within each trace.

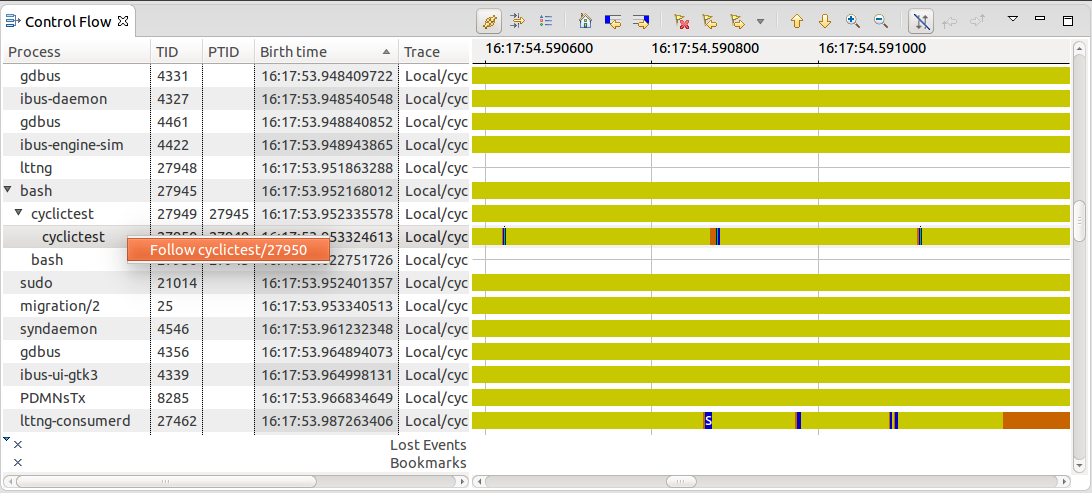
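
Sorting while keeping the parent-child relationship means that only siblings are reordered at each level of the tree. The sketch below illustrates that idea with a hypothetical `ThreadEntry` type; it is not the view's actual code, and the field names are assumptions.

```python
from dataclasses import dataclass, field
from typing import Any, Callable, List


@dataclass
class ThreadEntry:
    tid: int
    name: str
    birth_time: int
    children: List["ThreadEntry"] = field(default_factory=list)


def sort_entries(
    entries: List[ThreadEntry],
    key: Callable[[ThreadEntry], Any],
    reverse: bool = False,
) -> List[ThreadEntry]:
    """Sort siblings at every level; children always stay under their parent."""
    ordered = sorted(entries, key=key, reverse=reverse)
    for entry in ordered:
        entry.children = sort_entries(entry.children, key, reverse)
    return ordered
```

For example, `sort_entries(roots, key=lambda e: e.birth_time)` mirrors the default ordering by birth time, and passing `reverse=True` corresponds to clicking the header a second time.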

Following single thread across views

It is now possible to follow a single thread from the Control Flow view using the context-sensitive menu. The Critical Path view is the first view that uses this information to trigger its analysis for the selected thread.

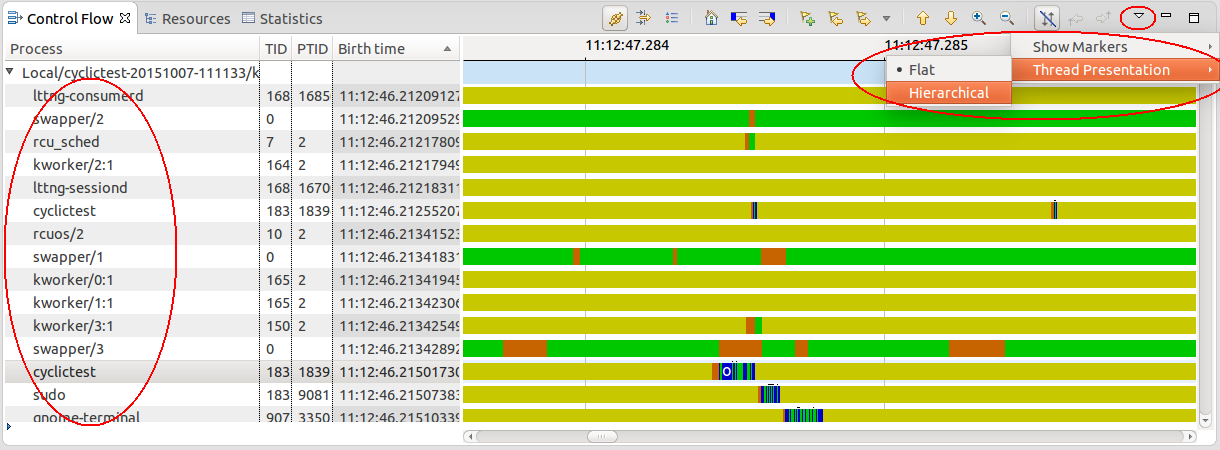

Grouping threads under a trace parent

The threads are now grouped under a trace tree node. The trace column has been removed.

Selecting flat or hierarchical thread presentation

It is now possible to switch between flat or hierarchical thread presentation per trace. For hierarchical presentation the threads are grouped under the parent thread. For the flat presentation no grouping is done.

Support of vertical zooming in time graph views

It is now possible to zoom vertically in all time graph views, for example Control Flow view or Resources view, using the key combination Ctrl+'+' or Ctrl+'-' or using the mouse with Shift+Ctrl+mouse wheel. The key combination Ctrl+'0' resets the vertical zoom to the default.

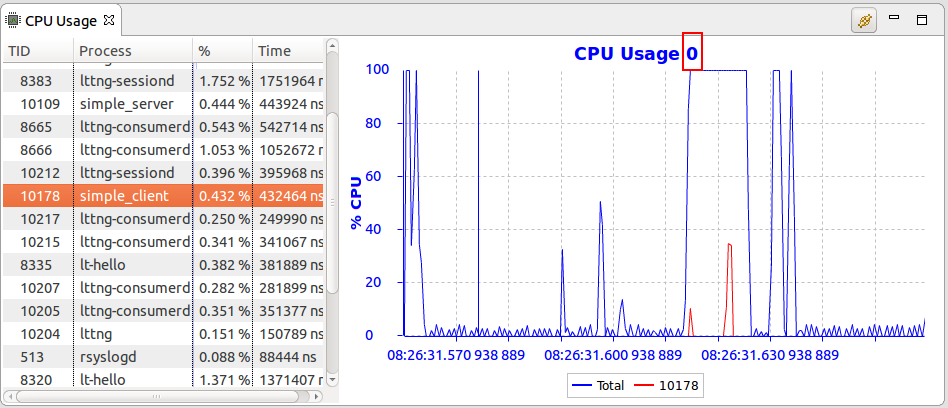

CPU Usage view improvements

Per CPU filtering in CPU Usage view

It is now possible to visualize the CPU usage per CPU in the view. The filtering can be triggered by using the Follow CPU menu item of the context-sensitive menu of the Resources view.

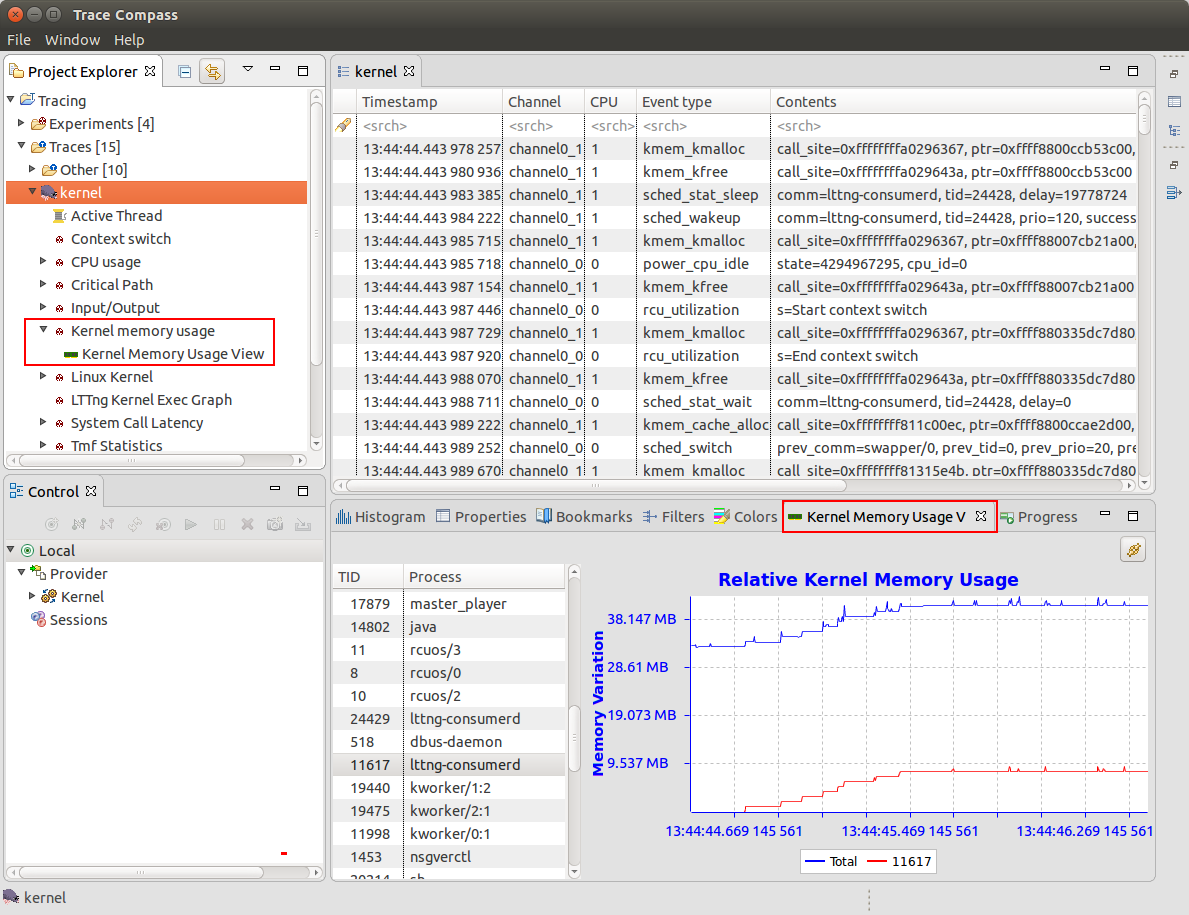

Kernel memory usage analysis

The Linux Kernel memory analysis and view have been added using LTTng Kernel traces. The Kernel Memory Usage view displays the relative Kernel memory usage over time.

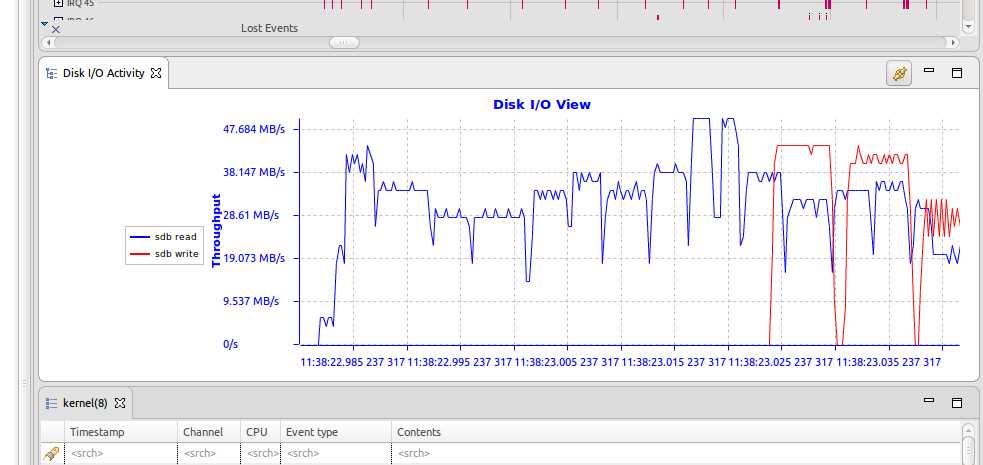

Linux Input/Output analysis and views

The Linux Kernel input/output analysis has been added using LTTng Kernel traces.

I/O Activity view

The I/O Activity view shows the disk I/O activity (throughput) over time.

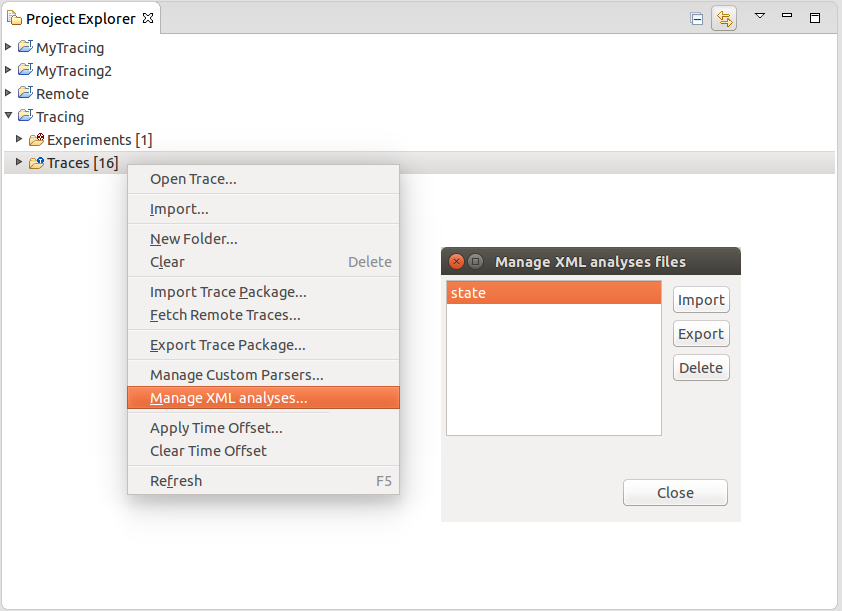

Manage XML analysis files

A dialog box has been added to manage XML analyses. This allows users to import, export and delete XML analysis files.

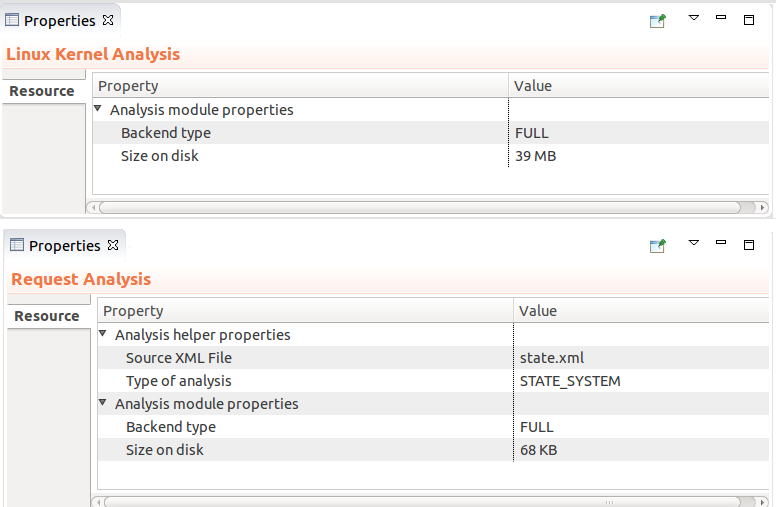

Display of analysis properties

The Properties view now displays analysis properties when selecting an analysis entry in the Project explorer.

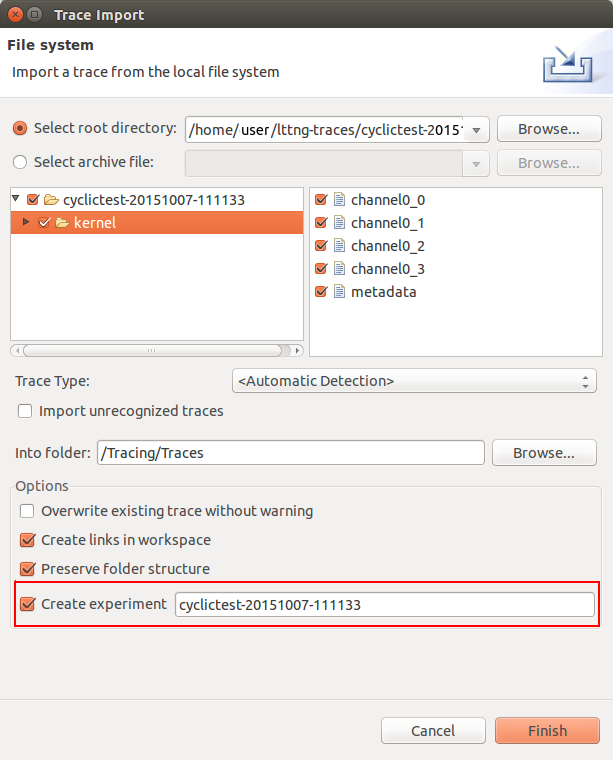

Importing traces as experiment

It is now possible to import traces and create an experiment with all imported traces using the Standard Import wizard.

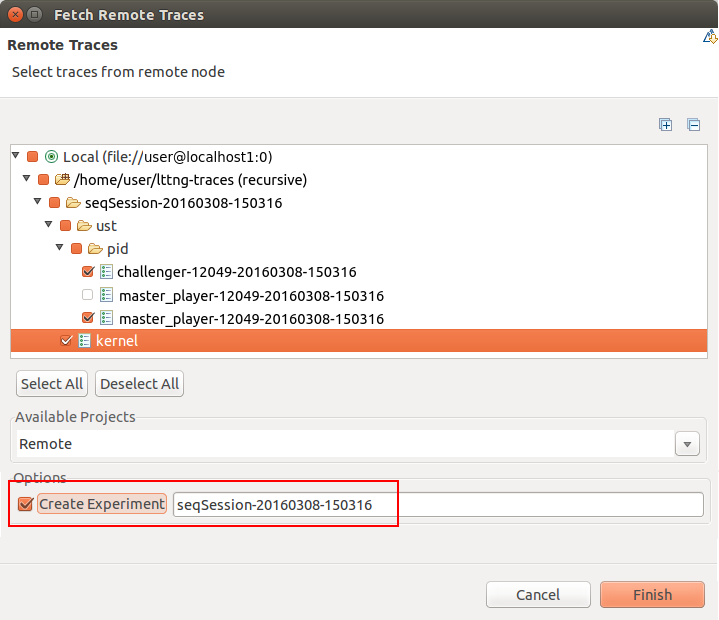

Importing LTTng traces as experiment from the Control view

It is now possible to import traces and create an experiment with all imported traces from the LTTng Control view.

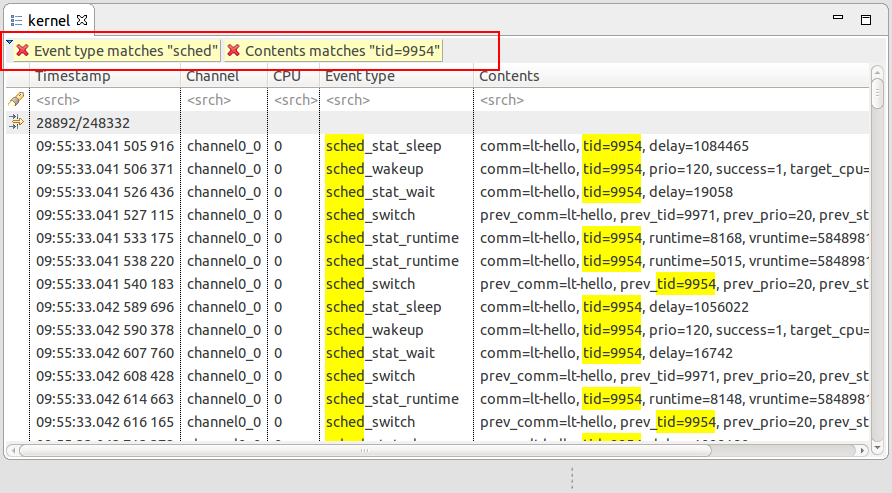

Events Table filtering UI improvement

It is now possible to create a filter from the search string using the CTRL+ENTER key combination. A new collapsible header will be shown for all active filters. Multiple cumulative filters can be added at the same time.

Add support for unit of seconds in TmfTimestampFormat

The T pattern can be augmented with a suffix to indicate the unit of seconds to be used by the format. The following patterns are supported: T (sec), Td (deci), Tc (centi), Tm (milli), Tu (micro), Tn (nano). Amongst other places it is useful when defining custom parsers that need a unit of seconds.
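
To make the unit suffixes concrete, the sketch below converts a value expressed in one of the listed units to nanoseconds. It only illustrates the unit arithmetic implied by the suffixes; it does not reproduce how TmfTimestampFormat parses or formats timestamps, and the table and function names are hypothetical.

```python
# Number of units per second for each pattern listed above.
UNITS_PER_SECOND = {
    "T": 1,                 # seconds
    "Td": 10,               # deciseconds
    "Tc": 100,              # centiseconds
    "Tm": 1_000,            # milliseconds
    "Tu": 1_000_000,        # microseconds
    "Tn": 1_000_000_000,    # nanoseconds
}

NANOS_PER_SECOND = 1_000_000_000


def to_nanoseconds(value: int, pattern: str) -> int:
    """Convert a value given in the unit named by `pattern` to nanoseconds."""
    try:
        units = UNITS_PER_SECOND[pattern]
    except KeyError:
        raise ValueError(f"unsupported pattern: {pattern!r}") from None
    return value * (NANOS_PER_SECOND // units)
```

A custom parser that reads the field `1500` with the `Tm` pattern would, under this arithmetic, interpret it as 1.5 seconds.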

Symbol provider for Call stack view

An extension point has been created to add a symbol provider for the Call Stack view. With this, Trace Compass extensions can provide their own symbol mapping implementation. For traces taken with LTTng-UST 2.8 or later, the Call Stack view shows the function names automatically, because it uses the debug information statedump events (which are enabled when using enable-event -u -a).
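
Conceptually, a symbol mapping resolves an address to the function whose start address is the closest one at or below it. The following sketch shows that lookup with a sorted table and binary search. It is a generic illustration under that assumption, not the interface defined by the extension point, and the class and method names are hypothetical.

```python
import bisect
from typing import Iterable, Optional, Tuple


class SymbolTable:
    """Maps addresses to function names using function start addresses."""

    def __init__(self, symbols: Iterable[Tuple[int, str]]) -> None:
        ordered = sorted(symbols)
        self._addresses = [address for address, _ in ordered]
        self._names = [name for _, name in ordered]

    def resolve(self, address: int) -> Optional[str]:
        """Return the name of the last function starting at or before `address`."""
        index = bisect.bisect_right(self._addresses, address) - 1
        if index < 0:
            return None  # address lies below the first known symbol
        return self._names[index]
```

Because the table stores no function sizes, any address above the last symbol resolves to that symbol; a real provider would normally also check the end of each function.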

Per CPU thread 0

The Control Flow view has been updated to model thread ID 0 per CPU.

Speed-up import of large trace packages

Trace import has been optimized to reduce the time it takes to import many log files.

Improve analysis requirement implementations

- Cleaned up the analysis requirement API

- Added API to support requirements on event types and event fields. Using event field requirements, it is possible to define analysis requirements on LTTng event contexts.

- Updated LTTng UST Call Stack and Kernel CPU Usage analysis requirement implementation

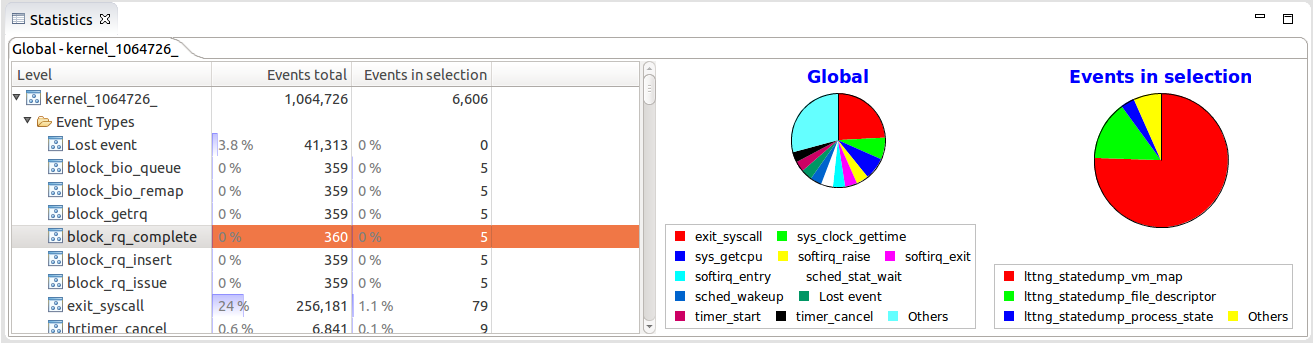

Pie charts in Statistics view

Pie Charts have been added to the Statistics view.

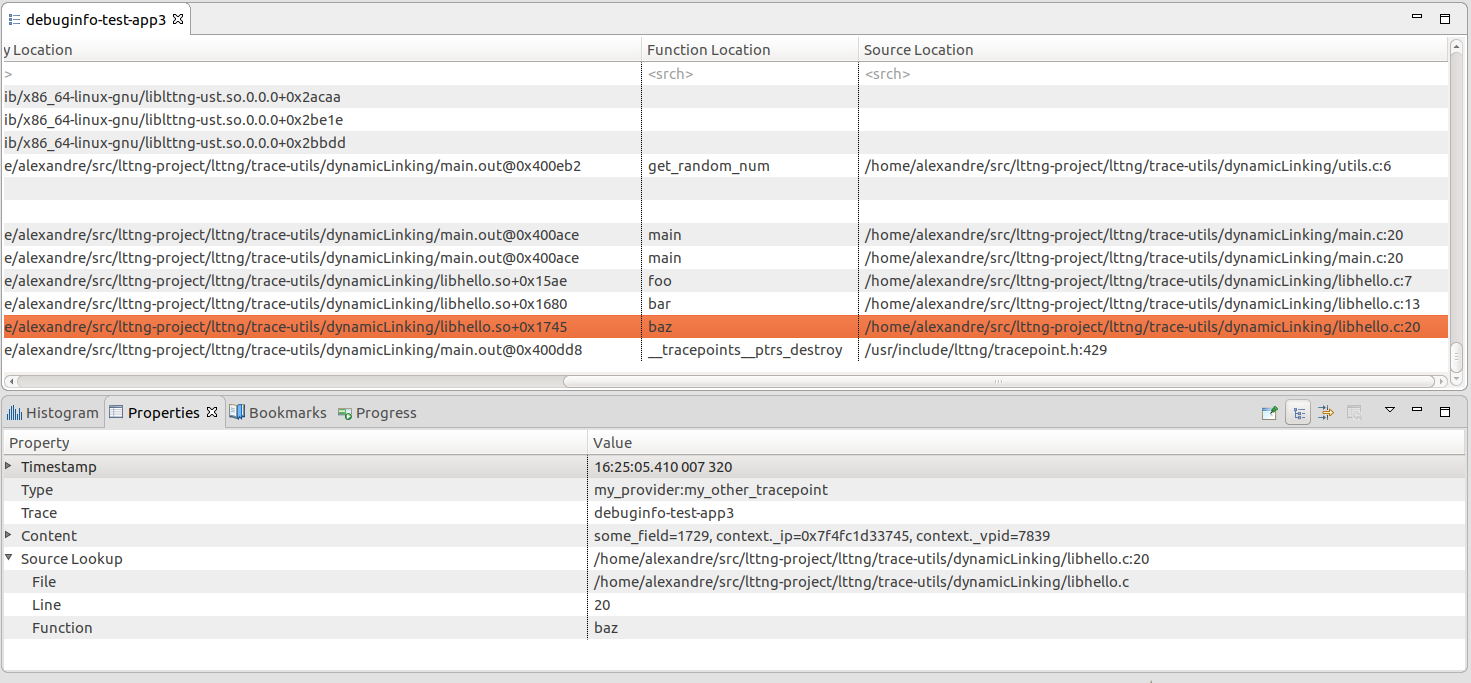

Support for LTTng 2.8+ Debug Info

Traces taken with LTTng-UST 2.8 and above now supply enough information to link every event to its source code location, provided that the ip and vpid contexts are present, and that the original binaries with debug information are available.

A trace event originating from a shared library

Only the Binary Location information is shown if the binaries are not available on the system.

LTTng Live trace reading support disabled

It was decided to disable the LTTng Live trace support because of the shortcomings documented in bug 486728. We look forward to addressing these shortcomings in future releases in order to re-enable the feature.

Bugs fixed in this release

See Bugzilla report Bugs Fixed in Trace Compass 2.0

Bugs fixed in the 2.0.1 release

See Bugzilla report Bugs Fixed in Trace Compass 2.0.1