
Linux Tools Project/TMF/User Guide

Contents

- 1 Introduction

- 2 Implementing a New Trace Type

- 3 View Tutorial

- 4 Component Interaction

- 4.1 Sending Signals

- 4.2 Receiving Signals

- 4.3 Signal Throttling

- 4.4 Signal Reference

- 4.4.1 TmfStartSynchSignal

- 4.4.2 TmfEndSynchSignal

- 4.4.3 TmfTraceOpenedSignal

- 4.4.4 TmfTraceSelectedSignal

- 4.4.5 TmfTraceClosedSignal

- 4.4.6 TmfTraceRangeUpdatedSignal

- 4.4.7 TmfTraceUpdatedSignal

- 4.4.8 TmfTimeSynchSignal

- 4.4.9 TmfRangeSynchSignal

- 4.4.10 TmfEventFilterAppliedSignal

- 4.4.11 TmfEventSearchAppliedSignal

- 4.4.12 TmfTimestampFormatUpdateSignal

- 4.4.13 TmfStatsUpdatedSignal

- 4.4.14 TmfPacketStreamSelected

- 4.5 Debugging

- 5 Generic State System

- 5.1 Introduction

- 5.2 High-level components

- 5.3 Definitions

- 5.4 Relevant interfaces/classes

- 5.5 Comparison of state system backends

- 5.6 State System Operations

- 5.7 Code example

- 5.8 Mipmap feature

- 6 UML2 Sequence Diagram Framework

- 6.1 TMF UML2 Sequence Diagram Extensions

- 6.2 Management of the Extension Point

- 6.3 Sequence Diagram View

- 6.4 Tutorial

- 6.4.1 Prerequisites

- 6.4.2 Creating an Eclipse UI Plug-in

- 6.4.3 Creating a Sequence Diagram View

- 6.4.4 Defining the uml2SDLoader Extension

- 6.4.5 Implementing the Loader Class

- 6.4.6 Adding time information

- 6.4.7 Default Coolbar and Menu Items

- 6.4.8 Implementing Optional Callbacks

- 6.4.8.1 Using the Paging Provider Interface

- 6.4.8.2 Using the Find Provider Interface

- 6.4.8.3 Using the Filter Provider Interface

- 6.4.8.4 Using the Extended Action Bar Provider Interface

- 6.4.8.5 Using the Properties Provider Interface

- 6.4.8.6 Using the Collapse Provider Interface

- 6.4.8.7 Using the Selection Provider Service

- 6.4.9 Printing a Sequence Diagram

- 6.4.10 Using one Sequence Diagram View with Multiple Loaders

- 6.4.11 Downloading the Tutorial

- 6.5 Integration of Tracing and Monitoring Framework with Sequence Diagram Framework

- 7 CTF Parser

- 8 Event matching and trace synchronization

- 9 Analysis Framework

- 10 Performance Tests

- 11 Network Tracing

Introduction

The purpose of the Tracing and Monitoring Framework (TMF) is to facilitate the integration of tracing and monitoring tools into Eclipse, to provide generic functionality and views out of the box, and to provide mechanisms for extending this base functionality for application-specific purposes.

Implementing a New Trace Type

The framework can easily be extended to support more trace types. To make a new trace type, one must define the following items:

- The event type

- The trace reader

- The trace context

- The trace location

- The org.eclipse.linuxtools.tmf.core.tracetype plug-in extension point

- (Optional) The org.eclipse.linuxtools.tmf.ui.tracetypeui plug-in extension point

The event type must implement the ITmfEvent interface, either directly or by extending a class that implements it; typically it will extend TmfEvent. The event type must contain all the data of an event. The trace reader must be of type ITmfTrace. The TmfTrace base class supplies many background operations, so the reader only needs to implement a few methods. The trace context can be seen as the internals of an iterator: the trace reader uses it to parse events as it iterates over the trace and to keep track of its rank and location. It can hold a timestamp, a rank, a file position, or any other element, and it should be considered ephemeral. The trace location is an element that is cloned often to store checkpoints, and it is generally persistent. Since it is used to rebuild a context, it must contain enough information to point unambiguously to one and only one event. Finally, the tracetype plug-in extension associates a given trace with a trace type non-programmatically, for use in the UI.

An Example: Nexus-lite parser

Description of the file

This is a very small subset of the Nexus trace format, with some changes to make it easier to read. There is one file. It starts with 64 strings containing the event names, followed by an arbitrarily large number of events. Each event is 64 bits long: the first 32 bits are the timestamp in microseconds, and the second 32 bits are split into 6 bits for the event type and 26 bits for the data payload.

The trace file is made of two parts. Part 1 is the event description: 64 comma-separated strings followed by a line feed.

Startup,Stop,Load,Add, ... ,reserved\n
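A minimal sketch of reading this header, assuming it is plain ASCII and holds exactly 64 names. The class NexusHeader and its members are hypothetical illustrations, not code from the NexusTrace example:

```java
import java.io.ByteArrayOutputStream;
import java.io.IOException;
import java.io.InputStream;
import java.nio.charset.StandardCharsets;

/** Hypothetical helper that reads the Nexus-lite header: 64 event names and a line feed. */
final class NexusHeader {
    static final int EVENT_TYPE_COUNT = 64;

    final String[] eventNames;
    /** Size of the header in bytes, including the line feed; the events start at this offset. */
    final long dataOffset;

    private NexusHeader(String[] eventNames, long dataOffset) {
        this.eventNames = eventNames;
        this.dataOffset = dataOffset;
    }

    /** Reads bytes up to the first line feed and splits them on commas. */
    static NexusHeader read(InputStream in) throws IOException {
        ByteArrayOutputStream line = new ByteArrayOutputStream();
        int b;
        while ((b = in.read()) != '\n') {
            if (b == -1) {
                throw new IOException("Header is not terminated by a line feed");
            }
            line.write(b);
        }
        // The limit of -1 keeps empty names instead of silently dropping trailing ones
        String[] names = line.toString(StandardCharsets.US_ASCII.name()).split(",", -1);
        if (names.length != EVENT_TYPE_COUNT) {
            throw new IOException("Expected " + EVENT_TYPE_COUNT + " event names, found " + names.length);
        }
        return new NexusHeader(names, line.size() + 1L); // + 1 for the line feed
    }
}
```

The resulting dataOffset is where the fixed-size events begin.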

Part 2 contains the events, in this format:

| timestamp (32 bits) | type (6 bits) | payload (26 bits) |
|---|---|---|

All events are the same size: 64 bits in total.
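The bit layout can be decoded with shifts and masks. This sketch assumes each 8-byte event is stored big-endian, so the timestamp occupies the high 32 bits and the type field sits above the payload; the class NexusEventWord is hypothetical. The unsigned shift (>>>) keeps a timestamp above 2^31 from turning negative:

```java
import java.nio.ByteBuffer;
import java.nio.ByteOrder;

/** Hypothetical decoder for one 64-bit Nexus-lite event word. */
final class NexusEventWord {
    static final int EVENT_SIZE = 8; // bytes per event

    /** Unsigned 32-bit timestamp in microseconds (bits 63..32). */
    static long timestamp(long word) {
        return word >>> 32;
    }

    /** 6-bit event type, an index into the 64 header names (bits 31..26). */
    static int type(long word) {
        return (int) ((word >>> 26) & 0x3F);
    }

    /** 26-bit data payload (bits 25..0). */
    static int payload(long word) {
        return (int) (word & 0x3FFFFFF);
    }

    /** Reads the event at the buffer's position; note that this sets the buffer to big-endian. */
    static long read(ByteBuffer buffer) {
        return buffer.order(ByteOrder.BIG_ENDIAN).getLong();
    }

    /** Packs the three fields into one word, e.g. for generating test traces. */
    static long pack(long timestampUs, int type, int payload) {
        return ((timestampUs & 0xFFFFFFFFL) << 32)
                | ((long) (type & 0x3F) << 26)
                | (payload & 0x3FFFFFFL);
    }
}
```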

NexusLite Plug-in

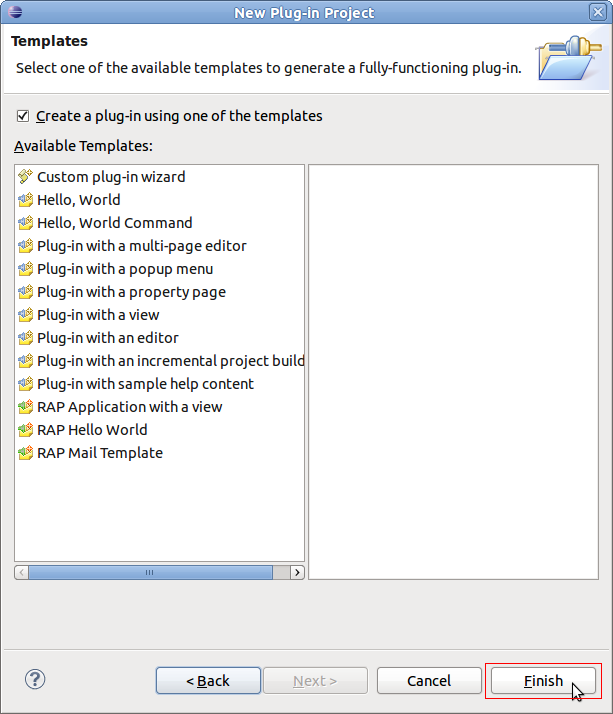

Select File -> New -> Project... -> Plug-in Project, set the project name to com.example.nexuslite, click Next >, then click Finish.

Now the structure for the Nexus trace Plug-in is set up.

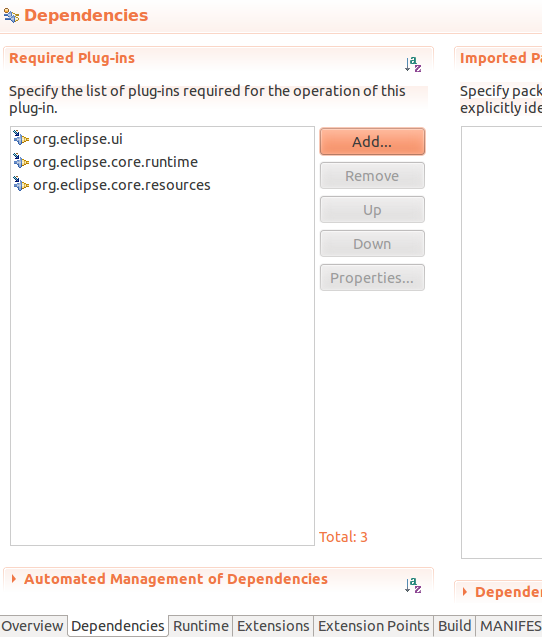

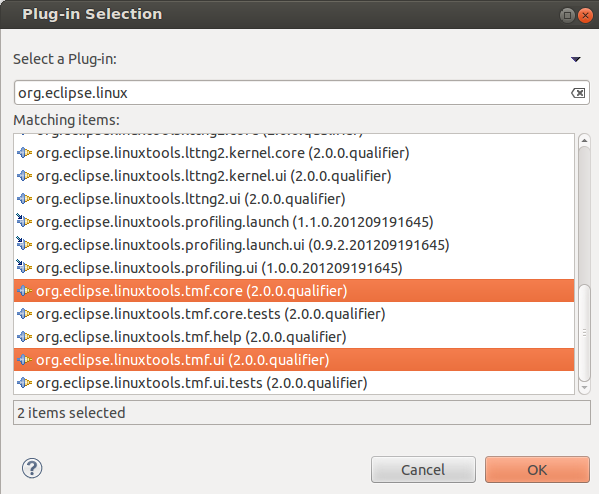

Add dependencies on TMF core and UI: open the MANIFEST.MF file in META-INF, select the Dependencies tab, click Add... and add org.eclipse.linuxtools.tmf.core and org.eclipse.linuxtools.tmf.ui.

Now the project can access TMF classes.

Trace Event

The TmfEvent class will work for this example. No code required.

Trace Reader

The trace reader will extend the TmfTrace class.

It will need to implement:

- validate (is the trace format valid?)

- initTrace (called as the trace is opened)

- seekEvent (go to a position in the trace and create a context)

- getNext (implemented in the base class)

- parseEvent (read the next element in the trace)

For reference, there is an example implementation of the Nexus Trace file in org.eclipse.linuxtools.tracing.examples.core.trace.nexus.NexusTrace.java.

In this example, the validate function first checks if the file exists, then makes sure that it is really a file and not a directory. Then it attempts to read the file header, to make sure that the file really is a Nexus trace. If that check passes, it returns a TmfValidationStatus with a confidence of 20.

Typically, TmfValidationStatus confidences should range from 1 to 100, 1 meaning "there is a very small chance that this trace is of this type" and 100 meaning "it is this type for sure, and cannot be anything else". At run-time, auto-detection picks the type which returned the highest confidence, so checks such as "does the file exist?" should not return a high confidence on their own.

Here we used a confidence of 20, to leave "room" for more specific trace types in the Nexus format that could be defined in TMF.
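The validation order described above (cheap checks first, header check last) can be sketched without TMF as follows. The class NexusValidator is hypothetical and returns a plain int where a real trace type would return a TmfValidationStatus; the header is read as ISO-8859-1 so that the binary event bytes following it can never cause a decoding error:

```java
import java.io.BufferedReader;
import java.io.IOException;
import java.nio.charset.StandardCharsets;
import java.nio.file.Files;
import java.nio.file.Path;

/** Hypothetical, TMF-free version of the Nexus-lite validation logic. */
final class NexusValidator {
    static final int NOT_THIS_TYPE = 0;
    /** Moderate confidence, leaving room for more specific Nexus-based trace types. */
    static final int NEXUS_CONFIDENCE = 20;

    static int confidence(Path path) {
        // Cheap checks first: false if the path does not exist or is a directory
        if (!Files.isRegularFile(path)) {
            return NOT_THIS_TYPE;
        }
        // Then check that the first line looks like the 64-name header
        try (BufferedReader reader = Files.newBufferedReader(path, StandardCharsets.ISO_8859_1)) {
            String header = reader.readLine();
            if (header == null || header.split(",", -1).length != 64) {
                return NOT_THIS_TYPE;
            }
        } catch (IOException e) {
            return NOT_THIS_TYPE;
        }
        return NEXUS_CONFIDENCE;
    }
}
```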

The initTrace function will read the event names, and find where the data starts. After this, the number of events is known, and since each event is 8 bytes long according to the specs, the seek is then trivial.

The seek here will just reset the reader to the right location.
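Because every event has the same size, seeking needs no scan. A sketch of the arithmetic, assuming dataOffset is the header size found by initTrace and ranks start at 0 (the class name is hypothetical):

```java
/** Hypothetical helper mapping event ranks to byte offsets for fixed-size events. */
final class NexusSeek {
    static final int EVENT_SIZE = 8; // bytes per event

    /** Number of complete events stored after a header of dataOffset bytes. */
    static long eventCount(long fileSize, long dataOffset) {
        return Math.max(0, (fileSize - dataOffset) / EVENT_SIZE);
    }

    /** Byte offset of the event with the given 0-based rank. */
    static long offsetOf(long rank, long dataOffset) {
        return dataOffset + rank * EVENT_SIZE;
    }
}
```

With a java.io.RandomAccessFile, positioning the reader at rank r is then raf.seek(NexusSeek.offsetOf(r, dataOffset)); a trailing partial event is not counted.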

The parseEvent method needs to parse and return the current event and store the current location.

The getNext method (in the base class) will read the next event and update the context. It calls the parseEvent method to read the event and update the location. It does not need to be overridden, and in this example it is not. The necessary sequence of actions is: parse the next event from the trace, create an ITmfEvent with that data, update the current location, call updateAttributes, update the context, then return the event.
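The ordering of those steps can be illustrated with a small TMF-free model. Everything here is hypothetical: Context, Event and Parser only stand in for ITmfContext, ITmfEvent and parseEvent(), the location is simply the rank, and lastTimestamp stands in for what updateAttributes() maintains:

```java
/** Hypothetical, TMF-free model of the getNext() sequence. */
final class GetNextSketch {
    /** Stand-in for a trace context: the location of the next event and its rank. */
    static final class Context {
        long location; // here simply the rank of the next event in the file
        long rank;

        Context(long location, long rank) {
            this.location = location;
            this.rank = rank;
        }
    }

    /** Stand-in for an event: its rank and timestamp. */
    static final class Event {
        final long rank;
        final long timestamp;

        Event(long rank, long timestamp) {
            this.rank = rank;
            this.timestamp = timestamp;
        }
    }

    /** Stand-in for parseEvent(): raw timestamp at a location, or null at the end of the trace. */
    interface Parser {
        Long parseAt(long location);
    }

    private final Parser parser;
    /** Stand-in for a trace attribute that updateAttributes() would maintain. */
    long lastTimestamp = Long.MIN_VALUE;

    GetNextSketch(Parser parser) {
        this.parser = parser;
    }

    Event getNext(Context context) {
        // 1. Parse the next event from the trace
        Long raw = parser.parseAt(context.location);
        if (raw == null) {
            return null; // end of trace
        }
        // 2. Create the event from the parsed data
        Event event = new Event(context.rank, raw);
        // 3. Compute the new current location
        long nextLocation = context.location + 1;
        // 4. Update the trace attributes
        lastTimestamp = Math.max(lastTimestamp, raw);
        // 5. Update the context, then return the event
        context.location = nextLocation;
        context.rank++;
        return event;
    }
}
```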

Traces will typically implement an index, to make seeking faster. The index can be rebuilt every time the trace is opened. Alternatively, it can be saved to disk, to make future openings of the same trace quicker. To do so, the trace object can implement the ITmfPersistentlyIndexable interface.
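The idea behind such an index can be sketched as a list of checkpoints: the location of every N-th event is recorded while indexing, and a seek starts from the nearest earlier checkpoint and reads forward. CheckpointIndex is a hypothetical illustration, not TMF's indexer, and it keeps the checkpoints in memory only; persisting them is what ITmfPersistentlyIndexable is for:

```java
import java.util.ArrayList;
import java.util.List;

/** Hypothetical checkpoint index storing the location of every interval-th event. */
final class CheckpointIndex {
    private final int interval;
    // locations.get(i) is the location of the event with rank i * interval
    private final List<Long> locations = new ArrayList<>();

    CheckpointIndex(int interval) {
        if (interval <= 0) {
            throw new IllegalArgumentException("interval must be positive");
        }
        this.interval = interval;
    }

    /** Called once per event, in rank order, while the trace is being indexed. */
    void onEvent(long rank, long location) {
        // The size check makes re-indexing the same ranks harmless
        if (rank % interval == 0 && rank / interval == locations.size()) {
            locations.add(location);
        }
    }

    /**
     * Returns {checkpointRank, checkpointLocation} for the nearest checkpoint at or
     * before targetRank, or null if nothing has been indexed yet. The caller then
     * reads (targetRank - checkpointRank) events forward from that location.
     */
    long[] nearestCheckpoint(long targetRank) {
        if (locations.isEmpty() || targetRank < 0) {
            return null;
        }
        int slot = (int) Math.min(targetRank / interval, locations.size() - 1);
        return new long[] {(long) slot * interval, locations.get(slot)};
    }
}
```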

Trace Context

The trace context will be a TmfContext.

Trace Location

The trace location will be a long, representing the rank in the file. TmfLongLocation will be used; once again, no code is required.

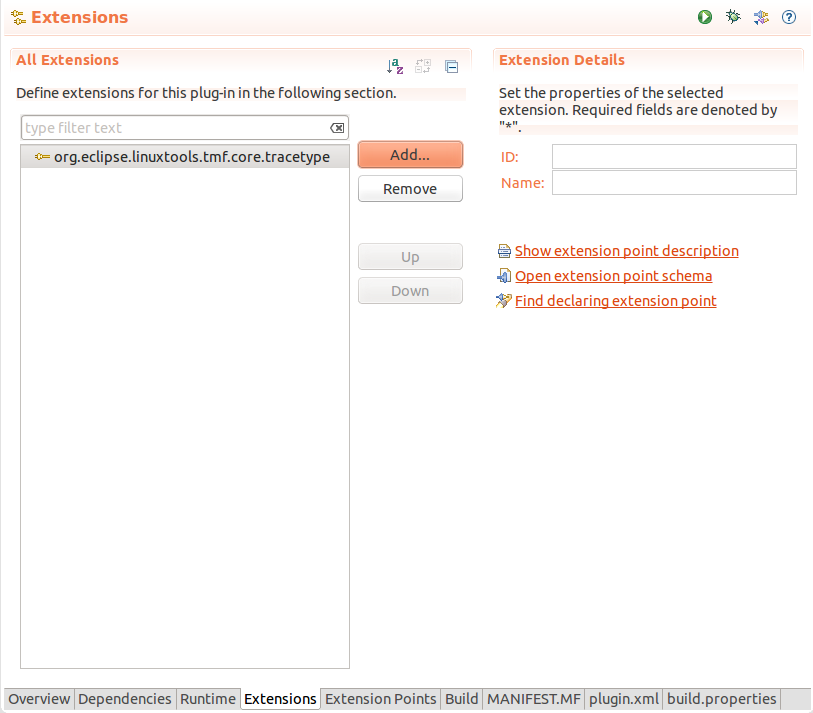

The org.eclipse.linuxtools.tmf.core.tracetype and org.eclipse.linuxtools.tmf.ui.tracetypeui plug-in extension points

One should implement the tmf.core.tracetype extension in their own plug-in. In this example, the Nexus trace plug-in will be modified.

The plug-in's plugin.xml file needs to be updated so that users can access the new trace type. It can be edited in the Eclipse plug-in manifest editor.

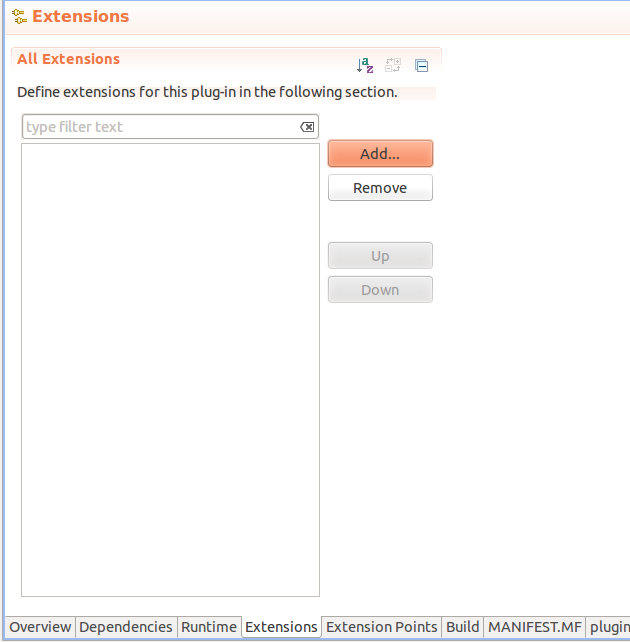

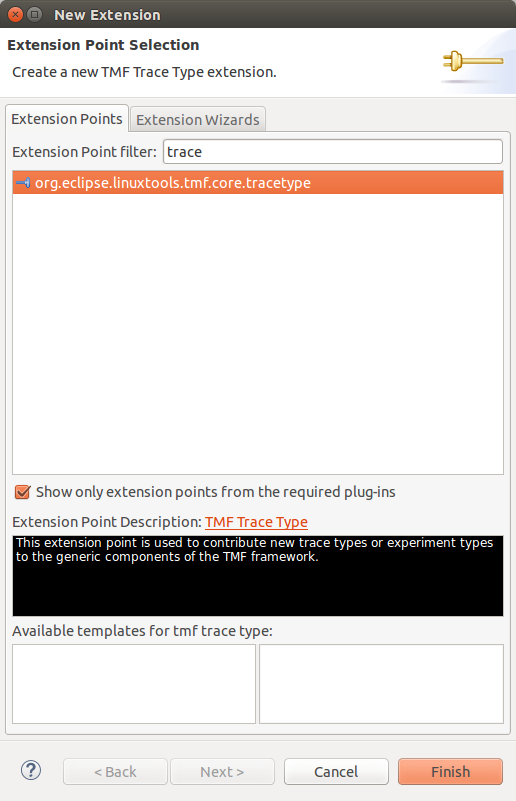

- In the Extensions tab, add the org.eclipse.linuxtools.tmf.core.tracetype extension point.

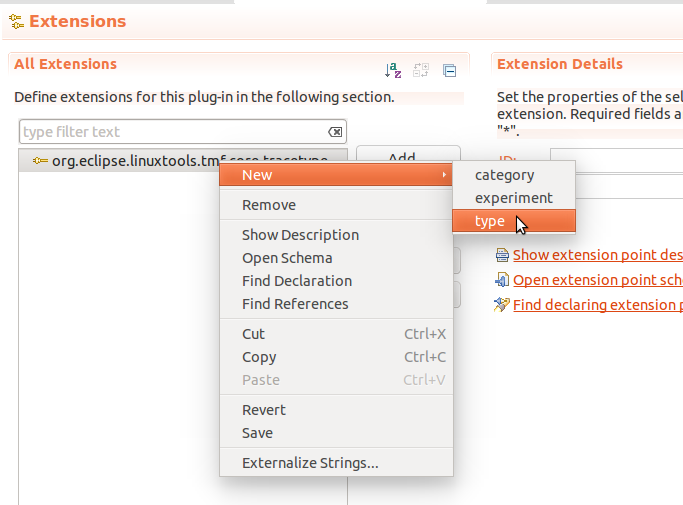

- Add a new type to the org.eclipse.linuxtools.tmf.core.tracetype extension. To do that, right-click on the extension, then in the context menu go to New > type.

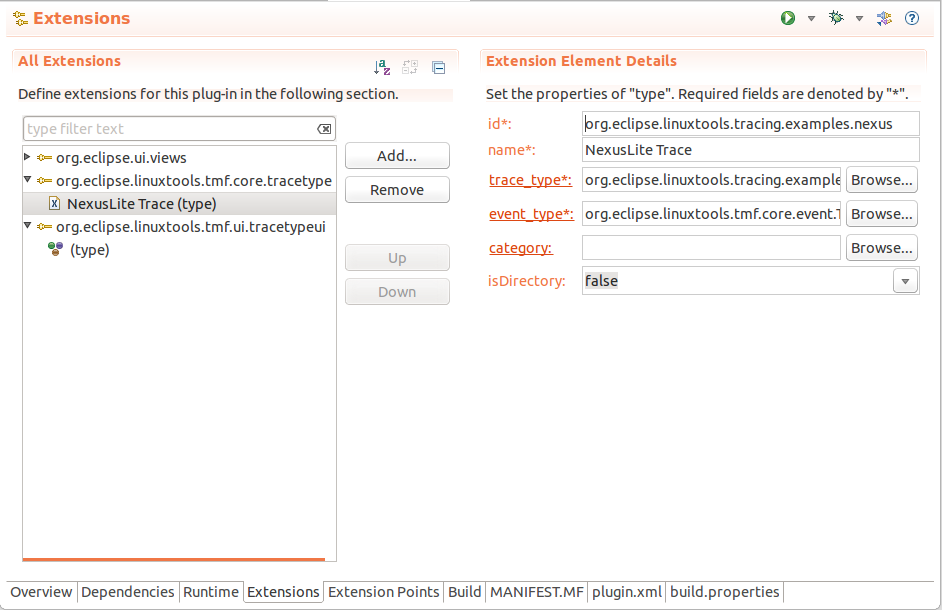

The id is the unique identifier used to refer to the trace type.

The name is the field that shall be displayed when a trace type is selected.

The trace type is the fully qualified name of the trace class.

The event type is the fully qualified name of the class of the events of a given trace.

The category (optional) is the container in which this trace type will be stored.

- (Optional) To also add UI-specific properties to your trace type, use the org.eclipse.linuxtools.tmf.ui.tracetypeui extension. To do that, right-click on the extension, then in the context menu go to New > type.

The tracetype here is the id of the org.eclipse.linuxtools.tmf.core.tracetype mentioned above.

The icon is the image to associate with that trace type.

In the end, the extension menu should look like this.

Best Practices

- Do not load the whole trace in RAM; doing so limits the size of the traces that can be read.

- Reuse as much code as possible; it makes the trace format much easier to maintain.

- Use Eclipse's plug-in manifest editor instead of editing the XML directly.

- Remember that Java's primitive integer types byte, short, int and long are signed; unsigned data may need special handling.

- If the support for your trace has custom UI elements (like icons, views, etc.), split the core and UI parts into separate plug-ins, named identically except for a .core or .ui suffix.

- Implement the tmf.core.tracetype extension in the core plug-in, and the tmf.ui.tracetypeui extension in the UI plug-in if applicable.
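For the unsigned-data point above, the JDK (since Java 8) provides helpers on Integer, Byte and Long. The wrapper class below is hypothetical; the methods it calls are standard JDK API:

```java
/** Hypothetical wrapper around the JDK's unsigned helpers (all available since Java 8). */
final class Unsigned {
    /** Widens a 32-bit unsigned field, such as a raw timestamp, to a non-negative long. */
    static long u32(int raw) {
        return Integer.toUnsignedLong(raw); // same as raw & 0xFFFFFFFFL
    }

    /** Widens an unsigned byte to an int in [0, 255]. */
    static int u8(byte raw) {
        return Byte.toUnsignedInt(raw); // same as raw & 0xFF
    }

    /** Orders two 64-bit values as unsigned quantities. */
    static int compareU64(long a, long b) {
        return Long.compareUnsigned(a, b);
    }

    /** Formats a 64-bit value as an unsigned decimal number. */
    static String u64ToString(long value) {
        return Long.toUnsignedString(value);
    }
}
```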

Download the Code

The described example is available in the org.eclipse.linuxtools.tracing.examples.(tests.)trace.nexus packages with a trace generator and a quick test case.

Optional Trace Type Attributes

After defining the trace type as described in the previous chapters it is possible to define optional attributes for the trace type.

Default Editor

The defaultEditor attribute of the org.eclipse.linuxtools.tmf.ui.tracetypeui extension point allows for configuring the editor to use for displaying the events. If omitted, the TmfEventsEditor is used as default.

To configure an editor, first add the defaultEditor attribute to the trace type in the extension definition. This can be done by selecting the trace type in the plug-in manifest editor, right-clicking, and selecting New -> defaultEditor in the context menu. Then select the newly added attribute and specify the editor id to use on the right side of the manifest editor. For example, this attribute could point to an editor that extends the class org.eclipse.ui.part.MultiPageEditor: the first page could use the TmfEventsEditor to display the events in a table as usual, and other pages could display other aspects of the trace.

Events Table Type

The eventsTableType attribute of the org.eclipse.linuxtools.tmf.ui.tracetypeui extension point allows for configuring the events table class to use in the default events editor. If omitted, the default events table will be used.

To configure a trace type specific events table, first add the eventsTableType attribute to the trace type in the extension definition. This can be done by selecting the trace type in the plug-in manifest editor. Then click the right mouse button and select New -> eventsTableType in the context sensitive menu. Then select the newly added attribute and click on class on the right side of the manifest editor. The new class wizard will open. The superclass field will be already filled with the class org.eclipse.linuxtools.tmf.ui.viewers.events.TmfEventsTable.

By using this attribute, a table with different columns than the default columns can be defined. See the class org.eclipse.linuxtools.internal.lttng2.kernel.ui.viewers.events.Lttng2EventsTable for an example implementation.

View Tutorial

This tutorial describes how to create a simple view using the TMF framework and the SWTChart library. SWTChart is a library based on SWT that can draw several types of charts including a line chart which we will use in this tutorial. We will create a view containing a line chart that displays time stamps on the X axis and the corresponding event values on the Y axis.

This tutorial will cover concepts like:

- Extending TmfView

- Signal handling (@TmfSignalHandler)

- Data requests (TmfEventRequest)

- SWTChart integration

Note: TMF 3.0.0 provides base implementations for generating SWTChart viewers and views. For more details, please refer to the section TMF Built-in Views and Viewers.

Prerequisites

The tutorial is based on Eclipse 4.4 (Eclipse Luna), TMF 3.0.0 and SWTChart 0.7.0. If you are using TMF from the source repository, SWTChart is already included in the target definition file (see org.eclipse.linuxtools.lttng.target). You can also install it manually by using the Orbit update site: http://download.eclipse.org/tools/orbit/downloads/

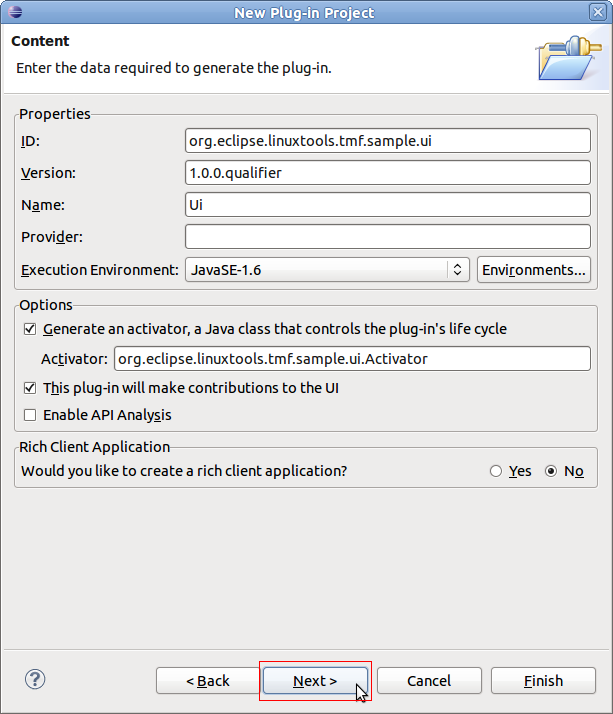

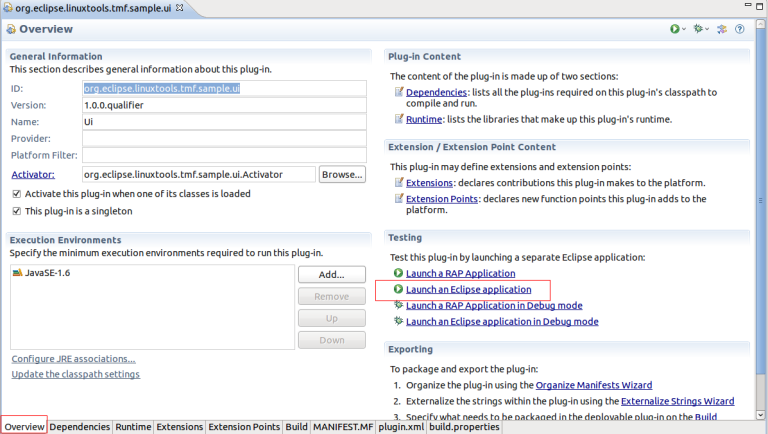

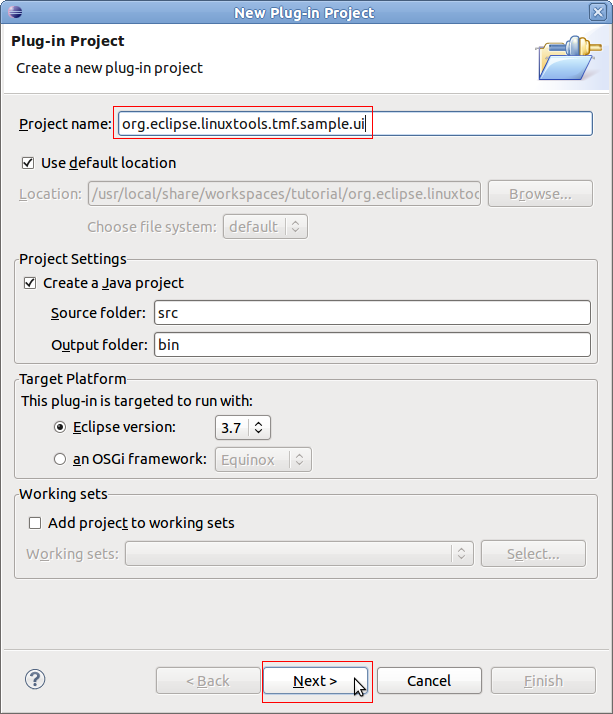

Creating an Eclipse UI Plug-in

To create a new project with name org.eclipse.linuxtools.tmf.sample.ui select File -> New -> Project -> Plug-in Development -> Plug-in Project.

Creating a View

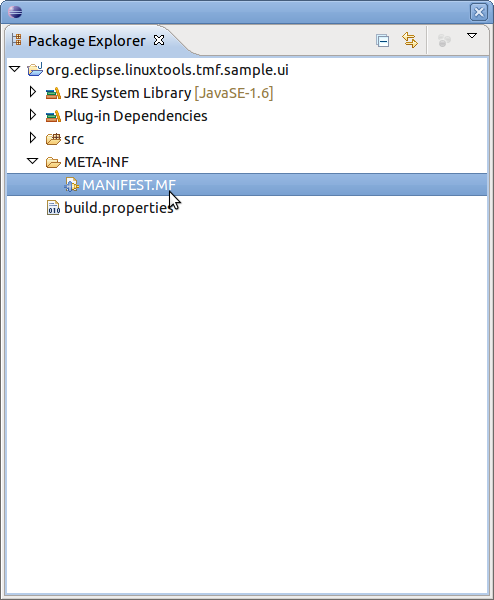

To open the plug-in manifest, double-click on the MANIFEST.MF file.

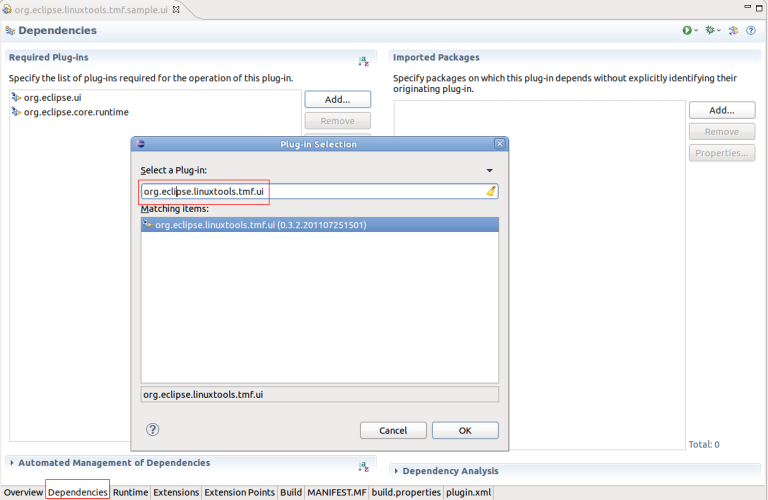

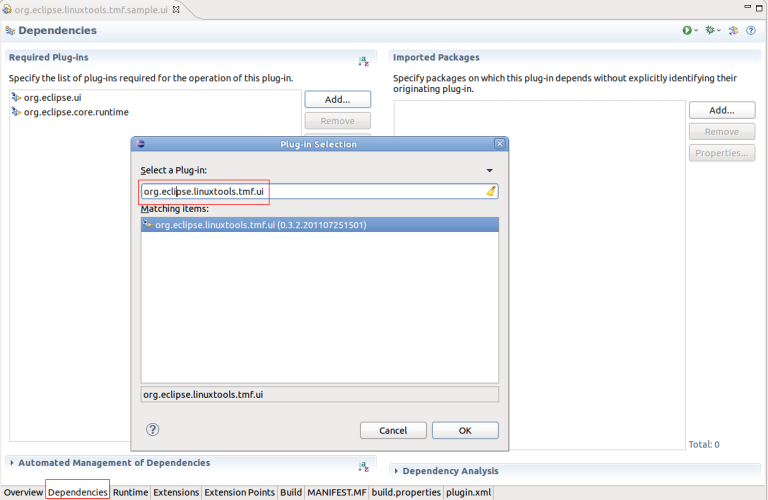

Change to the Dependencies tab and select Add... in the Required Plug-ins section. A new dialog box will open. Find the plug-in org.eclipse.linuxtools.tmf.core and press OK.

Following the same steps, add org.eclipse.linuxtools.tmf.ui and org.swtchart.

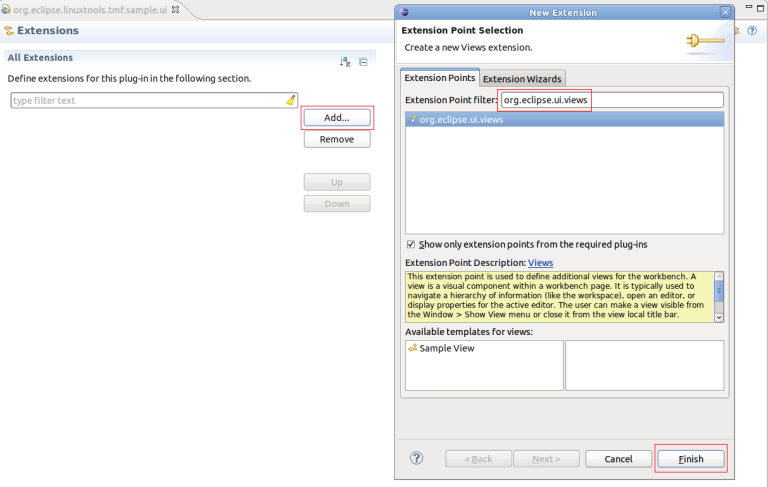

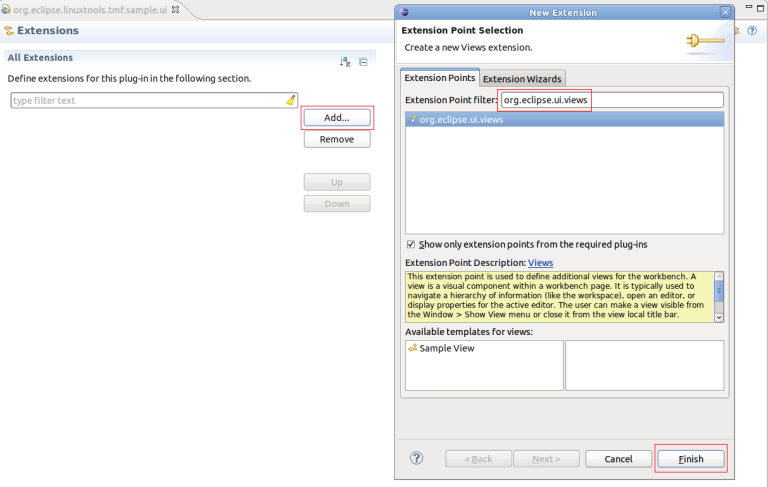

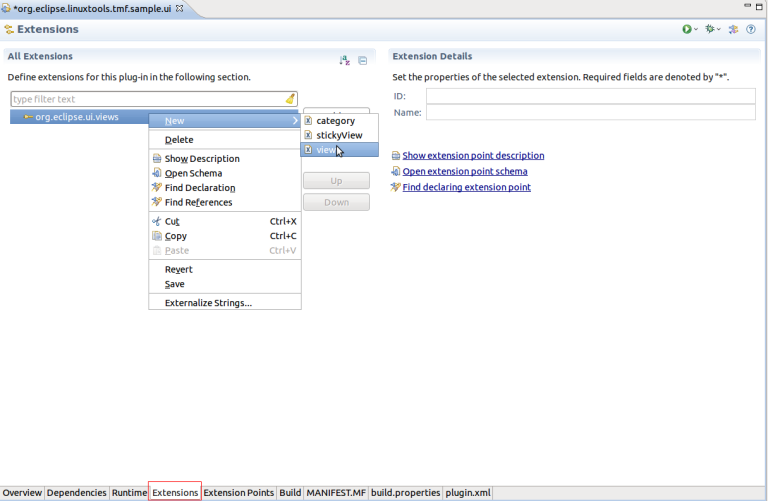

Change to the Extensions tab and select Add... in the All Extensions section. A new dialog box will open. Find the view extension org.eclipse.ui.views and press Finish.

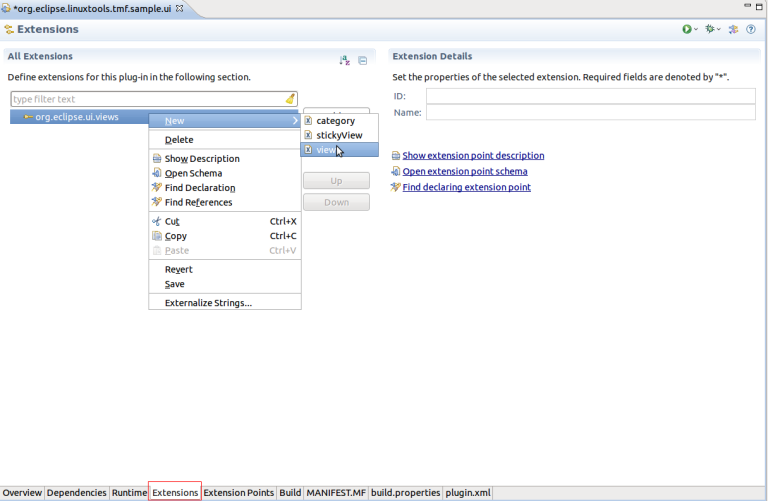

To create a view, right-click on the org.eclipse.ui.views extension, then select New -> view.

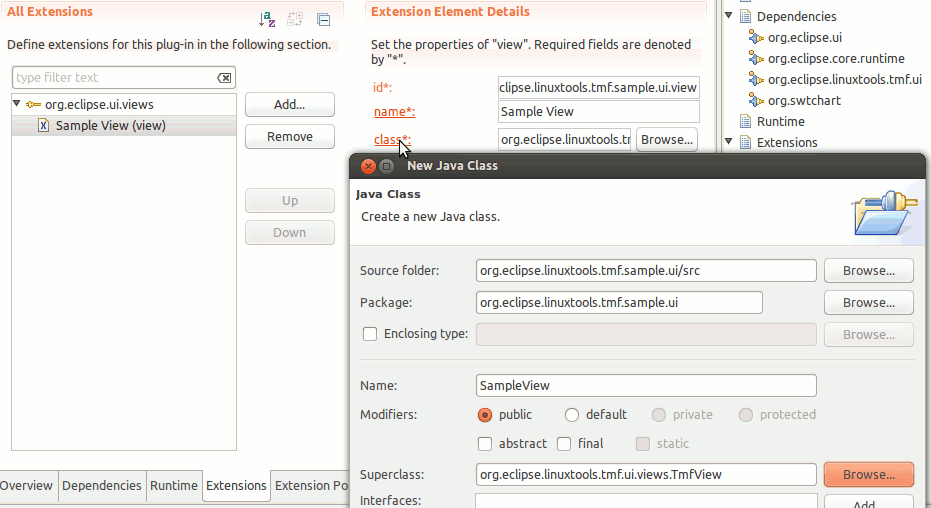

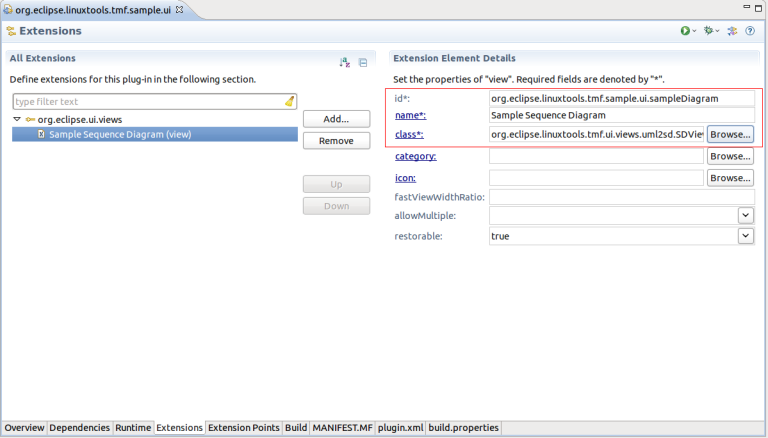

A new view entry has been created. Fill in the fields id and name. For class, click on the class hyperlink to open the New Java Class dialog. Enter the name SampleView, change the superclass to TmfView and click Finish. This creates the source file and fills in the class field. We use TmfView as the superclass because it provides extra functionality, such as getting the active trace and pinning, and it supports signal handling between components.

This will generate an empty class. Once the quick fixes are applied, the following code is obtained:

package org.eclipse.linuxtools.tmf.sample.ui;

import org.eclipse.swt.widgets.Composite;

import org.eclipse.linuxtools.tmf.ui.views.TmfView;

public class SampleView extends TmfView {

public SampleView(String viewName) {

super(viewName);

// TODO Auto-generated constructor stub

}

@Override

public void createPartControl(Composite parent) {

// TODO Auto-generated method stub

}

@Override

public void setFocus() {

// TODO Auto-generated method stub

}

}

This creates an empty view; however, the basic structure is now in place.

Implementing a view

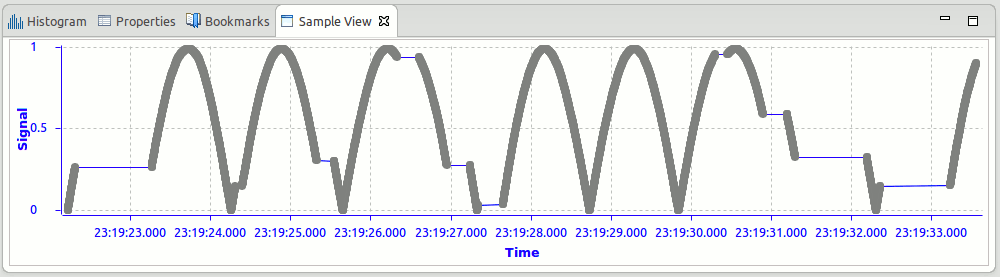

We will start by adding an empty chart, which then needs to be populated with the trace data. Finally, we will make the chart more visually pleasing by adjusting the range and formatting the time stamps.

Adding an Empty Chart

First, we can add an empty chart to the view and initialize some of its components.

private static final String SERIES_NAME = "Series";

private static final String Y_AXIS_TITLE = "Signal";

private static final String X_AXIS_TITLE = "Time";

private static final String FIELD = "value"; // The name of the field that we want to display on the Y axis

private static final String VIEW_ID = "org.eclipse.linuxtools.tmf.sample.ui.view";

private Chart chart;

private ITmfTrace currentTrace;

public SampleView() {

super(VIEW_ID);

}

@Override

public void createPartControl(Composite parent) {

chart = new Chart(parent, SWT.BORDER);

chart.getTitle().setVisible(false);

chart.getAxisSet().getXAxis(0).getTitle().setText(X_AXIS_TITLE);

chart.getAxisSet().getYAxis(0).getTitle().setText(Y_AXIS_TITLE);

chart.getSeriesSet().createSeries(SeriesType.LINE, SERIES_NAME);

chart.getLegend().setVisible(false);

}

@Override

public void setFocus() {

chart.setFocus();

}

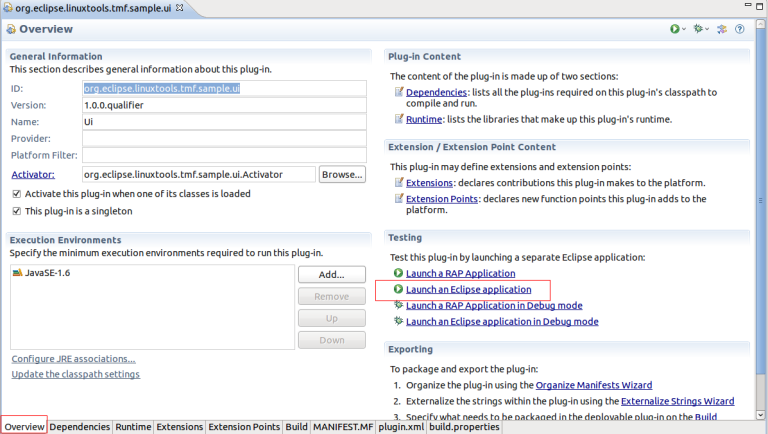

The view is prepared. Run the example: to launch an Eclipse application, select the Overview tab and click on Launch an Eclipse Application.

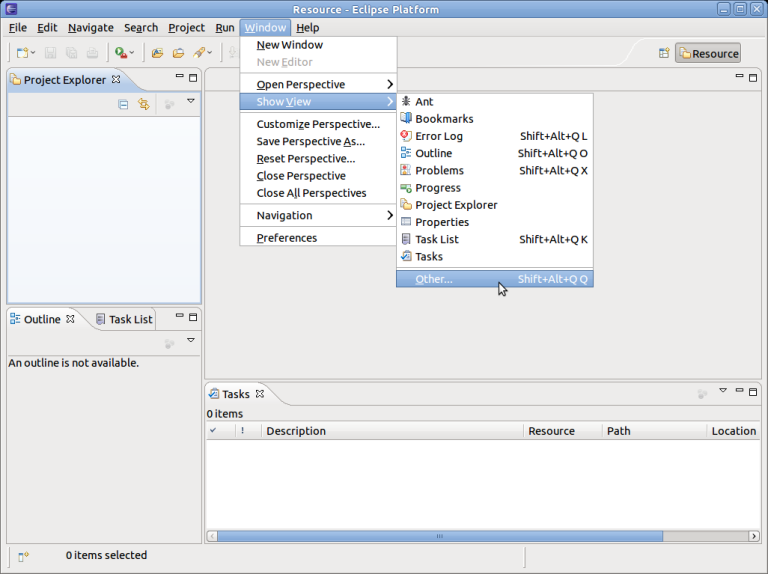

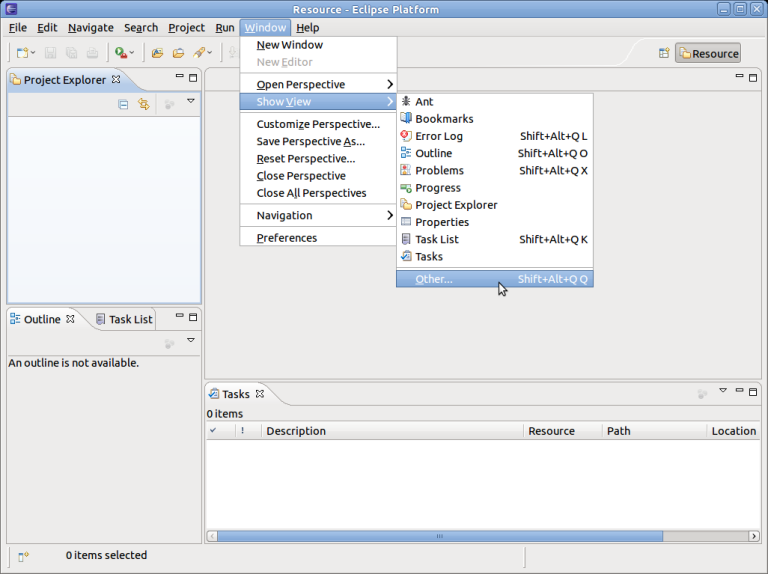

A new Eclipse application window will show. In the new window, go to Window -> Show View -> Other... -> Other -> Sample View.

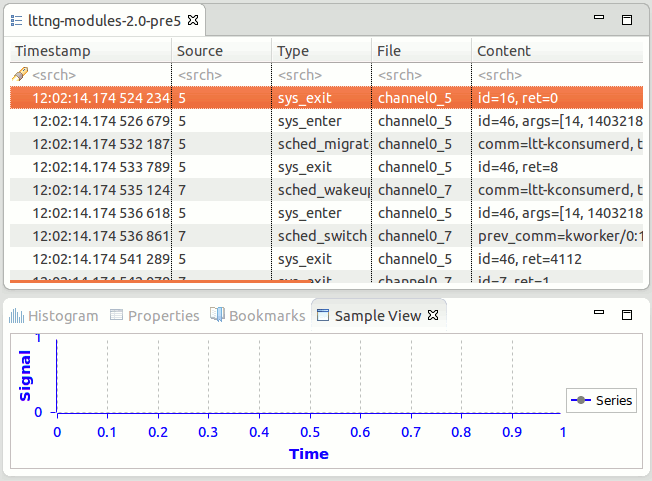

You should now see a view containing an empty chart.

Signal Handling

We would like to populate the view when a trace is selected. To achieve this, we can use a signal handler, which is specified with the @TmfSignalHandler annotation.

@TmfSignalHandler

public void traceSelected(final TmfTraceSelectedSignal signal) {

}

Requesting Data

Then we need to actually gather data from the trace. This is done asynchronously using a TmfEventRequest.

@TmfSignalHandler

public void traceSelected(final TmfTraceSelectedSignal signal) {

// Don't populate the view again if we're already showing this trace

if (currentTrace == signal.getTrace()) {

return;

}

currentTrace = signal.getTrace();

// Create the request to get data from the trace

TmfEventRequest req = new TmfEventRequest(TmfEvent.class,

TmfTimeRange.ETERNITY, 0, ITmfEventRequest.ALL_DATA,

ITmfEventRequest.ExecutionType.BACKGROUND) {

@Override

public void handleData(ITmfEvent data) {

// Called for each event

super.handleData(data);

}

@Override

public void handleSuccess() {

// Request successful, no more data available

super.handleSuccess();

}

@Override

public void handleFailure() {

// Request failed, not more data available

super.handleFailure();

}

};

ITmfTrace trace = signal.getTrace();

trace.sendRequest(req);

}

Transferring Data to the Chart

The chart expects an array of doubles for both the X and Y axis values. To provide that, we can accumulate each event's time and value in their respective lists, then convert the lists to arrays when all events are processed.

TmfEventRequest req = new TmfEventRequest(TmfEvent.class,

TmfTimeRange.ETERNITY, 0, ITmfEventRequest.ALL_DATA,

ITmfEventRequest.ExecutionType.BACKGROUND) {

ArrayList<Double> xValues = new ArrayList<Double>();

ArrayList<Double> yValues = new ArrayList<Double>();

@Override

public void handleData(ITmfEvent data) {

// Called for each event

super.handleData(data);

ITmfEventField field = data.getContent().getField(FIELD);

if (field != null) {

yValues.add(((Number) field.getValue()).doubleValue());

xValues.add((double) data.getTimestamp().getValue());

}

}

@Override

public void handleSuccess() {

// Request successful, no more data available

super.handleSuccess();

final double x[] = toArray(xValues);

final double y[] = toArray(yValues);

// This part needs to run on the UI thread since it updates the chart SWT control

Display.getDefault().asyncExec(new Runnable() {

@Override

public void run() {

chart.getSeriesSet().getSeries()[0].setXSeries(x);

chart.getSeriesSet().getSeries()[0].setYSeries(y);

chart.redraw();

}

});

}

/**

* Convert List<Double> to double[]

*/

private double[] toArray(List<Double> list) {

double[] d = new double[list.size()];

for (int i = 0; i < list.size(); ++i) {

d[i] = list.get(i);

}

return d;

}

};
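If the plug-in targets Java 8 or later, the toArray() helper can also be written with a primitive stream. A sketch with a hypothetical class name; like the loop, it throws a NullPointerException on null elements:

```java
import java.util.List;

/** Hypothetical stream-based equivalent of the toArray() helper. */
final class DoubleLists {
    /** Unboxes each element into a new double[] of the same length and order. */
    static double[] toArray(List<Double> list) {
        return list.stream().mapToDouble(Double::doubleValue).toArray();
    }
}
```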

Adjusting the Range

The chart now contains values but they might be out of range and not visible. We can adjust the range of each axis by computing the minimum and maximum values as we add events.

ArrayList<Double> xValues = new ArrayList<Double>();

ArrayList<Double> yValues = new ArrayList<Double>();

private double maxY = -Double.MAX_VALUE;

private double minY = Double.MAX_VALUE;

private double maxX = -Double.MAX_VALUE;

private double minX = Double.MAX_VALUE;

@Override

public void handleData(ITmfEvent data) {

super.handleData(data);

ITmfEventField field = data.getContent().getField(FIELD);

if (field != null) {

double yValue = ((Number) field.getValue()).doubleValue();

minY = Math.min(minY, yValue);

maxY = Math.max(maxY, yValue);

yValues.add(yValue);

double xValue = (double) data.getTimestamp().getValue();

xValues.add(xValue);

minX = Math.min(minX, xValue);

maxX = Math.max(maxX, xValue);

}

}

@Override

public void handleSuccess() {

super.handleSuccess();

final double x[] = toArray(xValues);

final double y[] = toArray(yValues);

// This part needs to run on the UI thread since it updates the chart SWT control

Display.getDefault().asyncExec(new Runnable() {

@Override

public void run() {

chart.getSeriesSet().getSeries()[0].setXSeries(x);

chart.getSeriesSet().getSeries()[0].setYSeries(y);

// Set the new range

if (!xValues.isEmpty() && !yValues.isEmpty()) {

chart.getAxisSet().getXAxis(0).setRange(new Range(minX, maxX));

chart.getAxisSet().getYAxis(0).setRange(new Range(minY, maxY));

} else {

chart.getAxisSet().getXAxis(0).setRange(new Range(0, 1));

chart.getAxisSet().getYAxis(0).setRange(new Range(0, 1));

}

chart.getAxisSet().adjustRange();

chart.redraw();

}

});

}
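The min/max bookkeeping can also be factored out of the request. The hypothetical AxisBounds helper below tracks one axis and covers two edge cases: no matching events, and all values equal, which would otherwise give a zero-width range. Each {lower, upper} pair it returns would be passed to new Range(lower, upper):

```java
/** Hypothetical per-axis bounds tracker; values are assumed not to be NaN. */
final class AxisBounds {
    private double min = Double.POSITIVE_INFINITY;
    private double max = Double.NEGATIVE_INFINITY;

    void add(double value) {
        min = Math.min(min, value);
        max = Math.max(max, value);
    }

    boolean isEmpty() {
        return min > max;
    }

    /** Returns {lower, upper}, always with lower < upper. */
    double[] range() {
        if (isEmpty()) {
            return new double[] {0, 1}; // same fallback as the snippet above
        }
        if (min == max) {
            // A fixed pad of 0.5 would vanish for large values such as nanosecond
            // timestamps, so use at least a few ulps of the value
            double pad = Math.max(0.5, 16 * Math.ulp(min));
            return new double[] {min - pad, max + pad};
        }
        return new double[] {min, max};
    }
}
```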

Formatting the Time Stamps

To display the time stamps on the X axis nicely, we need to specify a format; otherwise the time stamps are displayed as raw long values. We use TmfTimestampFormat to stay consistent with the other TMF views. We also need to handle the TmfTimestampFormatUpdateSignal so that the time stamps are updated when the preferences change.

@Override

public void createPartControl(Composite parent) {

...

chart.getAxisSet().getXAxis(0).getTick().setFormat(new TmfChartTimeStampFormat());

}

public class TmfChartTimeStampFormat extends SimpleDateFormat {

private static final long serialVersionUID = 1L;

@Override

public StringBuffer format(Date date, StringBuffer toAppendTo, FieldPosition fieldPosition) {

long time = date.getTime();

toAppendTo.append(TmfTimestampFormat.getDefaulTimeFormat().format(time));

return toAppendTo;

}

}

@TmfSignalHandler

public void timestampFormatUpdated(TmfTimestampFormatUpdateSignal signal) {

// Called when the time stamp preference is changed

chart.getAxisSet().getXAxis(0).getTick().setFormat(new TmfChartTimeStampFormat());

chart.redraw();

}

We also need to populate the view when a trace is already selected and the view is opened. We can reuse the same code by having the view send the TmfTraceSelectedSignal to itself.

@Override

public void createPartControl(Composite parent) {

...

ITmfTrace trace = getActiveTrace();

if (trace != null) {

traceSelected(new TmfTraceSelectedSignal(this, trace));

}

}

The view is now ready but we need a proper trace to test it. For this example, a trace was generated using LTTng-UST so that it would produce a sine function.

In summary, we have implemented a simple TMF view using the SWTChart library. We made use of signals and requests to populate the view at the appropriate time, and we formatted the time stamps nicely. We also made sure that the time stamp format is updated when the preferences change.

TMF Built-in Views and Viewers

TMF provides base implementations for several types of views and viewers for generating custom X-Y-Charts, Time Graphs, or Trees. They are well integrated with various TMF features such as reading traces and time synchronization with other views. They also handle mouse events for navigating the trace and view, zooming or presenting detailed information at the mouse position. The code can be found in the TMF UI plug-in org.eclipse.linuxtools.tmf.ui. See below for a list of relevant Java packages:

- Generic

  - org.eclipse.linuxtools.tmf.ui.views: Common TMF view base classes

- X-Y-Chart

  - org.eclipse.linuxtools.tmf.ui.viewers.xycharts: Common base classes for X-Y-Chart viewers based on SWTChart

  - org.eclipse.linuxtools.tmf.ui.viewers.xycharts.barcharts: Base classes for bar charts

  - org.eclipse.linuxtools.tmf.ui.viewers.xycharts.linecharts: Base classes for line charts

- Time Graph View

  - org.eclipse.linuxtools.tmf.ui.widgets.timegraph: Base classes for time graphs such as Gantt charts

- Tree Viewer

  - org.eclipse.linuxtools.tmf.ui.viewers.tree: Base classes for TMF-specific tree viewers

Several features in TMF and the Eclipse LTTng integration use this framework and can serve as examples for further development:

- X-Y-Chart

  - org.eclipse.linuxtools.internal.lttng2.ust.ui.views.memusage.MemUsageView.java

  - org.eclipse.linuxtools.internal.lttng2.kernel.ui.views.cpuusage.CpuUsageView.java

  - org.eclipse.linuxtools.tracing.examples.ui.views.histogram.NewHistogramView.java

- Time Graph View

  - org.eclipse.linuxtools.internal.lttng2.kernel.ui.views.controlflow.ControlFlowView.java

  - org.eclipse.linuxtools.internal.lttng2.kernel.ui.views.resources.ResourcesView.java

- Tree Viewer

  - org.eclipse.linuxtools.tmf.ui.views.statesystem.TmfStateSystemExplorer.java

  - org.eclipse.linuxtools.internal.lttng2.kernel.ui.views.cpuusage.CpuUsageComposite.java

Component Interaction

TMF provides a mechanism for different components to interact with each other using signals. The signals can carry information that is specific to each signal.

The TMF Signal Manager handles registration of components and the broadcasting of signals to their intended receivers.

Components can register as VIP receivers, which ensures that they receive the signal before non-VIP receivers.

Sending Signals

In order to send a signal, an instance of the signal must be created and passed as argument to the signal manager to be dispatched. Every component that can handle the signal will receive it. The receivers do not need to be known by the sender.

TmfExampleSignal signal = new TmfExampleSignal(this, ...);

TmfSignalManager.dispatchSignal(signal);

If the sender is an instance of the class TmfComponent, the broadcast method can be used:

TmfExampleSignal signal = new TmfExampleSignal(this, ...);

broadcast(signal);

Receiving Signals

In order to receive any signal, the receiver must first be registered with the signal manager. The receiver can register as a normal or VIP receiver.

TmfSignalManager.register(this); // as a normal receiver

TmfSignalManager.registerVIP(this); // or as a VIP receiver

If the receiver is an instance of the class TmfComponent, it is automatically registered as a normal receiver in the constructor.

When the receiver is destroyed or disposed, it should deregister itself from the signal manager.

TmfSignalManager.deregister(this);

To actually receive and handle any specific signal, the receiver must use the @TmfSignalHandler annotation and implement a method that will be called when the signal is broadcast. The name of the method is irrelevant.

@TmfSignalHandler

public void example(TmfExampleSignal signal) {

...

}

A component that is also a receiver of a signal it broadcasts can use the signal's source to ignore the signals it sent itself, if it only needs to handle the signal when it was sent by another component or by another instance of the same component.
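To make the mechanism concrete, here is a minimal, self-contained sketch of annotation-based dispatch with VIP ordering. It is not TMF's implementation: SignalHandler, Signal and MiniSignalManager are hypothetical stand-ins for @TmfSignalHandler, TmfSignal and TmfSignalManager, and the synch signals and threading details are omitted:

```java
import java.lang.annotation.ElementType;
import java.lang.annotation.Retention;
import java.lang.annotation.RetentionPolicy;
import java.lang.annotation.Target;
import java.lang.reflect.InvocationTargetException;
import java.lang.reflect.Method;
import java.util.ArrayList;
import java.util.List;

/** Hypothetical stand-in for @TmfSignalHandler. */
@Retention(RetentionPolicy.RUNTIME)
@Target(ElementType.METHOD)
@interface SignalHandler {
}

/** Hypothetical stand-in for TmfSignal: every signal knows its source. */
class Signal {
    private final Object source;

    Signal(Object source) {
        this.source = source;
    }

    Object getSource() {
        return source;
    }
}

/** Minimal dispatcher sketch: VIP receivers are served before normal ones. */
final class MiniSignalManager {
    private static final List<Object> vipReceivers = new ArrayList<>();
    private static final List<Object> normalReceivers = new ArrayList<>();

    static synchronized void register(Object receiver) {
        normalReceivers.add(receiver);
    }

    static synchronized void registerVIP(Object receiver) {
        vipReceivers.add(receiver);
    }

    static synchronized void deregister(Object receiver) {
        vipReceivers.remove(receiver);
        normalReceivers.remove(receiver);
    }

    static void dispatchSignal(Signal signal) {
        List<Object> receivers;
        synchronized (MiniSignalManager.class) {
            // Copy so that handlers may (de)register receivers while we iterate
            receivers = new ArrayList<>(vipReceivers);
            receivers.addAll(normalReceivers);
        }
        for (Object receiver : receivers) {
            for (Method method : receiver.getClass().getMethods()) {
                Class<?>[] params = method.getParameterTypes();
                // A handler is a public annotated method whose single parameter accepts this signal
                if (method.isAnnotationPresent(SignalHandler.class)
                        && params.length == 1 && params[0].isInstance(signal)) {
                    try {
                        method.invoke(receiver, signal);
                    } catch (IllegalAccessException | InvocationTargetException e) {
                        throw new IllegalStateException("Signal handler failed: " + method, e);
                    }
                }
            }
        }
    }
}
```

A receiver that should ignore its own broadcasts can start its handler with if (signal.getSource() == this) { return; }.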

Signal Throttling

It is possible for a TmfComponent instance to buffer the dispatching of signals, so that a queued signal is sent to the receivers only once a specified delay has elapsed without any newer signal being queued. All signals that are preempted by a newer signal within the delay are discarded.

The signal throttler must first be initialized:

final int delay = 100; // in ms

TmfSignalThrottler throttler = new TmfSignalThrottler(this, delay);

Then the sending of signals should be queued through the throttler:

TmfExampleSignal signal = new TmfExampleSignal(this, ...);

throttler.queue(signal);

When the throttler is no longer needed, it should be disposed:

throttler.dispose();
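The throttling behaviour can be modelled with a scheduled executor: each queued signal cancels the one still waiting and restarts the delay. MiniThrottler is a hypothetical sketch that dispatches through a Consumer, whereas TmfSignalThrottler is constructed with a component and a delay as shown above:

```java
import java.util.concurrent.Executors;
import java.util.concurrent.ScheduledExecutorService;
import java.util.concurrent.ScheduledFuture;
import java.util.concurrent.TimeUnit;
import java.util.function.Consumer;

/** Hypothetical throttler: a signal is passed on only if no newer one is queued within delayMs. */
final class MiniThrottler<S> {
    private final ScheduledExecutorService timer = Executors.newSingleThreadScheduledExecutor();
    private final Consumer<S> dispatcher;
    private final long delayMs;
    private ScheduledFuture<?> pending;

    MiniThrottler(Consumer<S> dispatcher, long delayMs) {
        this.dispatcher = dispatcher;
        this.delayMs = delayMs;
    }

    /** Discards the signal still waiting (if any) and restarts the delay for this one. */
    synchronized void queue(S signal) {
        if (pending != null) {
            pending.cancel(false); // no effect if that signal is already being dispatched
        }
        pending = timer.schedule(() -> dispatcher.accept(signal), delayMs, TimeUnit.MILLISECONDS);
    }

    /** Stops the timer thread; a signal still waiting is dropped. */
    synchronized void dispose() {
        timer.shutdownNow();
    }
}
```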

Signal Reference

The following is a list of built-in signals defined in the framework.

TmfStartSynchSignal

Purpose

This signal is used to indicate the start of broadcasting of a signal. Internally, the data provider will not fire event requests until the corresponding TmfEndSynchSignal signal is received. This allows coalescing of requests triggered by multiple receivers of the broadcast signal.

Senders

Sent by TmfSignalManager before dispatching a signal to all receivers.

Receivers

Received by TmfDataProvider.

TmfEndSynchSignal

Purpose

This signal is used to indicate the end of broadcasting of a signal. Internally, the data provider fires all pending event requests that were received and buffered since the corresponding TmfStartSynchSignal signal was received. This allows coalescing of requests triggered by multiple receivers of the broadcast signal.

Senders

Sent by TmfSignalManager after dispatching a signal to all receivers.

Receivers

Received by TmfDataProvider.
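The coalescing window between the two signals can be pictured with the following sketch. SynchBuffer is hypothetical: requests are plain strings, a batch handed to one consumer call stands in for a single coalesced pass over the trace, and start/end pairs are assumed to be able to nest, hence the depth counter:

```java
import java.util.ArrayList;
import java.util.List;
import java.util.function.Consumer;

/** Hypothetical model of a provider holding back requests during a broadcast. */
final class SynchBuffer {
    private final Consumer<List<String>> runBatch;
    private final List<String> pending = new ArrayList<>();
    private int depth; // number of start signals not yet matched by an end signal

    SynchBuffer(Consumer<List<String>> runBatch) {
        this.runBatch = runBatch;
    }

    /** Equivalent of receiving the start signal. */
    synchronized void startSynch() {
        depth++;
    }

    synchronized void sendRequest(String request) {
        pending.add(request);
        if (depth == 0) {
            flush(); // outside a broadcast: run immediately
        }
    }

    /** Equivalent of receiving the end signal. */
    synchronized void endSynch() {
        if (depth > 0 && --depth == 0) {
            flush(); // outermost end signal: run everything buffered together
        }
    }

    private void flush() {
        if (!pending.isEmpty()) {
            runBatch.accept(new ArrayList<>(pending));
            pending.clear();
        }
    }
}
```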

TmfTraceOpenedSignal

Purpose

This signal is used to indicate that a trace has been opened in an editor.

Senders

Sent by a TmfEventsEditor instance when it is created.

Receivers

Received by TmfTrace, TmfExperiment, TmfTraceManager and every view that shows trace data. Components that show trace data should handle this signal.

TmfTraceSelectedSignal

Purpose

This signal is used to indicate that a trace has become the currently selected trace.

Senders

Sent by a TmfEventsEditor instance when it receives focus. Components can send this signal to bring a trace editor to the front.

Receivers

Received by TmfTraceManager and every view that shows trace data. Components that show trace data should handle this signal.

TmfTraceClosedSignal

Purpose

This signal is used to indicate that a trace editor has been closed.

Senders

Sent by a TmfEventsEditor instance when it is disposed.

Receivers

Received by TmfTraceManager and every view that shows trace data. Components that show trace data should handle this signal.

TmfTraceRangeUpdatedSignal

Purpose

This signal is used to indicate that the valid time range of a trace has been updated. This triggers indexing of the trace up to the end of the range. In the context of streaming, this end time is considered a safe time up to which all events are guaranteed to have been completely received. For non-streaming traces, the end time is set to infinity indicating that all events can be read immediately. Any processing of trace events that wants to take advantage of request coalescing should be triggered by this signal.

Senders

Sent by TmfExperiment and non-streaming TmfTrace. Streaming traces should send this signal in the TmfTrace subclass when a new safe time is determined by a specific implementation.

Receivers

Received by TmfTrace, TmfExperiment and components that process trace events. Components that need to process trace events should handle this signal.

TmfTraceUpdatedSignal

Purpose

This signal is used to indicate that new events have been indexed for a trace.

Senders

Sent by TmfCheckpointIndexer when new events have been indexed and the number of events has changed.

Receivers

Received by components that need to be notified of a new trace event count.

TmfTimeSynchSignal

Purpose

This signal is used to indicate that a new time or time range has been selected. It contains a begin and end time. If a single time is selected then the begin and end time are the same.

Senders

Sent by any component that allows the user to select a time or time range.

Receivers

Received by any component that needs to be notified of the currently selected time or time range.

TmfRangeSynchSignal

Purpose

This signal is used to indicate that a new time range window has been set.

Senders

Sent by any component that allows the user to set a time range window.

Receivers

Received by any component that needs to be notified of the current visible time range window.

TmfEventFilterAppliedSignal

Purpose

This signal is used to indicate that a filter has been applied to a trace.

Senders

Sent by TmfEventsTable when a filter is applied.

Receivers

Received by any component that shows trace data and needs to be notified of applied filters.

TmfEventSearchAppliedSignal

Purpose

This signal is used to indicate that a search has been applied to a trace.

Senders

Sent by TmfEventsTable when a search is applied.

Receivers

Received by any component that shows trace data and needs to be notified of applied searches.

TmfTimestampFormatUpdateSignal

Purpose

This signal is used to indicate that the timestamp format preference has been updated.

Senders

Sent by TmfTimestampFormat when the default timestamp format preference is changed.

Receivers

Received by any component that needs to refresh its display for the new timestamp format.

TmfStatsUpdatedSignal

Purpose

This signal is used to indicate that the statistics data model has been updated.

Senders

Sent by statistic providers when new statistics data has been processed.

Receivers

Received by statistics viewers and any component that needs to be notified of a statistics update.

TmfPacketStreamSelected

Purpose

This signal is used to indicate that the user has selected a packet stream to analyze.

Senders

Sent by the Stream List View when the user selects a new packet stream.

Receivers

Received by views that analyze packet streams.

Debugging

TMF has built-in Eclipse tracing support for the debugging of signal interaction between components. To enable it, open the Run/Debug Configuration... dialog, select a configuration, click the Tracing tab, select the plug-in org.eclipse.linuxtools.tmf.core, and check the signal item.

All signals sent and received will be logged to the file TmfTrace.log located in the Eclipse home directory.

Generic State System

Introduction

The Generic State System is a utility available in TMF to track different states over the duration of a trace. It works by first sending some or all events of the trace into a state provider, which defines the state changes for a given trace type. Once built, views and analysis modules can then query the resulting database of states (called "state history") to get information.

For example, let's suppose we have the following sequence of events in a kernel trace:

10 s, sys_open, fd = 5, file = /home/user/myfile
...
15 s, sys_read, fd = 5, size = 32
...
20 s, sys_close, fd = 5

Now let's say we want to implement an analysis module that tracks the number of bytes read from and written to each file. Here, the sys_read event is obviously the interesting one. However, that event alone does not tell us which file is being read: only its file descriptor (5) is known. To map fd 5 to /home/user/myfile, we have to go back to the sys_open event, which happened 5 seconds earlier.

But since we don't know exactly where this sys_open event is, we would have to go back to the very start of the trace and look through the events one by one! This is obviously not efficient, and will not scale well if we want to analyze many similar patterns or very large traces.

A solution in this case would be to use the state system to keep track of the number of bytes read/written for every *filename* (instead of every file descriptor, which is what the events give us). The module could then ask the state system "how many bytes were read from file /home/user/myfile at time 16 s?", and it would return the answer "32" (assuming there is no other read than the one shown).
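To make the problem concrete, here is a minimal sketch in plain Java of the bookkeeping a module would otherwise have to do by hand. It is a toy, not TMF code: the BytesReadTracker and SysEvent classes are hypothetical, and the tracker only keeps the *current* totals. The state system additionally stores every past value as intervals, which is what lets the same question be asked for any timestamp.

```java
import java.util.HashMap;
import java.util.Map;

/** Toy model (not TMF code) of the fd-to-filename bookkeeping described above. */
class BytesReadTracker {

    /** Hypothetical minimal event carrying only the fields this example needs. */
    static final class SysEvent {
        final long time;
        final String name;
        final int fd;
        final String file;
        final long size;

        SysEvent(long time, String name, int fd, String file, long size) {
            this.time = time;
            this.name = name;
            this.fd = fd;
            this.file = file;
            this.size = size;
        }
    }

    /** Current state: which file each open descriptor refers to. */
    private final Map<Integer, String> openFiles = new HashMap<>();
    /** Running total of bytes read, keyed by filename instead of fd. */
    private final Map<String, Long> bytesRead = new HashMap<>();

    void handle(SysEvent e) {
        switch (e.name) {
            case "sys_open":
                openFiles.put(e.fd, e.file);
                break;
            case "sys_read": {
                String file = openFiles.get(e.fd);
                if (file != null) { // fd opened before the trace started: file unknown
                    bytesRead.merge(file, e.size, Long::sum);
                }
                break;
            }
            case "sys_close":
                openFiles.remove(e.fd);
                break;
            default:
                break; // other events do not affect this model
        }
    }

    /** Total bytes read so far from the given file (0 if none). */
    long bytesReadFor(String file) {
        return bytesRead.getOrDefault(file, 0L);
    }
}
```

Feeding it the three events above (open at 10 s, read of 32 bytes at 15 s, close at 20 s) leaves 32 bytes attributed to /home/user/myfile.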

High-level components

The State System infrastructure is composed of 3 parts:

- The state provider

- The central state system

- The storage backend

The state provider is the customizable part. This is where the mapping from trace events to state changes is done. This is what you want to implement for your specific trace type and analysis type. It's represented by the ITmfStateProvider interface (with a threaded implementation in AbstractTmfStateProvider, which you can extend).

The core of the state system is exposed through the ITmfStateSystem and ITmfStateSystemBuilder interfaces. The former provides read-only access and is typically used by views doing queries. The latter also allows writing to the state history, and is typically used by the state provider.

Finally, each state system has its own separate backend. This determines how the intervals, or the "state history", are saved (in RAM, on disk, etc.). You can select the type of backend at construction time in the TmfStateSystemFactory.

Definitions

Before we dig into how to use the state system, we should go over some useful definitions:

Attribute

An attribute is the smallest element of the model that can be in any particular state. When we refer to the "full state", in fact it means we are interested in the state of every single attribute of the model.

Attribute Tree

Attributes in the model can be placed in a tree-like structure, a bit like files and directories in a file system. However, note that an attribute can always have both a value and sub-attributes, so they are like files and directories at the same time. We are then able to refer to every single attribute with its path in the tree.

For example, in the attribute tree for LTTng kernel traces, we use the following attributes, among others:

|- Processes
| |- 1000
| | |- PPID
| | |- Exec_name
| |- 1001
| | |- PPID
| | |- Exec_name
| ...
|- CPUs
| |- 0
| | |- Status
| | |- Current_pid
| ...

In this model, the attribute "Processes/1000/PPID" refers to the PPID of the process with PID 1000. The attribute "CPUs/0/Status" represents the status (running, idle, etc.) of CPU 0. "Processes/1000/PPID" and "Processes/1001/PPID" are two different attributes, even though their base name is the same: the whole path is the unique identifier.

The value of each attribute can change over the duration of the trace, independently of the other ones, and independently of its position in the tree.

The tree-like organization is optional: all attributes could be at the same level. However, putting them in a tree helps make things clearer.

Quark

In addition to a given path, each attribute also has a unique integer identifier, called the "quark". To continue with the file system analogy, this is like the inode number. When a new attribute is created, a new unique quark will be assigned automatically. They are assigned incrementally, so they will normally be equal to their order of creation, starting at 0.

Methods are offered to get the quark of an attribute from its path. The API methods for inserting state changes and doing queries normally use quarks instead of paths. This encourages users to cache the quarks and re-use them, which avoids walking the attribute tree over and over, and thus avoids unneeded hashing of strings.

State value

The path and quark of an attribute will remain constant for the whole duration of the trace. However, the value carried by the attribute will change. The value of a specific attribute at a specific time is called the state value.

In the TMF implementation, state values can be integers, longs, doubles, or strings. There is also a "null value" type, which is used to indicate that no particular value is active for this attribute at this time, but without resorting to a 'null' reference.

Any other type of value could be used, as long as the backend knows how to store it.

Note that the TMF implementation also forces every attribute to always carry the same type of state value. This makes things simpler for views, which can expect an attribute to always use a given type without having to check every single time. Null values are an exception: they are always allowed for all attributes, since they can safely be "unboxed" into all types.

State change

A state change is the element that is inserted in the state system. It consists of:

- a timestamp (the time at which the state change occurs)

- an attribute (the attribute whose value will change)

- a state value (the new value that the attribute will carry)

It's not an object per se in the TMF implementation (it's represented by a function call in the state provider). Typically, the state provider will insert zero, one or more state changes for every trace event, depending on its event type, payload, etc.

Note that we use "timestamp" here, but it is in fact a generic term that could also be called an "index". For example, if a given trace type has no notion of timestamp, the event rank could be used.

In the TMF implementation, the timestamp is a long (64-bit integer).

State interval

State changes are inserted into the state system, but state intervals are the objects that come out on the other side. Those are stored in the storage backend. A state interval represents a "state" of an attribute we want to track. When doing queries on the state system, intervals are what is returned. The components of a state interval are:

- Start time

- End time

- State value

- Quark

The start and end times represent the time range of the state. The state value is the same as the state value in the state change that started this interval. The interval also keeps a reference to its quark, although you normally know your quark in advance when you do queries.
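The toy sketch below shows one way state changes can be turned into intervals. It is not the TMF implementation: ToyStateBuilder and ToyInterval are hypothetical names, values are plain Objects (null standing for the null value), and in this toy intervals are inclusive, so a state replaced at time t ends at t - 1. It also rejects out-of-order changes, since the construction phase expects chronological input.

```java
import java.util.ArrayList;
import java.util.HashMap;
import java.util.List;
import java.util.Map;

/** Hypothetical interval type holding the four components listed above. */
final class ToyInterval {
    final long start;
    final long end;
    final Object value; // null stands for the "null value"
    final int quark;

    ToyInterval(long start, long end, Object value, int quark) {
        this.start = start;
        this.end = end;
        this.value = value;
        this.quark = quark;
    }
}

/** Toy builder turning state changes into intervals (not the TMF implementation). */
class ToyStateBuilder {
    private final long traceStart;
    private long lastTime;
    /** Start time and value of the ongoing (not yet closed) state of each quark. */
    private final Map<Integer, Long> ongoingStart = new HashMap<>();
    private final Map<Integer, Object> ongoingValue = new HashMap<>();
    private final List<ToyInterval> history = new ArrayList<>();

    ToyStateBuilder(long traceStart) {
        this.traceStart = traceStart;
        this.lastTime = traceStart;
    }

    /** One state change: at time t, attribute 'quark' takes the given value. */
    void modifyAttribute(long t, Object value, int quark) {
        if (t < lastTime) {
            throw new IllegalArgumentException("state changes must arrive in chronological order");
        }
        lastTime = t;
        long start = ongoingStart.getOrDefault(quark, traceStart);
        if (t > start) {
            // The previous state (null if never set) is closed just before t
            history.add(new ToyInterval(start, t - 1, ongoingValue.get(quark), quark));
        }
        ongoingStart.put(quark, t);
        ongoingValue.put(quark, value);
    }

    /** End of the construction phase: close every ongoing state at endTime. */
    List<ToyInterval> closeHistory(long endTime) {
        for (Map.Entry<Integer, Long> e : ongoingStart.entrySet()) {
            history.add(new ToyInterval(e.getValue(), endTime, ongoingValue.get(e.getKey()), e.getKey()));
        }
        ongoingStart.clear();
        ongoingValue.clear();
        return history;
    }
}
```

With a trace starting at 0, setting quark 0 to "running" at 10 and to "idle" at 20, then closing at 30, yields the intervals [0, 9] = null, [10, 19] = "running" and [20, 30] = "idle".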

State history

The state history is the name of the container for all the intervals created by the state system. The exact implementation (how the intervals are stored) is determined by the storage backend that is used.

Some backends will use a state history that is persistent on disk, others do not. When loading a trace, if a history file is available and the backend supports it, it will be loaded right away, skipping the need for another construction phase.

Construction phase

Before we can query a state system, we need to build the state history first. To do so, trace events are sent one-by-one through the state provider, which in turn sends state changes to the central component, which then creates intervals and stores them in the backend. This is called the construction phase.

Note that the state system needs to receive its events in chronological order. This phase ends once the end of the trace is reached.

Also note that it is possible to query the state system while it is being built. Any timestamp between the start of the trace and the current end time of the state system (available with ITmfStateSystem#getCurrentEndTime()) is a valid timestamp that can be queried.

Queries

As mentioned previously, when doing queries on the state system, the returned objects will be state intervals. In most cases it's the state *value* we are interested in, but since the backend has to instantiate the interval object anyway, there is no additional cost to return the interval instead. This way we also get the start and end times of the state "for free".

There are two types of queries that can be done on the state system:

Full queries

A full query means that we want to retrieve the whole state of the model at one given timestamp. Recall that this means "the state of every single attribute in the model". The only parameter we need to pass is the timestamp (see the API methods below). The return value will be a list of intervals, where the index in the list is the quark of each attribute.

Single queries

In other cases, we might only be interested in the state of one particular attribute at one given timestamp. For these cases it's better to use a single query. For a single query, we need to pass both a timestamp and a quark as parameters. The return value will be a single interval, representing the state of this particular attribute at that time.

Single queries are typically faster than full queries, but not by much (once again, this depends on the backend that is used). Even if you only want the state of, say, 10 attributes out of 200, it could be faster to use a full query and only read the ones you need. Single queries should be used for cases where you only want one attribute per timestamp (for example, if you follow the state of the same attribute over a time range).
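A toy sketch of the two query shapes, assuming the intervals are already built. ToyHistory and QInterval are hypothetical and use linear scans for brevity; a real backend answers these queries far more efficiently. What matters here is the return shape: a full query gives one interval per attribute, indexed by quark, while a single query gives exactly one interval.

```java
import java.util.ArrayList;
import java.util.Arrays;
import java.util.List;

/** Hypothetical finished interval, inclusive on both ends. */
final class QInterval {
    final long start;
    final long end;
    final int quark;
    final String value;

    QInterval(long start, long end, int quark, String value) {
        this.start = start;
        this.end = end;
        this.quark = quark;
        this.value = value;
    }

    boolean contains(long t) {
        return start <= t && t <= end;
    }
}

/** Toy history over a fixed number of attributes (quarks 0 .. nbAttributes-1). */
class ToyHistory {
    private final List<QInterval> intervals = new ArrayList<>();
    private final int nbAttributes;

    ToyHistory(int nbAttributes) {
        this.nbAttributes = nbAttributes;
    }

    void add(QInterval interval) {
        intervals.add(interval);
    }

    /** Full query: the state of every attribute at t; the list index is the quark. */
    List<QInterval> queryFullState(long t) {
        QInterval[] result = new QInterval[nbAttributes];
        for (QInterval i : intervals) {
            if (i.contains(t)) {
                result[i.quark] = i;
            }
        }
        return Arrays.asList(result);
    }

    /** Single query: the interval of one attribute at t, or null if none is stored. */
    QInterval querySingleState(long t, int quark) {
        for (QInterval i : intervals) {
            if (i.quark == quark && i.contains(t)) {
                return i;
            }
        }
        return null;
    }
}
```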

Relevant interfaces/classes

This section describes the public interfaces and classes that can be used if you want to use the state system.

Main classes in org.eclipse.linuxtools.tmf.core.statesystem

ITmfStateProvider / AbstractTmfStateProvider

ITmfStateProvider is the interface you have to implement to define your state provider. This is where most of the work has to be done to use a state system for a custom trace type or analysis type.

For first-time users, it's recommended to extend AbstractTmfStateProvider instead. This class takes care of all the initialization mumbo-jumbo, and also runs the event handler in a separate thread. You will only need to implement eventHandle, which is the call-back that will be called for every event in the trace.

For an example, you can look at StatsStateProvider in the TMF tree, or at the small example below.
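The threading pattern described for AbstractTmfStateProvider can be pictured with the toy sketch below: the trace reader hands events to a queue, a separate thread calls eventHandle() on each one, and the history is closed once the end of the trace is reached. This is only an illustration under assumptions; ToyThreadedProvider, start(), processEvent() and waitForEnd() are hypothetical names, not the real class's API.

```java
import java.util.concurrent.ArrayBlockingQueue;
import java.util.concurrent.BlockingQueue;

/** Toy base class (not AbstractTmfStateProvider) showing the threading pattern. */
abstract class ToyThreadedProvider {
    /** Marker object put in the queue after the last event. */
    private static final Object END_OF_TRACE = new Object();

    private final BlockingQueue<Object> queue = new ArrayBlockingQueue<>(1000);
    private Thread worker;

    /** Starts the event-handling thread; the name is only useful when debugging. */
    void start(String threadName) {
        worker = new Thread(this::drain, threadName);
        worker.start();
    }

    /** Called by the trace reader: blocks if the worker falls too far behind. */
    void processEvent(Object event) {
        try {
            queue.put(event);
        } catch (InterruptedException e) {
            Thread.currentThread().interrupt();
            throw new IllegalStateException(e);
        }
    }

    /** Signals the end of the trace and waits until the worker is done. */
    void waitForEnd() {
        try {
            queue.put(END_OF_TRACE);
            worker.join();
        } catch (InterruptedException e) {
            Thread.currentThread().interrupt();
            throw new IllegalStateException(e);
        }
    }

    private void drain() {
        try {
            Object ev;
            while ((ev = queue.take()) != END_OF_TRACE) {
                eventHandle(ev);
            }
            closeHistory(); // called automatically once the end is reached
        } catch (InterruptedException e) {
            Thread.currentThread().interrupt();
        }
    }

    /** The only method a subclass has to implement: called once per event. */
    protected abstract void eventHandle(Object event);

    /** Tells the (hypothetical) backend that no more intervals will come. */
    protected void closeHistory() {
        // nothing to do in this toy
    }
}
```

Because events are taken from the queue one at a time by a single worker thread, eventHandle() sees them in the order the reader delivered them, which preserves the chronological order the state system needs.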

TmfStateSystemFactory

Once you have defined your state provider, you need to tell your trace type to build a state system with this provider during its initialization. To do so, override TmfTrace#buildStateSystems() and, in it, call the TmfStateSystemFactory method that corresponds to the storage backend you want to use (see the section Comparison of state system backends).

You will have to pass the state provider you want to use, which you should have defined already, as a parameter. Each backend can also ask for more configuration information.

You must then call registerStateSystem(id, statesystem) to make your state system visible to the trace objects and the views. The ID can be any string of your choosing. To access this particular state system, the views or modules will need to use this ID.

Also, don't forget to call super.buildStateSystems() in your implementation, unless you know for sure you want to skip the state providers built by the super-classes.

You can look at how LttngKernelTrace does it for an example. It could also be possible to build a state system only under certain conditions (like only if the trace contains certain event types).

ITmfStateSystem

ITmfStateSystem is the main interface through which views or analysis modules will access the state system. It offers a read-only view of the state system, which means that no states can be inserted, and no attributes can be created. Calling TmfTrace#getStateSystems().get(id) will return an ITmfStateSystem view of the requested state system. The main methods of interest are:

getQuarkAbsolute()/getQuarkRelative()

Those are the basic quark-getting methods. The goal of the state system is to return the state values of given attributes at given timestamps. As we've seen earlier, attributes can be described with a file-system-like path. The goal of these methods is to convert from the path representation of the attribute to its quark.

Since quarks are created on-the-fly, there is no guarantee that the same attributes will have the same quark for two traces of the same type. The views should always query their quarks when dealing with a new trace or a new state provider. Beyond that however, quarks should be cached and reused as much as possible, to avoid potentially costly string re-hashing.

getQuarkAbsolute() takes a variable number of String arguments, which together represent the full path to the attribute. Some of them can be constants, while others can come programmatically, often from the event's fields.

getQuarkRelative() is to be used when you already know the quark of a certain attribute and want to access one of its sub-attributes. Its first parameter is the origin quark, followed by String varargs which represent the relative path to the final attribute.

These two methods will throw an AttributeNotFoundException if trying to access an attribute that does not exist in the model.

These methods also imply that the view has the knowledge of how the attribute tree is organized. This should be a reasonable hypothesis, since the same analysis plugin will normally ship both the state provider and the view, and they will have been written by the same person. In other cases, it's possible to use getSubAttributes() to explore the organization of the attribute tree first.

waitUntilBuilt()

This is a simple method used to block the caller until the construction phase of this state system is done. If the view prefers to wait until all information is available before starting to do queries (to get all known attributes right away, for example), this is the guy to call.

queryFullState()

This is the method to do full queries. As mentioned earlier, you only need to pass a target timestamp in parameter. It will return a List of state intervals, in which the offset corresponds to the attribute quark. This will represent the complete state of the model at the requested time.

querySingleState()

The method to do single queries. You pass in parameter both a timestamp and an attribute quark. This will return the single state matching this timestamp/attribute pair.

Other methods are available; you are encouraged to read their Javadoc to see if they could be useful.

ITmfStateSystemBuilder

ITmfStateSystemBuilder is the read-write interface to the state system. It extends ITmfStateSystem itself, so all its methods are available. It then adds methods that can be used to write to the state system, either by creating new attributes or by inserting state changes.

It is normally reserved for the state provider and should not be visible to external components. However it will be available in AbstractTmfStateProvider, in the field 'ss'. That way you can call ss.modifyAttribute() etc. in your state provider to write to the state.

The main methods of interest are:

getQuark*AndAdd()

getQuarkAbsoluteAndAdd() and getQuarkRelativeAndAdd() work exactly like their non-AndAdd counterparts in ITmfStateSystem. The difference is that the -AndAdd versions will not throw any exception: if the requested attribute path does not exist in the system, it will be created, and its newly-assigned quark will be returned.

When in a state provider, the -AndAdd versions should normally be used (unless you know for sure the attribute already exists and do not want to create it otherwise). This means that there is no need to define the whole attribute tree in advance: the attributes will be created on demand.
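To illustrate how paths, quarks and the -AndAdd methods relate, here is a toy attribute tree in plain Java. It is not the TMF implementation: ToyAttributeTree is hypothetical, and it throws IllegalArgumentException where the real non-AndAdd methods throw AttributeNotFoundException. Quarks are handed out in creation order, starting at 0, as described in the Definitions section.

```java
import java.util.ArrayList;
import java.util.Collections;
import java.util.HashMap;
import java.util.List;
import java.util.Map;

/** Toy attribute tree (not the TMF implementation): paths map to integer quarks. */
class ToyAttributeTree {
    /** Pseudo-quark of the tree root, used as the origin of absolute paths. */
    static final int ROOT = -1;

    /** For each parent quark, its children by name. */
    private final Map<Integer, Map<String, Integer>> children = new HashMap<>();
    /** Attribute names; the index in this list is the quark. */
    private final List<String> names = new ArrayList<>();

    /** Like getQuarkRelative(): fails if any element of the path is missing. */
    int getQuarkRelative(int origin, String... path) {
        int current = origin;
        for (String name : path) {
            Integer next = children.getOrDefault(current, Collections.emptyMap()).get(name);
            if (next == null) {
                // Stand-in for AttributeNotFoundException
                throw new IllegalArgumentException("Attribute not found: " + name);
            }
            current = next;
        }
        return current;
    }

    int getQuarkAbsolute(String... path) {
        return getQuarkRelative(ROOT, path);
    }

    /** Like the -AndAdd variants: missing attributes are created on demand. */
    int getQuarkRelativeAndAdd(int origin, String... path) {
        int current = origin;
        for (String name : path) {
            Map<String, Integer> kids = children.computeIfAbsent(current, k -> new HashMap<>());
            Integer next = kids.get(name);
            if (next == null) {
                next = names.size(); // quarks are assigned incrementally, from 0
                names.add(name);
                kids.put(name, next);
            }
            current = next;
        }
        return current;
    }

    int getQuarkAbsoluteAndAdd(String... path) {
        return getQuarkRelativeAndAdd(ROOT, path);
    }
}
```

In a view, the result of getQuarkAbsolute() would be looked up once per trace and then cached, rather than re-walking the path for every query.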

modifyAttribute()

This is the main state-change-insertion method. As was explained before, a state change is defined by a timestamp, an attribute and a state value. Those three elements need to be passed to modifyAttribute as parameters.

Other state change insertion methods are available (increment-, push-, pop- and removeAttribute()), but those are simply convenience wrappers around modifyAttribute(). Check their Javadoc for more information.

closeHistory()

When the construction phase is done, do not forget to call closeHistory() to tell the backend that no more intervals will be received. Depending on the backend type, it might have to save files, close descriptors, etc. This ensures that a persistent file can then be re-used when the trace is opened again.

If you use the AbstractTmfStateProvider, it will call closeHistory() automatically when it reaches the end of the trace.

Other relevant interfaces

o.e.l.tmf.core.statevalue.ITmfStateValue

This is the interface used to represent state values. State values are used when inserting state changes in the provider, and are also part of the state intervals obtained when doing queries.

The abstract TmfStateValue class contains the factory methods to create new state values of either int, long, double or string types. To retrieve the real object inside the state value, one can use the .unbox* methods.

Note: Do not instantiate null values manually, use TmfStateValue.nullValue()

o.e.l.tmf.core.interval.ITmfStateInterval

This is the interface to represent the state intervals, which are stored in the state history backend, and are returned when doing state system queries. A very simple implementation is available in TmfStateInterval. Its methods should be self-descriptive.

Exceptions

The following exceptions, found in o.e.l.tmf.core.exceptions, are related to state system activities.

AttributeNotFoundException

This is thrown by getQuarkRelative() and getQuarkAbsolute() (but not by the -AndAdd versions!) when passing an attribute path that is not present in the state system. This is to ensure that no new attribute is created when using these versions of the methods.

Views can expect some attributes to be present, but they should handle these exceptions for when the attributes end up not being in the state system (perhaps this particular trace didn't have a certain type of events, etc.)

StateValueTypeException

This exception will be thrown when trying to unbox a state value into a type different than its own. You should always check with ITmfStateValue#getType() beforehand if you are not sure about the type of a given state value.
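A toy sketch of the "check the type before unboxing" advice. ToyStateValue and StateValues.describe() are hypothetical stand-ins for ITmfStateValue/TmfStateValue: here unboxing into the wrong type throws IllegalStateException in place of StateValueTypeException, and the values returned when unboxing a null value are arbitrary choices of this toy.

```java
/** Toy state value (not TmfStateValue): a type tag plus the boxed value. */
final class ToyStateValue {
    enum Type { NULL, INTEGER, LONG, DOUBLE, STRING }

    private static final ToyStateValue NULL_VALUE = new ToyStateValue(Type.NULL, null);

    private final Type type;
    private final Object value;

    private ToyStateValue(Type type, Object value) {
        this.type = type;
        this.value = value;
    }

    /** Shared null value, used instead of a 'null' reference. */
    static ToyStateValue nullValue() {
        return NULL_VALUE;
    }

    static ToyStateValue newValueInt(int v) {
        return new ToyStateValue(Type.INTEGER, v);
    }

    static ToyStateValue newValueString(String v) {
        return new ToyStateValue(Type.STRING, v);
    }

    Type getType() {
        return type;
    }

    /** Fails on a type mismatch; the -1 returned for a null value is this toy's choice. */
    int unboxInt() {
        if (type == Type.NULL) {
            return -1;
        }
        if (type != Type.INTEGER) {
            throw new IllegalStateException("Cannot unbox " + type + " as an int");
        }
        return (Integer) value;
    }

    /** Fails on a type mismatch; the empty string for a null value is this toy's choice. */
    String unboxStr() {
        if (type == Type.NULL) {
            return "";
        }
        if (type != Type.STRING) {
            throw new IllegalStateException("Cannot unbox " + type + " as a String");
        }
        return (String) value;
    }
}

/** Hypothetical helper: check getType() first, then unbox safely. */
final class StateValues {
    private StateValues() {}

    static String describe(ToyStateValue v) {
        switch (v.getType()) {
            case INTEGER:
                return "int " + v.unboxInt();
            case STRING:
                return "string " + v.unboxStr();
            case NULL:
                return "null";
            default:
                return "other type: " + v.getType();
        }
    }
}
```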

TimeRangeException

This exception is thrown when trying to do a query on the state system for a timestamp that is outside of its range. To be safe, you should check with ITmfStateSystem#getStartTime() and #getCurrentEndTime() for the current valid range of the state system. This is especially important when doing queries on a state system that is currently being built.
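The range check can be as small as the sketch below. RangeGuard is a hypothetical helper: with a real ITmfStateSystem, startTime and currentEndTime would come from getStartTime() and getCurrentEndTime(), and while the state system is still being built, the end time should be re-read before each query since it keeps moving forward.

```java
/** Hypothetical helper for the range check described above. */
final class RangeGuard {
    private RangeGuard() {}

    /** True if t lies in [startTime, currentEndTime] and can be queried now. */
    static boolean isQueryable(long t, long startTime, long currentEndTime) {
        return startTime <= t && t <= currentEndTime;
    }

    /** Moves t into the valid range, e.g. to show the latest known state instead of failing. */
    static long clamp(long t, long startTime, long currentEndTime) {
        return Math.max(startTime, Math.min(t, currentEndTime));
    }
}
```

Whether to skip the query (isQueryable) or clamp the timestamp is a design choice: clamping suits views that always want to display something, while skipping avoids showing a state for a time that has not been processed yet.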

StateSystemDisposedException

This exception is thrown when trying to access a state system that has been disposed, with its dispose() method. This can potentially happen at shutdown, since Eclipse is not always consistent with the order in which the components are closed.

Comparison of state system backends

As we have seen in the High-level components section, the state system needs a storage backend to save the intervals. Different implementations are available when building your state system from TmfStateSystemFactory.

Do not confuse full/single queries with full/partial history! All backend types should be able to handle any type of queries defined in the ITmfStateSystem API, unless noted otherwise.

Full history

Available with TmfStateSystemFactory#newFullHistory(). The full history uses a History Tree data structure, which is optimized for storing state intervals on disk. Once built, it can answer queries in O(log n) time.

You need to specify a file at creation time, which will be the container for the history tree. Once it's completely built, it will remain on disk (until you delete the trace from the project). This way it can be reused from one session to another, which makes subsequent loading time much faster.

This is the backend used by the LTTng kernel plugin. It offers good scalability and performance, even at extreme sizes (it has been tested with traces of up to 500 GB). Its main downside is the amount of disk space required: since every single interval is written to disk, the size of the history file can quite easily reach and even surpass the size of the trace itself.

Null history

Available with TmfStateSystemFactory#newNullHistory(). As its name implies the null history is in fact an absence of state history. All its query methods will return null (see the Javadoc in NullBackend).

Obviously, no file is required, and almost no memory space is used.

It's meant to be used in cases where you are not interested in past states, but only in the "ongoing" one. It can also be useful for debugging and benchmarking.

In-memory history

Available with TmfStateSystemFactory#newInMemHistory(). This is a simple wrapper using a TreeSet to store all state intervals in memory. The implementation is quite simple at the moment: it performs a binary search on the entries to find the ones that match a query.

The advantage of this backend is that it's very quick to build and query, since all the information resides in memory. However, you are limited to 2^31 entries (roughly 2 billion), and depending on your state provider and trace type, that limit can be reached really fast!

There are no safeguards, so if you exceed the limit you will end up with ArrayIndexOutOfBoundsExceptions everywhere. If your trace or state history can be arbitrarily big, it's probably safer to use a full history instead.
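A minimal sketch of the binary-search lookup idea, for a single attribute. InMemoryAttributeHistory is hypothetical and uses an ArrayList sorted by end time rather than a TreeSet. Because the intervals of one attribute do not overlap and arrive in chronological order, the first interval whose end time is at or after t is the only candidate that can contain t.

```java
import java.util.ArrayList;
import java.util.List;

/** Toy in-memory history of one attribute (not the TMF in-memory backend). */
class InMemoryAttributeHistory {
    static final class Entry {
        final long start;
        final long end;
        final long value;

        Entry(long start, long end, long value) {
            this.start = start;
            this.end = end;
            this.value = value;
        }
    }

    /** Sorted by end time, since intervals are added in chronological order. */
    private final List<Entry> entries = new ArrayList<>();

    void add(long start, long end, long value) {
        entries.add(new Entry(start, end, value));
    }

    /** Binary search for the first interval ending at or after t; null if none contains t. */
    Entry query(long t) {
        int lo = 0;
        int hi = entries.size() - 1;
        Entry candidate = null;
        while (lo <= hi) {
            int mid = (lo + hi) >>> 1;
            Entry e = entries.get(mid);
            if (e.end < t) {
                lo = mid + 1;
            } else {
                candidate = e;
                hi = mid - 1;
            }
        }
        return (candidate != null && candidate.start <= t) ? candidate : null;
    }

    /** List indexes are ints, so this toy cannot exceed about Integer.MAX_VALUE entries. */
    int size() {
        return entries.size();
    }
}
```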

Partial history

Available with TmfStateSystemFactory#newPartialHistory(). The partial history is a more advanced form of the full history. Instead of writing all state intervals to disk like with the full history, we only write a small fraction of them, and go back to read the trace to recreate the states in-between.

It has a big advantage over a full history in terms of disk space usage. It's very possible to reduce the history tree file size by a factor of 1000, while keeping query times within a factor of two. Its main downside comes from the fact that you cannot do efficient single queries with it (they are implemented by doing full queries underneath).

This makes it a poor choice for views like the Control Flow view, where you do a lot of range queries and single queries. However, it is a perfect fit for cases like statistics, where you usually do full queries already, and you store lots of small states which are very easy to "compress".

However, it can't really be used until bug 409630 is fixed.
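The checkpoint-and-replay idea behind the partial history can be sketched with a toy counter attribute (think of an event count in the statistics). ToyPartialHistory is hypothetical and far simpler than the real backend: it keeps the full state only at every k-th event and answers a query by re-reading the events between the closest earlier checkpoint and the requested time. Fewer checkpoints mean less storage but more events to replay per query.

```java
import java.util.Map;
import java.util.TreeMap;

/** Toy partial history for one counter attribute (not the TMF partial history). */
class ToyPartialHistory {
    private final long[] times;  // event timestamps, in chronological order
    private final long[] deltas; // amount each event adds to the counter
    /** Timestamp of event j -> {j, counter value just before event j}, for every k-th event. */
    private final TreeMap<Long, long[]> checkpoints = new TreeMap<>();
    /** Number of events the last query had to re-read. */
    int lastReplayCount;

    /** Construction phase: one pass over the events, saving only some states. */
    ToyPartialHistory(long[] times, long[] deltas, int k) {
        this.times = times.clone();
        this.deltas = deltas.clone();
        long value = 0;
        for (int j = 0; j < times.length; j++) {
            if (j % k == 0) {
                checkpoints.put(times[j], new long[] {j, value});
            }
            value += deltas[j];
        }
    }

    /** Counter value after every event with a timestamp <= t. */
    long query(long t) {
        lastReplayCount = 0;
        Map.Entry<Long, long[]> cp = checkpoints.floorEntry(t);
        if (cp == null) {
            return 0; // before the first event
        }
        int idx = (int) cp.getValue()[0];
        long value = cp.getValue()[1];
        while (idx < times.length && times[idx] <= t) { // "go back to read the trace"
            value += deltas[idx++];
            lastReplayCount++;
        }
        return value;
    }

    int checkpointCount() {
        return checkpoints.size();
    }
}
```

With ten events at times 1 to 10, each adding 1, and k = 4, only three checkpoints are stored (at times 1, 5 and 9); a query at time 7 starts from the checkpoint at time 5 and replays three events to return 7.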

State System Operations

TmfStateSystemOperations is a utility class of static methods that implements additional statistical operations that can be performed on attributes of the state system.

These operations require the attribute to hold numerical values (int, long or double).

The speed of these operations can be greatly improved for large data sets if the attribute was inserted in the state system as a mipmap attribute. Refer to the Mipmap feature section.

queryRangeMax()

This method returns the maximum numerical value of an attribute in the specified time range. The attribute must be of type int, long or double. Null values are ignored. The returned value will be of the same state value type as the base attribute, or a null value if there is no state interval stored in the given time range.

queryRangeMin()

This method returns the minimum numerical value of an attribute in the specified time range. The attribute must be of type int, long or double. Null values are ignored. The returned value will be of the same state value type as the base attribute, or a null value if there is no state interval stored in the given time range.

queryRangeAverage()

This method returns the average numerical value of an attribute in the specified time range. The attribute must be of type int, long or double. Each state interval value is weighted according to time. Null values are counted as zero. The returned value will be a double primitive, which will be zero if there is no state interval stored in the given time range.
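The semantics of the three operations can be pinned down with a toy sketch over one attribute's intervals. ToyRangeOps and NumInterval are hypothetical; for simplicity every value is a Double (the real min/max methods keep the attribute's own type), and intervals are treated as half-open [start, end) when computing the time weights. As described above, null values are skipped by min/max and count as zero in the average.

```java
import java.util.List;

/** Hypothetical interval of one numeric attribute; a null value means "null value". */
final class NumInterval {
    final long start;
    final long end;
    final Double value;

    NumInterval(long start, long end, Double value) {
        this.start = start;
        this.end = end;
        this.value = value;
    }
}

/** Toy versions of the range operations (not TmfStateSystemOperations itself). */
final class ToyRangeOps {
    private ToyRangeOps() {}

    /** Does [start, end) intersect the closed query range [t1, t2]? */
    private static boolean overlaps(NumInterval i, long t1, long t2) {
        return i.start <= t2 && i.end > t1;
    }

    /** Largest non-null value in [t1, t2], or null if there is none. */
    static Double queryRangeMax(List<NumInterval> intervals, long t1, long t2) {
        Double max = null;
        for (NumInterval i : intervals) {
            if (i.value != null && overlaps(i, t1, t2) && (max == null || i.value > max)) {
                max = i.value;
            }
        }
        return max;
    }

    /** Smallest non-null value in [t1, t2], or null if there is none. */
    static Double queryRangeMin(List<NumInterval> intervals, long t1, long t2) {
        Double min = null;
        for (NumInterval i : intervals) {
            if (i.value != null && overlaps(i, t1, t2) && (min == null || i.value < min)) {
                min = i.value;
            }
        }
        return min;
    }

    /** Time-weighted average over [t1, t2]; null values count as zero. */
    static double queryRangeAverage(List<NumInterval> intervals, long t1, long t2) {
        if (t2 <= t1) {
            return 0;
        }
        double weightedSum = 0;
        for (NumInterval i : intervals) {
            long overlap = Math.min(i.end, t2) - Math.max(i.start, t1);
            if (overlap > 0 && i.value != null) {
                weightedSum += i.value * overlap;
            }
        }
        return weightedSum / (t2 - t1);
    }
}
```

For example, the intervals [0, 10) = 2.0, [10, 20) = null and [20, 40) = 5.0 give, over [0, 40], a maximum of 5.0, a minimum of 2.0 and an average of (2.0 * 10 + 0 * 10 + 5.0 * 20) / 40 = 3.0.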

Code example

Here is a small example of code that uses the state system. For this example, let's assume we want to track the state of all the CPUs in an LTTng kernel trace. To do so, we will watch for the "sched_switch" event in the state provider, and will update an attribute indicating whether the associated CPU should be set to "running" or "idle".

We will use an attribute tree that looks like this:

CPUs
|--0
|  |--Status
|--1
|  |--Status
|--2
|  |--Status
...

The second-level attributes will be named from the information available in the trace events. Only the "Status" attributes will carry a state value (this means we could have just used "1", "2", "3",... directly, but we'll do it in a tree for the example's sake).

Also, we will use integer state values to represent "running" or "idle", instead of saving the strings that would get repeated every time. This will help in reducing the size of the history file.

First we will define a state provider in MyStateProvider. Then, assuming we have already implemented a custom trace type extending CtfTmfTrace, we will add a section to it to make it build a state system using the provider we defined earlier. Finally, we will show some example code that can query the state system, which would normally go in a view or analysis module.

State Provider

import org.eclipse.linuxtools.tmf.core.ctfadaptor.CtfTmfEvent;
import org.eclipse.linuxtools.tmf.core.event.ITmfEvent;
import org.eclipse.linuxtools.tmf.core.exceptions.AttributeNotFoundException;
import org.eclipse.linuxtools.tmf.core.exceptions.StateValueTypeException;
import org.eclipse.linuxtools.tmf.core.exceptions.TimeRangeException;
import org.eclipse.linuxtools.tmf.core.statesystem.AbstractTmfStateProvider;
import org.eclipse.linuxtools.tmf.core.statevalue.ITmfStateValue;
import org.eclipse.linuxtools.tmf.core.statevalue.TmfStateValue;
import org.eclipse.linuxtools.tmf.core.trace.ITmfTrace;

/**
 * Example state system provider.
 *
 * @author Alexandre Montplaisir
 */
public class MyStateProvider extends AbstractTmfStateProvider {

    /** State value representing the idle state */
    public static final ITmfStateValue IDLE = TmfStateValue.newValueInt(0);

    /** State value representing the running state */
    public static final ITmfStateValue RUNNING = TmfStateValue.newValueInt(1);

    /**
     * Constructor
     *
     * @param trace
     *            The trace to which this state provider is associated
     */
    public MyStateProvider(ITmfTrace trace) {
        super(trace, CtfTmfEvent.class, "Example"); //$NON-NLS-1$
        /*
         * The third parameter here is not important, it's only used to name a
         * thread internally.
         */
    }

    @Override
    public int getVersion() {
        /*
         * If the version of an existing file doesn't match the version supplied
         * in the provider, a rebuild of the history will be forced.
         */
        return 1;
    }

    @Override
    public MyStateProvider getNewInstance() {
        return new MyStateProvider(getTrace());
    }

    @Override
    protected void eventHandle(ITmfEvent ev) {
        /*
         * AbstractTmfStateProvider should have already checked for the correct
         * class type.
         */
        CtfTmfEvent event = (CtfTmfEvent) ev;
        final long ts = event.getTimestamp().getValue();

        try {
            if (event.getEventName().equals("sched_switch")) {
                /*
                 * Only read "next_tid" for sched_switch events, since other
                 * event types do not carry this field.
                 */
                int nextTid = ((Long) event.getContent().getField("next_tid").getValue()).intValue();
                int quark = ss.getQuarkAbsoluteAndAdd("CPUs", String.valueOf(event.getCPU()), "Status");
                ITmfStateValue value = (nextTid > 0) ? RUNNING : IDLE;
                ss.modifyAttribute(ts, value, quark);
            }

        } catch (TimeRangeException e) {
            /*
             * This should not happen, since the timestamp comes from a trace
             * event.
             */
            throw new IllegalStateException(e);
        } catch (AttributeNotFoundException e) {
            /*
             * This should not happen either, since we're only accessing a quark
             * we just created.
             */
            throw new IllegalStateException(e);
        } catch (StateValueTypeException e) {
            /*
             * This wouldn't happen here, but could potentially happen if we try
             * to insert mismatching state value types in the same attribute.
             */
            e.printStackTrace();
        }
    }
}

Trace type definition

import java.io.File;

import org.eclipse.core.resources.IProject;
import org.eclipse.core.runtime.IStatus;
import org.eclipse.core.runtime.Status;
import org.eclipse.linuxtools.tmf.core.ctfadaptor.CtfTmfTrace;
import org.eclipse.linuxtools.tmf.core.exceptions.TmfTraceException;
import org.eclipse.linuxtools.tmf.core.statesystem.ITmfStateProvider;
import org.eclipse.linuxtools.tmf.core.statesystem.ITmfStateSystem;
import org.eclipse.linuxtools.tmf.core.statesystem.TmfStateSystemFactory;
import org.eclipse.linuxtools.tmf.core.trace.TmfTraceManager;

/**
 * Example of a custom trace type using a custom state provider.
 *
 * @author Alexandre Montplaisir
 */
public class MyTraceType extends CtfTmfTrace {

    /** The file name of the history file */
    public static final String HISTORY_FILE_NAME = "mystatefile.ht";

    /** ID of the state system we will build */
    public static final String STATE_ID = "org.eclipse.linuxtools.lttng2.example";

    /**
     * Default constructor
     */
    public MyTraceType() {
        super();
    }

    @Override
    public IStatus validate(final IProject project, final String path) {
        /*
         * Add additional validation code here, and return an IStatus.ERROR if
         * validation fails.
         */
        return Status.OK_STATUS;
    }

    @Override
    protected void buildStateSystem() throws TmfTraceException {
        /* Keep the state systems built by the super-classes */
        super.buildStateSystem();

        /* Build the custom state system for this trace */
        String directory = TmfTraceManager.getSupplementaryFileDir(this);
        final File htFile = new File(directory, HISTORY_FILE_NAME);
        final ITmfStateProvider htInput = new MyStateProvider(this);

        ITmfStateSystem ss = TmfStateSystemFactory.newFullHistory(htFile, htInput, false);

        /* Make the state system visible to views and modules under STATE_ID */
        registerStateSystem(STATE_ID, ss);
    }
}

Query code

    import java.util.List;

    import org.eclipse.linuxtools.tmf.core.exceptions.AttributeNotFoundException;
    import org.eclipse.linuxtools.tmf.core.exceptions.StateSystemDisposedException;
    import org.eclipse.linuxtools.tmf.core.exceptions.TimeRangeException;
    import org.eclipse.linuxtools.tmf.core.interval.ITmfStateInterval;
    import org.eclipse.linuxtools.tmf.core.statesystem.ITmfStateSystem;
    import org.eclipse.linuxtools.tmf.core.statevalue.ITmfStateValue;
    import org.eclipse.linuxtools.tmf.core.trace.ITmfTrace;

    /**
     * Class showing examples of state system queries.
     *
     * @author Alexandre Montplaisir
     */
    public class QueryExample {

        private final ITmfStateSystem ss;

        /**
         * Constructor
         *
         * @param trace
         *            Trace that this "view" will display.
         */
        public QueryExample(ITmfTrace trace) {
            ss = trace.getStateSystems().get(MyTraceType.STATE_ID);
        }

        /**
         * Example method of querying one attribute in the state system.
         *
         * We pass it a CPU and a timestamp, and it returns whether that CPU was
         * executing a process (true/false) at that time.
         *
         * @param cpu
         *            The CPU to check
         * @param timestamp
         *            The timestamp of the query
         * @return True if the CPU was running, false otherwise
         */
        public boolean cpuIsRunning(int cpu, long timestamp) {
            /*
             * Since at this level we have no guarantee on the contents of the
             * state system, it's important to handle the exceptions correctly.
             */
            try {
                int quark = ss.getQuarkAbsolute("CPUs", String.valueOf(cpu), "Status");
                ITmfStateValue value = ss.querySingleState(timestamp, quark).getStateValue();
                if (value.equals(MyStateProvider.RUNNING)) {
                    return true;
                }
            } catch (AttributeNotFoundException e) {
                /*
                 * Handle the case where the attribute does not exist in the state
                 * system (no CPU with this number, etc.)
                 */
                // ...
            } catch (TimeRangeException e) {
                /*
                 * Handle the case where 'timestamp' is outside of the range of the
                 * history.
                 */
                // ...
            } catch (StateSystemDisposedException e) {
                /*
                 * Handle the case where the state system is being disposed. If this
                 * happens, it's normally when shutting down, so the view can just
                 * return immediately and wait it out.
                 */
            }
            return false;
        }

        /**
         * Example method of using a full query.
         *
         * We pass it a timestamp, and it returns how many CPUs were executing a
         * process at that moment.
         *
         * @param timestamp
         *            The target timestamp
         * @return The number of CPUs that were running at that time
         */
        public int getNbRunningCpus(long timestamp) {
            int count = 0;
            try {
                /* Get the list of the quarks we are interested in. */
                List<Integer> quarks = ss.getQuarks("CPUs", "*", "Status");

                /*
                 * Get the full state at our target timestamp (it's better than
                 * doing an arbitrary number of single queries).
                 */
                List<ITmfStateInterval> state = ss.queryFullState(timestamp);

                /* Look at the value of the state for each quark */
                for (Integer quark : quarks) {
                    ITmfStateValue value = state.get(quark).getStateValue();
                    if (value.equals(MyStateProvider.RUNNING)) {
                        count++;
                    }
                }
            } catch (TimeRangeException e) {
                /*
                 * Handle the case where 'timestamp' is outside of the range of the
                 * history.
                 */
                // ...
            } catch (StateSystemDisposedException e) {
                /* Handle the case where the state system is being disposed. */
                // ...
            }
            return count;
        }
    }

Mipmap feature

The mipmap feature allows attributes to be inserted into the state system with additional computations performed to automatically store sub-attributes that can later be used for statistical operations. The mipmap has a resolution which represents the number of state attribute changes that are used to compute the value at the next mipmap level.

The supported mipmap features are: max, min, and average. Each one of these features requires that the base attribute be a numerical state value (int, long or double). An attribute can be mipmapped for one or more of the features at the same time.
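The following plain-Java sketch illustrates the resolution described above. It builds a mipmap over a sequence of numeric values and uses it for range queries. It is a simplification under stated assumptions and not the AbstractTmfMipmapStateProvider code. ToyMipmap is a hypothetical name. Level 0 holds one value per state change, whereas TMF itself works on time-stamped intervals. Each entry of level k + 1 aggregates `resolution` consecutive entries of level k. A range query first consumes the unaligned entries at both edges of the range. It then climbs one level for the aligned middle part, so it reads far fewer entries than a full scan. The identity argument is the neutral element of the operator: Long.MIN_VALUE for max, Long.MAX_VALUE for min, and 0 for a sum.

```java
import java.util.ArrayList;
import java.util.List;
import java.util.function.LongBinaryOperator;

/**
 * Hypothetical mipmap over a sequence of numeric values (not TMF code).
 * Level 0 holds the raw values; entry j of level k + 1 aggregates entries
 * [j * resolution, (j + 1) * resolution) of level k. The last group of a
 * level may be partial.
 */
class ToyMipmap {
    private final List<long[]> levels = new ArrayList<>();
    private final int resolution;
    private final LongBinaryOperator op; // must be associative: max, min, sum
    private final long identity;         // neutral element of op

    ToyMipmap(long[] values, int resolution, LongBinaryOperator op, long identity) {
        if (resolution < 2) {
            throw new IllegalArgumentException("resolution must be >= 2");
        }
        this.resolution = resolution;
        this.op = op;
        this.identity = identity;
        long[] current = values.clone();
        levels.add(current);
        // Build coarser levels until a single entry summarizes everything.
        while (current.length > 1) {
            long[] next = new long[(current.length + resolution - 1) / resolution];
            for (int j = 0; j < next.length; j++) {
                long acc = identity;
                int end = Math.min((j + 1) * resolution, current.length);
                for (int i = j * resolution; i < end; i++) {
                    acc = op.applyAsLong(acc, current[i]);
                }
                next[j] = acc;
            }
            levels.add(next);
            current = next;
        }
    }

    /** Number of levels, including the raw level 0. */
    int levelCount() {
        return levels.size();
    }

    /** Aggregates raw values in [from, to); assumes 0 <= from <= to <= length. */
    long query(int from, int to) {
        long acc = identity;
        int lo = from;
        int hi = to;
        for (int level = 0; lo < hi; level++) {
            long[] vals = levels.get(level);
            if (level == levels.size() - 1) {
                // Top level: no coarser data left, scan what remains.
                for (int i = lo; i < hi; i++) {
                    acc = op.applyAsLong(acc, vals[i]);
                }
                break;
            }
            // Consume unaligned entries at both edges at this level.
            while (lo < hi && lo % resolution != 0) {
                acc = op.applyAsLong(acc, vals[lo++]);
            }
            while (lo < hi && hi % resolution != 0) {
                acc = op.applyAsLong(acc, vals[--hi]);
            }
            // The aligned middle [lo, hi) maps to whole groups one level up.
            lo /= resolution;
            hi /= resolution;
        }
        return acc;
    }

    public static void main(String[] args) {
        long[] values = {3, 1, 4, 1, 5, 9, 2, 6, 5, 3};
        ToyMipmap max = new ToyMipmap(values, 2, Math::max, Long.MIN_VALUE);
        System.out.println(max.query(2, 5)); // 5 (max of 4, 1, 5)
    }
}
```

Max and min plug in directly as the operator. An average is not associative when computed over sub-averages, so in this sketch it would be obtained by mipmapping the sum with Long::sum and dividing the result by the number of values in the range.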

To use a mipmapped attribute in queries, call the corresponding static methods of the TmfStateSystemOperations class.

AbstractTmfMipmapStateProvider

AbstractTmfMipmapStateProvider is an abstract provider class that computes mipmap features for specific attributes and stores them in a mipmap tree of sub-attributes. It extends AbstractTmfStateProvider.

If a provider wants to add mipmapped attributes to its tree, it must extend AbstractTmfMipmapStateProvider and call modifyMipmapAttribute() in the event handler, specifying one or more mipmap features to compute. The attribute tree then has the following structure:

    |- <attribute>
    |   |- <mipmapFeature> (min/max/avg)
    |   |   |- 1
    |   |   |- 2
    |   |   |- 3
    |   |   ...
    |   |   |- n (maximum mipmap level)
    |   |- <mipmapFeature> (min/max/avg)
    |   |   |- 1
    |   |   |- 2
    |   |   |- 3
    |   |   ...
    |   |   |- n (maximum mipmap level)
    |   ...

UML2 Sequence Diagram Framework

The purpose of the UML2 Sequence Diagram Framework of TMF is to support the generation of UML2 sequence diagrams. It provides:

- UML2 sequence diagram drawing capabilities (i.e. lifelines, messages, activations, object creation and deletion)

- A generic, reusable Sequence Diagram View

- An Eclipse extension point for the creation of sequence diagrams

- Callback hooks for searching and filtering within the Sequence Diagram View

- Scalability

The following chapters describe the Sequence Diagram Framework as well as a reference implementation and its usage.

TMF UML2 Sequence Diagram Extensions

In the UML2 Sequence Diagram Framework, an Eclipse extension point is defined so that other plug-ins can contribute code to create sequence diagrams.

Identifier: org.eclipse.linuxtools.tmf.ui.uml2SDLoader

Since: 1.0

Description: This extension point aims to list and connect any UML2 Sequence Diagram loader.

Configuration Markup:

    <!ELEMENT extension (uml2SDLoader)+>
    <!ATTLIST extension
      point CDATA #REQUIRED
      id    CDATA #IMPLIED
      name  CDATA #IMPLIED
    >

- point - A fully qualified identifier of the target extension point.

- id - An optional identifier of the extension instance.

- name - An optional name of the extension instance.

    <!ELEMENT uml2SDLoader EMPTY>
    <!ATTLIST uml2SDLoader
      id      CDATA #REQUIRED
      name    CDATA #REQUIRED
      class   CDATA #REQUIRED
      view    CDATA #REQUIRED
      default (true | false)
    >

- id - A unique identifier for this uml2SDLoader. This is not mandatory as long as the id attribute cannot be retrieved by the provider plug-in. The class attribute is the one on which the underlying algorithm relies.

- name - The name of the extension instance.

- class - The implementation of this UML2 SD viewer loader. The class must implement org.eclipse.linuxtools.tmf.ui.views.uml2sd.load.IUml2SDLoader.

- view - The ID of the view that this loader aims to populate: either org.eclipse.linuxtools.tmf.ui.views.uml2sd.SDView itself or an extension of org.eclipse.linuxtools.tmf.ui.views.uml2sd.SDView.

- default - Set to true to make this loader the default one for the view. If several loaders are marked as default, the first one in the extension list is used.
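The rule for the default attribute can be summarized in a few lines of plain Java. This is a hypothetical model for illustration only and does not reflect how the TMF UI plug-in is implemented. LoaderDescriptor is a simplified stand-in for a uml2SDLoader element, and the loader IDs in main() are made up. Java streams preserve the encounter order of a List, so findFirst() returns the first matching loader in the order in which the extensions are listed.

```java
import java.util.Arrays;
import java.util.List;
import java.util.Optional;

/** Hypothetical model of default-loader selection for a view; not TMF code. */
class LoaderSelection {

    /** Simplified stand-in for one uml2SDLoader element of plugin.xml. */
    static final class LoaderDescriptor {
        final String id;
        final String viewId;
        final boolean isDefault;

        LoaderDescriptor(String id, String viewId, boolean isDefault) {
            this.id = id;
            this.viewId = viewId;
            this.isDefault = isDefault;
        }
    }

    /**
     * Returns the first loader, in extension-list order, that targets the
     * given view and is marked default. Empty if there is none, in which case
     * the caller must pick a loader by other means (e.g. an action).
     */
    static Optional<LoaderDescriptor> defaultLoaderFor(String viewId, List<LoaderDescriptor> loaders) {
        return loaders.stream()
                .filter(d -> d.viewId.equals(viewId) && d.isDefault)
                .findFirst();
    }

    public static void main(String[] args) {
        String sdView = "org.eclipse.linuxtools.tmf.ui.views.uml2sd.SDView";
        List<LoaderDescriptor> loaders = Arrays.asList(
                new LoaderDescriptor("example.loaderA", sdView, false),
                new LoaderDescriptor("example.loaderB", sdView, true),
                new LoaderDescriptor("example.loaderC", sdView, true));
        // Two loaders are marked default; the first listed one wins.
        System.out.println(defaultLoaderFor(sdView, loaders).map(d -> d.id).orElse("none"));
    }
}
```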

Management of the Extension Point

The TMF UI plug-in is responsible for evaluating each contribution to the extension point.

With this extension point, a loader class is associated with a Sequence Diagram View. Multiple loaders can be associated with a single Sequence Diagram View. However, additional means have to be implemented to specify which loader should be used when opening the view, for example an Eclipse action or command. This additional code is not necessary if only one loader is associated with a given Sequence Diagram View and this loader has the attribute "default" set to "true" (see also Using one Sequence Diagram View with Multiple Loaders).

Sequence Diagram View

For this extension point, a Sequence Diagram View has to be defined as well. The Sequence Diagram View class implementation is provided by the plug-in org.eclipse.linuxtools.tmf.ui (org.eclipse.linuxtools.tmf.ui.views.uml2sd.SDView) and can be used as is or subclassed. In either case, a view extension has to be added to the plugin.xml.

Supported Widgets

The loader class provides a frame containing all the UML2 widgets to be displayed. The following widgets exist:

- Lifeline

- Activation

- Synchronous Message

- Asynchronous Message

- Synchronous Message Return

- Asynchronous Message Return

- Stop

For a lifeline, a category can be defined. The lifeline category defines icons, which are displayed in the lifeline header.

Zooming

The Sequence Diagram View allows the user to zoom in, zoom out and reset the zoom factor.

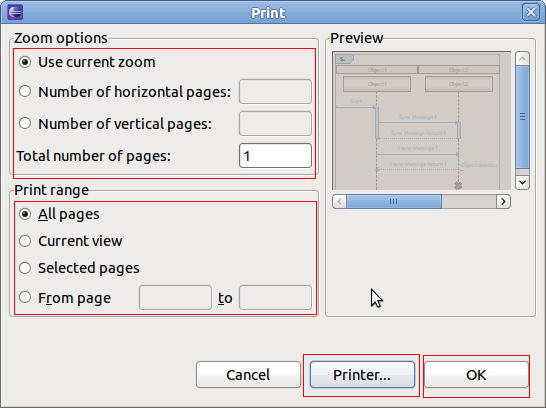

Printing

It is possible to print the whole sequence diagram as well as part of it.

Key Bindings

- SHIFT+ALT+ARROW-DOWN - to scroll down within the sequence diagram one view page at a time

- SHIFT+ALT+ARROW-UP - to scroll up within the sequence diagram one view page at a time

- SHIFT+ALT+ARROW-RIGHT - to scroll right within the sequence diagram one view page at a time

- SHIFT+ALT+ARROW-LEFT - to scroll left within the sequence diagram one view page at a time

- SHIFT+ALT+ARROW-HOME - to jump to the beginning of the selected message if it is not already visible in the page

- SHIFT+ALT+ARROW-END - to jump to the end of the selected message if it is not already visible in the page

- CTRL+F - to open the find dialog if either the basic or extended find provider is defined (see Using the Find Provider Interface)

- CTRL+P - to open the print dialog

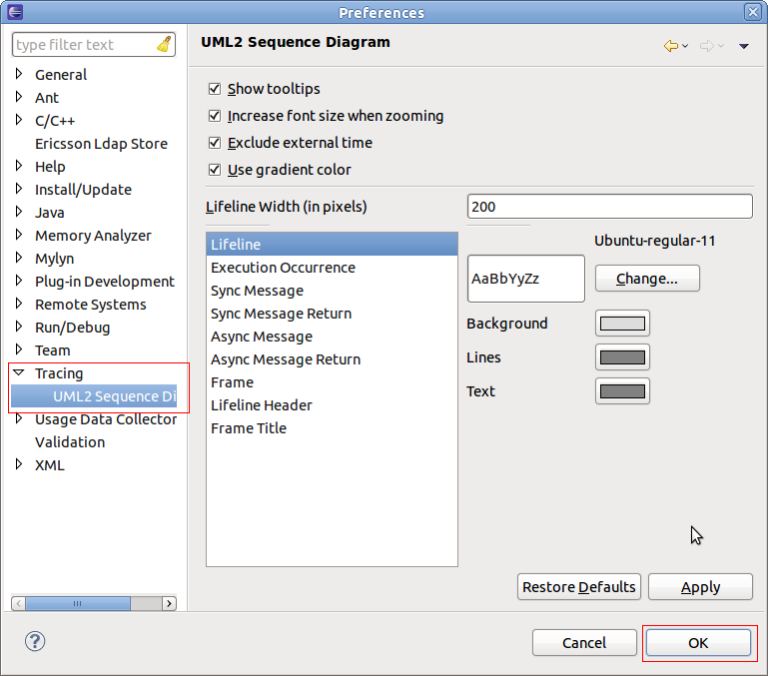

Preferences

The UML2 Sequence Diagram Framework provides preferences to customize the appearance of the Sequence Diagram View. The colors of all widgets and text, as well as the fonts used for the text of all widgets, can be adjusted. Among other settings, the default lifeline width can be changed. To change preferences, select Window->Preferences->Tracing->UML2 Sequence Diagrams. The following preference page will be shown:

After changing the preferences select OK.

Callback hooks

The Sequence Diagram View provides several callback hooks so that extensions can provide application-specific functionality. The following interfaces can be provided:

- Basic find provider or extended find provider - for finding within the sequence diagram

- Basic filter provider and extended filter provider - for filtering within the sequence diagram

- Basic paging provider or advanced paging provider - for scalability reasons, used to limit the number of displayed messages

- Properties provider - to provide properties of selected elements

- Collapse provider - to collapse areas of the sequence diagram
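To illustrate the scalability argument behind the paging providers, here is a hypothetical sketch of the bookkeeping a paging provider might do. Only the messages of the current page, with indices in [pageStart(), pageEnd()), would be handed to the view. ToyPager and its methods are illustrative names only and are not the TMF provider interfaces, which are described in Using the Paging Provider Interface.

```java
/**
 * Hypothetical sketch of paging bookkeeping; not a TMF interface.
 * Only messages in [pageStart(), pageEnd()) of the current page
 * would be loaded into the sequence diagram.
 */
class ToyPager {
    private final int totalMessages;
    private final int pageSize;
    private int page = 0;

    ToyPager(int totalMessages, int pageSize) {
        if (totalMessages < 0 || pageSize <= 0) {
            throw new IllegalArgumentException("invalid message count or page size");
        }
        this.totalMessages = totalMessages;
        this.pageSize = pageSize;
    }

    /** Number of pages; an empty diagram still has one (empty) page. */
    int pagesCount() {
        return Math.max(1, (totalMessages + pageSize - 1) / pageSize);
    }

    boolean hasNextPage() { return page < pagesCount() - 1; }

    boolean hasPrevPage() { return page > 0; }

    void nextPage() { if (hasNextPage()) { page++; } }

    void prevPage() { if (hasPrevPage()) { page--; } }

    int currentPage() { return page; }

    /** Index of the first message on the current page. */
    int pageStart() { return page * pageSize; }

    /** Index one past the last message on the current page. */
    int pageEnd() { return Math.min(pageStart() + pageSize, totalMessages); }

    public static void main(String[] args) {
        ToyPager pager = new ToyPager(2500, 1000);
        pager.nextPage();
        pager.nextPage();
        System.out.println(pager.pageStart() + ".." + pager.pageEnd()); // 2000..2500
    }
}
```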

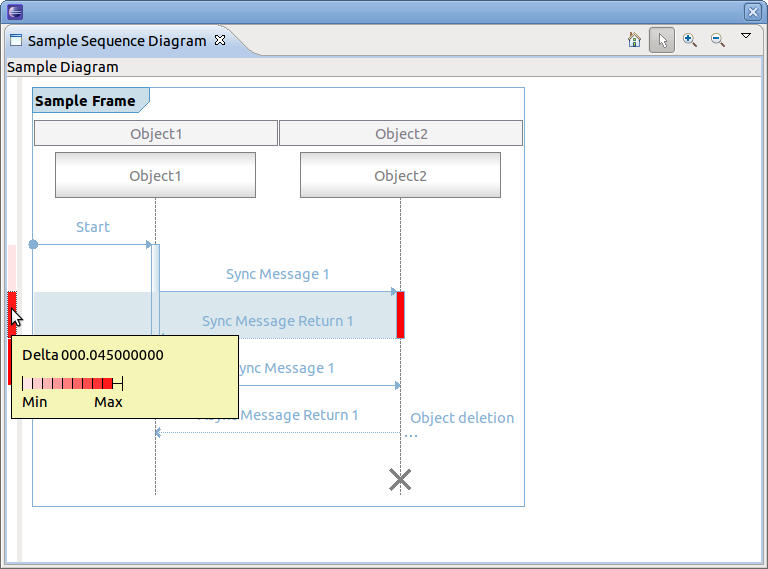

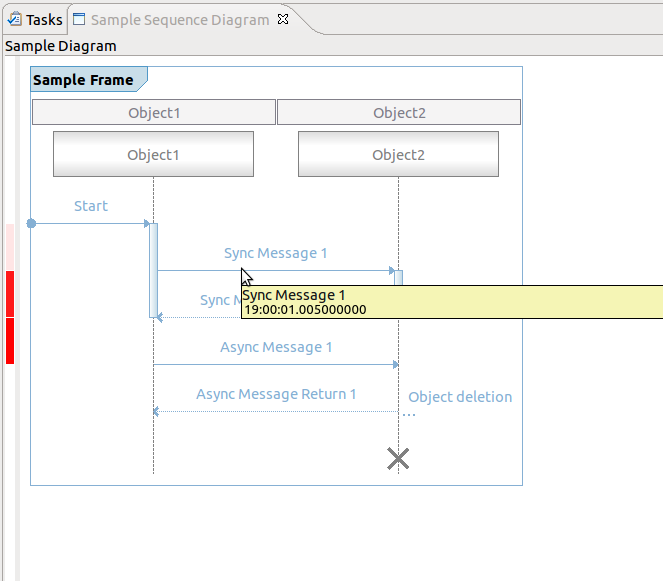

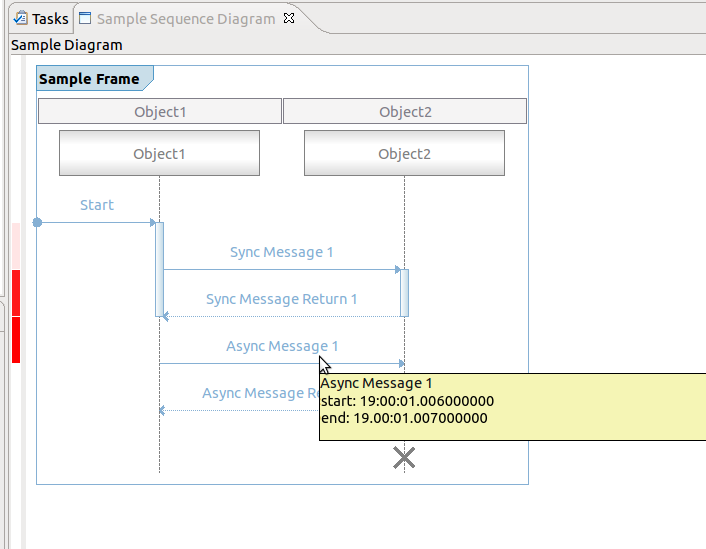

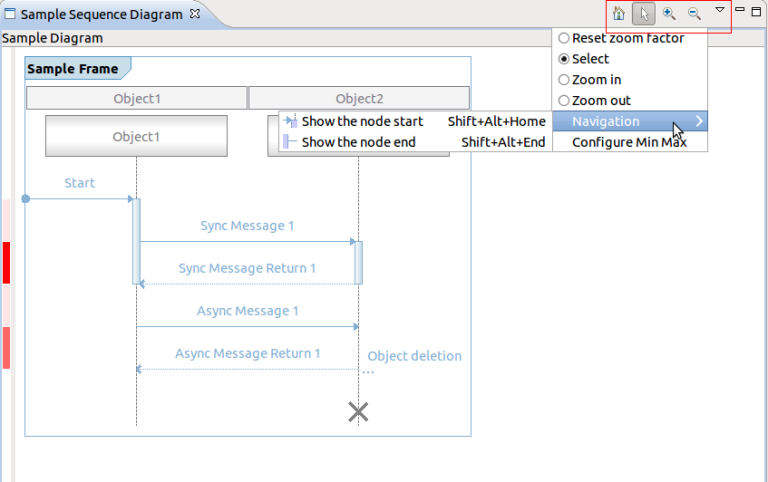

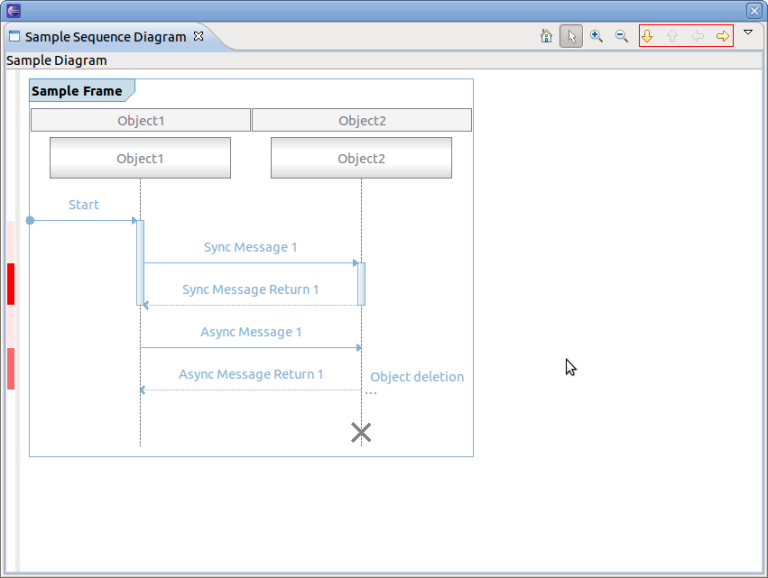

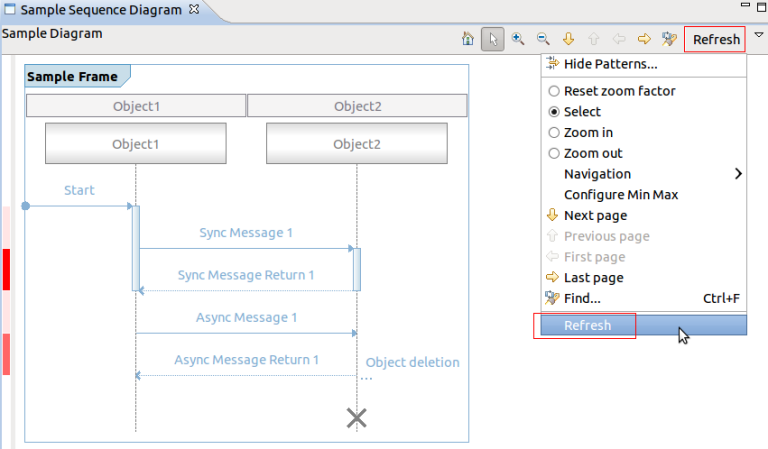

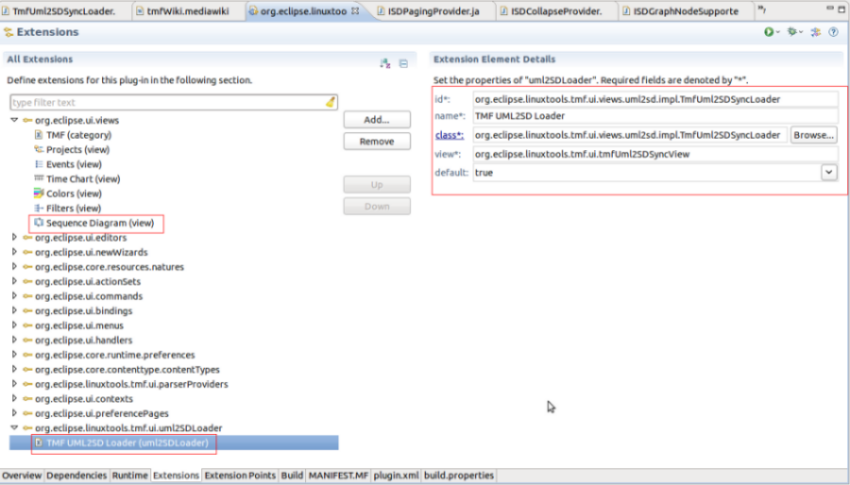

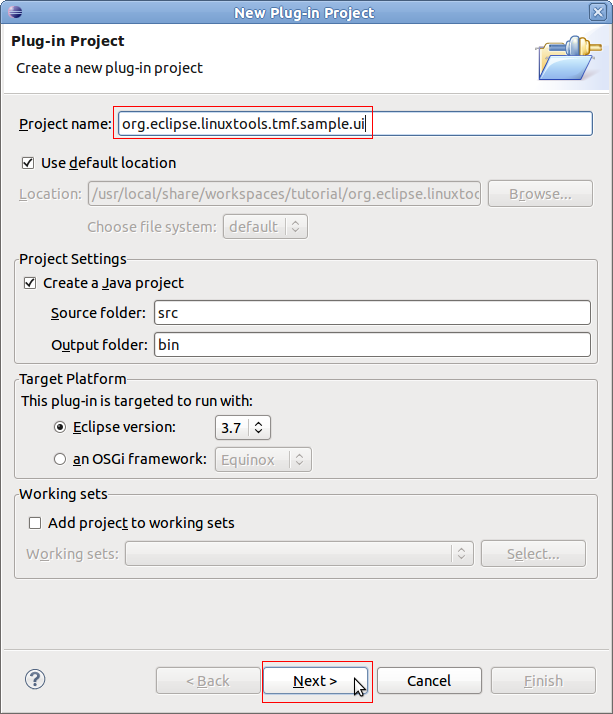

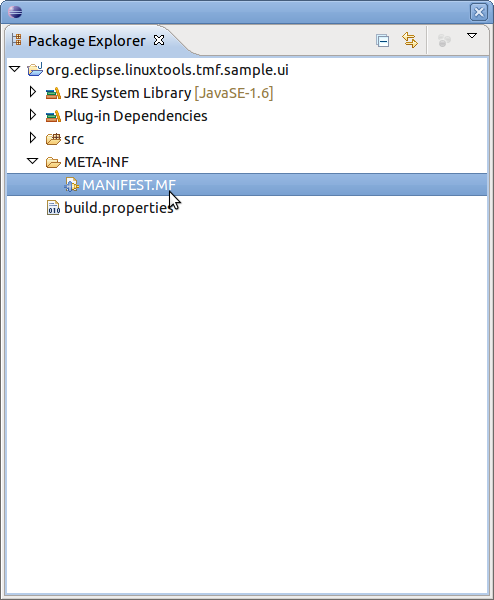

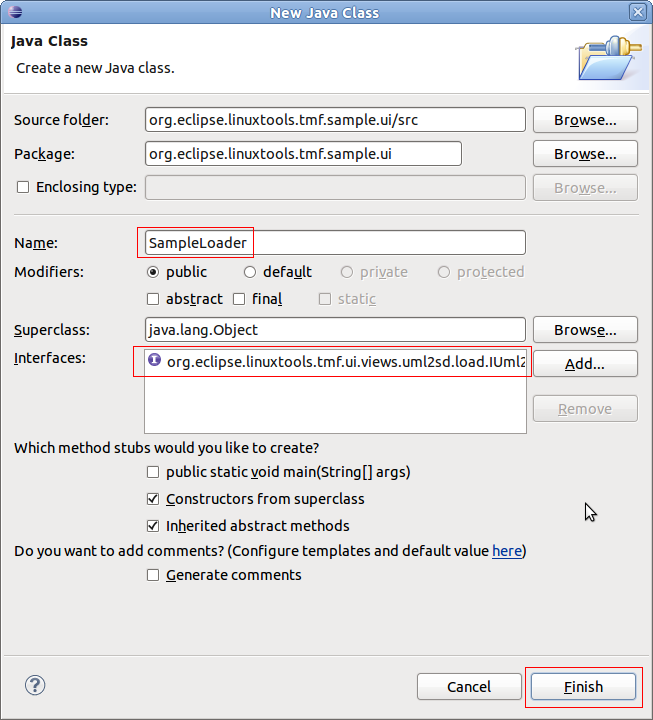

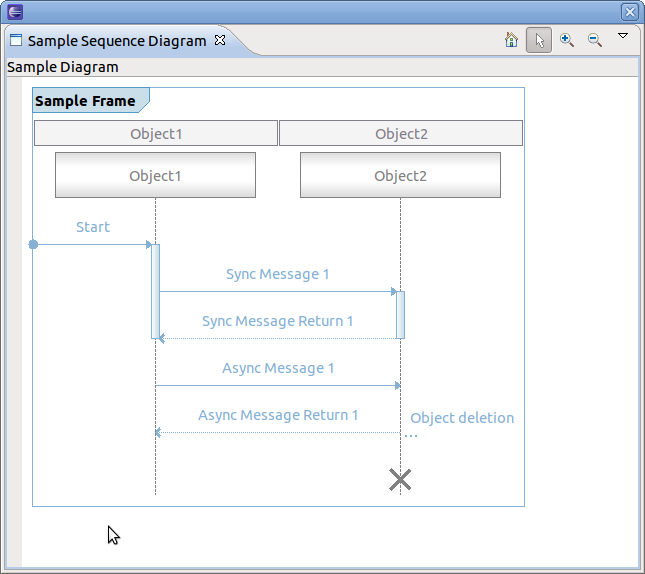

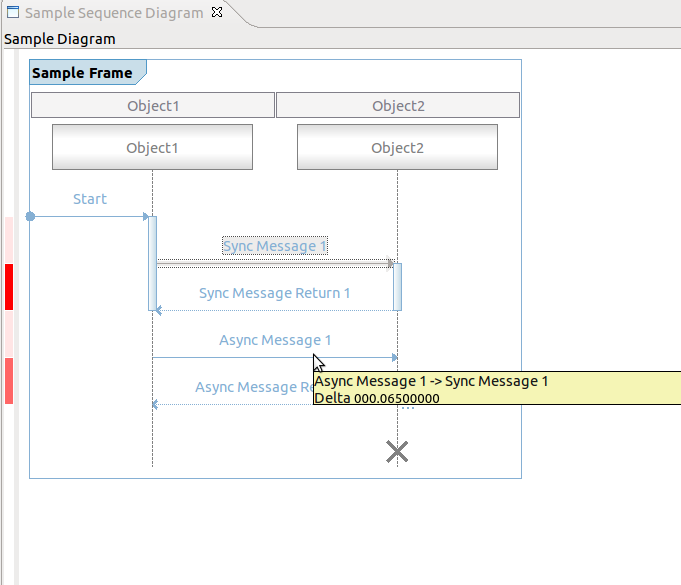

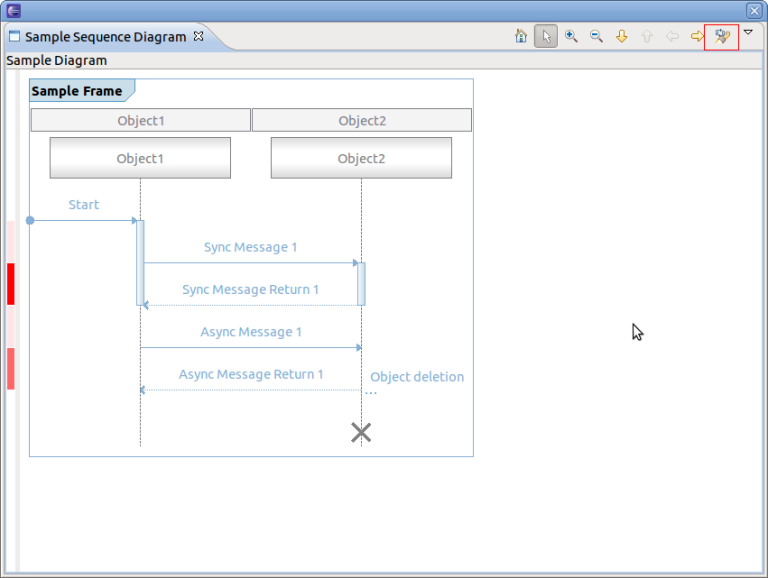

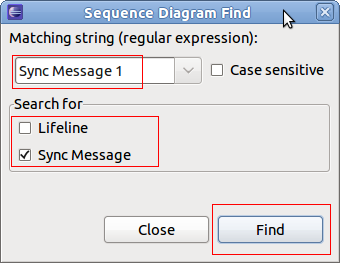

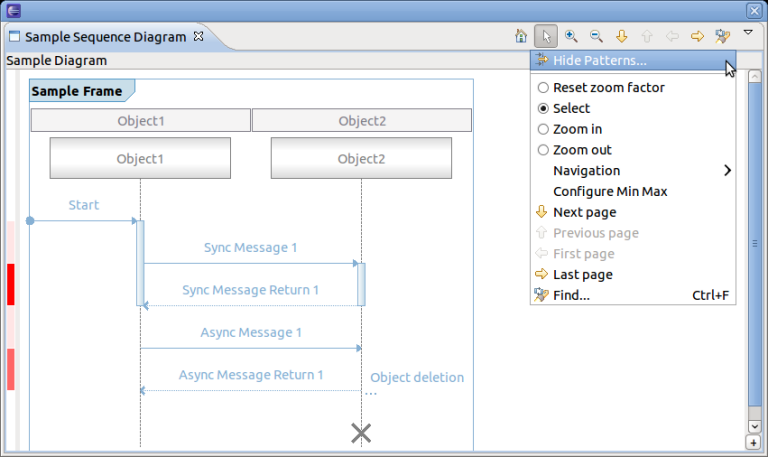

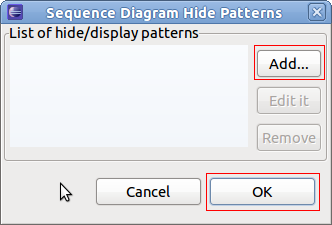

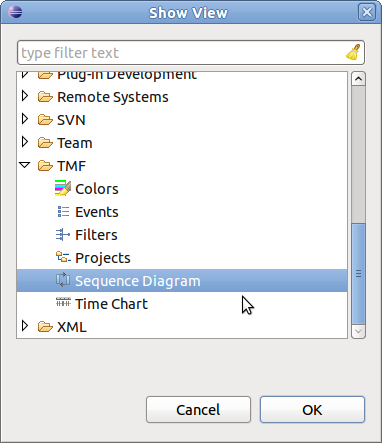

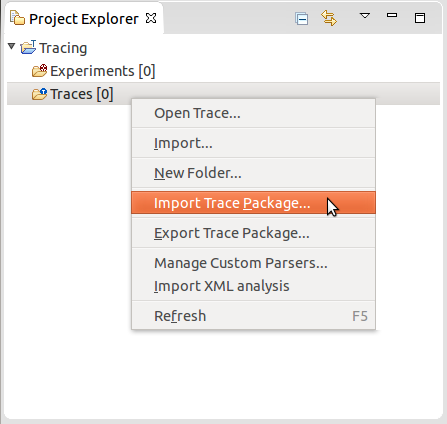

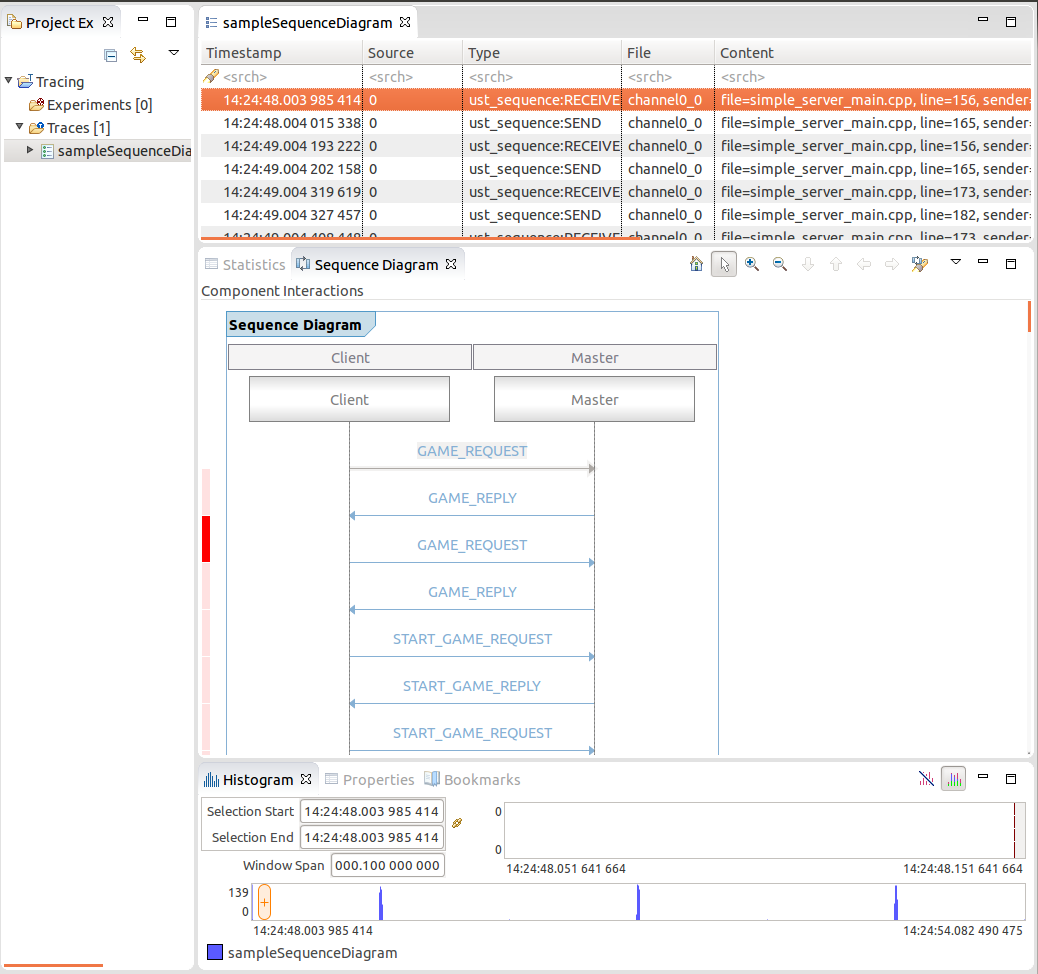

Tutorial