TPTP DMS

Requirements Overview

High Level Structure

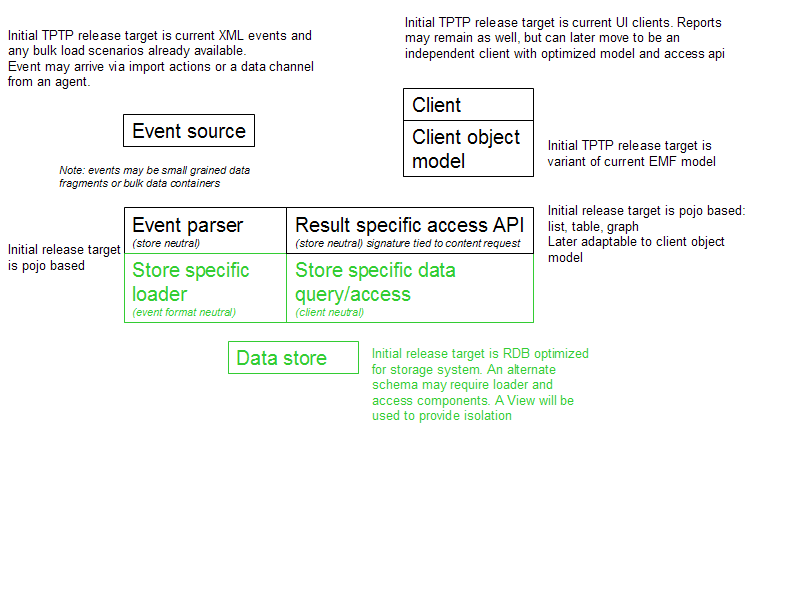

This image shows a very high-level rendering of the runtime components involved in the data flow through the data management services (DMS) of TPTP.

The following gives an overview of each component in this block architecture.

Event Source

An event source is nothing more than a data provider to the loader. In TPTP this is often thought to be an agent. Although an agent may be where an event originated, the event source in the context of this discussion is the input stream to the loader.

There is an implicit contract between the event source and the loader: the data provided must be consumable by the loader. However, because the transport layers available in TPTP are nothing but a transport service, this contract is delegated to the data source that pushes data into the transport.

TPTP maintains a set of specifications for the event formats it can create, transport and consume. These events will continue to be extended in TPTP as the need arises. Prior to 4.4, events were small and incremental. In 4.4, bulk events will be introduced. In effect these already exist in the form of a "large" XML stream of already known events, wrapped in some sort of envelope. This is how the current trace file import works. In 4.4 we will make this more scalable from a transport and loader perspective.

The design is not complete, but it may include the option of simply passing a URI to a batch resource of a known format. Also under consideration is embedding a CSV stream. This is not really an event source specific issue, but it is mentioned here to prevent any assumption about the structure or format of the data that may arrive through the stream.

Event Parser

The event loader consists of a few simple processing steps. The first is the event parser; the second is the store specific loader. The contract between these two components is a strongly typed data object. In TPTP this object is a simple Java object (POJO). The binding between these two steps is directly controlled by the object created. Once the parser has populated the members of the object, it will invoke the "add yourself" method, which will trigger the loader behaviour. This may migrate to an asynchronous event model in the future, but in 4.4 parsing and loading will be synchronous operations.

The role of the event parser is to instantiate the object(s) needed by the loader and to set the members as specified by the input stream. All default content must be provided by the POJO. In the current TPTP implementation, the specific event parser is selected based on the initial part of the event stream. At this time, events are formatted as XML, so the element name is the key used to select the parser. Alternate parsers can easily be registered for XML formatted events, and with some additional work the same could be done for other stream formats.

This framework allows for a complete decoupling of the input stream format from the loader by using the intermediate POJO.
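The parse-then-load flow described above can be sketched roughly as follows. This is a minimal illustration, not the TPTP implementation: the names `EventLoader`, `EventParser`, `ParserRegistry`, `MethodEntryEvent` and the attributes `name` and `threadId` are all hypothetical. It assumes one XML fragment per event, selects the parser by the root element name, lets the POJO supply defaults, and calls "add yourself" synchronously.

```java
import java.io.StringReader;
import java.util.HashMap;
import java.util.Map;
import javax.xml.parsers.DocumentBuilderFactory;
import org.w3c.dom.Element;
import org.xml.sax.InputSource;

// Hypothetical loader contract: receives fully populated event objects.
interface EventLoader {
    void load(Object event);
}

// Hypothetical POJO for one event type; defaults live in the POJO, not the parser.
class MethodEntryEvent {
    String methodName = "unknown";
    long threadId = 0L;
    private final EventLoader loader;

    MethodEntryEvent(EventLoader loader) {
        this.loader = loader;
    }

    // The "add yourself" step: hands the populated object to the loader synchronously.
    void addYourself() {
        loader.load(this);
    }
}

// Hypothetical parser contract: one parser per XML element name.
interface EventParser {
    void parse(Element element, EventLoader loader);
}

class MethodEntryParser implements EventParser {
    public void parse(Element element, EventLoader loader) {
        MethodEntryEvent event = new MethodEntryEvent(loader);
        // Only overwrite the POJO defaults when the stream supplies a value.
        if (element.hasAttribute("name")) {
            event.methodName = element.getAttribute("name");
        }
        if (element.hasAttribute("threadId")) {
            event.threadId = Long.parseLong(element.getAttribute("threadId"));
        }
        event.addYourself();
    }
}

class ParserRegistry {
    private final Map<String, EventParser> parsers = new HashMap<>();

    void register(String elementName, EventParser parser) {
        parsers.put(elementName, parser);
    }

    // Selects the parser from the root element name of a single XML event fragment.
    void dispatch(String xml, EventLoader loader) {
        Element root;
        try {
            root = DocumentBuilderFactory.newInstance().newDocumentBuilder()
                    .parse(new InputSource(new StringReader(xml))).getDocumentElement();
        } catch (Exception ex) {
            throw new IllegalArgumentException("Malformed event: " + xml, ex);
        }
        EventParser parser = parsers.get(root.getTagName());
        if (parser == null) {
            throw new IllegalArgumentException("No parser registered for " + root.getTagName());
        }
        parser.parse(root, loader);
    }
}
```

Because the loader only ever sees the populated object, a parser for a different stream format could produce the same `MethodEntryEvent` without any change on the loader side.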

Store specific loader

The store specific loader takes an input POJO and does whatever is needed to process and, in most cases, persist it. The historical TPTP implementation instantiated an EMF model and deferred persistence to the EMF resource serialization. Although this is still of interest for quick in-memory models, by default it does not scale well to large volumes of related data.

Going forward, the loader is clearly bound to the storage system and should be optimized for the data store of choice. This implies that the loader needs to be registered independently of the event parser implementation, but still needs to be coupled to it at run time. These registrations will be configurable via plug-in extensions, a configuration file or dynamically. The "add yourself" implementation of the POJO will deal with this aspect of the configuration. This approach will maintain compatibility for current extenders of the loader infrastructure.
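One way to picture this run-time coupling is a registry that maps an event type to its store specific loader, with the "add yourself" method performing the lookup. The sketch below is an assumption for illustration only: `StoreLoader`, `LoaderRegistry` and `ThreadStartEvent` are hypothetical names, and the registry is filled programmatically here, where a real system might populate it from plug-in extensions or a configuration file.

```java
import java.util.HashMap;
import java.util.Map;

// Hypothetical store specific loader contract, typed by the event it accepts.
interface StoreLoader<T> {
    void load(T event);
}

// Hypothetical registry that couples event types to loaders at run time.
final class LoaderRegistry {
    private static final Map<Class<?>, StoreLoader<?>> LOADERS = new HashMap<>();

    private LoaderRegistry() {
    }

    static <T> void register(Class<T> eventType, StoreLoader<? super T> loader) {
        LOADERS.put(eventType, loader);
    }

    @SuppressWarnings("unchecked")
    static <T> StoreLoader<? super T> lookup(Class<T> eventType) {
        StoreLoader<?> loader = LOADERS.get(eventType);
        if (loader == null) {
            throw new IllegalStateException("No loader registered for " + eventType.getName());
        }
        // Safe because register() only accepts loaders compatible with the key type.
        return (StoreLoader<? super T>) loader;
    }
}

// Hypothetical event POJO; the parser never references a concrete loader.
class ThreadStartEvent {
    long threadId;
    String threadName = "";

    // "Add yourself": resolve whichever loader is currently configured and delegate to it.
    void addYourself() {
        LoaderRegistry.lookup(ThreadStartEvent.class).load(this);
    }
}
```

With this arrangement, swapping an in-memory loader for a relational one is a matter of registering a different `StoreLoader`; the parser and the POJO's fields stay unchanged.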

Data store

The data store is just that: a service that will persist and retrieve data on demand and manage a consistent state of that data. An example data store is EMF, which will manage an in-memory data representation and, in the case of TPTP, will also serialize the data into a zipped XML serialization. This format will in fact be very similar, if not identical, to the structures historically used in TPTP. A more scalable example will be a relational database. TPTP will provide a relational database implementation for its models. This includes a set of DDL and contracts of behavior with the store specific loaders and the store specific query system. This implementation is intended to be portable to various RDB implementations. However, as long as the contracts are maintained, alternate schemas can be used that may be more optimal in certain configurations.

The loader contract includes a specific schema for storage as well as a consistent state or context for the loaders to operate in. In the case of EMF this is the object graph itself. Because the graph is considered to be entirely in memory, there is no support for commit scopes or data stability beyond the basic synchronization locking done in the object graph itself.

In the case of the relational store, this is the RDB schema. Where possible, updatable views will be provided to isolate the actual tables from insert and update actions. Views will be provided for all supported read patterns. This will allow for some schema evolution and implementation optimizations. It is up to the loader to declare and use commit scopes.
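A loader-declared commit scope against such a view might look like the following sketch, which uses only standard JDBC calls. The view name `method_entry_view`, its columns, and the classes `MethodEntryRow` and `RelationalBatchLoader` are hypothetical; the actual schema is whatever the TPTP DDL defines.

```java
import java.sql.Connection;
import java.sql.PreparedStatement;
import java.sql.SQLException;
import java.util.List;

// Hypothetical row type carrying the values of one event.
class MethodEntryRow {
    final long threadId;
    final String methodName;

    MethodEntryRow(long threadId, String methodName) {
        this.threadId = threadId;
        this.methodName = methodName;
    }
}

class RelationalBatchLoader {
    // Hypothetical updatable view; the loader never names the underlying tables.
    private static final String INSERT_SQL =
            "INSERT INTO method_entry_view (thread_id, method_name) VALUES (?, ?)";

    // Loads one batch inside a single commit scope: all rows commit together or none do.
    static void loadBatch(Connection connection, List<MethodEntryRow> rows) throws SQLException {
        boolean previousAutoCommit = connection.getAutoCommit();
        connection.setAutoCommit(false); // open the commit scope
        try (PreparedStatement insert = connection.prepareStatement(INSERT_SQL)) {
            for (MethodEntryRow row : rows) {
                insert.setLong(1, row.threadId);
                insert.setString(2, row.methodName);
                insert.addBatch();
            }
            insert.executeBatch();
            connection.commit(); // close the scope on success
        } catch (SQLException ex) {
            connection.rollback(); // discard the partial batch on failure
            throw ex;
        } finally {
            connection.setAutoCommit(previousAutoCommit);
        }
    }
}
```

Writing through the view rather than the base tables is what leaves room for the schema evolution mentioned above: the view definition can change while this loader code stays the same.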

The query and access contract is provided through views in the case of the relational implementation. In the case of EMF, the object graph itself is exposed.

Client

The client is not a part of the DMS but is included here for completeness and to facilitate various use case discussions.

In TPTP the primary client is the Eclipse user interface. However, it will not be supported any differently than a thick client, perhaps implemented in SWT, or a web client that may or may not be AJAX based. TPTP will extend its current user interface to exploit the DMS; this includes the Eclipse workbench, RCP applications and BIRT reports.

Client object model

filler

Result specific access api

filler

Store specific data query/access

filler

Event driven contracts

filler

Control mechanisms

filler