
SMILA/Documentation/Crawler

Contents

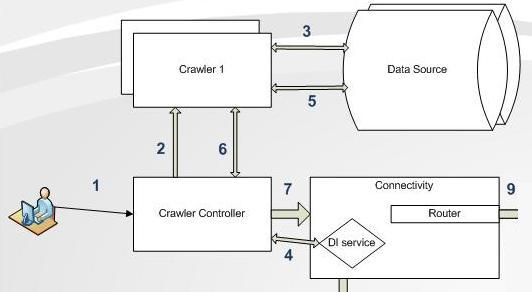

What does a Crawler do

A Crawler gathers information about resources: both their content and metadata of interest, such as size or MIME type.
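To make "content plus metadata" concrete, here is a minimal sketch for a single file, using only standard JDK calls. The `CrawledResource` record and its `fromFile` method are hypothetical names chosen for illustration; they are not SMILA types, and a real crawler would deliver its results through the Connectivity Framework.

```java
import java.io.IOException;
import java.io.UncheckedIOException;
import java.nio.file.Files;
import java.nio.file.Path;

/** Hypothetical holder for what a crawler collects about one resource; not a SMILA type. */
record CrawledResource(String id, byte[] content, long size, String mimeType) {

    /** Reads one file and collects its content together with size and MIME type metadata. */
    static CrawledResource fromFile(Path file) {
        try {
            byte[] content = Files.readAllBytes(file);
            // probeContentType may return null if the platform cannot determine the type.
            String mimeType = Files.probeContentType(file);
            return new CrawledResource(file.toString(), content, Files.size(file), mimeType);
        } catch (IOException e) {
            // Wrapped so that callers do not have to handle a checked exception in this sketch.
            throw new UncheckedIOException(e);
        }
    }
}
```

Calling `CrawledResource.fromFile(Path.of("some-file.txt"))` would return the file's bytes along with its size in bytes and, where the platform can detect it, its MIME type.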

Crawler types

SMILA contains three types of crawlers, one for each kind of data source: the WebCrawler, the JDBC DatabaseCrawler, and the FileSystemCrawler. They gather information from the internet, from databases, and from files on a hard disk, respectively.

Furthermore, the Connectivity Framework provides an API that developers can use to create their own crawlers.

Crawler configuration

A Crawler is started with a specific, named configuration that defines what information is to be crawled (e.g. content, kinds of metadata) and where to find that data (e.g. file system path, JDBC connection string).
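The parts of such a configuration can be sketched as a small Java value type. This is only an illustration of the idea (a name, what to crawl, and where to find it). The `CrawlerConfig` record, its field names, and the example paths and connection string are all assumptions and do not reflect SMILA's actual configuration format.

```java
import java.util.Set;

/** Hypothetical configuration holder; the field names are illustrative, not SMILA's schema. */
record CrawlerConfig(String name, String crawlerType, String location,
                     boolean includeContent, Set<String> metadata) {

    CrawlerConfig {
        // A configuration is addressed by its name, so the name must not be empty.
        if (name == null || name.isBlank()) {
            throw new IllegalArgumentException("configuration needs a name");
        }
        metadata = Set.copyOf(metadata); // defensive, immutable copy
    }
}

/** Example values only; the path and the JDBC URL are placeholders. */
class CrawlerConfigExamples {

    /** What to crawl: content plus size and MIME type. Where: a file system path. */
    static CrawlerConfig fileSystem() {
        return new CrawlerConfig("docs-files", "FileSystemCrawler", "/data/docs",
                true, Set.of("size", "mimeType"));
    }

    /** What to crawl: metadata only. Where: a JDBC connection string. */
    static CrawlerConfig database() {
        return new CrawlerConfig("orders-db", "JDBC DatabaseCrawler",
                "jdbc:postgresql://localhost:5432/shop", false, Set.of("size"));
    }
}
```

Keeping the "what" (content flag, metadata set) separate from the "where" (location) mirrors the two responsibilities the configuration has in the description above.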

Crawler lifecycle

The CrawlerController manages the life cycle of each crawler (e.g. start, stop, abort) and may instantiate multiple Crawlers concurrently, even several of the same type.
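The bookkeeping behind such a controller can be sketched as follows. `SketchCrawlerController` is a hypothetical stand-in, not the API of SMILA's CrawlerController. It only tracks which named configurations are currently running, omits abort, and assumes that a given configuration may run only once at a time, which the text above does not state.

```java
import java.util.Map;
import java.util.Set;
import java.util.concurrent.ConcurrentHashMap;

/** Hypothetical stand-in for a controller; not the API of SMILA's CrawlerController. */
class SketchCrawlerController {

    // Maps the name of each running configuration to the crawler type serving it.
    private final Map<String, String> running = new ConcurrentHashMap<>();

    /**
     * Registers a crawl for the named configuration.
     * Returns false if that configuration is already running (an assumption of this sketch).
     */
    boolean start(String configName, String crawlerType) {
        return running.putIfAbsent(configName, crawlerType) == null;
    }

    /** Removes the crawl; returns false if the configuration was not running. */
    boolean stop(String configName) {
        return running.remove(configName) != null;
    }

    /** Snapshot of the configurations that are currently running. */
    Set<String> runningConfigurations() {
        return Set.copyOf(running.keySet());
    }
}
```

With this sketch, `start("docs-files", "FileSystemCrawler")` and `start("home-files", "FileSystemCrawler")` both succeed, so two crawlers of the same type run side by side, while a second `start` for `"docs-files"` is rejected until `stop("docs-files")` has been called.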