
PTP/designs/2.x/runtime platform


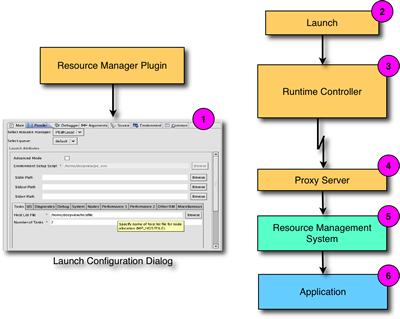


Revision as of 14:06, 1 May 2008

Overview

The runtime platform comprises those elements relating to the launching, controlling, and monitoring of parallel applications. The runtime platform is comprised of the following elements in the architecture diagram:

- runtime model

- runtime views

- runtime controller

- proxy server

- launch

PTP follows a model-view-controller (MVC) design pattern. The heart of the architecture is the runtime model, which provides an abstract representation of the parallel computer system and the running applications. Runtime views provide the user with visual feedback of the state of the model, and provide the user interface elements that allow jobs to be launched and controlled. The runtime controller is responsible for communicating with the underlying parallel computer system, translating user commands into actions, and keeping the model updated. All of these elements are Eclipse plugins.

The proxy server is a small program that typically runs on the remote system front-end, and is responsible for performing local actions in response to commands sent by the proxy client. The results of the actions are returned to the client in the form of events. The proxy server is usually written in C.

The final element is launch, which is responsible for managing Eclipse launch configurations, and translating these into the appropriate actions required to initiate the launch of an application on the remote parallel machine.

Each of these elements is described in more detail below.

Runtime Model

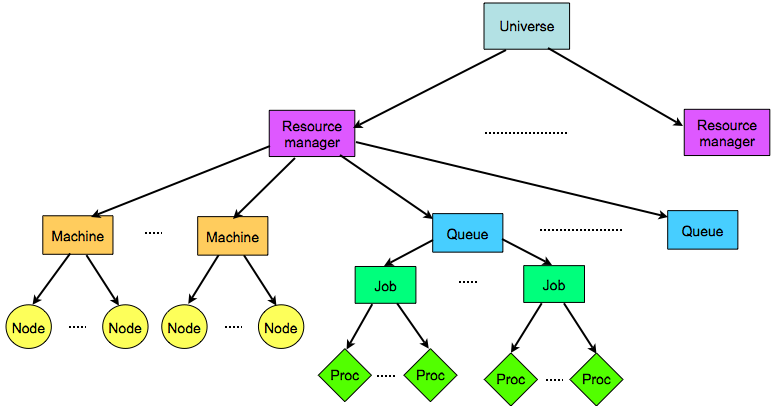

The PTP runtime model is a hierarchical attributed parallel system model that represents the state of a remote parallel system at any particular time. It is the model part of the MVC design pattern. The model is attributed because each model element can contain an arbitrary list of attributes that represent characteristics of the real object. For example, an element representing a compute node could contain attributes describing the hardware configuration of the node. The structure of the runtime model is shown in the accompanying diagram.

Each of the model elements in the hierarchy is a logical representation of a system component. Particular operations and views provided by PTP are associated with each type of model element. Since machine hardware and architectures can vary widely, the model does not attempt to define any particular physical arrangement. It is left to the implementor to decide how the model elements map to the physical machine characteristics.

Elements

The only model element that is provided when PTP is first initialized is the universe. Users must manually create resource manager elements and associate each with a real host and resource management system. The remainder of the model hierarchy is then populated and updated by the resource manager implementation.

Universe

This is the top-level model element, and does not correspond to a physical object. It is used as the entry point into the model.

Resource Manager

This model element corresponds to an instance of a resource management system on a remote computer system. Since a computer system may provide more than one resource management system, there may be multiple resource managers associated with a particular physical computer system. For example, host A may allow interactive jobs to be run directly using the MPICH2 runtime system, or batched via the LSF job scheduler. In this case, there would be two logical resource managers: an MPICH2 resource manager running on host A, and an LSF resource manager running on host A.

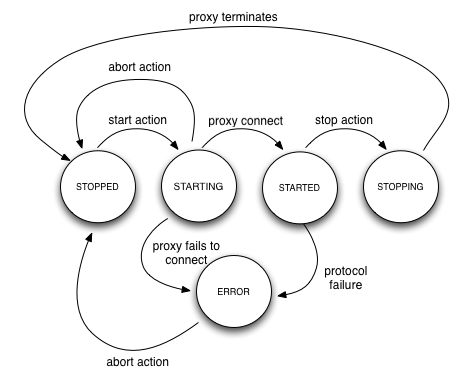

Resource managers have an attribute that reflects the current state of the resource manager. The possible states and their meanings are:

- STOPPED

- The resource manager is not available for status reporting or job submission. Resource manager configuration settings can be changed.

- STARTING

- The resource manager proxy server is being started on the (remote) parallel machine.

- STARTED

- The resource manager is operational and jobs can be submitted.

- STOPPING

- The resource manager is in the process of shutting down. Monitoring and job submission are disabled.

- ERROR

- An error has occurred while starting or communicating with the resource manager.

The resource manager implementation is responsible for ensuring that the correct state transitions are observed. Failure to observe the correct state transitions can lead to undefined behavior in the user interface. The resource manager state transitions are shown below.
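
The state transition diagram is not reproduced in this text, so the following Java sketch encodes one plausible reading of the state descriptions above. The names `RmState`, `canTransitionTo`, and `ResourceManagerStateHolder`, as well as the transition table itself, are assumptions made for illustration and are not taken from the PTP sources.

```java
import java.util.EnumMap;
import java.util.EnumSet;
import java.util.Map;
import java.util.Set;

/** Hypothetical resource manager states; the names mirror the list above. */
enum RmState {
    STOPPED, STARTING, STARTED, STOPPING, ERROR;

    // Assumed transition table (not taken from PTP): a linear lifecycle,
    // with ERROR reachable from every active state and cleared by stopping.
    private static final Map<RmState, Set<RmState>> ALLOWED = new EnumMap<>(RmState.class);
    static {
        ALLOWED.put(STOPPED, EnumSet.of(STARTING));
        ALLOWED.put(STARTING, EnumSet.of(STARTED, ERROR));
        ALLOWED.put(STARTED, EnumSet.of(STOPPING, ERROR));
        ALLOWED.put(STOPPING, EnumSet.of(STOPPED, ERROR));
        ALLOWED.put(ERROR, EnumSet.of(STOPPED));
    }

    /** Returns true if moving from this state to {@code next} is permitted. */
    boolean canTransitionTo(RmState next) {
        return ALLOWED.get(this).contains(next);
    }
}

/** Holds the current state and rejects transitions outside the assumed table. */
final class ResourceManagerStateHolder {
    private RmState state = RmState.STOPPED;

    RmState getState() {
        return state;
    }

    void setState(RmState next) {
        if (!state.canTransitionTo(next)) {
            throw new IllegalStateException(state + " -> " + next + " is not allowed");
        }
        state = next;
    }
}
```

Rejecting an illegal transition at the point where the state is set is one way for an implementation to avoid the undefined user interface behavior mentioned above.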

Machine

This model element provides a grouping of computing resources, and typically corresponds to the host from which the resource management system is accessible, such as the front-end machine where a user would normally log in. Many configurations provide such a front-end machine.

Machine elements have a state attribute that can be used by the resource manager implementation to provide visual feedback to the user. Each state is represented by a different icon in the user interface. Allowable states and suggested meanings are as follows:

- UP

- Machine is available for running user jobs

- DOWN

- Machine is physically powered down, or otherwise unavailable to run user jobs

- ALERT

- An alert condition has been raised. This might be used to indicate the machine is shutting down, or there is some other unusual condition.

- ERROR

- The machine has failed.

- UNKNOWN

- The state of the machine is unknown.

It is recommended that resource managers use these states when displaying machines in the user interface.

Queue

This model element represents a logical queue of jobs waiting to be executed on a machine. There will typically be a one-to-one mapping between a queue model element and a resource management system queue. Systems that don't have the notion of queues should map all jobs on to a single default queue.

Queues have a state attribute that can be used by the resource manager to indicate allowable actions for the queue. Each state will display a different icon in the user interface. The following states and suggested meanings are available:

- NORMAL

- The queue is available for job submissions

- COLLECTING

- The queue is available for job submissions, but jobs will not be dispatched from the queue.

- DRAINING

- The queue will not accept new job submissions. Existing jobs will continue to be dispatched

- STOPPED

- The queue will not accept new job submissions, and any existing jobs will not be dispatched.

It is recommended that resource manager implementations use these states.
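
The four queue states differ only in whether the queue accepts new submissions and whether it dispatches existing jobs. A minimal Java sketch of that reading follows; the enum name `QueueState` and its two accessor methods are hypothetical and are not the PTP API.

```java
/** Hypothetical enum capturing the suggested meaning of each queue state. */
enum QueueState {
    NORMAL(true, true),       // accepts submissions, dispatches jobs
    COLLECTING(true, false),  // accepts submissions, holds jobs in the queue
    DRAINING(false, true),    // refuses submissions, dispatches existing jobs
    STOPPED(false, false);    // refuses submissions, dispatches nothing

    private final boolean acceptsSubmissions;
    private final boolean dispatchesJobs;

    QueueState(boolean acceptsSubmissions, boolean dispatchesJobs) {
        this.acceptsSubmissions = acceptsSubmissions;
        this.dispatchesJobs = dispatchesJobs;
    }

    boolean acceptsSubmissions() {
        return acceptsSubmissions;
    }

    boolean dispatchesJobs() {
        return dispatchesJobs;
    }
}
```

A user interface could, for example, disable its submit action whenever `acceptsSubmissions()` returns false for the selected queue.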

Node

This model element represents some form of computational resource that is responsible for executing an application program, but where it is not necessary to provide any finer level of granularity. For example, a cluster node with multiple processors would normally be represented as a node element. An SMP machine would represent physical processors as a node element.

Nodes have two state attributes that can be used by the resource manager to indicate node availability to the user. Each combination of attributes is represented by a different icon in the user interface.

The first node state attribute represents the gross state of the node. Recommended meanings are as follows:

- UP

- The node is operating normally.

- DOWN

- The node has been shut down or disabled.

- ERROR

- An error has occurred and the node is no longer available

- UNKNOWN

- The node is in an unknown state

The second node state attribute (the "extended node state") represents additional information about the node. The recommended states and meanings are as follows:

- USER_ALLOC_EXCL

- The node is allocated to the user for exclusive use

- USER_ALLOC_SHARED

- The node is allocated to the user, but is available for shared use

- OTHER_ALLOC_EXCL

- The node is allocated to another user for exclusive use

- OTHER_ALLOC_SHARED

- The node is allocated to another user, but is available for shared use

- RUNNING_PROCESS

- A process is running on the node (internally generated state)

- EXITED_PROCESS

- A process that was running on the node has now exited (internally generated state)

- NONE

- The node has no extended node state
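
Since each combination of the two node state attributes is shown with a different icon, a view needs some way to turn the pair into an icon identifier. The sketch below shows one possible approach; the enum names, the `NodeIcons` class, and the key format are all assumptions for illustration and do not describe how PTP actually names its icons.

```java
import java.util.Locale;

/** Gross node state, mirroring the first list above. */
enum NodeState { UP, DOWN, ERROR, UNKNOWN }

/** Extended node state, mirroring the second list above. */
enum NodeExtraState {
    USER_ALLOC_EXCL, USER_ALLOC_SHARED,
    OTHER_ALLOC_EXCL, OTHER_ALLOC_SHARED,
    RUNNING_PROCESS, EXITED_PROCESS, NONE
}

/** Hypothetical helper that maps a state pair to an icon key. */
final class NodeIcons {
    private NodeIcons() {
    }

    /**
     * Builds a key such as "node_up_user_alloc_excl". When the extended
     * state is NONE, only the gross state contributes to the key.
     */
    static String iconKey(NodeState state, NodeExtraState extra) {
        String base = "node_" + state.name().toLowerCase(Locale.ROOT);
        if (extra == NodeExtraState.NONE) {
            return base;
        }
        return base + "_" + extra.name().toLowerCase(Locale.ROOT);
    }
}
```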

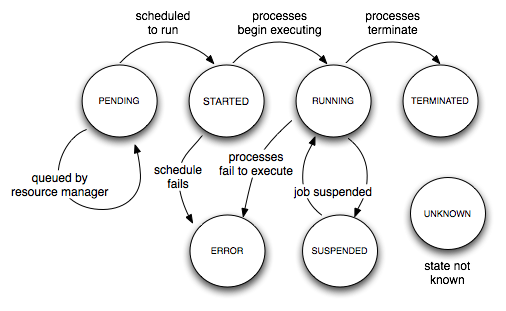

Job

This model element represents an instance of an application that is to be executed. A job typically comprises one or more processes (execution contexts). Jobs also have a state that reflects the progress of the job from submission to termination. Job states are as follows:

- PENDING

- The job has been submitted for execution, but is queued awaiting the required resources

- STARTED

- The required resources are available and the job has been scheduled for execution

- RUNNING

- The process(es) associated with the job have been started

- TERMINATED

- The process(es) associated with the job have exited

- SUSPENDED

- Execution of the job has been temporarily suspended

- ERROR

- The job failed to start

- UNKNOWN

- The job is in an unknown state

It is the responsibility of the proxy server to ensure that the correct state transitions are followed. Failure to follow the correct state transitions can result in undefined behavior in the user interface. Required state transitions are shown in the following diagram.

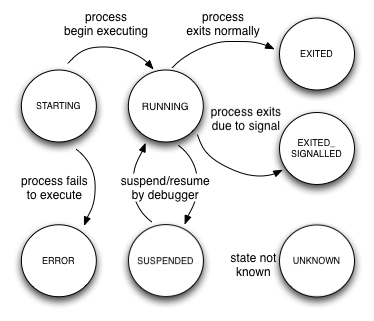

Process

This model element represents an execution unit using some computational resource. There is an implicit one-to-many relationship between a node and a process. For example, a process would be used to represent a Unix process. Finer granularity (i.e. threads of execution) is managed by the debug model.

Processes have a state that reflects the progress of the process from execution to termination. Process states are as follows:

- STARTING

- The process is being launched by the operating system

- RUNNING

- The process has begun executing (including library code execution)

- EXITED

- The process has finished executing

- EXITED_SIGNALLED

- The process finished executing as the result of receiving a signal

- SUSPENDED

- The process has been suspended by a debugger

- ERROR

- The process failed to execute

- UNKNOWN

- The process is in an unknown state

It is the responsibility of the proxy server to ensure that the correct state transitions are followed. Failure to follow the correct state transitions can result in undefined behavior in the user interface. Required state transitions are shown in the following diagram.

Attributes

Each element in the model can contain an arbitrary number of attributes. An attribute is used to provide additional information about the model element. An attribute consists of a key and a value. The key is a unique ID that refers to an attribute definition, which can be thought of as the type of the attribute. The value is the value of the attribute.

An attribute definition contains meta-data about the attribute, and is primarily used for data validation and displaying attributes in the user interface. This meta-data includes:

- ID

- The attribute definition ID.

- type

- The type of the attribute. Currently supported types are ARRAY, BOOLEAN, DATE, DOUBLE, ENUMERATED, INTEGER, and STRING.

- name

- The short name of the attribute. This is the name that is displayed in UI property views.

- description

- A description of the attribute that is displayed when more information about the attribute is requested (e.g. tooltip popups.)

- default

- The default value of the attribute. This is the value assigned to the attribute when it is first created.

- display

- A flag indicating if this attribute is for display purposes. This provides a hint to the UI in order to determine if the attribute should be displayed.
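
To make the relationship between an attribute definition and an attribute concrete, the following Java sketch models an INTEGER attribute only. The classes `IntegerAttributeDefinition` and `IntegerAttribute` are simplified stand-ins invented for this example; they are not the PTP attribute classes, whose signatures may differ.

```java
import java.util.Objects;

/** Hypothetical, simplified definition for INTEGER attributes. */
final class IntegerAttributeDefinition {
    private final String id;
    private final String name;
    private final String description;
    private final boolean display;
    private final int defaultValue;

    IntegerAttributeDefinition(String id, String name, String description,
                               boolean display, int defaultValue) {
        this.id = Objects.requireNonNull(id);
        this.name = Objects.requireNonNull(name);
        this.description = Objects.requireNonNull(description);
        this.display = display;
        this.defaultValue = defaultValue;
    }

    String getId() { return id; }
    String getName() { return name; }
    String getDescription() { return description; }
    boolean isDisplayable() { return display; }

    /** Creates an attribute holding the default value. */
    IntegerAttribute create() {
        return new IntegerAttribute(this, defaultValue);
    }

    /** Validates textual input against the type before creating the attribute. */
    IntegerAttribute create(String text) {
        try {
            return new IntegerAttribute(this, Integer.parseInt(text.trim()));
        } catch (NumberFormatException e) {
            throw new IllegalArgumentException("Invalid value for " + id + ": " + text, e);
        }
    }
}

/** An attribute is a value paired with the definition that acts as its key. */
final class IntegerAttribute {
    private final IntegerAttributeDefinition definition;
    private final int value;

    IntegerAttribute(IntegerAttributeDefinition definition, int value) {
        this.definition = Objects.requireNonNull(definition);
        this.value = value;
    }

    IntegerAttributeDefinition getDefinition() { return definition; }
    int getValue() { return value; }
}
```

Keeping validation in the definition means that every attribute created through it is known to hold a well-formed value, which is the data validation role described above.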

Pre-defined Attributes

All model elements have at least two mandatory attributes. These attributes are:

- id

- This is a unique ID for the model element.

- name

- This is a distinguishing name for the model element. It is primarily used for display purposes and does not need to be unique.

In addition, model elements have different sets of optional attributes. These attributes are shown in the following table:

| Model element | Attribute definition ID | Attribute type | Description |
|---|---|---|---|
| resource manager | rmState | enum | Enumeration representing the state of the resource manager. Possible values are: STARTING, STARTED, STOPPING, STOPPED, SUSPENDED, ERROR |
| | rmDescription | string | Text describing this resource manager |
| | rmType | string | Resource manager class |
| | rmID | string | Unique identifier for this resource manager. Used for persistence |
| machine | machineState | enum | Enumeration representing the state of the machine. Possible values are: UP, DOWN, ALERT, ERROR, UNKNOWN |
| | numNodes | int | Number of nodes known by this machine |
| queue | queueState | enum | Enumeration representing the state of the queue. Possible values are: NORMAL, COLLECTING, DRAINING, STOPPED |
| node | nodeState | enum | Enumeration representing the state of the node. Possible values are: UP, DOWN, ERROR, UNKNOWN |
| | nodeExtraState | enum | Enumeration representing additional state of the node. This is used to reflect control of the node by a resource management system. Possible values are: USER_ALLOC_EXCL, USER_ALLOC_SHARED, OTHER_ALLOC_EXCL, OTHER_ALLOC_SHARED, RUNNING_PROCESS, EXITED_PROCESS, NONE |
| | nodeNumber | int | Zero-based index of the node |
| job | jobState | enum | Enumeration representing the state of the job. Possible values are: PENDING, STARTED, RUNNING, TERMINATED, SUSPENDED, ERROR, UNKNOWN |
| | jobSubId | string | Job submission ID |
| | jobId | string | ID of job. Used in commands that need to refer to jobs. |
| | queueId | string | Queue ID |
| | jobNumProcs | int | Number of processes |
| | execName | string | Name of executable |
| | execPath | string | Path to executable |
| | workingDir | string | Working directory |
| | progArgs | array | Array of program arguments |
| | env | array | Array containing environment variables |
| | debugExecName | string | Debugger executable name |
| | debugExecPath | string | Debugger executable path |
| | debugArgs | array | Array containing debugger arguments |
| | debug | bool | Debug flag |
| process | processState | enum | Enumeration representing the state of the process. Possible values are: STARTING, RUNNING, EXITED, EXITED_SIGNALLED, STOPPED, ERROR, UNKNOWN |
| | processPID | int | Process ID of the process |
| | processExitCode | int | Exit code of the process on termination |
| | processSignalName | string | Name of the signal that caused process termination |
| | processIndex | int | Zero-based index of the process |
| | processStdout | string | Standard output from the process |
| | processStderr | string | Error output from the process |
| | processNodeId | string | ID of the node that this process is running on |

Events

The runtime model provides an event notification mechanism to allow other components or tools to receive notification whenever changes occur to the model. Each model element provides two sets of event interfaces:

- element events

- These events relate to the model element itself. Two main types of events are provided: a change event, which is triggered when an attribute on the element is added, removed, or changed; and on some elements, an error event that indicates some kind of error has occurred.

- child events

- These events relate to children of the model element. Three main types of events are provided: a change event, which mirrors each child element's change event; a new event that is triggered when a new child element is added; and a remove event that is triggered when a child element is removed. Elements that have more than one type of child (e.g. a resource manager has machine and queue children) will provide separate interfaces for each type of child. These events are known as bulk events because they allow for the notification of multiple events simultaneously.

Event notification is activated by registering either a child listener or an element listener on the model element. Once the listener has been registered, events will begin to be received immediately.
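
The registration and bulk notification pattern described above can be sketched in plain Java as follows. The types `QueueChildListener`, `SketchQueue`, and `RecordingListener` are hypothetical and heavily simplified (jobs are represented by their ID strings); they illustrate the pattern only and are not the PTP listener interfaces.

```java
import java.util.ArrayList;
import java.util.Collection;
import java.util.Collections;
import java.util.List;
import java.util.concurrent.CopyOnWriteArrayList;

/** Hypothetical child listener: one callback per bulk event. */
interface QueueChildListener {
    void newJobs(Collection<String> jobIds);
    void removedJobs(Collection<String> jobIds);
}

/** Simplified queue element that notifies listeners about its children. */
final class SketchQueue {
    // CopyOnWriteArrayList lets listeners be added or removed during notification.
    private final List<QueueChildListener> listeners = new CopyOnWriteArrayList<>();
    private final List<String> jobs = new ArrayList<>();

    void addChildListener(QueueChildListener listener) { listeners.add(listener); }
    void removeChildListener(QueueChildListener listener) { listeners.remove(listener); }
    int jobCount() { return jobs.size(); }

    /** Adds several jobs and reports them in a single bulk event. */
    void addJobs(Collection<String> jobIds) {
        jobs.addAll(jobIds);
        Collection<String> snapshot = Collections.unmodifiableList(new ArrayList<>(jobIds));
        for (QueueChildListener l : listeners) {
            l.newJobs(snapshot);
        }
    }

    /** Removes several jobs and reports them in a single bulk event. */
    void removeJobs(Collection<String> jobIds) {
        jobs.removeAll(jobIds);
        Collection<String> snapshot = Collections.unmodifiableList(new ArrayList<>(jobIds));
        for (QueueChildListener l : listeners) {
            l.removedJobs(snapshot);
        }
    }
}

/** Listener that records a short description of each event it receives. */
final class RecordingListener implements QueueChildListener {
    final List<String> log = new ArrayList<>();

    @Override
    public void newJobs(Collection<String> jobIds) { log.add("new:" + jobIds.size()); }

    @Override
    public void removedJobs(Collection<String> jobIds) { log.add("removed:" + jobIds.size()); }
}
```

In this sketch, adding two jobs in one call produces a single `newJobs` callback carrying both IDs, which is the sense in which child events are bulk events.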

Implementation Details

Element events are always named IElementChangeEvent and IElementErrorEvent, where Element is the name of the element (e.g. Machine or Queue). Currently the only error event is IResourceManagerErrorEvent. Element events provide two methods for obtaining more information about the event:

- public Map<IAttributeDefinition<?,?,?>, IAttribute<?,?,?>> getAttributes();

- This method returns the attributes that have changed on the element. The attributes are returned as a map, with the key being the attribute definition, and the value the attribute. This allows particular attributes to be accessed efficiently.

- public IPElement getSource();

- This method can be used to get the source of the event, which is the element itself.

Child events are always named IActionElementEvent, where Action is one of Changed, New, or Remove, and Element is the name of the element. For example, IChangedJobEvent will notify child listeners when one or more jobs have been changed. Child events provide the following methods for obtaining more information about the event:

- public Collection getElements();

- This method is used to retrieve the collection of child elements associated with the event.

- public IPElement getSource();

- This method can be used to get the source of the event, which is the element whose children caused the event to be sent.

Notice that child Change events do not provide access to the changed attributes. They only provide notification of the child elements that have changed. A listener must be registered on the element event for each element if you wish to be notified of the attributes that have changed.

Listener interfaces follow the same naming scheme. Element listeners are always named IElementListener, and child listeners are always named IElementChildListener.

The following table shows each model element along with its associated events and listeners.

| Model Element | Element Event | Element Listener | Child Event | Child Listener |
|---|---|---|---|---|
| IPJob | IJobChangeEvent | IJobListener | IChangedProcessEvent | IJobChildListener |
| | | | INewProcessEvent | |
| | | | IRemoveProcessEvent | |
| IPMachine | IMachineChangeEvent | IMachineListener | IChangedNodeEvent | IMachineChildListener |
| | | | INewNodeEvent | |
| | | | IRemoveNodeEvent | |
| IPNode | INodeChangeEvent | INodeListener | IChangedProcessEvent | INodeChildListener |
| | | | INewProcessEvent | |
| | | | IRemoveProcessEvent | |
| IPProcess | IProcessChangeEvent | IProcessListener | | |
| IPQueue | IQueueChangeEvent | IQueueListener | IChangedJobEvent | IQueueChildListener |
| | | | INewJobEvent | |
| | | | IRemoveJobEvent | |
| IResourceManager | IResourceManagerChangeEvent | IResourceManagerListener | IChangedMachineEvent | IResourceManagerChildListener |
| | | | INewMachineEvent | |
| | | | IRemoveMachineEvent | |
| | | | IChangedQueueEvent | |
| | | | INewQueueEvent | |
| | | | IRemoveQueueEvent | |

Runtime Views

The runtime views serve two functions: they provide a means of observing the state of the runtime model, and they allow the user to interact with the parallel environment. Access to the views is managed using an Eclipse perspective. A perspective is a means of grouping views together so that they can provide a cohesive user interface. The perspective used by the runtime platform is called the PTP Runtime perspective.

There are currently four main views provided by the runtime platform:

- resource manager view

- This view is used to manage the lifecycle of resource managers. Each resource manager model element is displayed in this view. A user can add, delete, start, stop, and edit resource managers using this view. A different color icon is used to display the different states of a resource manager.

- machines view

- This view shows information about the machine and node elements in the model. The left part of the view displays all the machine elements in the model (regardless of resource manager). When a machine element is selected, the right part of the view displays the node elements associated with that machine. Different machine and node element icons are used to reflect the different state attributes of the elements.

- jobs view

- This view shows information about the job and process elements in the model. The left part of the view displays all the job elements in the model (regardless of queue or resource manager). When a job element is selected, the right part of the view displays the process elements associated with that job. Different job and process element icons are used to reflect the different state attributes of the elements.

- process detail view

- This view shows more detailed information about individual processes in the model. Various parts of the view are used to display attributes that are obtained from the process model element.

Runtime Controller

The runtime controller is the controller part of the MVC design pattern, and is implemented using a layered architecture, where each layer communicates with the layers above and below using a set of defined APIs. The following diagram shows the layers of the runtime controller in more detail.

The resource manager is an abstraction of a resource management system, such as a job scheduler, which is typically responsible for controlling user access to compute resources via queues. The resource manager is responsible for updating the runtime model. The resource manager communicates with the runtime system, which is an abstraction of a parallel computer system's runtime system (that part of the software stack that is responsible for process allocation and mapping, application launch, and other communication services.) The runtime system, in turn, communicates with the remote proxy runtime, which manages proxy communication via some form of remote service. The proxy runtime client is used to map the runtime system interface onto a set of commands and events that are used to communicate with a physically remote system. The proxy runtime client makes use of the proxy client to provide client-side communication services for managing proxy communication.

Each of these layers is described in more detail in the following sections.

Resource Manager

A resource manager is the main point of control between the runtime model, runtime views, and the underlying parallel computer system. The resource manager is responsible for maintaining the state of the model, and allowing the user to interact with the parallel system.

There are a number of pre-defined types of resource managers that are known to PTP. These resource manager types are provided as plugins that understand how to communicate with a particular kind of resource management system. When a user creates a new resource manager (through the resource manager view), an instance of one of these types is created. There is a one-to-one correspondence between a resource manager and a resource manager model element. When a new resource manager is created, a new resource manager model element is also created.

A resource manager only maintains the part of the runtime model below its corresponding resource manager model element. It has no knowledge of other parts of the model.

Runtime System

A runtime system is an abstraction layer that is designed to allow implementers more flexibility in how the plugin communicates with the underlying hardware. When using the implementation that is provided with PTP, this layer does little more than pass commands and events between the layers above and below.

Remote Proxy Runtime

The remote proxy runtime is used when communicating to a proxy server that is remote from the host running Eclipse. This component provides a number of services that relate to defining and establishing remote connections, and launching the proxy server on a remote computer system. It does not participate in actual communication between the proxy client and proxy server.

Proxy Runtime Client

The proxy runtime client layer is used to define the commands and events that are specific to runtime communication (such as job submission, termination, etc.) with the proxy server. The implementation details of this protocol are described in the Resource Manager Proxy Protocol.

Proxy Client

The proxy client provides a range of low level proxy communication services that can be used to implement higher level proxy protocols. These services include:

- An abstract command that can be extended to provide additional commands.

- An abstract event that can be extended to provide additional events.

- Connection lifecycle management.

- Concrete commands and events for protocol initialization and finalization.

- Command/event serialization and de-serialization.

The proxy client is currently used by both the proxy runtime client and the proxy debug client.
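
As an illustration of the first and last services in the list above, the following Java sketch shows an abstract command that concrete commands extend, together with a simple serialization round trip. The class names, the command ID, and the length-prefixed text format are invented for this example; the actual wire format is the one defined by the Resource Manager Proxy Protocol and is not reproduced here.

```java
import java.util.ArrayList;
import java.util.Arrays;
import java.util.Collections;
import java.util.List;

/** Hypothetical abstract command: subclasses supply only their command ID. */
abstract class SketchCommand {
    private final int transactionId;
    private final List<String> args;

    protected SketchCommand(int transactionId, String... args) {
        this.transactionId = transactionId;
        this.args = Collections.unmodifiableList(new ArrayList<>(Arrays.asList(args)));
    }

    abstract int commandId();

    /**
     * Assumed format: "id transaction count" followed by one
     * " length:text" group per argument, so arguments may contain spaces.
     */
    final String serialize() {
        StringBuilder sb = new StringBuilder();
        sb.append(commandId()).append(' ').append(transactionId).append(' ').append(args.size());
        for (String arg : args) {
            sb.append(' ').append(arg.length()).append(':').append(arg);
        }
        return sb.toString();
    }
}

/** Example concrete command; the ID value 1 is arbitrary. */
final class SketchSubmitJobCommand extends SketchCommand {
    SketchSubmitJobCommand(int transactionId, String... args) {
        super(transactionId, args);
    }

    @Override
    int commandId() {
        return 1;
    }
}

/** De-serialization counterpart for the assumed format. */
final class SketchWire {
    private SketchWire() {
    }

    static List<String> decodeArgs(String wire) {
        String[] header = wire.split(" ", 4); // id, transaction, count, remainder
        int count = Integer.parseInt(header[2]);
        String rest = header.length > 3 ? header[3] : "";
        List<String> out = new ArrayList<>();
        int pos = 0;
        for (int i = 0; i < count; i++) {
            int colon = rest.indexOf(':', pos);
            int length = Integer.parseInt(rest.substring(pos, colon));
            out.add(rest.substring(colon + 1, colon + 1 + length));
            pos = colon + 1 + length + 1; // skip the separating space
        }
        return out;
    }
}
```

Length-prefixing each argument is a design choice of this sketch: it avoids any need to escape separator characters inside argument values.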

Proxy Server

The proxy server is the only part of the PTP runtime architecture that runs outside the Eclipse JVM. It has two main jobs: to receive commands from the runtime controller and perform actions in response to those commands; and to keep the runtime controller informed of activities that are taking place on the parallel computer system.

The commands supported by the proxy server are the same as those defined in the Resource Manager Proxy Protocol. Once an action has been performed, the results (if any) are collected into one or more events, and these are transmitted back to the runtime controller. The proxy server must also monitor the status of the system (hardware, configuration, queues, running jobs, etc.) and report "interesting events" back to the runtime controller.

Known Implementations

The following table lists the known implementations of proxy servers.

| PTP Name | Description | Batch | Language | Operating Systems | Architectures |
|---|---|---|---|---|---|
| ORTE | Open Runtime Environment (part of Open MPI) | No | C99 | Linux, MacOS X | x86, x86_64, ppc |
| MPICH2 | MPICH2 mpd runtime | No | Python | Linux, MacOS X | x86, x86_64, ppc |
| PE | IBM Parallel Environment | No | C99 | Linux, AIX | ppc |
| LoadLeveler | IBM LoadLeveler job-management system | Yes | C99 | Linux, AIX | ppc |

Launch

The launch component is responsible for collecting the information required to launch an application, and passing this to the runtime controller to initiate the launch. Both normal and debug launches are handled by this component. Since the parallel computer system may be employing a batch job system, the launch may not result in immediate execution of the application. Instead, the application may be placed in a queue and only executed when the requisite resources become available.

The Eclipse launch configuration system provides a user interface that allows the user to create launch configurations that encapsulate the parameters and environment necessary to run or debug a program. The PTP launch component has extended this functionality to allow specification of the resources necessary for job submission. The resources available depend on the particular resource management system in place on the parallel computer system. A customizable launch page is available to allow any type of resource to be selected by the user. These resources are passed to the runtime controller in the form of attributes, which are in turn passed on to the resource management system.

Normal Launch

A normal (non-debug) launch proceeds as follows (numbers refer to the diagram).

1. The user creates a new launch configuration and fills out the dialog with the information necessary to launch the application.

2. When the run button is pushed, the launch framework collects all the parameters from the dialog and passes these to the PTP runtime via the submitJob interface on the selected resource manager.

3. The resource manager passes the launch information to the runtime controller, which determines how the launch is to happen. For many types of resource managers, this involves converting the launch command into a wire protocol and sending it to a remote proxy server.

4. The proxy server receives the launch command over the wire, and converts it into a form suitable for the target system. This is usually an API or a command-line program.

5. The target system resource manager initiates the application launch.

6. The application begins execution.
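The step in which the launch command is converted into a wire protocol can be sketched as a simple encoder. The message layout below (`<command> <nargs> <len>:<arg> ...`), the function name `encode_command`, and the command and argument strings are invented for illustration only; this is not the actual PTP proxy protocol format.

```c
#include <assert.h>
#include <stdio.h>
#include <string.h>

/*
 * Encode a command name and its "key=value" arguments into one message.
 * Layout (hypothetical, for illustration only):
 *   <command> <nargs> <len>:<arg> <len>:<arg> ...
 * Returns the number of bytes written (excluding the terminating NUL),
 * or -1 if the buffer is too small.
 */
int encode_command(char *buf, size_t size, const char *command,
                   const char *const args[], int nargs)
{
    assert(buf != NULL && command != NULL && nargs >= 0);

    int used = snprintf(buf, size, "%s %d", command, nargs);
    if (used < 0 || (size_t)used >= size)
        return -1;

    for (int i = 0; i < nargs; i++) {
        size_t left = size - (size_t)used;
        /* Length-prefix each argument so the receiver can split reliably. */
        int n = snprintf(buf + used, left, " %zu:%s",
                         strlen(args[i]), args[i]);
        if (n < 0 || (size_t)n >= left)
            return -1;
        used += n;
    }
    return used;
}
```

Length-prefixing each argument is one common way to let the receiving proxy server split the message without worrying about spaces inside argument values.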

Debug Launch

A debug launch starts in the same manner as a non-debug launch, with the user creating a launch configuration and filling in the dialog fields with the relevant information.

- When the user presses the debug button, the launch framework detects that debug mode has been set.

- The debug launcher instantiates the PDI implementation for the selected debugger (specified in the launch configuration Debug tab).

- The debug controller creates a TCP/IP socket bound to a random port number, and waits for an incoming connection from the external debugger. The port number is passed back to the launch framework.

- The submitJob interface on the selected resource manager is called to pass the launch information to the runtime controller. The launch proceeds in exactly the same manner as a normal launch, except that additional attributes are supplied, including the connection port number. These attributes allow the resource management system to determine how to launch the application under the control of a debugger.

- The resource manager on the target system launches the debugger specified by the attributes. In this case, the debugger launched is the SDM.

- The SDM connects to the debug controller using a port number that was supplied on the command-line. All debugger communication is via this socket.

- In the case where the application is being launched under the control of the debugger, the SDM starts the application processes.

- In the case where the SDM is attaching to an existing application, the resource management system starts the application and passes the relevant connection information to the SDM when it is launched.
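The socket step above can be sketched with POSIX sockets. PTP's debug controller lives on the Eclipse (Java) side, so this C fragment only illustrates the technique; it assumes that "random port" means a port chosen by the operating system (requested by binding to port 0), and the function name `listen_on_ephemeral_port` is hypothetical.

```c
#define _POSIX_C_SOURCE 200809L
#include <assert.h>
#include <arpa/inet.h>
#include <netinet/in.h>
#include <string.h>
#include <sys/socket.h>
#include <unistd.h>

/*
 * Create a listening TCP socket on a port chosen by the operating system.
 * On success, returns the socket descriptor and stores the port number
 * (host byte order) in *port. On failure, returns -1.
 */
int listen_on_ephemeral_port(unsigned short *port)
{
    assert(port != NULL);

    int fd = socket(AF_INET, SOCK_STREAM, 0);
    if (fd < 0)
        return -1;

    struct sockaddr_in addr;
    memset(&addr, 0, sizeof addr);
    addr.sin_family = AF_INET;
    addr.sin_addr.s_addr = htonl(INADDR_ANY); /* accept remote debuggers */
    addr.sin_port = htons(0);                 /* 0 = let the kernel pick */

    socklen_t len = sizeof addr;
    if (bind(fd, (struct sockaddr *)&addr, sizeof addr) < 0 ||
        listen(fd, 1) < 0 ||
        getsockname(fd, (struct sockaddr *)&addr, &len) < 0) {
        close(fd);
        return -1;
    }

    /* This is the value that would be handed on as a launch attribute. */
    *port = ntohs(addr.sin_port);
    return fd;
}
```

The caller would pass the returned port number along with the other launch attributes and then call `accept()` on the descriptor to wait for the debugger's connection.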

Implementation Details

The launch configuration is implemented in package org.eclipse.ptp.launch, which is built on top of Eclipse's launch configuration framework. Whether the launch starts from "Run as ..." or "Debug ...", the launch logic begins here. What distinguishes a debugger launch from a normal launch is a special debug flag.

    if (mode.equals(ILaunchManager.DEBUG_MODE)) {
        // debugger launch
    } else {
        // normal launch
    }

We sketch the steps following the launch operation.

First, a set of parameters/attributes is collected. For example, debugger-related parameters such as host, port, and debugger path are collected into the array dbgArgs.

Then, we call upon the resource manager to submit the job:

    final IResourceManager rm = getResourceManager(configuration);
    IPJob job = rm.submitJob(attrMgr, monitor);

Please refer to AbstractParallelLaunchConfigurationDelegates for more details.

The resource manager will eventually contact the back-end proxy server and pass in all the attributes necessary to submit the job. As detailed elsewhere, a proxy server usually provides a set of routines for submitting a job, cancelling a job, monitoring a job, etc., for a particular parallel computing environment. Here we use the ORTE proxy server as an example to continue the flow.

The ORTE resource manager at the front end (Eclipse side) establishes a connection with the ORTE proxy server (implemented by ptp_orte_proxy.c in package org.eclipse.ptp.orte.proxy), and the two communicate through a wire protocol (version 2.0 at this point). Control then passes to the function ORTE_SubmitJob.

ORTE packs the relevant information into a "context" structure, which is created by invoking OBJ_NEW(). Launching a job with ORTE is a two-step process: first it allocates the job, then it launches the job. The call ORTE_SPAWN combines these two steps, which is simpler and is sufficient for a normal job launch. For a debug job, the process is more complicated and is discussed separately.

    apps = OBJ_NEW(orte_app_context_t);
    apps->num_procs = num_procs;
    apps->app = full_path;
    apps->cwd = strdup(cwd);
    apps->env = env;
    ...
    if (debug) {
        rc = debug_spawn(debug_full_path, debug_argc, debug_args, &apps, 1, &ortejobid, &debug_jobid);
    } else {
        rc = ORTE_SPAWN(&apps, 1, &ortejobid, job_state_callback);
    }

The job_state_callback is a callback function registered with ORTE, and it is invoked when there is a job state change. Within this callback function, sendProcessChangeStateEvent is invoked to notify the Eclipse front end so that it can update the UI if necessary.

Now, let's turn our attention to the first case - how a debug job is launched through ORTE, and some of the complications involved.

The general flow of launching is as follows: for an N-process job, ORTE will launch N+1 SDM processes. The first N processes are called SDM servers; the (N+1)th process is called the SDM client (or master), as it coordinates the communication from the SDM servers and connects back to the Eclipse front end. Each SDM server in turn starts a real application process; in other words, there is a one-to-one mapping between SDM servers and real application processes.

So there are two sets of processes (and two jobs) that ORTE needs to be aware of: one set for the SDM servers, the other for the real application. Inside the debug_spawn() function:
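The N+1 arrangement can be expressed as a small helper. This is only a sketch: it assumes 0-based ranks and that the master is the highest-ranked SDM process (following the "N+1 th process" wording), and the names `sdm_role_t`, `sdm_process_count`, and `sdm_role` are hypothetical.

```c
#include <assert.h>

typedef enum { SDM_SERVER, SDM_MASTER } sdm_role_t;

/* Number of SDM processes needed to debug an n_app-process application. */
int sdm_process_count(int n_app)
{
    assert(n_app > 0);
    return n_app + 1; /* one server per application process, plus the master */
}

/*
 * Role of the SDM process with the given 0-based rank.
 * Ranks 0..n_app-1 are servers (each paired with one application process);
 * rank n_app is the master that connects back to the front end.
 */
sdm_role_t sdm_role(int rank, int n_app)
{
    assert(n_app > 0 && rank >= 0 && rank <= n_app);
    return rank == n_app ? SDM_MASTER : SDM_SERVER;
}
```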

rc = ORTE_ALLOCATE_JOB(app_context, num_context, &jid1, debug_app_job_state_callback);

First, we allocate the job without actually launching it; the real purpose is to obtain job id #1, which is for the application.

Next, we want to allocate the debugger job, but before doing so, we need to create a debugger job context, just as we did for the application.

    debug_context = OBJ_NEW(orte_app_context_t);
    debug_context->num_procs = app_context[0]->num_procs + 1;
    debug_context->app = debug_path;
    debug_context->cwd = strdup(app_context[0]->cwd);
    ...
    asprintf(&debug_context->argv[i++], "--jobid=%d", jid1);
    debug_context->argv[i++] = NULL;
    ...
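The argument vector construction in the fragment above can be sketched as a standalone function. The elided parts of the original are not reproduced here: the ordering of the arguments (debugger path first, then caller-supplied arguments, then the job id) is an assumption, the names `build_debug_argv` and `free_argv` are hypothetical, and `snprintf` plus `strdup` are used in place of `asprintf` because `asprintf` is not part of standard C.

```c
#define _POSIX_C_SOURCE 200809L
#include <assert.h>
#include <stdio.h>
#include <stdlib.h>
#include <string.h>

/*
 * Build a NULL-terminated argv: the debugger path, the caller's extra
 * arguments, then "--jobid=<app_jobid>". Returns NULL on allocation
 * failure. The result must be released with free_argv().
 */
char **build_debug_argv(const char *debug_path, int extra_argc,
                        const char *const extra_argv[], int app_jobid)
{
    assert(debug_path != NULL && extra_argc >= 0);

    int n = extra_argc + 2;                 /* path + extras + jobid */
    char **argv = calloc((size_t)n + 1, sizeof *argv); /* +1 for NULL */
    if (argv == NULL)
        return NULL;

    int i = 0;
    argv[i++] = strdup(debug_path);
    for (int k = 0; k < extra_argc; k++)
        argv[i++] = strdup(extra_argv[k]);

    char jobid_arg[32];
    snprintf(jobid_arg, sizeof jobid_arg, "--jobid=%d", app_jobid);
    argv[i++] = strdup(jobid_arg);
    argv[i] = NULL;

    /* If any copy failed, release everything rather than return a gap. */
    for (int k = 0; k < n; k++) {
        if (argv[k] == NULL) {
            for (int j = 0; j < n; j++)
                free(argv[j]);
            free(argv);
            return NULL;
        }
    }
    return argv;
}

/* Free a vector returned by build_debug_argv(). */
void free_argv(char **argv)
{
    if (argv == NULL)
        return;
    for (char **p = argv; *p != NULL; p++)
        free(*p);
    free(argv);
}
```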

Note that we need to pass the appropriate job id to the debugger: the application job id we obtained earlier by doing the allocation. The debugger needs this job id in order to attach to the application. Once the debugger context is ready, we allocate the debugger job by:

rc = ORTE_ALLOCATE_JOB(&debug_context, 1, &jid2, debug_job_state_callback);

Note that job id #2 is for the debugger. Finally, we can launch the debugger by invoking ORTE_LAUNCH_JOB():

    if (ORTE_SUCCESS != (rc = ORTE_LAUNCH_JOB(jid2))) {
        OBJ_RELEASE(debug_context);
        ORTE_ERROR_LOG(rc);
        return rc;
    }
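Putting the pieces together, the overall order of operations in the debug launch can be sketched with stand-in functions. The functions `stub_allocate_job` and `stub_launch_job` below are hypothetical placeholders that merely hand out job ids and record what was launched; they are not the ORTE API, and `debug_spawn_sketch` is a simplification of the flow described above, not the actual debug_spawn() implementation.

```c
#include <assert.h>
#include <stddef.h>

/* Stand-ins for ORTE_ALLOCATE_JOB / ORTE_LAUNCH_JOB; not the ORTE API. */
int next_jobid = 1;
int launched_jobid = -1;

int stub_allocate_job(int num_procs, int *jobid)
{
    if (num_procs <= 0 || jobid == NULL)
        return -1;
    *jobid = next_jobid++;   /* hand out ids in allocation order */
    return 0;
}

int stub_launch_job(int jobid)
{
    launched_jobid = jobid;  /* record which job was actually started */
    return 0;
}

/* Allocate both jobs, then launch only the debugger job. */
int debug_spawn_sketch(int app_procs, int *app_jobid, int *debug_jobid)
{
    int rc;
    assert(app_jobid != NULL && debug_jobid != NULL);

    /* 1. Allocate (but do not launch) the application job to get its id. */
    if ((rc = stub_allocate_job(app_procs, app_jobid)) != 0)
        return rc;

    /* 2. Allocate the debugger job: one SDM per process, plus the master. */
    if ((rc = stub_allocate_job(app_procs + 1, debug_jobid)) != 0)
        return rc;

    /* 3. Launch the debugger job; the SDM servers start the application. */
    return stub_launch_job(*debug_jobid);
}
```

The point of the ordering is that the application job id must exist before the debugger is started, since it is passed to the debugger on its command line.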