Notice: this Wiki will be going read only early in 2024 and edits will no longer be possible. Please see: https://gitlab.eclipse.org/eclipsefdn/helpdesk/-/wikis/Wiki-shutdown-plan for the plan.

ICE and HDF5

This area explains how NiCE uses HDF5 to persist the Reactor pieces. Treat it as a general rubric rather than a strict ruling. It assumes the reader knows a little about HDF5's structure.


Design Philosophy

HDF5 is a beast, plain and simple. It is a persistence scheme designed to handle large amounts of data in binary format. To tame this monster, a brief explanation of its structure, followed by some specific use-case scenarios, is a must for clarity and understanding.

Five of the basic classes in HDF5's data model are very important within the Reactor pieces:

- Group - Comparable to a composite; represents a collection of data and can contain other Groups as well.

- Attribute - Basic property representation. Can be stored on a Group. Contains a DataType describing the stored data.

- Dataset - A table of data of one specific DataType.

- DataType - Represents a primitive (double, integer, string, float, etc.). Also provides size information (byte size and string length).

- Compound Dataset - A Dataset that can hold multiple DataTypes.

We assigned each of these classes a general role within the Reactor pieces:

- Group - Usually represents a class or a collection of data.

- Attribute - Usually represents a property.

- Dataset - Usually represents a collection, or list, of data that ARE NOT Reactor classes, for example the GridLabelProvider's collection of GridLabels.

- DataType - Primitive type representations. These are managed within a delegation class or factory for consistency.

- Compound Dataset - Represents a collection of various datatypes.
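As a rough illustration of these rules, the sketch below maps a hypothetical assembly onto an in-memory tree:

- The class becomes a Group.
- Its properties become Attributes.
- A list of non-Reactor data becomes a Dataset.
- A child class becomes a nested Group.

`GroupNode`, `MappingExample`, and the attribute names are invented for this example. No HDF5 library is called.

```java
import java.util.ArrayList;
import java.util.LinkedHashMap;
import java.util.List;
import java.util.Map;

// Hypothetical in-memory stand-in for an HDF5 Group (not the HDF5 Java API).
class GroupNode {
    final String name;
    final Map<String, Object> attributes = new LinkedHashMap<>();     // properties
    final Map<String, List<String>> datasets = new LinkedHashMap<>(); // non-Reactor lists
    final List<GroupNode> children = new ArrayList<>();              // nested classes

    GroupNode(String name) {
        this.name = name;
    }

    GroupNode addChild(String childName) {
        GroupNode child = new GroupNode(childName);
        children.add(child);
        return child;
    }
}

class MappingExample {
    // Builds the tree for a hypothetical assembly that owns one rod and a list of labels.
    static GroupNode buildAssembly() {
        GroupNode assembly = new GroupNode("FuelAssembly");          // class -> Group
        assembly.attributes.put("size", 17);                          // property -> Attribute
        assembly.datasets.put("GridLabels", List.of("A", "B", "C")); // non-Reactor list -> Dataset
        GroupNode rod = assembly.addChild("Rod1");                    // child class -> nested Group
        rod.attributes.put("pressure", 2250.0);
        return assembly;
    }
}
```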

HDF5 within the IO Package: Factories

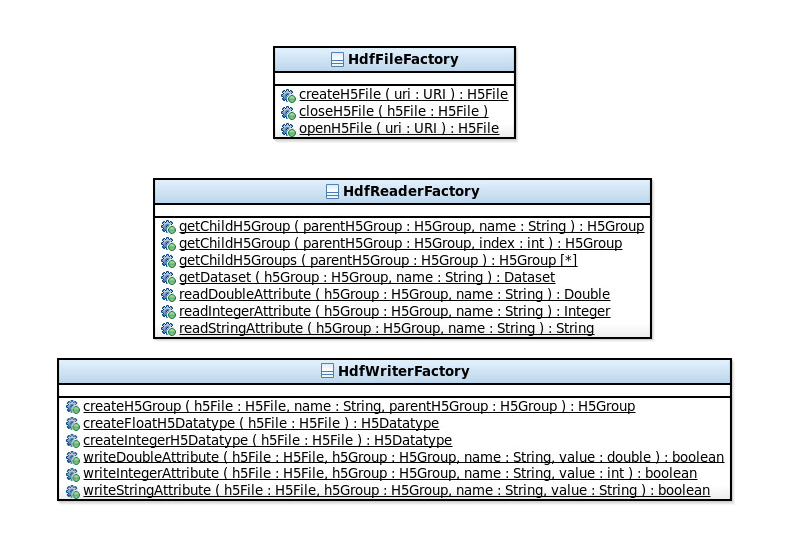

To cut down on the complexity of HDF5, we created HDF5 factories that manage the more basic operations and settings for Datatypes, Groups, H5Files, and Datasets. The diagram above represents the HDF5 factories (Java).

A breakdown of these classes:

- HdfFileFactory - Responsible for creating, opening, and closing an H5File.

- HdfReaderFactory - Responsible for reading Groups, Attributes, Datasets, and primitive types (double, String, and integer) from an H5File.

- HdfWriterFactory - Responsible for creating Groups, Datatypes, and Attributes in an H5File. Something very important to remember: there are literally over 100 ways to represent Integers, Strings, Doubles, and other primitive types within HDF5. For our methodology to work, we must pin down the exact types used for Integers, Strings, and "long" Floats (Doubles). The HdfWriterFactory and HdfReaderFactory take care of Doubles, Integers, and specific writings of Strings (Attributes) under the hood. Strings are a bit more complex. We use either FIXED or VARIABLE length strings, as needed, that are NATIVE to the operating system. See readXAttribute on HdfReaderFactory for more details.
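The sketch below illustrates why type choices are centralized. It shows a hypothetical helper that hands out one fixed datatype decision per primitive. The HDF5 predefined type names (H5T_NATIVE_DOUBLE, H5T_NATIVE_INT, H5T_C_S1) appear only as plain strings. `DatatypeChoice` and its sizing rule for fixed-length strings (longest value plus a null terminator) are assumptions, not the actual HdfWriterFactory code.

```java
import java.nio.charset.StandardCharsets;
import java.util.List;

// Hypothetical helper: every caller obtains its datatype decisions from one place,
// in the spirit of the HdfWriterFactory/HdfReaderFactory. No HDF5 library is used;
// the HDF5 predefined type names are recorded as strings.
final class DatatypeChoice {
    final String baseType;        // HDF5 predefined type name
    final int size;               // bytes per element; -1 marks variable length (H5T_VARIABLE in HDF5)
    final boolean variableLength;

    private DatatypeChoice(String baseType, int size, boolean variableLength) {
        this.baseType = baseType;
        this.size = size;
        this.variableLength = variableLength;
    }

    static DatatypeChoice forDouble() {
        return new DatatypeChoice("H5T_NATIVE_DOUBLE", 8, false);
    }

    static DatatypeChoice forInteger() {
        // Assumes a 4-byte native int, matching Java's int.
        return new DatatypeChoice("H5T_NATIVE_INT", 4, false);
    }

    // Fixed-length strings: size the type to the longest UTF-8 value plus one
    // byte for a null terminator (an assumption about padding).
    static DatatypeChoice forFixedStrings(List<String> values) {
        int longest = 0;
        for (String value : values) {
            longest = Math.max(longest, value.getBytes(StandardCharsets.UTF_8).length);
        }
        return new DatatypeChoice("H5T_C_S1", longest + 1, false);
    }

    static DatatypeChoice forVariableString() {
        return new DatatypeChoice("H5T_C_S1", -1, true);
    }
}
```

Keeping these decisions in one class means a reader can mirror exactly the types the writer chose, which is the consistency the factories are meant to provide.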

In Java, we open files with a URI, but in C++ we use string paths.
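The two sides identify files differently. Java code that hands a file to the C++ side therefore has to turn its URI into a plain path string. The sketch below assumes a local `file:` URI. `FileLocation` and `toNativePath` are hypothetical names, not part of NiCE. The sketch relies only on the standard `java.nio.file.Paths.get(URI)` conversion.

```java
import java.net.URI;
import java.nio.file.Paths;

// Hypothetical helper: convert the URI used on the Java side into the
// plain path string a C++ reader would expect.
class FileLocation {
    static String toNativePath(URI uri) {
        // Only local file: URIs map onto a filesystem path.
        if (!"file".equals(uri.getScheme())) {
            throw new IllegalArgumentException("Only file: URIs map to a local path: " + uri);
        }
        return Paths.get(uri).toString();
    }
}
```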

HDF5 within the IO Package: Interfaces

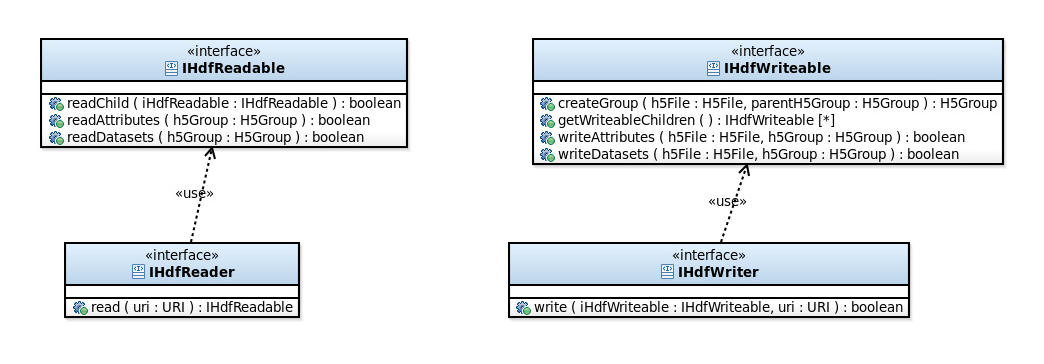

To persist and load HDF5 files, we created a collection of interfaces that delegate HDF5 reading and writing in NiCE. They are shown in the diagram above.

Breakdown of the classes:

- IHdfReader - Designed to be called when a user wants to READ an HDF5 file.

- IHdfWriter - Designed to be called when a user wants to WRITE an HDF5 file.

- IHdfReadable - Implemented by a class that has children (Groups), attributes, or datasets to be read.

- IHdfWriteable - Implemented by a class that has children (Groups), attributes, or datasets to be written.

As the names suggest, a class uses only the readable counterparts for a read operation and only the writeable counterparts for a write operation. Since Groups usually correspond to classes, IHdfReadable and IHdfWriteable are implemented by the classes that need to be manipulated with HDF5. The Reader and Writer work recursively on the Readables and Writeables, calling the respective operations for their attributes, datasets, and groups. Note that IHdfReader and IHdfWriter have separate implementations. Each Reactor subclass implementation (PWR, BWR, SFR, etc.) should also have its own Reader and Writer.
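The recursion described above can be sketched as follows. For each object, the writer creates a Group and lets the object write its own attributes. It then recurses into the object's writeable children. `Writeable`, `getWriteableChildren`, `GroupStub`, and `RecursiveWriter` are hypothetical stand-ins in the spirit of IHdfWriteable and IHdfWriter. The real NiCE method names and signatures may differ, and no HDF5 library is called.

```java
import java.util.ArrayList;
import java.util.Arrays;
import java.util.LinkedHashMap;
import java.util.List;
import java.util.Map;

// Hypothetical stand-in for an HDF5 Group.
class GroupStub {
    final String name;
    final Map<String, String> attributes = new LinkedHashMap<>();
    final List<GroupStub> groups = new ArrayList<>();

    GroupStub(String name) {
        this.name = name;
    }
}

// Hypothetical interface in the spirit of IHdfWriteable.
interface Writeable {
    String getName();
    void writeAttributes(GroupStub group);
    List<Writeable> getWriteableChildren();
}

// Hypothetical writer: one Group per object, the object writes its own
// attributes, then the writer recurses into the object's children.
class RecursiveWriter {
    static GroupStub write(Writeable object, GroupStub parent) {
        GroupStub group = new GroupStub(object.getName());
        parent.groups.add(group);
        object.writeAttributes(group);
        for (Writeable child : object.getWriteableChildren()) {
            write(child, group);
        }
        return group;
    }
}

// Minimal component used to exercise the writer.
class Component implements Writeable {
    private final String name;
    private final List<Writeable> children;

    Component(String name, Writeable... children) {
        this.name = name;
        this.children = Arrays.asList(children);
    }

    public String getName() { return name; }
    public void writeAttributes(GroupStub group) { group.attributes.put("name", name); }
    public List<Writeable> getWriteableChildren() { return children; }
}
```

Writing `new Component("Reactor", new Component("Assembly", new Component("Rod")))` into an empty root produces the nested groups Reactor, Assembly, Rod. Each object only knows about itself and its direct children.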

The Readable and Writeable interfaces are object-oriented (OO) in nature. Classes whose properties differ from those of their superclass should handle those properties in read/writeAttributes. The same goes for delegation (child) classes and for Datasets (read/writeDatasets). If a developer follows this pattern correctly, the HDF5 file will be hierarchical and can be read and written at a moment's notice.
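One way to follow this OO pattern is for each class to write only the properties it adds and to delegate inherited ones to its superclass. The sketch below is minimal and assumes attributes can be modeled as a simple name-to-value map. `BaseComponent`, `FuelRod`, and their fields are hypothetical.

```java
import java.util.Map;

// Hypothetical base class: writes the properties it declares.
class BaseComponent {
    String name = "component";

    void writeAttributes(Map<String, Object> attributes) {
        attributes.put("name", name);
    }
}

// Hypothetical subclass: delegates inherited properties to the superclass,
// then writes only the property it adds.
class FuelRod extends BaseComponent {
    double pressure = 2250.0;

    @Override
    void writeAttributes(Map<String, Object> attributes) {
        super.writeAttributes(attributes);     // inherited properties
        attributes.put("pressure", pressure);  // property added by this subclass
    }
}
```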

The implementations of these interfaces should use the factories whenever possible to handle most of the HDF5 calls. That said, Datasets and Compound Datasets are always special cases and need to be handled individually in the read/writeDatasets operations.

GridManager: Implementation Breakdown

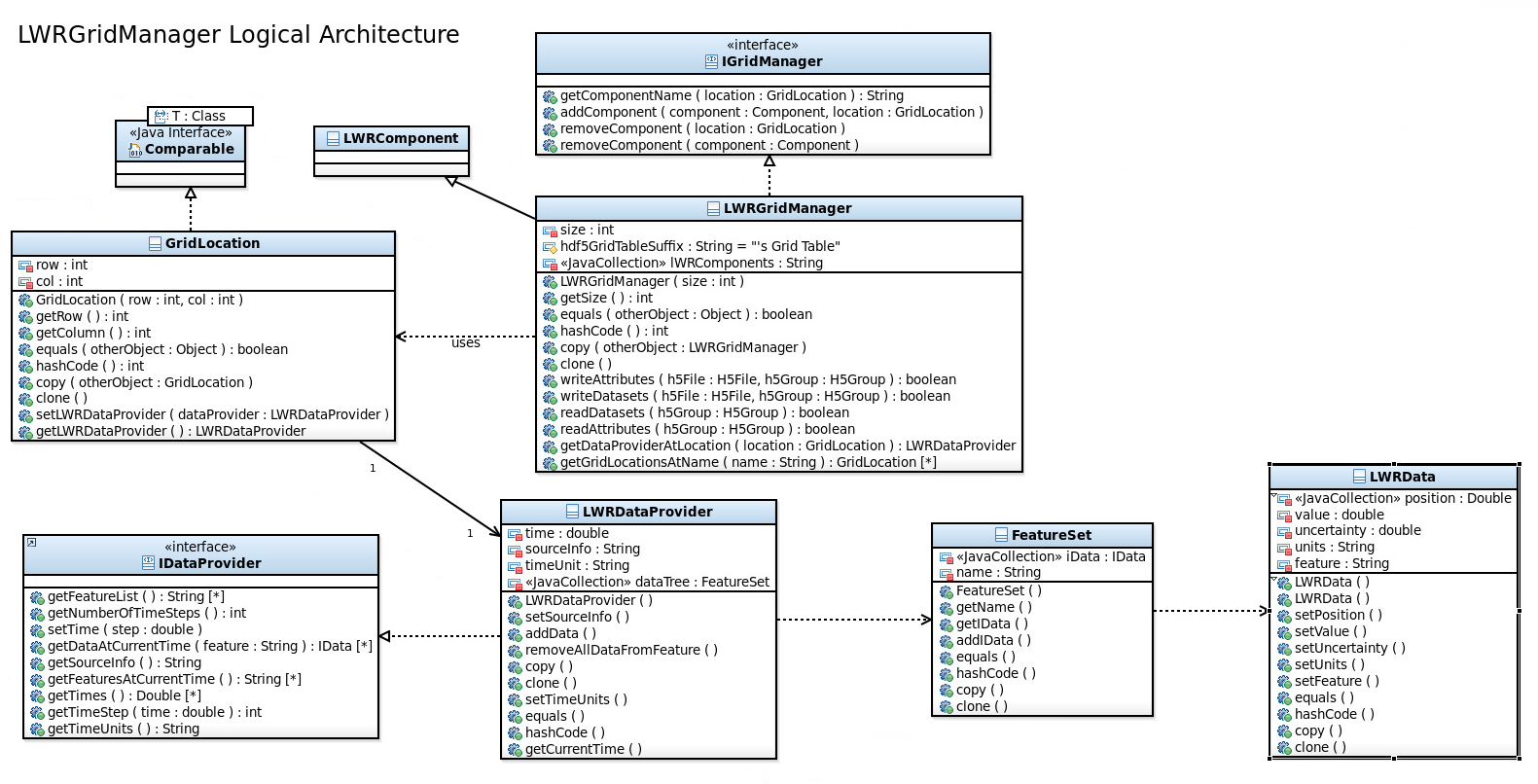

LWRGridManager, or GridManager, is an implementation responsible for storing state point data for specific positions. This utility is the preferred method for storing state point data across the positions within a grid. Currently, the Reactor and the Assemblies hold GridManagers internally and expose them through their API.

A key point to remember about GridManagers is that they store pre-determined instances of completed objects at multiple locations. At the assembly level, there are multiple GridManagers so that different types of assemblies can be stored at the same location. However, a GridManager SHOULD NOT allow two Assemblies of the same type to be stored at the same location.

GridManagers are designed to reduce the overall amount of data, because realistically there are only so many unique instances of Assemblies or Rods. These unique instances make up a small percentage of all locations within a grid (usually less than 10 percent). A GridManager takes a name and a location and stores ONLY the state point data, the data that changes, at that specific location. Within that location, features such as pin powers or material densities for a rod can vary.
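The storage idea can be sketched as follows. Each location holds only the name of a unique component, and the full component objects would be stored once elsewhere under the same name. This is a minimal sketch assuming a square grid. `GridLocation`, `SimpleGridManager`, and the rule that an occupied location rejects a second component are hypothetical simplifications, not the LWRGridManager API.

```java
import java.util.HashMap;
import java.util.Map;
import java.util.Objects;

// Hypothetical grid coordinate used as a map key.
final class GridLocation {
    final int row;
    final int col;

    GridLocation(int row, int col) {
        this.row = row;
        this.col = col;
    }

    @Override
    public boolean equals(Object o) {
        if (!(o instanceof GridLocation)) {
            return false;
        }
        GridLocation other = (GridLocation) o;
        return row == other.row && col == other.col;
    }

    @Override
    public int hashCode() {
        return Objects.hash(row, col);
    }
}

// Hypothetical, simplified manager: each location stores only a component NAME.
class SimpleGridManager {
    private final int size;
    private final Map<GridLocation, String> namesByLocation = new HashMap<>();

    SimpleGridManager(int size) {
        this.size = size;
    }

    // Rejects locations outside the grid and locations that are already occupied.
    boolean addComponent(String name, GridLocation location) {
        if (location.row < 0 || location.col < 0 || location.row >= size || location.col >= size) {
            return false;
        }
        return namesByLocation.putIfAbsent(location, name) == null;
    }

    String getComponentName(GridLocation location) {
        return namesByLocation.get(location);
    }

    // Number of distinct component names actually referenced by the grid.
    long uniqueNameCount() {
        return namesByLocation.values().stream().distinct().count();
    }
}
```

On a 17 x 17 grid filled with two rod types, 289 locations reference just two names. That is the kind of saving the GridManager design relies on.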

Each GridLocation stores an LWRDataProvider, an implementation of IDataProvider, which holds a collection of unique features across multiple time steps. In other words:

- GridLocations have a 1-1 relationship with LWRDataProviders. A provider, which is used by the GridLocation, has many time steps.

- Time steps have many FeatureSets.

- FeatureSets have many LWRDatas (Implementation of IData).

The image above represents the class diagram, or the associations between the classes.
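The provider, time step, feature set, and data hierarchy can be sketched as nested maps. This is a minimal sketch only. `DataEntry` and `SimpleDataProvider` are hypothetical simplifications of LWRData and LWRDataProvider. Keying time steps by an integer index is an assumption.

```java
import java.util.ArrayList;
import java.util.List;
import java.util.Map;
import java.util.TreeMap;

// Hypothetical, simplified data entry playing the role of LWRData (IData).
final class DataEntry {
    final String feature;
    final double value;
    final double uncertainty;
    final String units;

    DataEntry(String feature, double value, double uncertainty, String units) {
        this.feature = feature;
        this.value = value;
        this.uncertainty = uncertainty;
        this.units = units;
    }
}

// Hypothetical provider: time step -> feature name -> data entries.
class SimpleDataProvider {
    private final Map<Integer, Map<String, List<DataEntry>>> timeSteps = new TreeMap<>();

    void addData(int timeStep, DataEntry entry) {
        timeSteps.computeIfAbsent(timeStep, t -> new TreeMap<>())
                 .computeIfAbsent(entry.feature, f -> new ArrayList<>())
                 .add(entry);
    }

    // Returns an empty list when the time step or feature is absent.
    List<DataEntry> getData(int timeStep, String feature) {
        return timeSteps.getOrDefault(timeStep, Map.of()).getOrDefault(feature, List.of());
    }

    int getTimeStepCount() {
        return timeSteps.size();
    }
}
```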

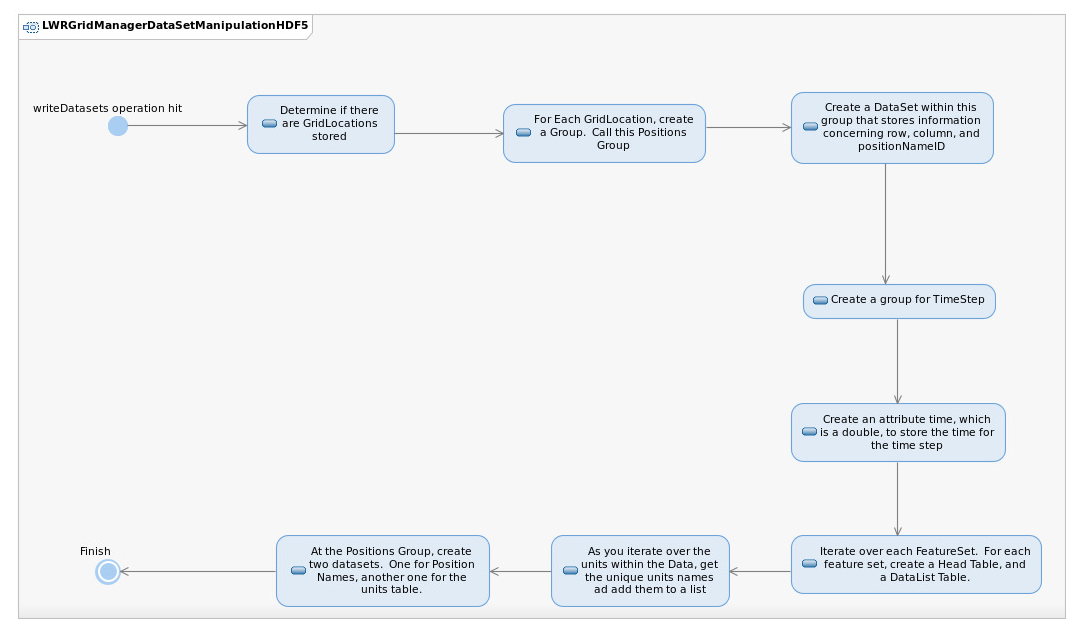

GridManager: read/writeDatasets operation

The GridManager's read/writeDatasets operation is a bit complex and requires a thorough explanation.

HDF5 is a complex beast, and file I/O is inherently slow compared to other forms of data storage (RAM, cache, etc.). Since there is a large amount of state point data spread across many rods, we needed an efficient way to store it.

Here is a text explanation of the "Positions Group" that stores the state point data AND positional data for the LWRGridManager.

Group: Position Group

- [Many] Group: Position row col (row and col are numeric values)
  - [One] Dataset: position (size 3: row, col, and positionNameID)
  - [Many] Group: TimeStep X (X is the time step number)
    - [One] Attribute: time (double)
    - [Many] Dataset: Head Table (one per feature name)
    - [Many] Dataset: DataList Table (one per feature name)
- [One] Dataset: Position Names (names of the unique LWRComponents at locations; referenced by the positionNameID of each Position row col group)
- [One] Dataset: Units Names (unit names from IData.getUnits())
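The sketch below shows how the Position Names dataset and each group's `position` dataset fit together. Every distinct component name is stored once, and each position refers to it by index. Plain Java collections stand in for the HDF5 datasets. `PositionTableBuilder` is hypothetical. The group name format "Position <row> <col>" (space-separated) is an assumption based on the layout above.

```java
import java.util.ArrayList;
import java.util.HashMap;
import java.util.LinkedHashMap;
import java.util.List;
import java.util.Map;

// Hypothetical builder for the Position Names table and per-position datasets.
class PositionTableBuilder {
    final List<String> positionNames = new ArrayList<>();              // "Position Names" dataset
    final Map<String, int[]> positionDatasets = new LinkedHashMap<>(); // group name -> "position"
    private final Map<String, Integer> idsByName = new HashMap<>();

    void addPosition(int row, int col, String componentName) {
        // Reuse the ID of a name already in Position Names, or append a new one.
        Integer id = idsByName.get(componentName);
        if (id == null) {
            id = positionNames.size();
            positionNames.add(componentName);
            idsByName.put(componentName, id);
        }
        // The size-3 "position" dataset: row, col, positionNameID.
        positionDatasets.put("Position " + row + " " + col, new int[] {row, col, id});
    }
}
```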

Breaking down the Head and DataList Tables

Note: N is the number of IData (LWRData) entries with the same feature name at a particular time step. Each dataset name is prefixed with its feature name, so a TimeStep group contains many Head and DataList datasets, one of each per feature.

Head Table:

- 2 * N relationship, stored as longs.

- First position holds the index into the DataList table.

- The second position represents the ID for the UnitsNames table (IData.getUnits())

DataList Table:

- 5 * N relationship, stored as doubles.

- First position represents Value

- Second position represents Uncertainty

- Third position represents position X value

- Fourth position represents position Y value

- Fifth position represents position Z value
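The two layouts can be illustrated by encoding one feature's entries at one time step into flat arrays. Head holds 2 * N longs and DataList holds 5 * N doubles. Units are looked up in, or appended to, a shared Units Names list. This is a minimal sketch with two assumptions: each row is flattened row-major into a 1-D array, and the Head table's first column is the row index into DataList. `FeatureTableEncoder` and `Entry` are hypothetical.

```java
import java.util.List;

// Hypothetical encoder for one feature's Head and DataList tables at one time step.
class FeatureTableEncoder {
    // Hypothetical stand-in for one LWRData entry.
    static final class Entry {
        final double value, uncertainty, x, y, z;
        final String units;

        Entry(double value, double uncertainty, String units, double x, double y, double z) {
            this.value = value;
            this.uncertainty = uncertainty;
            this.units = units;
            this.x = x;
            this.y = y;
            this.z = z;
        }
    }

    final long[] head;       // 2 * N longs: [DataList row index, Units Names ID]
    final double[] dataList; // 5 * N doubles: [value, uncertainty, x, y, z]

    // unitsNames plays the role of the shared "Units Names" dataset; new units are appended.
    FeatureTableEncoder(List<Entry> entries, List<String> unitsNames) {
        int n = entries.size();
        head = new long[2 * n];
        dataList = new double[5 * n];
        for (int i = 0; i < n; i++) {
            Entry e = entries.get(i);
            int unitsId = unitsNames.indexOf(e.units);
            if (unitsId < 0) {
                unitsId = unitsNames.size();
                unitsNames.add(e.units);
            }
            head[2 * i] = i;           // row index into the DataList table
            head[2 * i + 1] = unitsId; // ID into the Units Names table
            dataList[5 * i] = e.value;
            dataList[5 * i + 1] = e.uncertainty;
            dataList[5 * i + 2] = e.x;
            dataList[5 * i + 3] = e.y;
            dataList[5 * i + 4] = e.z;
        }
    }
}
```

Storing each unit string once and referencing it by ID keeps the per-entry rows purely numeric. This fits the goal of writing large amounts of state point data efficiently.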

NiCEIO Package: C++

This area answers a basic question: how do I compile the NiCEIO C++ package?

The entire NiCEIO package for C++ is stored in two locations within the trunk: src/native and tests/native.

In order to compile NiCEIO, you need the following tools:

- CMake 2.8 or later.

- Boost 1.35 or later.

- HDF5 with C++ support.

The build relies on CMake to locate Boost and HDF5. You may be using a local install of HDF5 or Boost, or you may wish to override the system-wide installs of these libraries. In either case, set the following variables in your .bashrc or on the command line to point the build at the right install directories:

- HDF5_ROOT=/path/to/hdf5/install

- BOOST_ROOT=/path/to/boost/install

When you check out NiCEIO, it's easier to check out the entire trunk, work from there, and do an entire install of NiCE. You can check out only the source code if you wish. In that case, the root CMakeLists.txt in the trunk will need to be modified to remove the tests.

NiCEIO also supports out-of-source builds, and it can be tested and installed.

NiCEIO && Denovo Coupling

First note: this only works on u233, and you will STILL need to contact a Denovo representative for specific files.

Step 1: Install NiCEIO locally (yes, run make install).

Step 2: Set up your .bashrc like this:

```bash
# .bashrc

# Source global definitions
if [ -f /etc/bashrc ]; then
    . /etc/bashrc
fi

# User specific aliases and functions
. /projects/amp/packages/ampscripts/ampsetupgnu451.sh
. /opt/casl_vri_dev_env/fissile_four/build_scripts/load_official_dev_env.sh

export BOOST_ROOT=/opt/gcc-4.5.1/tpls/boost-1.46.1

export SCALE=/home/<userName>/source/VERA/Scale
export DATA=/home/<userName>/source/VERA/Scale/test_data
```

SCALE, DATA, and BOOST_ROOT are extremely important.

Step 3: Create the following folders in your home directory: source and build.

Step 4: Check out Denovo! (Disclaimer: this may not be up to date. Contact a Denovo representative if it does not work for you!)

Try this:

- In your source/ directory, git clone casl-dev.ornl.gov:/git-root/VERA

- In your source/VERA directory, git clone angmar.ornl.gov:/data/git/Exnihilo

- In your source/VERA directory, git clone casl-dev.ornl.gov:/git-root/Scale

- In your source/VERA directory, git clone casl-dev.ornl.gov:/git-root/Trilinos

- In your build/ directory, put your do-configure script

Step 5: Do-configure script:

```bash
#!/bin/bash

EXTRA_ARGS=$@

if [ "$VERA_DIR" == "" ] ; then
  VERA_DIR=../../../VERA
fi

VERA_DIR_ABS=/home/<USERNAME>/source/VERA
echo "VERA_DIR_ABS = $VERA_DIR_ABS"

CONFIG_FILES_BASE=$VERA_DIR_ABS/Trilinos/cmake/ctest/drivers/pu241

cmake \
  -D VERA_CONFIGURE_OPTIONS_FILE:FILEPATH="$CONFIG_FILES_BASE/gcc-4.6.1-mpi-debug-ss-options.cmake,$VERA_DIR_ABS/cmake/ctest/drivers/fissile4/vera-tpls-gcc.4.6.1.cmake" \
  -D VERA_ENABLE_KNOWN_EXTERNAL_REPOS_TYPE:STRING=Continuous \
  -D VERA_IGNORE_MISSING_EXTRA_REPOSITORIES:BOOL=TRUE \
  -D VERA_ENABLE_Insilico:BOOL=ON \
  -D CMAKE_BUILD_TYPE:STRING=DEBUG \
  -D BUILD_SHARED_LIBS:BOOL=ON \
  -D TPL_ENABLE_BinUtils:BOOL=OFF \
  -D HDF5_LIBRARY_DIRS:FILEPATH="/opt/gcc-4.6.1/tpls/hdf5-1.8.9/lib" \
  -D HDF5_LIBRARY_NAMES:STRING="hdf5_hl hdf5 hdf5_cpp hdf5_fortran" \
  -D HDF5_INCLUDE_DIRS:FILEPATH="/opt/gcc-4.6.1/tpls/hdf5-1.8.9/include" \
  -D TPL_ENABLE_HDF5:BOOL=ON \
  -D TPL_ENABLE_PETSC:BOOL=OFF \
  -D TPL_ENABLE_BOOST:BOOL=ON \
  -D TPL_ENABLE_NICE:BOOL=ON \
  -D NICE_LIBRARY_DIRS:FILEPATH="/home/<USERNAME>/installDir/lib" \
  -D NICE_LIBRARY_NAMES:STRING="NiCEReactor NiCEIO" \
  -D NICE_INCLUDE_DIRS:FILEPATH="/home/<USERNAME>/installDir/include" \
  -D VERA_ENABLE_TESTS:BOOL=ON \
  -D VERA_ENABLE_EXPLICIT_INSTANTIATION:BOOL=ON \
  -D Teuchos_ENABLE_DEFAULT_STACKTRACE:BOOL=ON \
  -D DART_TESTING_TIMEOUT:STRING=180.0 \
  -D CTEST_BUILD_FLAGS:STRING="-j16" \
  -D CTEST_PARALLEL_LEVEL:STRING="16" \
  $EXTRA_ARGS \
  ${VERA_DIR_ABS}

# Above, I am using the core build options for CASL development but I am doing
# a true debug, with explicit instantiation, stack tracing, etc.
```

You'll have to make your do-configure script executable (chmod +x do-configure) before you can run it.

Step 6:

- Move the do-configure script into the build directory.

- cd into the build directory.

- Run ./do-configure, then make -j33.

Hopefully, it will work. Modify the <USERNAME> or <userName> tags as needed.

Step 7:

- Get a pX.xml file, a .sh5 CASL file, and a SequenceInfo.xml file. Copy these files into the ~/build/Exnihilo/packages/Insilico/neutronics directory.

- cd into that directory.

- Run neutronics -i pX.xml, where X is the number of the problem stored in that directory.

Step 8:

If all else fails, contact a Denovo representative.

Other HDF5 Tidbits

For examples, problems, and solutions concerning some of the more "in depth" pieces of HDF5, please see the wiki article Challenges Using HDF5 and Java.