Notice: this Wiki will be going read only early in 2024 and edits will no longer be possible. Please see: https://gitlab.eclipse.org/eclipsefdn/helpdesk/-/wikis/Wiki-shutdown-plan for the plan.

SMILA/Documentation/Importing/Crawler/Web


Revision as of 09:12, 18 May 2012

Currently, the web crawler workers have a very simple implementation, intended for testing the importing framework. A more sophisticated implementation will hopefully follow soon.


Web Crawler Worker

- Worker name: webCrawler

- Parameters:

  - dataSource: name of the data source; currently used only to mark the produced records.

  - startUrl: URL to start crawling at. Must be a valid URL; no additional escaping is done.

  - filter: a map containing link filter settings, i.e. instructions on which links to include in or exclude from the crawl. This parameter is optional. At the moment, it can contain a single setting:

    - urlPrefix: include only links that start with exactly this string (case-sensitive).

- Task generator: runOnceTrigger

- Input slots:

  - linksToCrawl: Records describing links to crawl.

- Output slots:

  - linksToCrawl: Records describing outgoing links from the crawled resources. Should be connected to the same bucket as the input slot.

  - crawledRecords: Records describing crawled resources. For resources of mimetype text/html, the records have the content attached. For other resources, use a webFetcher worker later in the workflow to get the content.
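Because the startUrl is used exactly as given, it must already be a well-formed URL. Below is a minimal sketch of such a check. It assumes the parameter is validated with java.net.URI and that the initial record carries the URL in the httpUrl attribute. Whether SMILA does it this way is not stated here. The class name StartUrlCheck and the "_source" attribute key are hypothetical.

```java
import java.net.URI;
import java.net.URISyntaxException;
import java.util.HashMap;
import java.util.Map;

/** Hypothetical sketch: validate startUrl as-is and build the initial link record. */
final class StartUrlCheck {

  /** True if the string parses, unchanged, as an absolute URI with a host. */
  static boolean isValidStartUrl(String startUrl) {
    try {
      URI uri = new URI(startUrl); // no escaping: illegal characters make this throw
      return uri.isAbsolute() && uri.getHost() != null;
    } catch (URISyntaxException e) {
      return false;
    }
  }

  /** Builds the record for the first task; "_source" is an assumed attribute name. */
  static Map<String, Object> initialRecord(String dataSource, String startUrl) {
    if (!isValidStartUrl(startUrl)) {
      throw new IllegalArgumentException("Invalid startUrl: " + startUrl);
    }
    Map<String, Object> record = new HashMap<>();
    record.put("httpUrl", startUrl);
    record.put("_source", dataSource); // dataSource only marks produced records
    return record;
  }

  public static void main(String[] args) {
    System.out.println(isValidStartUrl("http://www.eclipse.org/smila/")); // true
    System.out.println(isValidStartUrl("http://www.eclipse.org/a b"));    // false
  }
}
```

With this kind of check, a URL containing an unescaped space is rejected rather than silently escaped. Such characters therefore have to be percent-encoded (e.g. %20) in the job parameters.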

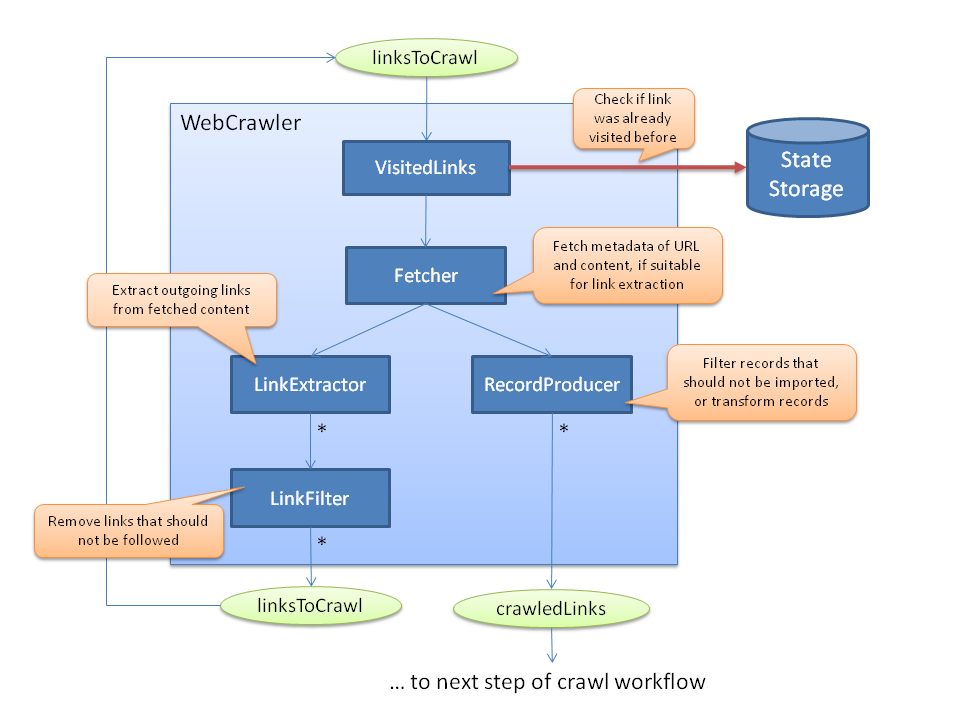

Internal structure

To make the web crawler easier to extend and improve, it is divided internally into components. Each component is a single OSGi service that handles one part of the crawl functionality and can be exchanged individually to improve that part. The architecture looks like this:

The WebCrawler worker is started with one input bulk that contains records with URLs to crawl. The exception to this rule is the start of the crawl process: there, the worker gets a task without an input bulk and generates the input record from the task parameters (or, in a later version, from configuration). Then the components are executed like this:

- First, the VisitedLinksService is asked whether this link was already crawled by another task in this crawl job run. If so, the record is dropped and no output is produced.

- Otherwise, the Fetcher is called to get the metadata, and also the content if the content type of the resource is suitable for link extraction. For other resources, the content is fetched only later in the crawl workflow by the WebFetcher worker, to save IO load.

- If the resource could be fetched without problems, the RecordProducer is called to decide whether this record should be written to the crawledRecords output bulk. The producer can also modify the record or split it into multiple records, if the use case requires it.

- If the content of the resource was fetched, the LinkExtractor is called to extract outgoing links (e.g. by looking for <A> tags). It can produce multiple link records, each containing one absolute outgoing URL.

- If outgoing links were found, the LinkFilter is called to remove duplicates and links that should not be followed (e.g. because they point to a different site).

Finally, when all records from an input bulk have been processed, all links visited in this task must be marked as "visited" in the VisitedLinksService.
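The component sequence described above can be sketched as follows. This is a simplified, hypothetical version. The interfaces only borrow the component names and do not reproduce the real SMILA service signatures. Records are plain Java maps, with the httpContent attachment modeled as a map entry. InMemoryVisitedLinks merely stands in for the ObjectStoreVisitedLinksService.

```java
import java.util.ArrayList;
import java.util.HashSet;
import java.util.List;
import java.util.Map;
import java.util.Set;

// Simplified, hypothetical component interfaces; records are plain maps.
interface VisitedLinksService {
  boolean isVisited(String url);

  void markVisited(String url);
}

interface Fetcher {
  /** Returns the record with metadata (and content, if suitable), or null on failure. */
  Map<String, Object> fetch(Map<String, Object> link);
}

interface RecordProducer {
  List<Map<String, Object>> produce(Map<String, Object> fetched);
}

interface LinkExtractor {
  List<Map<String, Object>> extractLinks(Map<String, Object> fetched);
}

interface LinkFilter {
  List<Map<String, Object>> filterLinks(List<Map<String, Object>> links, Map<String, Object> source);
}

/** In-memory stand-in for ObjectStoreVisitedLinksService. */
final class InMemoryVisitedLinks implements VisitedLinksService {
  private final Set<String> urls = new HashSet<>();

  public boolean isVisited(String url) {
    return urls.contains(url);
  }

  public void markVisited(String url) {
    urls.add(url);
  }
}

/** Runs the components in the described order for one input bulk. */
final class CrawlTaskSketch {
  final List<Map<String, Object>> crawledRecords = new ArrayList<>();
  final List<Map<String, Object>> linksToCrawl = new ArrayList<>();

  void process(List<Map<String, Object>> inputBulk, VisitedLinksService visited, Fetcher fetcher,
      RecordProducer producer, LinkExtractor extractor, LinkFilter filter) {
    List<String> visitedInTask = new ArrayList<>();
    for (Map<String, Object> link : inputBulk) {
      String url = (String) link.get("httpUrl");
      if (visited.isVisited(url)) {
        continue; // already crawled in this job run: drop, no output
      }
      visitedInTask.add(url);
      Map<String, Object> fetched = fetcher.fetch(link);
      if (fetched == null) {
        continue; // resource could not be fetched
      }
      crawledRecords.addAll(producer.produce(fetched));
      if (fetched.get("httpContent") != null) { // content only present for suitable types
        List<Map<String, Object>> outgoing = extractor.extractLinks(fetched);
        if (!outgoing.isEmpty()) {
          linksToCrawl.addAll(filter.filterLinks(outgoing, fetched));
        }
      }
    }
    // Only after the whole bulk has been processed: mark this task's links as visited.
    for (String url : visitedInTask) {
      visited.markVisited(url);
    }
  }
}
```

Marking links as visited only at the end of the bulk follows the rule stated above. One consequence is that a URL occurring twice in the same bulk would be processed twice in this sketch.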

Outgoing links are split into multiple bulks to improve scaling: each outgoing link from the initial task (the one that crawls the startUrl) is written to its own bulk, while outgoing links from later tasks are split into bulks of 10 links each. The crawled records are divided into bulks of at most 100 records, but this usually has no effect because each incoming link produces at most one record.
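These bulk sizes amount to a simple partitioning step. The sketch below assumes outputs are plain lists. BulkSplitter and its methods are hypothetical names; only the sizes 1, 10 and 100 come from the text.

```java
import java.util.ArrayList;
import java.util.List;

/** Hypothetical sketch of splitting worker output into bulks. */
final class BulkSplitter {
  static final int LINKS_PER_BULK = 10;
  static final int RECORDS_PER_BULK = 100;

  /** Splits items into consecutive chunks of at most maxSize elements. */
  static <T> List<List<T>> split(List<T> items, int maxSize) {
    if (maxSize < 1) {
      throw new IllegalArgumentException("maxSize must be >= 1");
    }
    List<List<T>> bulks = new ArrayList<>();
    for (int start = 0; start < items.size(); start += maxSize) {
      int end = Math.min(start + maxSize, items.size());
      bulks.add(new ArrayList<>(items.subList(start, end)));
    }
    return bulks;
  }

  /** Initial (startUrl) task: one link per bulk; later tasks: 10 links per bulk. */
  static <T> List<List<T>> splitLinks(List<T> links, boolean isInitialTask) {
    return split(links, isInitialTask ? 1 : LINKS_PER_BULK);
  }

  /** Crawled records: at most 100 per bulk. */
  static <T> List<List<T>> splitCrawledRecords(List<T> records) {
    return split(records, RECORDS_PER_BULK);
  }

  public static void main(String[] args) {
    List<Integer> links = new ArrayList<>();
    for (int i = 0; i < 25; i++) {
      links.add(i);
    }
    System.out.println(splitLinks(links, true).size());  // 25
    System.out.println(splitLinks(links, false).size()); // 3 (10 + 10 + 5)
  }
}
```

Writing each link from the start page to its own bulk lets the first level of the crawl fan out into as many parallel tasks as there are links.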

Example implementation

Currently, the example implementation of the crawler component services is very simple. It is not suitable for production use, but it should suffice to demonstrate the concepts.

- ObjectStoreVisitedLinksService (implements VisitedLinksService): Uses the ObjectStoreService to store which links have been visited, similar to the ObjectStoreDeltaService. It uses a configuration file with the same properties in the same configuration directory, but named visitedlinksstore.properties.

- SimpleFetcher: Uses a GET request to read the URL. It does not follow redirects, perform authentication, or do anything else advanced. It writes the content to attachment httpContent if the resource is of mimetype text/html, and sets the following attributes:

  - httpSize: value of HTTP header Content-Length (-1 if not set), as a Long value.

  - httpContenttype: value of HTTP header Content-Type, if set.

  - httpMimetype: mimetype part of HTTP header Content-Type, if set.

  - httpCharset: charset part of HTTP header Content-Type, if set.

  - httpLastModified: value of HTTP header Last-Modified, if set, as a DateTime value.

  - _isCompound: set to true for resources that the running CompoundExtractor service identifies as extractable compound objects.

- SimpleRecordProducer: Sets the record source and calculates the _deltaHash value for the DeltaChecker worker (the first applicable rule wins):

  - if content is attached, calculate a digest.

  - if the httpLastModified attribute is set, use it as the hash.

  - if the httpSize attribute is set, concatenate it with the value of the httpMimetype attribute and use the result as the hash.

  - if nothing works, create a UUID to force an update.

- SimpleLinkExtractor (implements LinkExtractor): Simple link extraction from HTML <A href="..."> tags using the tagsoup HTML parser.

- SimpleLinkFilter: Removes fragment parts ("#...") from URLs, filters out URLs with parameters ("?...") and removes duplicates (case-sensitive). It also filters out links to hosts other than the host of the link from which they were extracted (case-insensitive, but the complete host part of the URL must match). Additionally, if the filter->urlPrefix parameter is set, all links that do not start with exactly the specified string are removed.
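The following JDK-only sketch mirrors the link extraction and link filter rules above. It swaps in the javax.swing.text.html parser for tagsoup so that it runs without extra dependencies. LinkSketch and its methods are hypothetical. Relative href values are resolved against the source URL so that every extracted link is absolute.

```java
import java.io.IOException;
import java.io.StringReader;
import java.io.UncheckedIOException;
import java.net.URI;
import java.net.URISyntaxException;
import java.util.ArrayList;
import java.util.LinkedHashSet;
import java.util.List;
import java.util.Set;
import javax.swing.text.MutableAttributeSet;
import javax.swing.text.html.HTML;
import javax.swing.text.html.HTMLEditorKit;
import javax.swing.text.html.parser.ParserDelegator;

/** Hypothetical sketch of simple link extraction and filtering. */
final class LinkSketch {

  /** Collects href values of <a> tags, resolved to absolute URLs against baseUrl. */
  static List<String> extractLinks(String baseUrl, String html) {
    URI base = URI.create(baseUrl);
    List<String> links = new ArrayList<>();
    HTMLEditorKit.ParserCallback callback = new HTMLEditorKit.ParserCallback() {
      @Override
      public void handleStartTag(HTML.Tag tag, MutableAttributeSet attrs, int pos) {
        Object href = attrs.getAttribute(HTML.Attribute.HREF);
        if (tag == HTML.Tag.A && href != null) {
          try {
            links.add(base.resolve(href.toString()).toString());
          } catch (IllegalArgumentException e) {
            // skip href values that are not valid URIs
          }
        }
      }
    };
    try {
      new ParserDelegator().parse(new StringReader(html), callback, true);
    } catch (IOException e) {
      throw new UncheckedIOException(e);
    }
    return links;
  }

  /** Applies the SimpleLinkFilter rules; urlPrefix may be null. */
  static List<String> filterLinks(String sourceUrl, List<String> links, String urlPrefix) {
    String sourceHost = hostOf(sourceUrl);
    Set<String> kept = new LinkedHashSet<>(); // drops exact (case-sensitive) duplicates, keeps order
    for (String link : links) {
      int hash = link.indexOf('#');
      String url = hash >= 0 ? link.substring(0, hash) : link; // strip "#..." fragment
      if (url.contains("?")) {
        continue; // drop URLs with parameters
      }
      String host = hostOf(url);
      if (host == null || sourceHost == null || !host.equalsIgnoreCase(sourceHost)) {
        continue; // drop links to other hosts (complete host must match)
      }
      if (urlPrefix != null && !url.startsWith(urlPrefix)) {
        continue; // optional filter->urlPrefix, case-sensitive
      }
      kept.add(url);
    }
    return new ArrayList<>(kept);
  }

  private static String hostOf(String url) {
    try {
      return new URI(url).getHost();
    } catch (URISyntaxException e) {
      return null;
    }
  }
}
```

Take a page at http://www.example.org/docs/index.html as an example. Links such as guide.html and /about#team survive as http://www.example.org/docs/guide.html and http://www.example.org/about. Links to other hosts or with a query string are dropped.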

Web Fetcher Worker

- Worker name: webFetcher

- Parameters: none

- Input slots:

  - linksToFetch: Records describing crawled resources, with or without the content of the resource.

- Output slots:

  - fetchedLinks: The incoming records with the content of the resource attached.

If attachment httpContent is not yet set, the fetcher tries to get the content of the web resource identified by attribute httpUrl. Like the SimpleFetcher above, it does not follow redirects or perform authentication or anything else fancy to read the resource; it just uses a simple GET request.
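The sketch below shows such a plain GET request using java.net.HttpURLConnection. It also includes the Content-Type splitting and header mapping described in the SimpleFetcher attribute list. Records are modeled as maps. The class name, the use of java.time.Instant for httpLastModified, and treating any non-200 status as an error are assumptions.

```java
import java.io.IOException;
import java.io.InputStream;
import java.net.HttpURLConnection;
import java.net.URI;
import java.time.Instant;
import java.util.HashMap;
import java.util.Locale;
import java.util.Map;

/** Hypothetical sketch of a plain-GET fetch; records are modeled as maps. */
final class SimpleGetSketch {

  /** Splits e.g. "text/html; charset=UTF-8" into the three content-type attributes. */
  static Map<String, Object> contentTypeAttributes(String contentType) {
    Map<String, Object> attrs = new HashMap<>();
    if (contentType == null) {
      return attrs; // header not set: no attributes
    }
    attrs.put("httpContenttype", contentType);
    String[] parts = contentType.split(";");
    attrs.put("httpMimetype", parts[0].trim());
    for (int i = 1; i < parts.length; i++) {
      String param = parts[i].trim();
      if (param.toLowerCase(Locale.ROOT).startsWith("charset=")) {
        attrs.put("httpCharset", param.substring("charset=".length()).replace("\"", ""));
      }
    }
    return attrs;
  }

  /** Maps the response headers to the SimpleFetcher metadata attributes. */
  static Map<String, Object> metadataAttributes(HttpURLConnection conn) {
    Map<String, Object> attrs = contentTypeAttributes(conn.getContentType());
    attrs.put("httpSize", conn.getContentLengthLong()); // -1 if Content-Length is missing
    long lastModified = conn.getLastModified();         // 0 if Last-Modified is missing
    if (lastModified > 0) {
      attrs.put("httpLastModified", Instant.ofEpochMilli(lastModified));
    }
    return attrs;
  }

  /** Attaches httpContent via one GET request, unless it is already present. */
  static void fetchIfMissing(Map<String, Object> record) throws IOException {
    if (record.get("httpContent") != null) {
      return; // content was already attached by the crawler
    }
    URI uri = URI.create((String) record.get("httpUrl"));
    HttpURLConnection conn = (HttpURLConnection) uri.toURL().openConnection();
    conn.setRequestMethod("GET");
    conn.setInstanceFollowRedirects(false); // no redirects, no authentication
    try {
      int status = conn.getResponseCode();
      if (status != HttpURLConnection.HTTP_OK) {
        throw new IOException("HTTP " + status + " for " + uri);
      }
      try (InputStream in = conn.getInputStream()) {
        record.put("httpContent", in.readAllBytes());
      }
    } finally {
      conn.disconnect();
    }
  }
}
```

Disabling redirects means a 301 or 302 response surfaces here as an error instead of being followed. This is the main practical limitation of the simple approach.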

Web Extractor Worker

- Worker name: webExtractor

- Parameters: none

- Input slots:

  - compounds

- Output slots:

  - files

- Dependency: CompoundExtractor service

For each input record, an input stream to the described web resource is created and fed into the CompoundExtractor service. The produced records are converted to look like records produced by the file crawler, with these attributes set (if provided by the extractor):

- httpUrl: complete path in compound

- httpLastModified: last modification timestamp

- httpSize: uncompressed size

- _deltaHash: computed as in the WebCrawler worker

- _compoundRecordId: record ID of top-level compound this element was extracted from

- _isCompound: set to true for elements that are compounds themselves.

- _compoundPath: sequence of httpUrl attribute values of the compound objects needed to navigate to the compound element.

The crawler attributes httpContenttype, httpMimetype and httpCharset are currently not set by the WebExtractor worker.

If the element is not a compound itself, its content is added as attachment httpContent.
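The _deltaHash rules (as listed for the SimpleRecordProducer) and the attribute mapping above can be combined into one conversion step. In this hypothetical sketch, records are maps and the extractor output is passed in as plain arguments. Timestamps are epoch milliseconds, and SHA-256 is an assumed choice of digest, since the text only says "a digest".

```java
import java.security.MessageDigest;
import java.security.NoSuchAlgorithmException;
import java.util.ArrayList;
import java.util.HashMap;
import java.util.List;
import java.util.Map;
import java.util.Objects;
import java.util.UUID;

/** Hypothetical sketch: _deltaHash rules and WebExtractor record conversion. */
final class DeltaHashSketch {

  /** Applies the rules in order; the first applicable rule wins. */
  static String deltaHash(Map<String, Object> record) {
    Object content = record.get("httpContent");
    if (content instanceof byte[]) {
      return sha256Hex((byte[]) content); // 1. digest of attached content
    }
    Object lastModified = record.get("httpLastModified");
    if (lastModified != null) {
      return lastModified.toString(); // 2. last modification timestamp
    }
    Object size = record.get("httpSize");
    if (size != null) {
      return size + Objects.toString(record.get("httpMimetype"), ""); // 3. size + mimetype
    }
    return UUID.randomUUID().toString(); // 4. random value forces an update
  }

  static String sha256Hex(byte[] data) {
    try {
      byte[] digest = MessageDigest.getInstance("SHA-256").digest(data);
      StringBuilder hex = new StringBuilder();
      for (byte b : digest) {
        hex.append(String.format("%02x", b));
      }
      return hex.toString();
    } catch (NoSuchAlgorithmException e) {
      throw new IllegalStateException("SHA-256 not available", e);
    }
  }

  /** Converts one extracted compound element into a record with the attributes above. */
  static Map<String, Object> toRecord(String compoundRecordId, List<String> compoundPath,
      String pathInCompound, Long lastModified, Long size, boolean isCompound, byte[] content) {
    Map<String, Object> record = new HashMap<>();
    record.put("httpUrl", pathInCompound); // complete path in compound
    if (lastModified != null) {
      record.put("httpLastModified", lastModified);
    }
    if (size != null) {
      record.put("httpSize", size); // uncompressed size
    }
    record.put("_compoundRecordId", compoundRecordId);
    record.put("_compoundPath", new ArrayList<>(compoundPath));
    if (isCompound) {
      record.put("_isCompound", true);
    } else if (content != null) {
      record.put("httpContent", content); // only non-compound elements get content
    }
    record.put("_deltaHash", deltaHash(record));
    return record;
  }
}
```

The fourth rule returns a random UUID. As a result, a record without content, timestamp or size is always treated as changed by the DeltaChecker.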