Difference between revisions of "SMILA/Documentation/Importing/Crawler/Web"

Revision as of 11:25, 12 December 2011

Currently, the web crawler workers are implemented in a very simple way so that we can test the importing framework. A more sophisticated implementation will follow soon (hopefully).

Web Crawler

- Worker name: webCrawler

- Parameters:

  - dataSource

  - startUrl

- Task generator: runOnceTrigger

- Input slots:

  - linksToCrawl

- Output slots:

  - linksToCrawl

  - crawledLinks

Internal structure

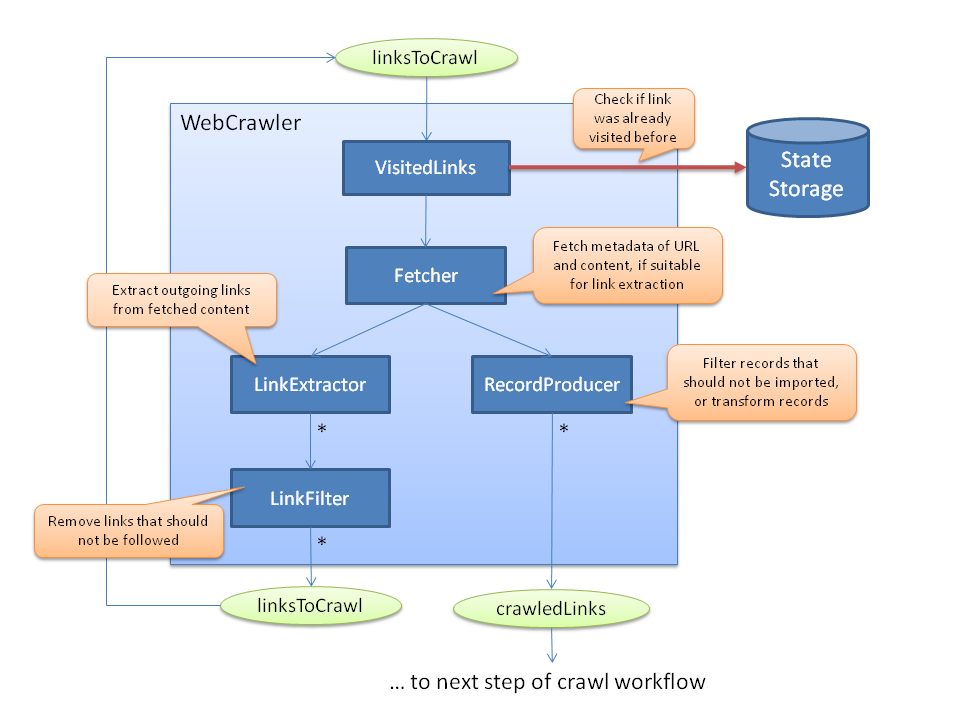

To make it easier to extend and improve the web crawler it is divided internally into components. Each of them is a single OSGi service that handles one part of the crawl functionality and can be exchanged individually to improve a single part of the functionality. The architecture looks like this:

The WebCrawler worker is started with one input bulk that contains records with URLs to crawl. The exception is the start of the crawl process, where the worker gets a task without an input bulk and generates an input record from the task parameters (or from configuration in a later version). The components are then executed as follows:

- First, the `VisitedLinksService` is asked whether the link has already been crawled in this crawl job run. If so, the record is dropped and no output is produced.

- Otherwise, the `Fetcher` is called to get the metadata and, if the content type of the resource is suitable for link extraction, the content. For other content types, the content is fetched only later in the crawl workflow by the WebFetcher worker, to save I/O load.

- If the resource could be fetched without problems, the `RecordProducer` is called to decide whether the record should be written to the `crawledLinks` output bulk. The producer can also modify records or split them into multiple records if the use case requires it.

- If the content of the resource was fetched, the `LinkExtractor` is called to extract outgoing links (e.g. by looking for `<A>` tags). It can produce multiple link records, each containing one absolute outgoing URL.

- If outgoing links were found, the `LinkFilter` is called to remove duplicates and links that should not be followed (e.g. because they are on a different site).

Finally, when all records from an input bulk have been processed, all links visited in this task must be marked as "visited" in the `VisitedLinksService`.
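The sequence above can be illustrated with a minimal sketch. It is written in Python only for brevity; the real components are Java OSGi services, and every name here (`crawl_task`, `InMemoryVisitedLinks`, `CrawlResult`, the callable parameters and the `url`/`content` record keys) is hypothetical and not part of the SMILA API. The sketch also assumes that filtered links are written to the `linksToCrawl` output slot and that URLs whose fetch failed are still marked as visited; the text above does not state either explicitly.

```python
from dataclasses import dataclass, field


@dataclass
class CrawlResult:
    """Hypothetical container for the two output slots of one task."""
    crawled_links: list = field(default_factory=list)   # crawledLinks slot
    links_to_crawl: list = field(default_factory=list)  # linksToCrawl slot (assumed)


class InMemoryVisitedLinks:
    """Hypothetical in-memory stand-in for the VisitedLinksService."""

    def __init__(self):
        self._visited = set()

    def is_visited(self, url):
        return url in self._visited

    def mark_visited(self, urls):
        self._visited.update(urls)


def crawl_task(input_bulk, task_params, visited,
               fetch, produce, extract_links, filter_links):
    """Process one task; the four callables stand in for the component services."""
    # Start of the crawl: no input bulk, so create the first record
    # from the task parameters.
    if input_bulk is None:
        records = [{"url": task_params["startUrl"]}]
    else:
        records = input_bulk

    result = CrawlResult()
    processed_urls = []
    for record in records:
        url = record["url"]
        if visited.is_visited(url):
            continue  # already crawled in this job run: drop, no output
        processed_urls.append(url)

        # fetch() returns None on failure; it sets "content" only if the
        # content type is suitable for link extraction.
        fetched = fetch(record)
        if fetched is None:
            continue

        # The producer decides which records (if any) are written.
        result.crawled_links.extend(produce(fetched))

        if fetched.get("content") is not None:
            links = extract_links(fetched)
            if links:
                result.links_to_crawl.extend(filter_links(links))

    # Mark links as visited only after the whole bulk has been processed.
    # Assumption: this includes URLs whose fetch failed.
    visited.mark_visited(processed_urls)
    return result
```

Because links are marked as visited only at the end of the task, a URL that occurs twice in the same input bulk would be processed twice in this sketch.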

Default implementation

Currently, the default implementations of the crawler component services are very simple:

- `VisitedLinksService`: Uses the `ObjectStoreService` to store which links have been visited, similar to the `DeltaService`.

- `SimpleFetcher`: Uses a GET request to read the URL. Reads the MIME type, content length and last-modified date from the HTTP headers, and gets the content only if the MIME type is "text/html".

- `SimpleRecordProducer`: Accepts each record.

- `LinkExtractorHtml`: Simple link extraction from HTML `<A href="...">` tags using the tagsoup HTML parser.

- `SimpleHtmlLinkFilter`: Accepts only links that end with ".htm" or ".html" (case insensitive) and removes duplicates (case sensitive).
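The behavior described for the link extractor and the link filter can be sketched as follows. This is an illustration only, not the SMILA code: it is written in Python, it uses the standard library's `html.parser` in place of the tagsoup parser, and the names `AnchorCollector`, `extract_links` and `filter_links` are hypothetical.

```python
from html.parser import HTMLParser
from urllib.parse import urljoin


class AnchorCollector(HTMLParser):
    """Collects the href of every <a> tag as an absolute URL."""

    def __init__(self, base_url):
        super().__init__()
        self.base_url = base_url
        self.links = []

    def handle_starttag(self, tag, attrs):
        # html.parser lowercases tag and attribute names,
        # so <A HREF="..."> is matched as well.
        if tag == "a":
            href = dict(attrs).get("href")
            if href:
                # Resolve relative links against the URL of the page.
                self.links.append(urljoin(self.base_url, href))


def extract_links(base_url, html):
    """Return all outgoing links of a page, in document order."""
    collector = AnchorCollector(base_url)
    collector.feed(html)
    collector.close()
    return collector.links


def filter_links(urls):
    """Keep links ending in .htm/.html (case insensitive), drop exact duplicates."""
    seen = set()
    accepted = []
    for url in urls:
        if not url.lower().endswith((".htm", ".html")):
            continue  # suffix check ignores case
        if url in seen:
            continue  # duplicate check is case sensitive
        seen.add(url)
        accepted.append(url)
    return accepted
```

Note the asymmetry this reproduces from the description: `a.html` and `A.html` both pass the suffix check, and because duplicates are compared case sensitively, both are kept as distinct links.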