
SMILA/Documentation/Default configuration workflow overview


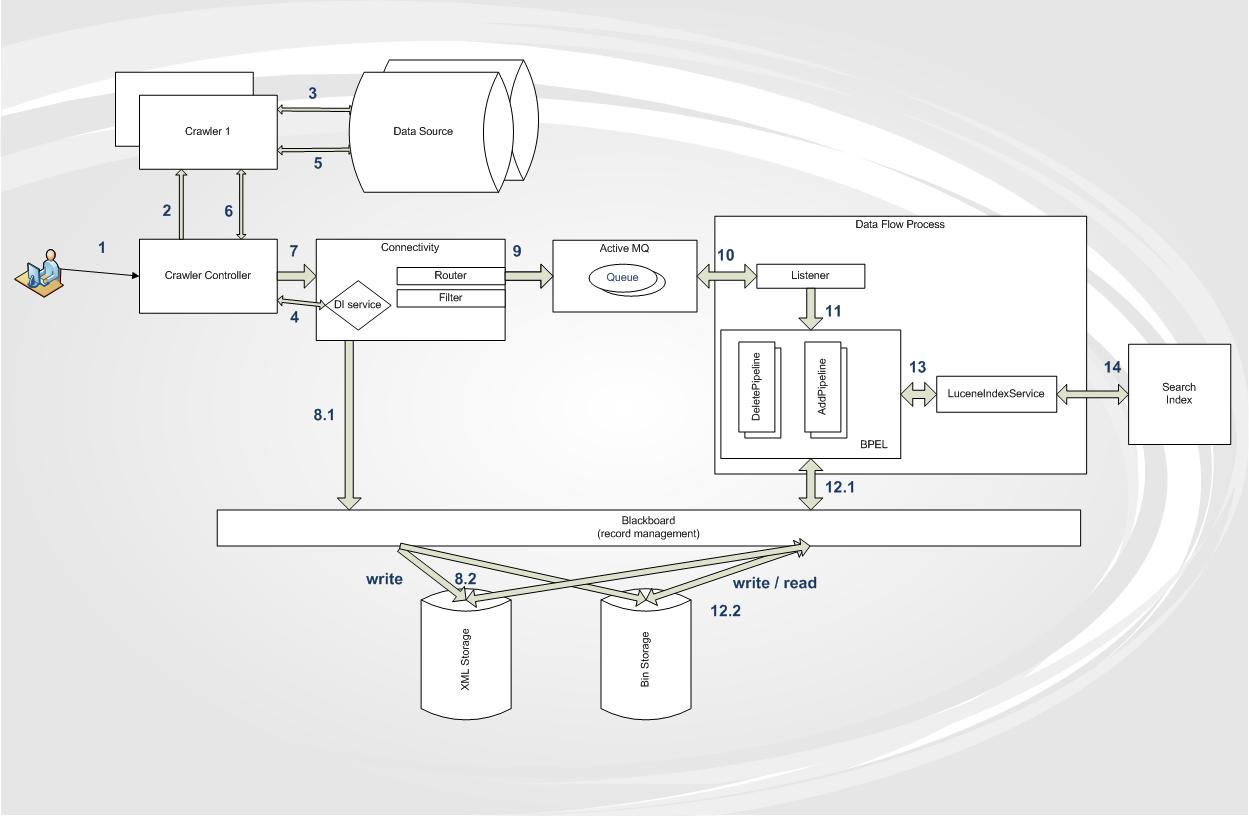


Revision as of 08:29, 14 October 2008

- 1. Creation or update of an index is initiated by a user request. The user sends the name of a configuration file (the so-called IndexOrderConfiguration) to the Crawler Controller.

The configuration file specifies which Crawler should access which Data Source. Furthermore, it contains all parameters required for the crawling process.
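As a rough illustration of what such a configuration carries, the Python sketch below models it as a small data class and reads it from XML. The element names (`DataSourceID`, `Crawler`, `Parameter`), the class and function names, and all values are hypothetical; the real IndexOrderConfiguration schema is defined by SMILA and is not reproduced here.

```python
import xml.etree.ElementTree as ET
from dataclasses import dataclass, field


@dataclass
class IndexOrderConfiguration:
    """Hypothetical model: which crawler reads which data source, plus its parameters."""
    data_source_id: str
    crawler: str
    parameters: dict = field(default_factory=dict)


def parse_index_order(xml_text: str) -> IndexOrderConfiguration:
    # Element names are illustrative assumptions, not the real SMILA schema.
    root = ET.fromstring(xml_text)
    params = {p.get("name"): p.text for p in root.findall("Parameter")}
    return IndexOrderConfiguration(
        data_source_id=root.findtext("DataSourceID"),
        crawler=root.findtext("Crawler"),
        parameters=params,
    )


EXAMPLE = """
<IndexOrderConfiguration>
  <DataSourceID>file-share</DataSourceID>
  <Crawler>FileSystemCrawler</Crawler>
  <Parameter name="baseDir">/data/docs</Parameter>
  <Parameter name="recursive">true</Parameter>
</IndexOrderConfiguration>
"""

config = parse_index_order(EXAMPLE.strip())
print(config.crawler, config.parameters["baseDir"])
```

Keeping the crawler choice and its parameters in one document is what lets the Crawler Controller start a crawl from nothing more than a configuration name.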

- 2. The Crawler Controller initializes a Crawler:

- a. The Crawler Controller reads the IndexOrderConfiguration file and assigns the Data Source specified in it to the Crawler.

- b. The Crawler Controller starts the Crawler’s thread.

- 3. The Crawler sequentially retrieves data records from the Data Source and, for each record, returns to the Crawler Controller the attributes required to generate the record’s ID and its Hash.

- 4. The Crawler Controller generates the ID and Hash and then queries the Delta Indexing Service to determine whether this particular record is new or has already been indexed.

- 5. If the record has not been indexed before, the Crawler Controller instructs the Crawler to retrieve the complete record (metadata and content).

- 6. The Crawler fetches the complete record from the Data Source. Each record has an ID and can contain attributes, attachments (binary content), and annotations.

- 7. The Crawler Controller sends the complete retrieved record to the Connectivity module.

- 8. The Connectivity module in turn persists the record to the storage tier via the Blackboard Service.

The Blackboard provides central access to the record storage layer for all SMILA components, thus effectively constituting an abstraction layer: clients do not need to know about the underlying persistence/storage technologies.

Structured data (text-based) is stored in XML-Storage; binaries (e.g. attachments, images) are stored in Bin-Storage.
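Steps 2 to 8 can be sketched end to end in Python under several assumptions: a crawler over an in-memory dictionary runs on its own thread and reports only a `lastModified` attribute per record; the controller derives a hypothetical ID (`<data source>:<key>`) and a SHA-256 Hash from it; a plain dictionary stands in for the Delta Indexing Service; and the `Blackboard` class keeps attributes as XML text and attachments as raw bytes, mirroring the XML-Storage/Bin-Storage split. None of these classes reflect SMILA's actual Java APIs. The sketch also re-fetches a record whose Hash has changed, which is the usual reason for storing a Hash at all; the steps above only mention new records.

```python
import hashlib
import queue
import threading
import xml.etree.ElementTree as ET

_DONE = object()  # sentinel: the crawler has no more records


class DictCrawler:
    """Hypothetical crawler over an in-memory source: key -> (attributes, attachments)."""

    def __init__(self, data_source: dict):
        self.data_source = data_source

    def crawl(self, out: queue.Queue) -> None:
        # Step 3: hand back only the attributes needed for ID and Hash.
        for key, (attributes, _attachments) in self.data_source.items():
            out.put((key, attributes.get("lastModified", "")))
        out.put(_DONE)

    def fetch(self, key: str):
        # Step 6: fetch the complete record (attributes and binary attachments).
        return self.data_source[key]


class Blackboard:
    """Facade hiding two stores: XML text for attributes, raw bytes for attachments."""

    def __init__(self):
        self.xml_storage = {}   # stand-in for XML-Storage: record ID -> XML string
        self.bin_storage = {}   # stand-in for Bin-Storage: (record ID, name) -> bytes

    def store(self, record_id: str, attributes: dict, attachments: dict) -> None:
        root = ET.Element("Record", id=record_id)
        for name, value in attributes.items():
            ET.SubElement(root, "Attribute", name=name).text = str(value)
        self.xml_storage[record_id] = ET.tostring(root, encoding="unicode")
        for name, content in attachments.items():
            self.bin_storage[(record_id, name)] = content


class CrawlerController:
    def __init__(self, source_id: str, crawler: DictCrawler, blackboard: Blackboard):
        self.source_id = source_id
        self.crawler = crawler
        self.blackboard = blackboard
        self.delta_index = {}   # stand-in for the Delta Indexing Service: ID -> Hash

    def run(self) -> list:
        results = queue.Queue()
        thread = threading.Thread(target=self.crawler.crawl, args=(results,))
        thread.start()                                     # step 2b: start the crawler's thread
        stored = []
        while (item := results.get()) is not _DONE:
            key, last_modified = item
            record_id = f"{self.source_id}:{key}"          # step 4: hypothetical ID scheme
            record_hash = hashlib.sha256(last_modified.encode("utf-8")).hexdigest()
            if self.delta_index.get(record_id) == record_hash:
                continue                                   # unchanged since the last run: skip
            attributes, attachments = self.crawler.fetch(key)          # steps 5-6
            self.blackboard.store(record_id, attributes, attachments)  # steps 7-8
            self.delta_index[record_id] = record_hash
            stored.append(record_id)
        thread.join()
        return stored


source = {
    "a.txt": ({"title": "A", "lastModified": "2008-10-14"}, {"content": b"alpha"}),
    "b.pdf": ({"title": "B", "lastModified": "2008-10-13"}, {"content": b"%PDF"}),
}
bb = Blackboard()
controller = CrawlerController("file-share", DictCrawler(source), bb)
print(controller.run())   # ['file-share:a.txt', 'file-share:b.pdf']
print(controller.run())   # [] because nothing changed
```

On the second run the controller never calls `fetch`, which is the saving delta indexing is meant to provide.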

- 9. Next, the Connectivity module passes the record to the Router, which is part of the Connectivity module.

The Router filters the record’s attributes according to its configuration. Note: usually only the ID is passed on (this is defined in a filter configuration file).

After processing and filtering the record, the Router pushes it into a JMS message queue (ActiveMQ) for further processing. Should any subsequent process require the record’s full content, it can access it via the Blackboard.
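A minimal sketch of the Router in step 9, assuming a `queue.Queue` as a stand-in for the ActiveMQ JMS queue: it keeps only the configured attributes (by default just the ID) and enqueues the result. The `Router` class, the `passed_attributes` parameter and the `operation` field that tells listeners whether to add or delete are hypothetical; a real deployment would send JMS messages through an ActiveMQ client instead.

```python
import queue


class Router:
    """Hypothetical router: keeps only configured attributes and enqueues the result."""

    def __init__(self, out_queue: queue.Queue, passed_attributes=("id",)):
        self.out_queue = out_queue
        # By default only the ID is passed on, mirroring the usual filter configuration.
        self.passed_attributes = set(passed_attributes)

    def route(self, record: dict, operation: str) -> dict:
        message = {k: v for k, v in record.items() if k in self.passed_attributes}
        message["operation"] = operation   # assumed field telling listeners add vs. delete
        self.out_queue.put(message)
        return message


jms_queue = queue.Queue()   # stand-in for the ActiveMQ queue
router = Router(jms_queue)
router.route({"id": "doc-1", "title": "Report", "text": "..."}, operation="add")
print(jms_queue.get_nowait())   # {'id': 'doc-1', 'operation': 'add'}
```

Keeping the message this small works because consumers can look up everything else on the Blackboard by ID.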

- 10. A Listener subscribed to the queue receives the message and invokes further processing logic according to its configuration.

- 11. The Listener passes the record to the appropriate pipeline: the Add pipeline if it is a new record, or the Delete pipeline if the record needs to be removed from the index (because it has been deleted from the original Data Source).

A pipeline is a BPEL process that uses a set of pipelets and services to process a record’s data (e.g. extracting text from various document or image file types).

BPEL (Business Process Execution Language) is an XML-based language for defining business processes by orchestrating loosely coupled (web) services. BPEL processes require a BPEL runtime environment (e.g. Apache ODE).
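Steps 10 and 11 amount to a dispatch on the message: the Listener takes a message off the queue and hands the record to the Add or Delete pipeline. In SMILA the pipelines are BPEL processes; the sketch below swaps them for plain lists of Python functions (standing in for pipelets) so that it runs without a BPEL engine such as Apache ODE. `Listener`, `run_pipeline`, the pipelet functions and the `operation` message field are hypothetical.

```python
import queue
from typing import Callable, Dict, List

Pipelet = Callable[[dict], dict]


def run_pipeline(pipelets: List[Pipelet], record: dict) -> dict:
    # Each pipelet receives the record produced by the previous one.
    for pipelet in pipelets:
        record = pipelet(record)
    return record


class Listener:
    def __init__(self, source: queue.Queue, pipelines: Dict[str, List[Pipelet]]):
        self.source = source
        self.pipelines = pipelines   # e.g. {"add": [...], "delete": [...]}

    def process_one(self) -> dict:
        message = self.source.get()
        pipeline = self.pipelines[message["operation"]]   # choose Add or Delete pipeline
        return run_pipeline(pipeline, message)


def extract_text(record: dict) -> dict:
    # Hypothetical pipelet; a real one would extract text from PDFs, images, etc.
    return {**record, "text": f"text of {record['id']}"}


def mark_deleted(record: dict) -> dict:
    return {**record, "deleted": True}


q = queue.Queue()
q.put({"id": "doc-1", "operation": "add"})
q.put({"id": "doc-2", "operation": "delete"})
listener = Listener(q, {"add": [extract_text], "delete": [mark_deleted]})
print(listener.process_one())   # {'id': 'doc-1', 'operation': 'add', 'text': 'text of doc-1'}
print(listener.process_one())   # {'id': 'doc-2', 'operation': 'delete', 'deleted': True}
```

Modeling a pipeline as an ordered list of pipelets keeps the orchestration (which is what BPEL contributes) separate from the processing steps themselves.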

- 12. After processing the record, the pipelets and services store the additional data they have gathered via the Blackboard service.

- 13. Finally the pipeline invokes the Lucene Index Service.

- 14. The Lucene Index Service updates the Lucene Index.
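Steps 12 to 14 close the loop: a pipelet writes what it derived back through the Blackboard, and the last step of the pipeline hands the record to the index service. In the sketch below a dictionary plays the Blackboard and a tiny in-memory inverted index (term to record IDs) stands in for the Lucene Index Service. It only illustrates the data flow; it is not the Lucene API, and all names are hypothetical.

```python
import re
from collections import defaultdict


class ToyIndexService:
    """Stand-in for the Lucene Index Service: term -> set of record IDs."""

    def __init__(self):
        self.postings = defaultdict(set)

    def add(self, record_id: str, text: str) -> None:
        for term in re.findall(r"\w+", text.lower()):
            self.postings[term].add(record_id)

    def delete(self, record_id: str) -> None:
        for ids in self.postings.values():
            ids.discard(record_id)

    def search(self, term: str) -> set:
        return set(self.postings.get(term.lower(), set()))


blackboard = {}   # stand-in for the Blackboard: record ID -> attributes
index = ToyIndexService()


def text_pipelet(record_id: str, raw: bytes) -> None:
    # Step 12: store the extracted text via the "Blackboard".
    blackboard.setdefault(record_id, {})["text"] = raw.decode("utf-8")


def add_pipeline(record_id: str, raw: bytes) -> None:
    text_pipelet(record_id, raw)
    # Steps 13-14: invoke the index service, which updates the index.
    index.add(record_id, blackboard[record_id]["text"])


add_pipeline("doc-1", b"SMILA indexes documents")
print(index.search("indexes"))   # {'doc-1'}
```

Because the pipelet writes to the Blackboard rather than passing text directly to the index, any later pipelet could reuse the extracted text as well.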