
SMILA/Documentation/CrawlerController

Revision as of 06:01, 5 June 2009


Overview

The CrawlerController is a component that manages and monitors Crawlers.

API

    /**
     * Management interface for the CrawlerController.
     */
    public interface CrawlerController {

      /**
       * Starts a crawl of the given dataSourceId. This method creates a new Thread. If it is called for a dataSourceId that
       * is currently crawled a ConnectivityException is thrown. Returns the hashCode of the crawler instance used for
       * performance counter.
       *
       * @param dataSourceId
       *          the ID of the data source to crawl
       * @return - the hashcode of the crawler instance as int value
       * @throws ConnectivityException
       *           if any error occurs
       */
      int startCrawl(String dataSourceId) throws ConnectivityException;

      /**
       * Stops an active crawl of the given dataSourceId.
       *
       * @param dataSourceId
       *          the ID of the data source to stop the crawl
       * @throws ConnectivityException
       *           if any error occurs
       */
      void stopCrawl(String dataSourceId) throws ConnectivityException;

      /**
       * Checks if there are any active crawls.
       *
       * @return true if there are active crawls, false otherwise
       * @throws ConnectivityException
       *           if any error occurs
       */
      boolean hasActiveCrawls() throws ConnectivityException;

      /**
       * Returns a Collection of Strings containing the dataSourceIds of the currently active crawls.
       *
       * @return a Collection of Strings containing the dataSourceIds
       * @throws ConnectivityException
       *           if any error occurs
       */
      Collection<String> getActiveCrawls() throws ConnectivityException;

      /**
       * Returns the CrawlState of the crawl with the given dataSourceId.
       *
       * @param dataSourceId
       *          the ID of the data source to get the state
       * @return the CrawlState
       * @throws ConnectivityException
       *           if any error occurs
       */
      CrawlState getState(String dataSourceId) throws ConnectivityException;
    }
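As a usage illustration, the following minimal sketch stops every active crawl through the management interface, for example before a shutdown. It is not taken from the SMILA code base: InMemoryController, stopAll and the nested ConnectivityException are hypothetical stand-ins so that the example compiles without the SMILA bundles, and only a subset of the interface shown above is repeated.

```java
import java.util.ArrayList;
import java.util.Collection;
import java.util.List;
import java.util.Set;
import java.util.concurrent.ConcurrentHashMap;

public class CrawlShutdownSketch {

    /** Stand-in for SMILA's ConnectivityException so the sketch compiles on its own. */
    static class ConnectivityException extends Exception {
        ConnectivityException(String message) {
            super(message);
        }
    }

    /** Subset of the CrawlerController interface shown above. */
    interface CrawlerController {
        int startCrawl(String dataSourceId) throws ConnectivityException;
        void stopCrawl(String dataSourceId) throws ConnectivityException;
        boolean hasActiveCrawls() throws ConnectivityException;
        Collection<String> getActiveCrawls() throws ConnectivityException;
    }

    /** Hypothetical in-memory controller; it only tracks which IDs are active. */
    static class InMemoryController implements CrawlerController {
        private final Set<String> active = ConcurrentHashMap.newKeySet();

        @Override
        public int startCrawl(String dataSourceId) throws ConnectivityException {
            if (!active.add(dataSourceId)) {
                throw new ConnectivityException("already crawling: " + dataSourceId);
            }
            return dataSourceId.hashCode();
        }

        @Override
        public void stopCrawl(String dataSourceId) {
            active.remove(dataSourceId);
        }

        @Override
        public boolean hasActiveCrawls() {
            return !active.isEmpty();
        }

        @Override
        public Collection<String> getActiveCrawls() {
            return new ArrayList<>(active); // snapshot, safe to iterate while stopping
        }
    }

    /** Stops every active crawl and returns the IDs that were stopped. */
    static List<String> stopAll(CrawlerController controller) throws ConnectivityException {
        List<String> stopped = new ArrayList<>();
        for (String dataSourceId : controller.getActiveCrawls()) {
            controller.stopCrawl(dataSourceId);
            stopped.add(dataSourceId);
        }
        return stopped;
    }

    public static void main(String[] args) throws ConnectivityException {
        CrawlerController controller = new InMemoryController();
        controller.startCrawl("web");
        controller.startCrawl("file");
        System.out.println("stopped " + stopAll(controller).size() + " crawls");
        System.out.println("still active: " + controller.hasActiveCrawls());
    }
}
```

Iterating over a snapshot of getActiveCrawls() is a design choice of this sketch; whether the real implementation returns a live view or a copy is not stated here.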

Implementations

It is possible to provide different implementations for the CrawlerController interface. At the moment there is one implementation available.

org.eclipse.smila.connectivity.framework.impl

This bundle contains the default implementation of the CrawlerController interface.

The CrawlerController implements the general processing logic common to all types of Crawlers. Its interface is a pure management interface that can be accessed through its Java interface or through the JMX interface that wraps it. It has references to the following OSGi services:

- Crawler ComponentFactory

- ConnectivityManager

- DeltaIndexingManager (optional)

- CompoundManager

- ConfigurationManagement (t.b.d.)

Crawler Factories register themselves with the CrawlerController. Each time a crawl for a certain type of crawler is initiated, a new instance of that Crawler type is created via the Crawler ComponentFactory. This allows parallel crawling of data sources of the same type (e.g. several websites). Note that it is not possible to crawl the same data source concurrently!
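The following sketch illustrates the two rules from the paragraph above: every crawl gets a fresh Crawler instance from a registered factory, and a second crawl of the same data source is rejected while the first is still active. All names (CrawlRegistrySketch, registerFactory, finishCrawl) are hypothetical, a plain Supplier stands in for the OSGi ComponentFactory, and an IllegalStateException stands in for the ConnectivityException that the real interface declares.

```java
import java.util.Map;
import java.util.concurrent.ConcurrentHashMap;
import java.util.function.Supplier;

public class CrawlRegistrySketch {

    /** Hypothetical stand-in for a Crawler instance. */
    interface Crawler { }

    // Crawler type -> factory; stands in for the registered Crawler ComponentFactory services.
    private final Map<String, Supplier<Crawler>> factories = new ConcurrentHashMap<>();

    // Data source ID -> Crawler instance currently working on it.
    private final Map<String, Crawler> activeCrawls = new ConcurrentHashMap<>();

    void registerFactory(String crawlerType, Supplier<Crawler> factory) {
        factories.put(crawlerType, factory);
    }

    /** Creates a fresh Crawler for the data source, or fails if it is already being crawled. */
    Crawler startCrawl(String dataSourceId, String crawlerType) {
        Supplier<Crawler> factory = factories.get(crawlerType);
        if (factory == null) {
            throw new IllegalArgumentException("no factory registered for type: " + crawlerType);
        }
        Crawler crawler = factory.get();
        // putIfAbsent is atomic, so two concurrent starts cannot both succeed.
        if (activeCrawls.putIfAbsent(dataSourceId, crawler) != null) {
            throw new IllegalStateException("data source is already being crawled: " + dataSourceId);
        }
        return crawler;
    }

    void finishCrawl(String dataSourceId) {
        activeCrawls.remove(dataSourceId);
    }

    public static void main(String[] args) {
        CrawlRegistrySketch registry = new CrawlRegistrySketch();
        registry.registerFactory("web", () -> new Crawler() { });

        // Two data sources of the same type can be crawled in parallel.
        Crawler first = registry.startCrawl("site-a", "web");
        Crawler second = registry.startCrawl("site-b", "web");
        System.out.println("distinct instances: " + (first != second));

        // The same data source cannot be crawled twice at the same time.
        try {
            registry.startCrawl("site-a", "web");
        } catch (IllegalStateException e) {
            System.out.println("rejected: " + e.getMessage());
        }
    }
}
```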

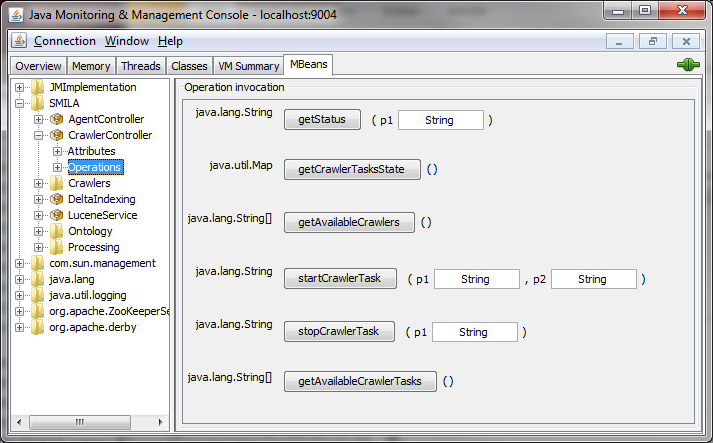

This chart shows the current CrawlerController processing logic for one crawl run:

- First, the CrawlerController initializes DeltaIndexing for the current data source by calling DeltaIndexingManager::init(String) and also initializes a new Crawler (not shown).

- It then executes the subprocess process crawler with the initialized Crawler.

- If no error has occurred so far, it performs the subprocess delete delta.

- Finally, it finishes the run by calling DeltaIndexingManager::finish(String).

Process Crawler

- The CrawlerController checks whether the given Crawler has more data available.

- YES: for each DataReference sent by the Crawler, the CrawlerController checks whether it needs to be updated by calling DeltaIndexingManager::checkForUpdate(Id, String).

  - YES: the CrawlerController requests the complete record from the Crawler and checks whether the record is a compound.

    - YES: the subprocess process compounds is executed.

    - NO: no special action is taken.

    - The record is then added to the Queue by calling ConnectivityManager::add(Record[]) and is marked as visited by calling DeltaIndexingManager::visit(Id).

  - NO: the DataReference is skipped. The DeltaIndexingManager has already set the visited flag for this Id internally.

- NO: return to the calling process.

Process Compounds

- By calling CompoundManager::extract(Record, DataSourceConnectionConfig), the subprocess receives a CompoundCrawler that iterates over the elements of the compound record.

- The subprocess recursively calls the subprocess process crawler using the CompoundCrawler.

- The compound record is adapted according to the configuration (set to null, modified, or left unmodified) by calling CompoundManager::adaptCompoundRecord(Record, DataSourceConnectionConfig).

- Return to the calling process.

Delete Delta

- By calling DeltaIndexingManager::obsoleteIdIterator(String), the subprocess receives an Iterator over all Ids that have to be deleted.

- For each Id, ConnectivityManager::delete(Id[]) is called.

- Return to the calling process.
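The steps above can be condensed into a small, self-contained sketch. It is an illustration under simplifying assumptions, not the SMILA implementation: Rec and DeltaIndex are hypothetical in-memory stand-ins, two lists replace the ConnectivityManager queue, the forget call is invented for the sketch, adapting the compound record is left out, and the elements of an unchanged compound are not tracked.

```java
import java.util.ArrayList;
import java.util.Arrays;
import java.util.HashMap;
import java.util.HashSet;
import java.util.Iterator;
import java.util.List;
import java.util.Map;
import java.util.Set;

public class CrawlRunSketch {

    /** Simplified record: an ID, a content hash, and child elements if it is a compound. */
    static final class Rec {
        final String id;
        final String hash;
        final List<Rec> elements;

        Rec(String id, String hash, Rec... elements) {
            this.id = id;
            this.hash = hash;
            this.elements = Arrays.asList(elements);
        }
    }

    /** Hypothetical in-memory stand-in for the DeltaIndexingManager calls named above. */
    static final class DeltaIndex {
        private final Map<String, String> hashes = new HashMap<>();
        private final Set<String> visited = new HashSet<>();

        void init() { visited.clear(); }

        /** True if the record is new or changed; an unchanged record is flagged as visited. */
        boolean checkForUpdate(String id, String hash) {
            boolean unchanged = hash.equals(hashes.get(id));
            if (unchanged) visited.add(id);
            return !unchanged;
        }

        void visit(String id, String hash) {
            hashes.put(id, hash);
            visited.add(id);
        }

        /** IDs known from earlier runs that were not visited during this run. */
        Iterator<String> obsoleteIdIterator() {
            Set<String> obsolete = new HashSet<>(hashes.keySet());
            obsolete.removeAll(visited);
            return obsolete.iterator();
        }

        void forget(String id) { hashes.remove(id); } // assumption: not a documented call

        void finish() { visited.clear(); }
    }

    final DeltaIndex delta = new DeltaIndex();
    final List<String> added = new ArrayList<>();   // stands in for ConnectivityManager::add
    final List<String> deleted = new ArrayList<>(); // stands in for ConnectivityManager::delete

    void run(List<Rec> dataSource) {
        delta.init();
        try {
            processCrawler(dataSource.iterator());
            deleteDelta(); // not reached if processCrawler threw an exception
        } finally {
            delta.finish();
        }
    }

    void processCrawler(Iterator<Rec> crawler) {
        while (crawler.hasNext()) {
            Rec rec = crawler.next();
            if (!delta.checkForUpdate(rec.id, rec.hash)) {
                continue; // unchanged: skipped, already flagged as visited
            }
            if (!rec.elements.isEmpty()) {
                processCompounds(rec);
            }
            added.add(rec.id);
            delta.visit(rec.id, rec.hash);
        }
    }

    void processCompounds(Rec compound) {
        // Recursion over the compound's elements; adapting the compound record is omitted.
        processCrawler(compound.elements.iterator());
    }

    void deleteDelta() {
        for (Iterator<String> it = delta.obsoleteIdIterator(); it.hasNext();) {
            String id = it.next();
            deleted.add(id);
            delta.forget(id);
        }
    }
}
```

Calling run twice on the same CrawlRunSketch instance shows the delta behaviour: if the first run sees records a and b and the second run sees only an unchanged a, the second run adds nothing and b ends up in the deleted list.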

Configuration

There are no configuration options available for this bundle.

JMX interface

    /**
     * The Interface CrawlerControllerAgent.
     */
    public interface CrawlerControllerAgent {

      /**
       * Start crawl.
       *
       * @param dataSourceId
       *          the data source id
       *
       * @return the string
       */
      String startCrawl(final String dataSourceId);

      /**
       * Stop crawl.
       *
       * @param dataSourceId
       *          the data source id
       *
       * @return the string
       */
      String stopCrawl(final String dataSourceId);

      /**
       * Gets the status.
       *
       * @param dataSourceId
       *          the data source id
       *
       * @return the status
       */
      String getStatus(final String dataSourceId);

      /**
       * Gets the active crawls status.
       *
       * @return the active crawls status
       */
      String getActiveCrawlsStatus();

      /**
       * Gets the active crawls.
       *
       * @return the active crawls
       */
      String[] getActiveCrawls();
    }
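To show how such an agent can be reached over JMX, the sketch below registers a dummy agent with the platform MBean server and calls it through the generic javax.management API. How SMILA itself registers its agent is not described here, so the ObjectName, the DummyAgent class and the use of StandardMBean are assumptions made for illustration, and only a subset of the interface is repeated.

```java
import java.lang.management.ManagementFactory;
import javax.management.MBeanServer;
import javax.management.ObjectName;
import javax.management.StandardMBean;

public class JmxAgentSketch {

    /** Subset of the CrawlerControllerAgent interface shown above. */
    public interface CrawlerControllerAgent {
        String startCrawl(String dataSourceId);
        String stopCrawl(String dataSourceId);
        String[] getActiveCrawls();
    }

    /** Hypothetical agent that only remembers a single active data source. */
    static class DummyAgent implements CrawlerControllerAgent {
        private volatile String active;

        @Override
        public String startCrawl(String dataSourceId) {
            active = dataSourceId;
            return "started " + dataSourceId;
        }

        @Override
        public String stopCrawl(String dataSourceId) {
            active = null;
            return "stopped " + dataSourceId;
        }

        @Override
        public String[] getActiveCrawls() {
            return active == null ? new String[0] : new String[] {active};
        }
    }

    static String demo() throws Exception {
        MBeanServer server = ManagementFactory.getPlatformMBeanServer();
        // Hypothetical name; the name used by SMILA is not given in this document.
        ObjectName name = new ObjectName("example.sketch:type=CrawlerController");
        server.registerMBean(new StandardMBean(new DummyAgent(), CrawlerControllerAgent.class), name);
        try {
            // Methods with parameters are exposed as JMX operations.
            Object result = server.invoke(name, "startCrawl",
                new Object[] {"web"}, new String[] {String.class.getName()});
            // A parameterless getter is exposed as a JMX attribute.
            String[] active = (String[]) server.getAttribute(name, "ActiveCrawls");
            return result + "; active=" + active.length;
        } finally {
            server.unregisterMBean(name);
        }
    }

    public static void main(String[] args) throws Exception {
        System.out.println(demo());
    }
}
```

A JMX console talks to the MBean server in the same way, which is why parameterless getters typically appear there as attributes and the remaining methods as operations.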

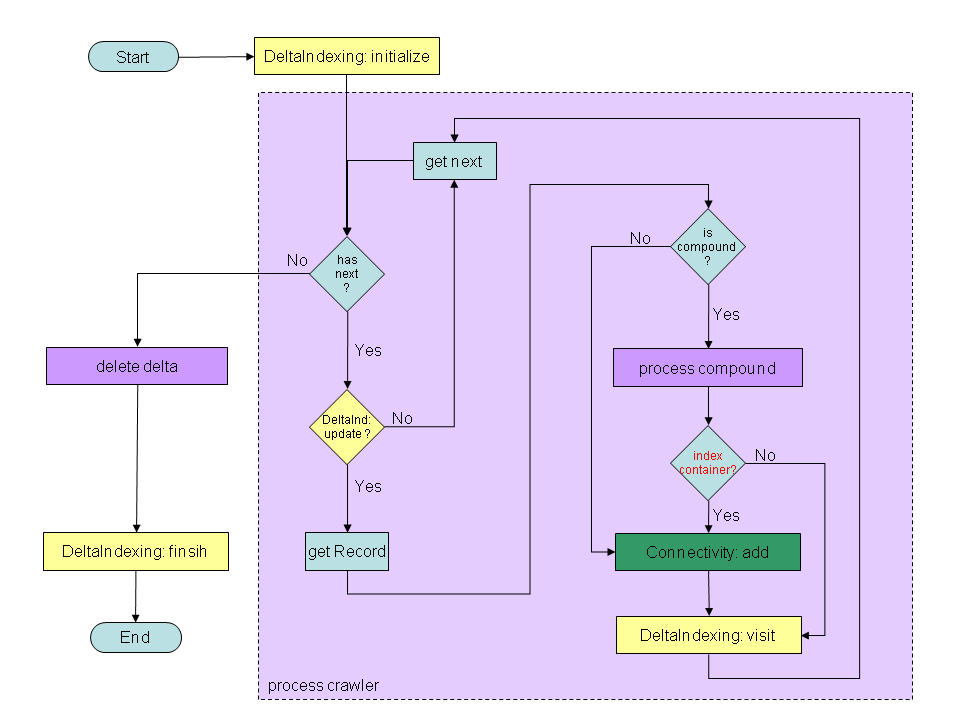

Here is a screenshot of the CrawlerController in the JMX Console: