
SMILA/Documentation/Crawler

m |

|||

| Line 1: | Line 1: | ||

[[Image:CrawlerWorkflow.png|thumb|Crawler Wokflow]] | [[Image:CrawlerWorkflow.png|thumb|Crawler Wokflow]] | ||

| − | == | + | == Overview == |

| − | A Crawler gathers information about resources, both content and metadata of interest like size or mime type. | + | A Crawler gathers information about resources, both content and metadata of interest like size or mime type. SMILA currently comes with three types of crawlers, each for a different data source type, namely WebCrawler, JDBC DatabaseCrawler, and FileSystemCrawler to facilitate gathering information from the internet, databases, or files from a hard disk. Furthermore, the Connectivity Framework provides an API for developers to create own crawlers. |

| − | |||

| − | + | == API == | |

| − | + | A Crawler has to implement two interfaces: <tt>Crawler</tt> and <tt>CrawlerCallback</tt>. The easiest way to achieve this is to extend the abstract base class <tt>AbstractCrawler</tt> located in bundle <tt>org.eclipse.smila.connectivity.framework</tt>. This class already contains handling for the crawlers Id and an OSGI service activate method. The Crawler method <tt>getNext()</tt> is designed to support an array of Datareference objects, as this reduces the number of method calls. In general there are no restrictions on the size of the array, in fact the size could vary on multiple method calls. This allows a Crawler to internally implement a Producer/Consumer pattern. A Crawler implementation that is restricted to work as an iterator only can also enforce this by always returning an array of size one. | |

| − | = | + | <source lang="java"> |

| + | public interface Crawler { | ||

| − | A Crawler is started with a specific, named configuration, that defines what information is to be crawled (e.g. content, kinds of metadata) and where to find that data (e.g. file system path, JDBC Connection String). | + | /** |

| + | * Returns the ID of this Crawler. | ||

| + | * | ||

| + | * @return a String containing the ID of this Crawler | ||

| + | * | ||

| + | * @throws CrawlerException | ||

| + | * if any error occurs | ||

| + | */ | ||

| + | String getCrawlerId() throws CrawlerException; | ||

| + | |||

| + | /** | ||

| + | * Returns an array of DataReference objects. The size of the returned array may vary from call to call. The maximum | ||

| + | * size of the array is determined by configuration or by the implementation class. | ||

| + | * | ||

| + | * @return an array of DataReference objects or null, if no more DataReference exist | ||

| + | * | ||

| + | * @throws CrawlerException | ||

| + | * if any error occurs | ||

| + | * @throws CrawlerCriticalException | ||

| + | * the crawler critical exception | ||

| + | */ | ||

| + | DataReference[] getNext() throws CrawlerException, CrawlerCriticalException; | ||

| + | |||

| + | /** | ||

| + | * Initialize. | ||

| + | * | ||

| + | * @param config | ||

| + | * the DataSourceConnectionConfig | ||

| + | * | ||

| + | * @throws CrawlerException | ||

| + | * the crawler exception | ||

| + | * @throws CrawlerCriticalException | ||

| + | * the crawler critical exception | ||

| + | */ | ||

| + | void initialize(DataSourceConnectionConfig config) throws CrawlerException, CrawlerCriticalException; | ||

| + | |||

| + | /** | ||

| + | * Ends crawl, allowing the Crawler implementation to close any open resources. | ||

| + | * | ||

| + | * @throws CrawlerException | ||

| + | * if any error occurs | ||

| + | */ | ||

| + | void close() throws CrawlerException; | ||

| + | |||

| + | } | ||

| + | </source> | ||

| + | |||

| + | |||

| + | <source lang="java"> | ||

| + | /** | ||

| + | * A callback interface to access metadata and attachments of crawled data. | ||

| + | */ | ||

| + | public interface CrawlerCallback { | ||

| + | /** | ||

| + | * Returns the MObject for the given id. | ||

| + | * | ||

| + | * @param id | ||

| + | * the record id | ||

| + | * @return the MObject | ||

| + | * @throws CrawlerException | ||

| + | * if any non critical error occurs | ||

| + | * @throws CrawlerCriticalException | ||

| + | * if any critical error occurs | ||

| + | */ | ||

| + | MObject getMObject(Id id) throws CrawlerException, CrawlerCriticalException; | ||

| + | |||

| + | /** | ||

| + | * Returns an array of String[] containing the names of the available attachments for the given id. | ||

| + | * | ||

| + | * @param id | ||

| + | * the record id | ||

| + | * @return an array of String[] containing the names of the available attachments | ||

| + | * @throws CrawlerException | ||

| + | * if any non critical error occurs | ||

| + | * @throws CrawlerCriticalException | ||

| + | * if any critical error occurs | ||

| + | */ | ||

| + | String[] getAttachmentNames(Id id) throws CrawlerException, CrawlerCriticalException; | ||

| + | |||

| + | /** | ||

| + | * Returns the attachment for the given Id and name pair. | ||

| + | * | ||

| + | * @param id | ||

| + | * the record id | ||

| + | * @param name | ||

| + | * the name of the attachment | ||

| + | * @return a byte[] containing the attachment | ||

| + | * @throws CrawlerException | ||

| + | * if any non critical error occurs | ||

| + | * @throws CrawlerCriticalException | ||

| + | * if any critical error occurs | ||

| + | */ | ||

| + | byte[] getAttachment(Id id, String name) throws CrawlerException, CrawlerCriticalException; | ||

| + | |||

| + | /** | ||

| + | * Disposes the record with the given Id. | ||

| + | * | ||

| + | * @param id | ||

| + | * the record id | ||

| + | */ | ||

| + | void dispose(Id id); | ||

| + | } | ||

| + | </source> | ||

| + | |||

| + | For completeness, here is the interface DataReference: | ||

| + | <source lang="java"> | ||

| + | /** | ||

| + | * A proxy object interface to a record provided by a Crawler. The object contains the Id and the hash value of the | ||

| + | * record but no additional data. The complete record can be loaded via the CrawlerCallback. | ||

| + | */ | ||

| + | public interface DataReference { | ||

| + | /** | ||

| + | * Returns the Id of the referenced record. | ||

| + | * | ||

| + | * @return the Id of the referenced record | ||

| + | */ | ||

| + | Id getId(); | ||

| + | |||

| + | /** | ||

| + | * Returns the hash of the referenced record as a String. | ||

| + | * | ||

| + | * @return the hash of the referenced record as a String | ||

| + | */ | ||

| + | String getHash(); | ||

| + | |||

| + | /** | ||

| + | * Returns the complete Record object via the CrawlerCallback. | ||

| + | * | ||

| + | * @return the complete record | ||

| + | * @throws CrawlerException | ||

| + | * if any non critical error occurs | ||

| + | * @throws CrawlerCriticalException | ||

| + | * if any critical error occurs | ||

| + | * @throws InvalidTypeException | ||

| + | * if the hash attribute cannot be set | ||

| + | */ | ||

| + | Record getRecord() throws CrawlerException, CrawlerCriticalException, InvalidTypeException; | ||

| + | |||

| + | /** | ||

| + | * Disposes the referenced record object. | ||

| + | */ | ||

| + | void dispose(); | ||

| + | } | ||

| + | </source> | ||

| + | |||

| + | |||

| + | == Architecture == | ||

| + | |||

| + | Crawlers are managed and instantiated by thew CrawlerController. The CrawlerController communicates with the Crawler via interface <tt>Crawler</tt>, only. The Crawler's <tt>getNext()</tt> method returns <tt>DataReference</tt> objects to the CrawlerController. <tt>DataReference</tt> is also an interface implemented by class <tt>org.eclipse.smila.connectivity.framework.util.internal.DataReferenceImpl</tt>. A DataReference, as the name suggests, is only a reference to data provided by the Crawler. This is mainly a performance issue, as due to the use of DeltaIndexing it may not be neccessary to transfer all the data from the Crawler to the CrawlerController and to ConnectivityManager. Therefore a DataReference contains only the minumum data needed to perform DeltaIndexing: an Id and a hash token. To access the whole object it provideds method <tt>getRecord()</tt> that returns a complete Record object containing Id, attributes, annotations and attachments. To create the Record object, the DataReference communicates with the Crawler via interface <tt>CrawlerCallback</tt>, as each DataReference has a reference to the Crawler that created it. | ||

| + | |||

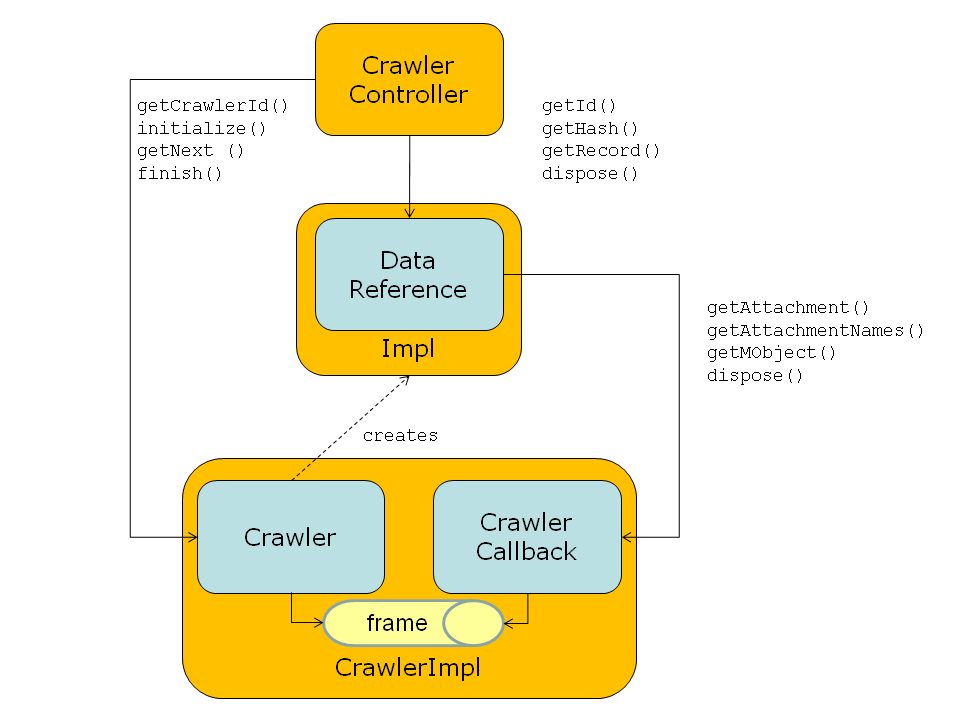

| + | The following chart shows the Crawler Architecture and how data is shared with the CrawlerController: | ||

| + | [[Image:Crawler Architecture.png|Crawler Architecture]] | ||

| + | |||

| + | Package <tt>org.eclipse.smila.connectivity.framework.util</tt> provides some factory classes for crawlers to create Ids, hashes and DataReference objects. More utility classes are planned to be implemented, that allow easy realisation of Crawlers using an Iterator or Producer/Consumer pattern. | ||

| + | |||

| + | |||

| + | == Configuration == | ||

| + | |||

| + | A Crawler is started with a specific, named configuration, that defines what information is to be crawled (e.g. content, kinds of metadata) and where to find that data (e.g. file system path, JDBC Connection String). See each Crawler documentation for details on configuration options. | ||

== Crawler lifecycle == | == Crawler lifecycle == | ||

Revision as of 08:18, 31 March 2009

Overview

A Crawler gathers information about resources: both their content and metadata of interest, such as size or MIME type. SMILA currently ships with three crawlers, one for each data source type: the WebCrawler, the JDBC DatabaseCrawler, and the FileSystemCrawler. They gather information from the web, from databases, and from files on a hard disk, respectively. In addition, the Connectivity Framework provides an API that developers can use to create their own crawlers.

API

A Crawler has to implement two interfaces: Crawler and CrawlerCallback. The easiest way to do this is to extend the abstract base class AbstractCrawler, located in bundle org.eclipse.smila.connectivity.framework. This class already handles the crawler's Id and provides an OSGi service activate method. The Crawler method getNext() returns an array of DataReference objects rather than a single object, which reduces the number of method calls. In general there is no restriction on the size of the array, and the size may vary from one call to the next. This allows a Crawler to implement a Producer/Consumer pattern internally. A Crawler that should behave strictly as an iterator can enforce this by always returning an array of size one.

```java
public interface Crawler {

  /**
   * Returns the ID of this Crawler.
   *
   * @return a String containing the ID of this Crawler
   *
   * @throws CrawlerException
   *           if any error occurs
   */
  String getCrawlerId() throws CrawlerException;

  /**
   * Returns an array of DataReference objects. The size of the returned array may vary from call to call. The maximum
   * size of the array is determined by configuration or by the implementation class.
   *
   * @return an array of DataReference objects or null, if no more DataReference exist
   *
   * @throws CrawlerException
   *           if any error occurs
   * @throws CrawlerCriticalException
   *           the crawler critical exception
   */
  DataReference[] getNext() throws CrawlerException, CrawlerCriticalException;

  /**
   * Initialize.
   *
   * @param config
   *          the DataSourceConnectionConfig
   *
   * @throws CrawlerException
   *           the crawler exception
   * @throws CrawlerCriticalException
   *           the crawler critical exception
   */
  void initialize(DataSourceConnectionConfig config) throws CrawlerException, CrawlerCriticalException;

  /**
   * Ends crawl, allowing the Crawler implementation to close any open resources.
   *
   * @throws CrawlerException
   *           if any error occurs
   */
  void close() throws CrawlerException;

}
```
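The batch contract of getNext() can be illustrated with a minimal, self-contained sketch. So that it compiles on its own, it uses a hypothetical, simplified SimpleCrawler interface with String references in place of SMILA's DataReference, CrawlerException and DataSourceConnectionConfig types. The batchSize parameter and the drain() helper are also assumptions, not part of the framework. As documented above, a return value of null signals that no more references exist. A batch size of one gives plain iterator behaviour.

```java
import java.util.ArrayList;
import java.util.Arrays;
import java.util.List;

// Hypothetical, simplified stand-in for SMILA's Crawler interface:
// references are plain Strings instead of DataReference objects.
interface SimpleCrawler {
  void initialize(List<String> source);

  String[] getNext(); // returns null when no more references exist

  void close();
}

// Returns up to batchSize references per call; batchSize 1 behaves like an iterator.
class BatchingCrawler implements SimpleCrawler {
  private final int batchSize;
  private List<String> items;
  private int position;

  BatchingCrawler(int batchSize) {
    if (batchSize < 1) {
      throw new IllegalArgumentException("batchSize must be >= 1");
    }
    this.batchSize = batchSize;
  }

  @Override
  public void initialize(List<String> source) {
    this.items = new ArrayList<>(source);
    this.position = 0;
  }

  @Override
  public String[] getNext() {
    if (position >= items.size()) {
      return null; // crawl exhausted
    }
    int end = Math.min(position + batchSize, items.size());
    String[] batch = items.subList(position, end).toArray(new String[0]);
    position = end;
    return batch;
  }

  @Override
  public void close() {
    items = null; // release resources held during the crawl
  }

  // Hypothetical helper showing the call order a controller might use:
  // initialize, call getNext() until it returns null, always close.
  static List<String> drain(SimpleCrawler crawler, List<String> source) {
    List<String> collected = new ArrayList<>();
    crawler.initialize(source);
    try {
      String[] batch;
      while ((batch = crawler.getNext()) != null) {
        collected.addAll(Arrays.asList(batch));
      }
    } finally {
      crawler.close();
    }
    return collected;
  }
}
```

Closing the crawler in a finally block mirrors the purpose of close(): open resources are released even if a call to getNext() fails.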

```java
/**
 * A callback interface to access metadata and attachments of crawled data.
 */
public interface CrawlerCallback {
  /**
   * Returns the MObject for the given id.
   *
   * @param id
   *          the record id
   * @return the MObject
   * @throws CrawlerException
   *           if any non critical error occurs
   * @throws CrawlerCriticalException
   *           if any critical error occurs
   */
  MObject getMObject(Id id) throws CrawlerException, CrawlerCriticalException;

  /**
   * Returns an array of String[] containing the names of the available attachments for the given id.
   *
   * @param id
   *          the record id
   * @return an array of String[] containing the names of the available attachments
   * @throws CrawlerException
   *           if any non critical error occurs
   * @throws CrawlerCriticalException
   *           if any critical error occurs
   */
  String[] getAttachmentNames(Id id) throws CrawlerException, CrawlerCriticalException;

  /**
   * Returns the attachment for the given Id and name pair.
   *
   * @param id
   *          the record id
   * @param name
   *          the name of the attachment
   * @return a byte[] containing the attachment
   * @throws CrawlerException
   *           if any non critical error occurs
   * @throws CrawlerCriticalException
   *           if any critical error occurs
   */
  byte[] getAttachment(Id id, String name) throws CrawlerException, CrawlerCriticalException;

  /**
   * Disposes the record with the given Id.
   *
   * @param id
   *          the record id
   */
  void dispose(Id id);
}
```
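A crawler has to keep the data behind each reference it hands out until that record has been fetched and disposed. The following sketch shows one possible way to back the callback methods with an in-memory map. The InMemoryCallback class and the register() and pendingCount() methods are hypothetical. So are the simplifications: String ids stand in for SMILA's Id, a Map of metadata stands in for MObject, and an IllegalStateException replaces CrawlerException.

```java
import java.util.HashMap;
import java.util.Map;
import java.util.concurrent.ConcurrentHashMap;

// Hypothetical, simplified mirror of CrawlerCallback: String ids and a
// metadata Map replace SMILA's Id and MObject types.
interface SimpleCrawlerCallback {
  Map<String, String> getMetadata(String id);

  String[] getAttachmentNames(String id);

  byte[] getAttachment(String id, String name);

  void dispose(String id);
}

class InMemoryCallback implements SimpleCrawlerCallback {
  // Data kept per crawled item until the consumer disposes it.
  private static final class Entry {
    final Map<String, String> metadata;
    final Map<String, byte[]> attachments;

    Entry(Map<String, String> metadata, Map<String, byte[]> attachments) {
      this.metadata = metadata;
      this.attachments = attachments;
    }
  }

  // Concurrent map, since references may be resolved from another thread.
  private final Map<String, Entry> pending = new ConcurrentHashMap<>();

  // Called by the crawler itself when it discovers an item.
  void register(String id, Map<String, String> metadata, Map<String, byte[]> attachments) {
    pending.put(id, new Entry(new HashMap<>(metadata), new HashMap<>(attachments)));
  }

  private Entry lookup(String id) {
    Entry entry = pending.get(id);
    if (entry == null) {
      // A real crawler would throw CrawlerException here.
      throw new IllegalStateException("unknown or disposed id: " + id);
    }
    return entry;
  }

  @Override
  public Map<String, String> getMetadata(String id) {
    return lookup(id).metadata;
  }

  @Override
  public String[] getAttachmentNames(String id) {
    return lookup(id).attachments.keySet().toArray(new String[0]);
  }

  @Override
  public byte[] getAttachment(String id, String name) {
    return lookup(id).attachments.get(name);
  }

  @Override
  public void dispose(String id) {
    pending.remove(id); // free memory once the record is no longer needed
  }

  int pendingCount() {
    return pending.size();
  }
}
```

The dispose() call matters here. Without it, every item the crawler has ever seen would stay in memory for the whole crawl.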

For completeness, here is the DataReference interface:

```java
/**
 * A proxy object interface to a record provided by a Crawler. The object contains the Id and the hash value of the
 * record but no additional data. The complete record can be loaded via the CrawlerCallback.
 */
public interface DataReference {
  /**
   * Returns the Id of the referenced record.
   *
   * @return the Id of the referenced record
   */
  Id getId();

  /**
   * Returns the hash of the referenced record as a String.
   *
   * @return the hash of the referenced record as a String
   */
  String getHash();

  /**
   * Returns the complete Record object via the CrawlerCallback.
   *
   * @return the complete record
   * @throws CrawlerException
   *           if any non critical error occurs
   * @throws CrawlerCriticalException
   *           if any critical error occurs
   * @throws InvalidTypeException
   *           if the hash attribute cannot be set
   */
  Record getRecord() throws CrawlerException, CrawlerCriticalException, InvalidTypeException;

  /**
   * Disposes the referenced record object.
   */
  void dispose();
}
```

Architecture

Crawlers are managed and instantiated by the CrawlerController, which communicates with a Crawler only through the Crawler interface. The Crawler's getNext() method returns DataReference objects to the CrawlerController. DataReference is itself an interface, implemented by class org.eclipse.smila.connectivity.framework.util.internal.DataReferenceImpl. As the name suggests, a DataReference is only a reference to data provided by the Crawler. This design is mainly motivated by performance: because of DeltaIndexing, it may not be necessary to transfer all the data from the Crawler to the CrawlerController and on to the ConnectivityManager. A DataReference therefore contains only the minimum data needed to perform DeltaIndexing: an Id and a hash token. To access the complete object, it provides the method getRecord(), which returns a complete Record containing the Id, attributes, annotations, and attachments. To create this Record, the DataReference communicates with the Crawler via the CrawlerCallback interface, since each DataReference holds a reference to the Crawler that created it.
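Here is a rough sketch of why lightweight references pay off. It assumes the consumer compares each reference's hash with the hash stored during the previous run. It then calls getRecord(), which goes back to the crawler through the callback, only for new or changed items. Everything here is a hypothetical simplification. SimpleDataReference, the Function-based callback, the Map-based record and DeltaIndexSketch stand in for SMILA's DataReference, CrawlerCallback, Record and DeltaIndexing service. They are not the framework's actual classes.

```java
import java.util.ArrayList;
import java.util.HashMap;
import java.util.List;
import java.util.Map;
import java.util.function.Function;

// Hypothetical lightweight reference: only id and hash, plus a link back to
// the crawler (here a Function) that builds the full record on demand.
class SimpleDataReference {
  private final String id;
  private final String hash;
  private final Function<String, Map<String, String>> callback;

  SimpleDataReference(String id, String hash, Function<String, Map<String, String>> callback) {
    this.id = id;
    this.hash = hash;
    this.callback = callback;
  }

  String getId() {
    return id;
  }

  String getHash() {
    return hash;
  }

  // Loads the complete record lazily via the crawler callback.
  Map<String, String> getRecord() {
    return callback.apply(id);
  }
}

// Hypothetical stand-in for delta indexing: remembers the last seen hash per id.
class DeltaIndexSketch {
  private final Map<String, String> knownHashes = new HashMap<>();

  // Returns full records only for new or changed references; unchanged
  // references are skipped without ever fetching their content.
  List<Map<String, String>> process(List<SimpleDataReference> refs) {
    List<Map<String, String>> toIndex = new ArrayList<>();
    for (SimpleDataReference ref : refs) {
      String previous = knownHashes.get(ref.getId());
      if (!ref.getHash().equals(previous)) {
        toIndex.add(ref.getRecord());
        knownHashes.put(ref.getId(), ref.getHash());
      }
    }
    return toIndex;
  }
}
```

On a second run over mostly unchanged data, most references are answered from the stored hashes alone. Their content never has to be transferred.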

The following chart shows the Crawler architecture and how data is shared with the CrawlerController:

[Figure: Crawler Architecture]

Package org.eclipse.smila.connectivity.framework.util provides factory classes that crawlers can use to create Ids, hashes, and DataReference objects. Further utility classes are planned that will make it easy to implement Crawlers using an Iterator or Producer/Consumer pattern.
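Until such utilities exist, the Producer/Consumer pattern can be sketched in plain Java. In this sketch a background thread discovers items and puts them on a bounded queue. getNext() blocks until at least one item is available, then hands out everything that has accumulated, up to maxBatch. The class, the String items, the queue capacity and the end-marker technique are all assumptions for illustration. They are not the planned SMILA utility classes.

```java
import java.util.ArrayList;
import java.util.List;
import java.util.concurrent.BlockingQueue;
import java.util.concurrent.LinkedBlockingQueue;

// Hypothetical producer/consumer crawler sketch.
class ProducerConsumerCrawler {
  private static final int CAPACITY = 64; // bounded queue gives back-pressure
  // End-of-crawl marker, compared by identity so no real item can collide with it.
  private static final String END = new String("<end>");

  private final BlockingQueue<String> queue = new LinkedBlockingQueue<>(CAPACITY);
  private final int maxBatch;
  private Thread producer;
  private boolean finished;

  ProducerConsumerCrawler(int maxBatch) {
    if (maxBatch < 1) {
      throw new IllegalArgumentException("maxBatch must be >= 1");
    }
    this.maxBatch = maxBatch;
  }

  // Starts the producer; 'source' stands in for a file system walk, web fetch, etc.
  void initialize(List<String> source) {
    producer = new Thread(() -> {
      try {
        for (String item : source) {
          queue.put(item); // blocks while the queue is full
        }
        queue.put(END);
      } catch (InterruptedException e) {
        Thread.currentThread().interrupt(); // crawl aborted via close()
      }
    });
    producer.start();
  }

  // Returns 1..maxBatch items, or null once the crawl is finished.
  String[] getNext() {
    if (finished) {
      return null;
    }
    String first;
    try {
      first = queue.take(); // wait for at least one item
    } catch (InterruptedException e) {
      Thread.currentThread().interrupt();
      finished = true;
      return null;
    }
    if (first == END) {
      finished = true;
      return null;
    }
    List<String> batch = new ArrayList<>();
    batch.add(first);
    queue.drainTo(batch, maxBatch - 1); // take what is ready, without blocking
    // The single producer puts END last, so it can only be the final element.
    if (batch.get(batch.size() - 1) == END) {
      batch.remove(batch.size() - 1);
      finished = true;
    }
    return batch.toArray(new String[0]);
  }

  // Stops the producer (if still running) and waits for it to terminate.
  void close() {
    if (producer != null) {
      producer.interrupt();
      try {
        producer.join();
      } catch (InterruptedException e) {
        Thread.currentThread().interrupt();
      }
    }
  }
}
```

This design is why getNext() returns an array. The batch size simply reflects how much work the producer has completed since the previous call.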

Configuration

A Crawler is started with a specific, named configuration that defines what information is to be crawled (e.g. content, kinds of metadata) and where to find that data (e.g. file system path, JDBC connection string). See the documentation of each Crawler for details on its configuration options.

Crawler lifecycle

The CrawlerController manages the lifecycle of each Crawler (e.g. start, stop, abort) and may instantiate multiple Crawlers concurrently, even several of the same type.
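This sketch shows how a controller might run several crawler instances concurrently, including two of the same type, and abort one of them. MiniCrawler, FixedCrawler and MiniCrawlerController are hypothetical classes that only mimic the lifecycle described above. The actual CrawlerController API may differ. Each job gets a fresh crawler instance from a factory and runs on its own pooled thread. Aborting interrupts that thread, and the crawl loop checks the interrupt flag between batches.

```java
import java.util.Map;
import java.util.concurrent.ConcurrentHashMap;
import java.util.concurrent.ExecutionException;
import java.util.concurrent.ExecutorService;
import java.util.concurrent.Executors;
import java.util.concurrent.Future;
import java.util.function.Supplier;

// Hypothetical minimal crawler: returns batches until exhausted (null).
interface MiniCrawler {
  String[] getNext();

  void close();
}

// Hypothetical crawler that emits a fixed number of two-element batches.
class FixedCrawler implements MiniCrawler {
  private int remaining;

  FixedCrawler(int batches) {
    this.remaining = batches;
  }

  @Override
  public String[] getNext() {
    if (remaining == 0) {
      return null;
    }
    remaining--;
    return new String[] {"ref-" + remaining + "a", "ref-" + remaining + "b"};
  }

  @Override
  public void close() {
    // nothing to release in this sketch
  }
}

// Hypothetical controller: one thread per crawl job, keyed by job name.
class MiniCrawlerController {
  private final ExecutorService pool = Executors.newCachedThreadPool();
  private final Map<String, Future<Integer>> jobs = new ConcurrentHashMap<>();

  // Starts a fresh crawler instance for the job; jobs may share a crawler type.
  void start(String jobName, Supplier<MiniCrawler> factory) {
    jobs.put(jobName, pool.submit(() -> {
      MiniCrawler crawler = factory.get();
      int count = 0;
      try {
        String[] batch;
        while (!Thread.currentThread().isInterrupted() && (batch = crawler.getNext()) != null) {
          count += batch.length; // a real controller would pass these on
        }
      } finally {
        crawler.close(); // runs on normal end and on abort
      }
      return count;
    }));
  }

  // Aborts a running job by interrupting its thread.
  void abort(String jobName) {
    Future<Integer> job = jobs.get(jobName);
    if (job != null) {
      job.cancel(true);
    }
  }

  // Waits for a job and returns the number of references it processed.
  int awaitResult(String jobName) {
    try {
      return jobs.get(jobName).get();
    } catch (InterruptedException e) {
      Thread.currentThread().interrupt();
      throw new IllegalStateException(e);
    } catch (ExecutionException e) {
      throw new IllegalStateException(e.getCause());
    }
  }

  void shutdown() {
    pool.shutdownNow();
  }
}
```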