
SMILA/5 Minutes Tutorial

| Line 2: | Line 2: | ||

[[Category:HowTo]] | [[Category:HowTo]] | ||

| − | This page contains installation instructions for the SMILA application | + | This page contains installation instructions for the SMILA application and helps you with your first steps in SMILA. |

| − | == Download and unpack SMILA == | + | == Download and unpack the SMILA application. == |

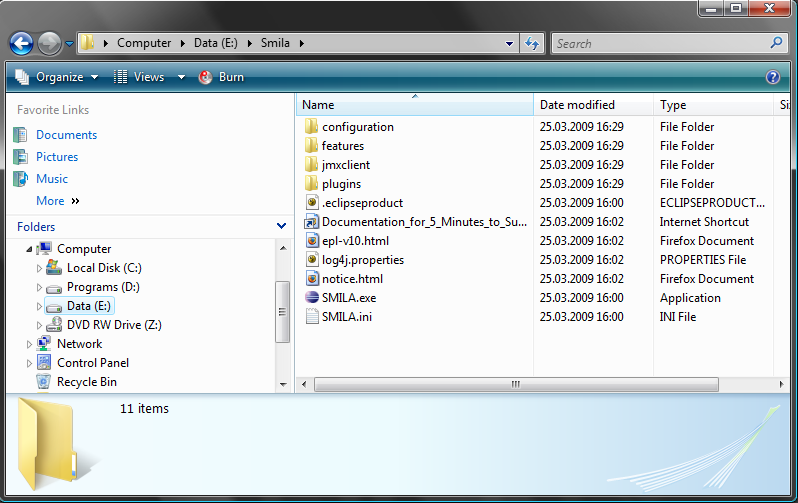

| − | [http://www.eclipse.org/smila/downloads.php | + | After [http://www.eclipse.org/smila/downloads.php downloading] and unpacking you should have the following folder structure. |

[[Image:Installation.png]] | [[Image:Installation.png]] | ||

| Line 14: | Line 14: | ||

To be able to follow the steps below, check the following preconditions: | To be able to follow the steps below, check the following preconditions: | ||

| − | * | + | * Note: To run SMILA you need to have jre executable added to your PATH environment variable. The jvm version should be at least java 5. <br> Either: |

** add the path of your local JRE executable to the PATH environment variable <br>or<br> | ** add the path of your local JRE executable to the PATH environment variable <br>or<br> | ||

** add the argument <tt>-vm <path/to/jre/executable></tt> right at the top of the file <tt>SMILA.ini</tt>. <br>Make sure that <tt>-vm</tt> is indeed the first argument in the file and that there is a line break after it. It should look similar to the following: | ** add the argument <tt>-vm <path/to/jre/executable></tt> right at the top of the file <tt>SMILA.ini</tt>. <br>Make sure that <tt>-vm</tt> is indeed the first argument in the file and that there is a line break after it. It should look similar to the following: | ||

| Line 29: | Line 29: | ||

</tt> | </tt> | ||

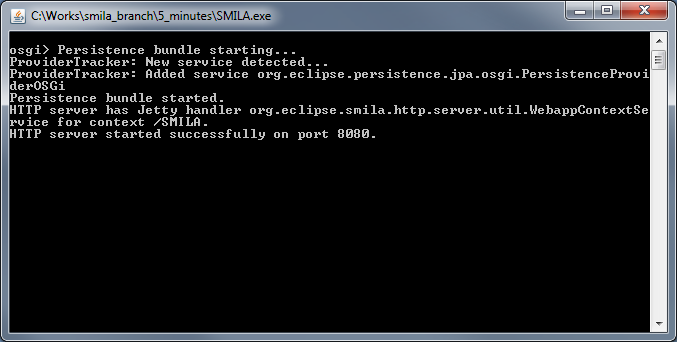

| − | == Start SMILA == | + | == Start the SMILA engine. == |

| − | To start | + | To start SMILA engine double-click on SMILA.exe or open an command line, navigate to the directory that contains extracted files, and run SMILA executable. Wait until the engine is fully started. If everything is OK, you should see output similar to the one on the following screenshot: |

[[Image:Smila-console-0.8.0.png]] | [[Image:Smila-console-0.8.0.png]] | ||

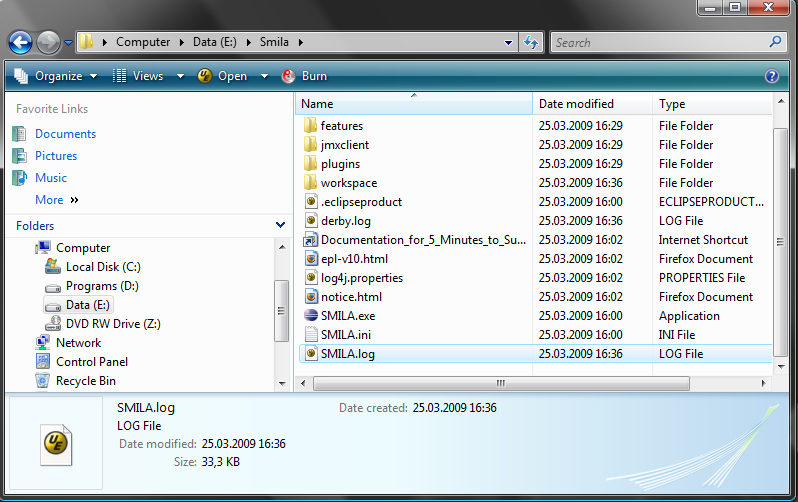

== Check the log file == | == Check the log file == | ||

| − | + | You can check what's happening in the background by opening the SMILA log file in an editor. This file is named <tt>SMILA.log</tt> and can be found in the same directory as the SMILA executable. | |

[[Image:Smila-log.png]] | [[Image:Smila-log.png]] | ||

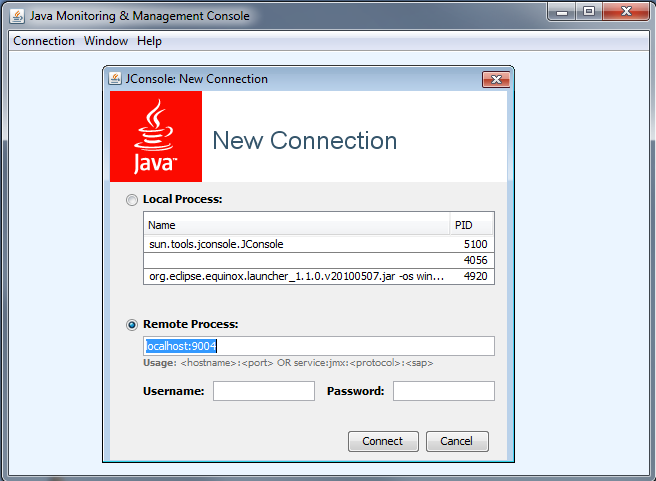

| − | == | + | == Configure crawling jobs. == |

| − | + | Now when the SMILA engine is up and running we can start the crawling jobs. Crawling jobs are managed over the JMX protocol, that means that we can connect to SMILA with a JMX client of your choice. We will use JConsole for that purpose since this JMX client is already available as a default with the Sun Java distribution. | |

Start the JConsole executable in your JDK distribution (<tt><JAVA_HOME>/bin/jconsole</tt>). If the client is up and running, connect to <tt>localhost:9004</tt>. | Start the JConsole executable in your JDK distribution (<tt><JAVA_HOME>/bin/jconsole</tt>). If the client is up and running, connect to <tt>localhost:9004</tt>. | ||

| Line 47: | Line 47: | ||

[[Image:Jconsole.png-0.8.0.png]] | [[Image:Jconsole.png-0.8.0.png]] | ||

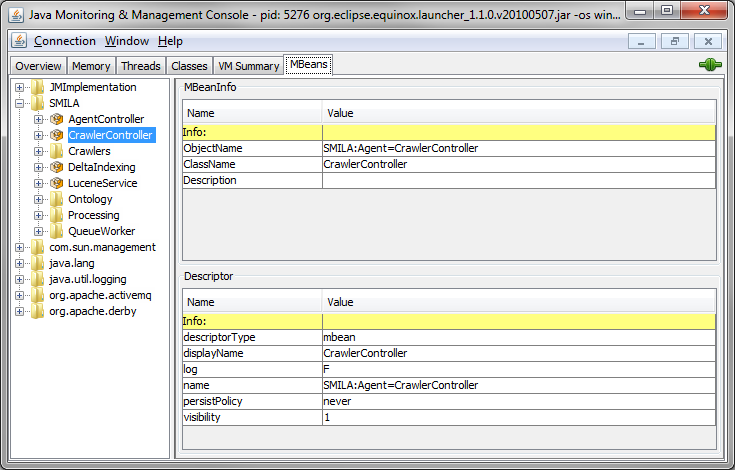

| − | Next, switch to the ''MBeans'' tab, expand the | + | Next, switch to the ''MBeans'' tab, expand the SMILA node in the ''MBeans'' tree on the left side of the window, and click the <tt>CrawlerController</tt> node. This node is used to manage and monitor all crawling activities. |

[[Image:Mbeans-overview-0.8.0.png]] | [[Image:Mbeans-overview-0.8.0.png]] | ||

| − | == Start the | + | == Start the file system crawler. == |

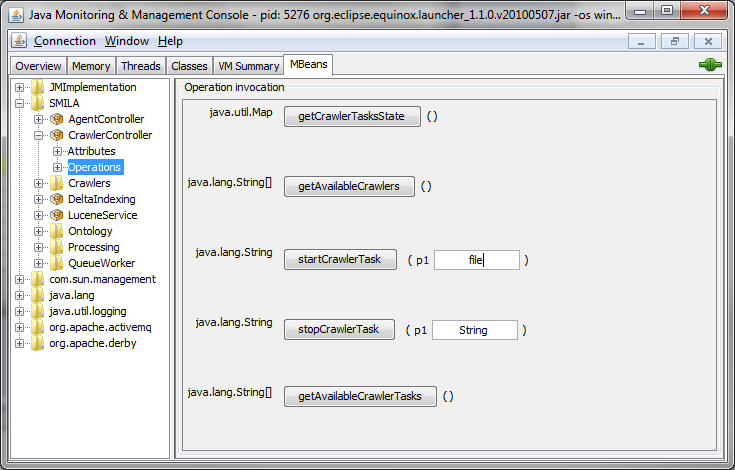

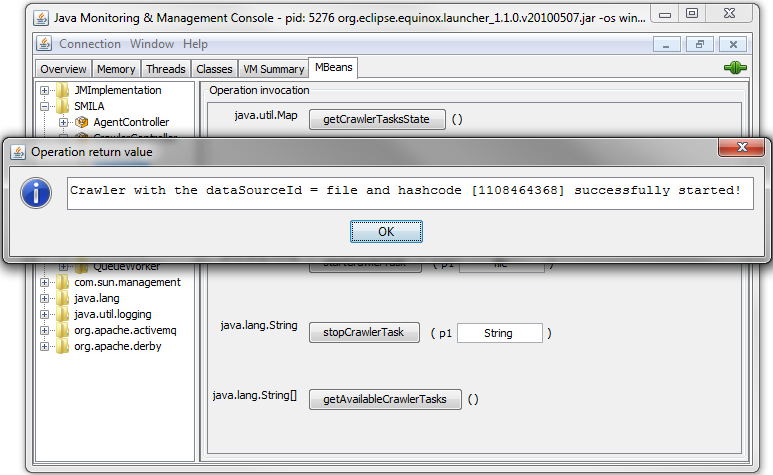

| − | To start | + | To start file system crawler, open the ''Operations'' tab on the right pane, type "file" into text field next to the <tt>startCrawl</tt> button and click on <tt>startCrawl</tt> button. |

[[Image:Start-file-crawl-0.8.0.png]] | [[Image:Start-file-crawl-0.8.0.png]] | ||

| Line 60: | Line 60: | ||

[[Image:Start-crawl-file-result-0.8.0.png]] | [[Image:Start-crawl-file-result-0.8.0.png]] | ||

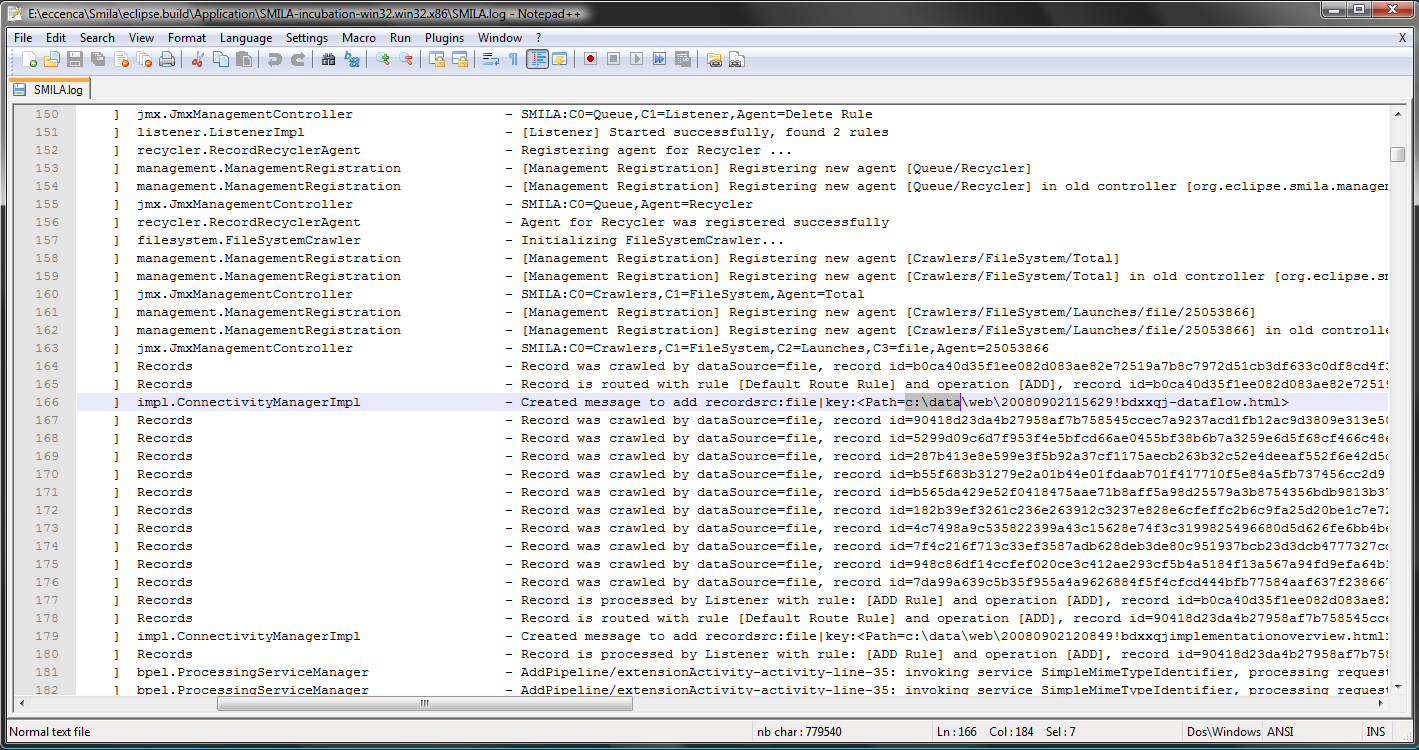

| − | Now | + | Now we can check the log file to see what happened: |

[[Image:File-crawl-log.png]] | [[Image:File-crawl-log.png]] | ||

| − | The | + | The configuration of filesystem crawler crawls the folder c:\data by default. Therefore, it is very likely that do not receive the above results indicating the successful indexing but rather an error message similar to the one shown below, except where it happens that you accidently have a folder <tt>c:\data</tt> on your system that the crawler can find and index: |

<tt> | <tt> | ||

| Line 70: | Line 70: | ||

</tt> | </tt> | ||

| − | The error message above states that the crawler tried to index | + | The error message above states that the crawler tried to index folder at <tt>c:\data</tt> but was not able to find it. To solve this, let's create a folder with sample data, say <tt>c:\data</tt>, put some dummy text files into it, and configure the file system crawler to index it. |

| − | == Configure the | + | == Configure the file system crawler. == |

| − | To | + | To configure the crawler to index other directories, open the configuration file of the crawler at <tt>configuration/org.eclipse.smila.connectivity.framework/file.xml</tt>. Modify the ''BaseDir'' attribute by setting its value to an absolute path that points to your new directory. Don't forget to save the file.: |

<tt> | <tt> | ||

| Line 87: | Line 87: | ||

{|width="100%" style="background-color:#ffcccc; padding-left:30px;" | {|width="100%" style="background-color:#ffcccc; padding-left:30px;" | ||

| | | | ||

| − | Note: Currently | + | Note: Currently only plain text and html files are crawled and indexed correctly by SMILA crawlers. |

|} | |} | ||

| − | == | + | == Searching on the indices. == |

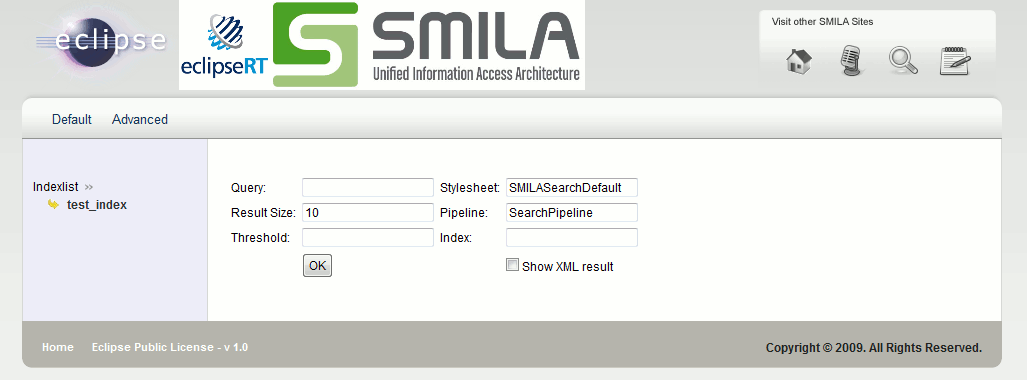

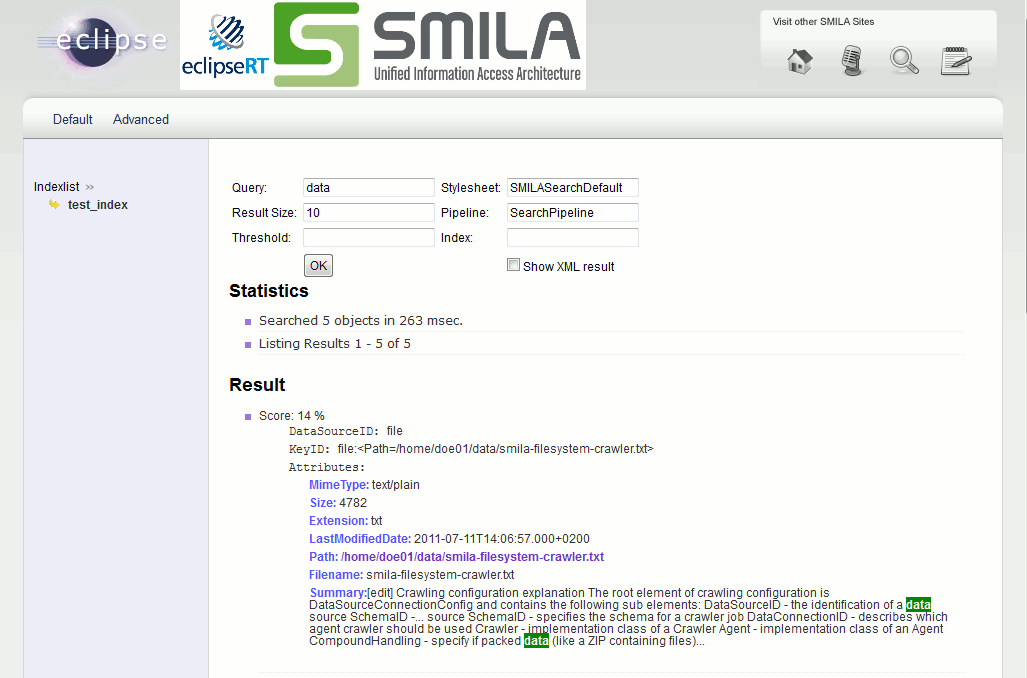

To search on the index which was created by the crawlers, point your browser to <tt>http://localhost:8080/SMILA/search</tt>. There are currently two stylesheets from which you can select by clicking the respective links in the upper left corner of the header bar: The ''Default'' stylesheet shows a reduced search form with text fields like ''Query'', ''Result Size'', and ''Index Name'', adequate to query the full-text content of the indexed documents. The ''Advanced'' stylesheet in turn provides a more detailed search form with text fields for meta-data search like for example ''Path'', ''MimeType'', ''Filename'', and other document attributes. | To search on the index which was created by the crawlers, point your browser to <tt>http://localhost:8080/SMILA/search</tt>. There are currently two stylesheets from which you can select by clicking the respective links in the upper left corner of the header bar: The ''Default'' stylesheet shows a reduced search form with text fields like ''Query'', ''Result Size'', and ''Index Name'', adequate to query the full-text content of the indexed documents. The ''Advanced'' stylesheet in turn provides a more detailed search form with text fields for meta-data search like for example ''Path'', ''MimeType'', ''Filename'', and other document attributes. | ||

| Line 103: | Line 103: | ||

[[Image:Searching-by-filename.png]] | [[Image:Searching-by-filename.png]] | ||

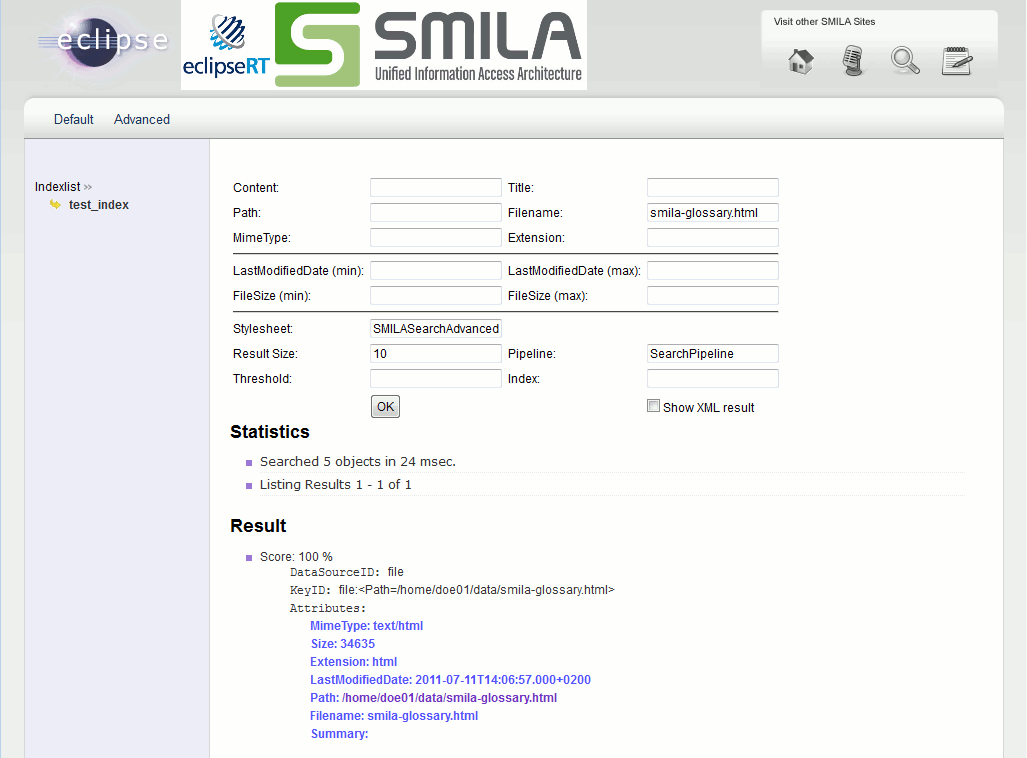

| − | == Configure and run the | + | == Configure and run the web crawler. == |

| − | Now that we | + | Now that we know how to start and configure the file system crawler and how to search on indices configuring and running the web crawler is straightforward: |

| − | + | The configuration file of the web crawler is located at <tt>configuration/org.eclipse.smila.connectivity.framework directory</tt> and is named <tt>web.xml</tt>: | |

[[Image:Webcrawler-config.png]] | [[Image:Webcrawler-config.png]] | ||

| − | By default | + | By default the web crawler is configured to index the URL ''http://wiki.eclipse.org/SMILA''. To change this, open the file in an editor of your choice and set the content of the <tt><Seed></tt> element to the desired web site. Detailed information on the configuration of the web crawler is also available at the [[SMILA/Documentation/Web_Crawler|Web crawler]] configuration page. |

<source lang="xml"> | <source lang="xml"> | ||

| Line 123: | Line 123: | ||

</source> | </source> | ||

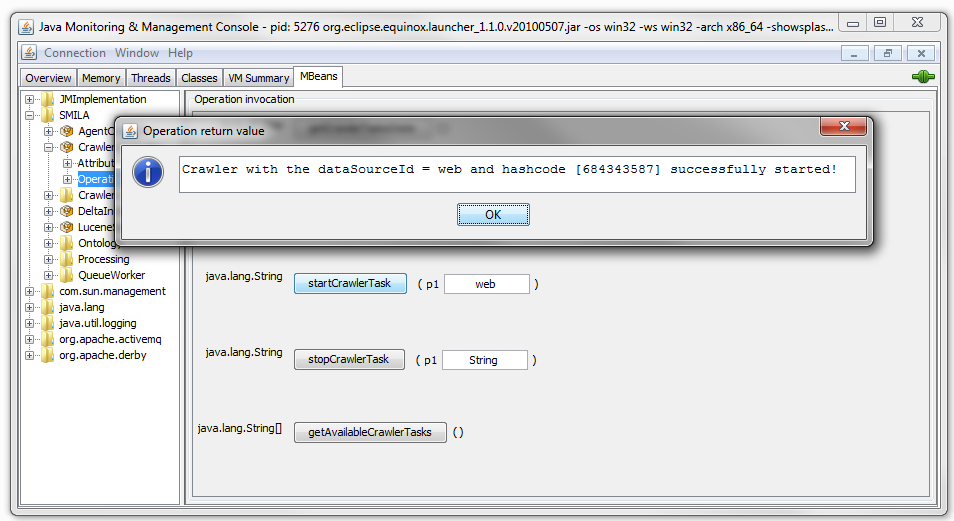

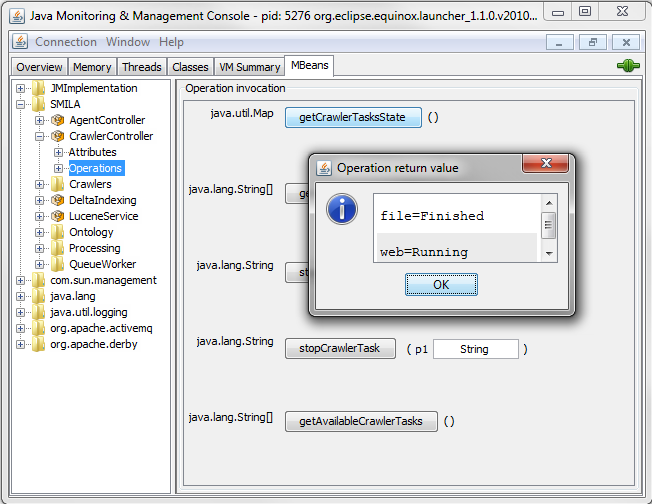

| − | To start the crawling process, save the configuration file, | + | To start the crawling process, save the configuration file, open the ''Operations'' tab in JConsole again, type "web" into the text field next to the <tt>startCrawl</tt> button, and click the button. |

| + | Note: The ''Operations'' tab in JConsole also provides buttons to stop a crawler, get the list of active crawlers and the current status of a particular crawling job. | ||

[[Image:Starting-web-crawler-0.8.0.png]] | [[Image:Starting-web-crawler-0.8.0.png]] | ||

| − | + | Default limit for downloaded documents is set to 1000 into webcrawler configuration, so it can take a while for web crawling job to finish. You can stop crawling job manually by typing "web" next to <tt>stopCrawl</tt> button and then clicking on this button. | |

| + | As an example the following screenshot shows the result after the <tt>getActiveCrawlsStatus</tt> button has been clicked while the web crawler is running: | ||

[[Image:SMILA-One-active-crawl-found-0.8.0.png]] | [[Image:SMILA-One-active-crawl-found-0.8.0.png]] | ||

| Line 133: | Line 135: | ||

If you do not want to wait, you may as well stop the crawling job manually. In order to do this, type "web" into the text field next to the (<tt>stopCrawlerTask</tt>) button, then click this button. | If you do not want to wait, you may as well stop the crawling job manually. In order to do this, type "web" into the text field next to the (<tt>stopCrawlerTask</tt>) button, then click this button. | ||

| − | + | When the web crawler's job is finished, you can search on the generated index using the search form to [[SMILA/Documentation_for_5_Minutes_to_Success#Search on index|search on the generated index]]. | |

[[Category:SMILA]] | [[Category:SMILA]] | ||

| Line 139: | Line 141: | ||

== Manage CrawlerController using the JMX Client == | == Manage CrawlerController using the JMX Client == | ||

| − | + | In addition to managing crawling jobs with JConsole it's also possible to use jmxclient from SMILA distribution. Jmxclient is a console application that allows to manage crawl jobs and create scripts for batch crawlers execution. For more information please check [[SMILA/Documentation/Management#JMX_Client|jmxclient documentation ]]. Jmxclient application is located into <tt>jmxclient</tt> directory. You should use appropriate run script (run.bat or run.sh) to start the application. | |

| − | For example, to start | + | For example, to start file system crawler use following command: |

| − | < | + | <code> |

run crawl file | run crawl file | ||

| − | </ | + | </code> |

For more information please check the [[SMILA/Documentation/Management#JMX_Client|JMX Client documentation]]. | For more information please check the [[SMILA/Documentation/Management#JMX_Client|JMX Client documentation]]. | ||

| − | == 5 Minutes for changing the workflow == | + | == 5 Minutes for changing the workflow. == |

| − | In previous sections all data collected by crawlers was processed with the same workflow and | + | In previous sections all data collected by crawlers was processed with the same workflow and indexed into the same index, test_index. |

| − | It is possible | + | It is possible to configure SMILA so that data from different data sources will go through different workflows and will be indexed into different indices. This will require more advanced configuration than before but still is quite simple. |

| − | + | Lets create an additional workflow for webcrawler records so that the webcrawler data will be indexed into a separate index, say "web_index". | |

=== Modify Listener rules === | === Modify Listener rules === | ||

| − | The first step includes modifying and extending the Listener rules so that webcrawler records are to be processed by their own BPEL workflow. For more information | + | The first step includes modifying and extending the Listener rules so that webcrawler records are to be processed by their own BPEL workflow. For more information about Listener, please see the section [[SMILA/Documentation/QueueWorker/Listener|Listener]] of the [[SMILA/Documentation/QueueWorker|QueueWorker]] documentation. |

Open the configuration of the Listener from <tt>configuration/org.eclipse.smila.connectivity.queue.worker.jms/QueueWorkerListenerConfig.xml</tt> and edit the <tt><Condition></tt> tag of the existing ''ADD Rule'' to skip webcrawler data. The result should be as follows: | Open the configuration of the Listener from <tt>configuration/org.eclipse.smila.connectivity.queue.worker.jms/QueueWorkerListenerConfig.xml</tt> and edit the <tt><Condition></tt> tag of the existing ''ADD Rule'' to skip webcrawler data. The result should be as follows: | ||

| Line 170: | Line 172: | ||

</Rule> | </Rule> | ||

</source> | </source> | ||

| − | Now add the following new rule: | + | Now add the following new rule to this file: |

<source lang="xml"> | <source lang="xml"> | ||

<Rule Name="Web ADD Rule" WaitMessageTimeout="10" Threads="2"> | <Rule Name="Web ADD Rule" WaitMessageTimeout="10" Threads="2"> | ||

| Line 384: | Line 386: | ||

Ok, now it seems that we have finally finished configuring SMILA for using separate workflows for file system and web crawling and index data from these crawlers into different indices. | Ok, now it seems that we have finally finished configuring SMILA for using separate workflows for file system and web crawling and index data from these crawlers into different indices. | ||

Here is what we have done so far: | Here is what we have done so far: | ||

| − | # | + | # Modified Listener rules in order to use different workflows for web and file system crawling. |

| − | # | + | # Created a new BPEL workflow for Web crawler data. |

# We added the <tt>web_index</tt> index to the Lucence configuration. | # We added the <tt>web_index</tt> index to the Lucence configuration. | ||

Now we can start SMILA again and observe what will happen when starting the Web crawler. | Now we can start SMILA again and observe what will happen when starting the Web crawler. | ||

| Line 391: | Line 393: | ||

{|width="100%" style="background-color:#d8e4f1; padding-left:30px;" | {|width="100%" style="background-color:#d8e4f1; padding-left:30px;" | ||

| | | | ||

| − | It | + | It is very important to shutdown SMILA engine and restart afterwards because modified configurations will load only on startup. |

|} | |} | ||

| Line 400: | Line 402: | ||

== Configuration overview == | == Configuration overview == | ||

| − | SMILA configuration files are | + | SMILA configuration files are placed into <tt>configuration</tt> directory of the SMILA application. |

| − | The following figure shows the configuration files relevant to this tutorial, regarding SMILA components and the data lifecycle. | + | The following figure shows the configuration files relevant to this tutorial, regarding SMILA components and the data lifecycle. SMILA component names are black-colored, directories containing configuration files and filenames are blue-colored. |

[[Image:Smila-configuration-overview.jpg]] | [[Image:Smila-configuration-overview.jpg]] | ||

Revision as of 08:19, 20 April 2011

This page contains installation instructions for the SMILA application and helps you with your first steps in SMILA.

Contents

- 1 Download and unpack the SMILA application.

- 2 Check the preconditions

- 3 Start the SMILA engine.

- 4 Check the log file

- 5 Configure crawling jobs.

- 6 Start the file system crawler.

- 7 Configure the file system crawler.

- 8 Searching on the indices.

- 9 Configure and run the web crawler.

- 10 Manage CrawlerController using the JMX Client

- 11 5 Minutes for changing the workflow.

- 12 Configuration overview

Download and unpack the SMILA application.

After downloading and unpacking, you should have the following folder structure:

Check the preconditions

To be able to follow the steps below, check the following preconditions:

- To run SMILA, a Java Runtime Environment (JRE) of version 5 or later must be available. Either:
  - add the path of your local JRE executable to the PATH environment variable, or
  - add the argument -vm <path/to/jre/executable> right at the top of the file SMILA.ini. Make sure that -vm is indeed the first argument in the file and that there is a line break after it. It should look similar to the following:

    -vm
    d:/java/jre6/bin/java
    ...

- Since we are going to use JConsole as the JMX client later in this tutorial, it is recommended to install and use a Java SE Development Kit (JDK) and not just a Java SE Runtime Environment (JRE), because the latter does not include this application.

- When using the Linux distribution of SMILA, make sure that the files SMILA and jmxclient/run.sh have executable permissions. If not, set the permissions by running the following commands in a console:

    chmod +x ./SMILA
    chmod +x ./jmxclient/run.sh
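The Java-related conditions above can be checked with a small script before the first start. The following is a minimal Python sketch that is not part of SMILA: it looks for java on the PATH and checks that -vm is on the first line of SMILA.ini with the JVM path on the next line. It only prints the java -version banner and does not parse the version number, because the banner format differs between Java vendors and versions.

```python
import shutil
import subprocess


def java_on_path():
    """Return the full path of the java executable found on PATH, or None."""
    return shutil.which("java")


def vm_arg_is_first(ini_text):
    """Check the SMILA.ini layout described above: -vm alone on the first
    line, followed by the JVM path (not another option) on the next line."""
    lines = ini_text.splitlines()
    return (
        len(lines) >= 2
        and lines[0].strip() == "-vm"
        and lines[1].strip() != ""
        and not lines[1].strip().startswith("-")
    )


if __name__ == "__main__":
    java = java_on_path()
    print("java on PATH:", java or "not found")
    if java:
        # java -version writes its banner to stderr, not stdout.
        result = subprocess.run([java, "-version"], capture_output=True, text=True)
        print(result.stderr.strip())
    try:
        with open("SMILA.ini", encoding="utf-8") as ini:
            print("-vm configured as first argument:", vm_arg_is_first(ini.read()))
    except FileNotFoundError:
        print("SMILA.ini not found in the current directory")
```

If java is not found and vm_arg_is_first() returns False, SMILA will most likely fail to start, so fix one of the two before continuing.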

Start the SMILA engine.

To start the SMILA engine, double-click SMILA.exe, or open a command line, navigate to the directory that contains the extracted files, and run the SMILA executable. Wait until the engine has fully started. If everything is OK, you should see output similar to the following screenshot:
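If you start SMILA from a script instead of by double-clicking, you need a way to wait until the engine is ready. The following Python sketch uses a heuristic that is an assumption, not a documented SMILA readiness signal: it polls the JMX port 9004 (the port JConsole connects to later in this tutorial) until it accepts TCP connections. An open port suggests that the engine is up, but the console output and SMILA.log remain the authoritative indicators.

```python
import socket
import time


def wait_for_port(host="localhost", port=9004, timeout=120.0, interval=1.0):
    """Poll until a TCP connection to host:port succeeds or `timeout` seconds pass.

    Heuristic only: an open JMX port suggests, but does not prove, that the
    SMILA engine has finished starting.
    """
    deadline = time.monotonic() + timeout
    while True:
        try:
            # Connect and immediately close again; we only test reachability.
            with socket.create_connection((host, port), timeout=interval):
                return True
        except OSError:
            if time.monotonic() >= deadline:
                return False
            time.sleep(interval)
```

With the default values, wait_for_port() waits for up to two minutes and returns True as soon as localhost:9004 accepts a connection.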

Check the log file

You can check what's happening in the background by opening the SMILA log file in an editor. This file is named SMILA.log and can be found in the same directory as the SMILA executable.
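To spot problems without scrolling through the whole log, you can filter SMILA.log for error entries. The Python sketch below assumes only what the error example later in this tutorial shows, namely that the log level appears as the upper-case word ERROR in the line; adjust the pattern if your log layout differs.

```python
import re

# Matches lines such as
# "... ERROR impl.CrawlThread - org.eclipse.smila...CrawlerCriticalException: ..."
ERROR_PATTERN = re.compile(r"\bERROR\b")


def error_lines(lines):
    """Yield (line_number, text) for every log line that contains ERROR."""
    for number, line in enumerate(lines, start=1):
        if ERROR_PATTERN.search(line):
            yield number, line.rstrip("\n")


if __name__ == "__main__":
    try:
        with open("SMILA.log", encoding="utf-8", errors="replace") as log:
            for number, text in error_lines(log):
                print(f"{number}: {text}")
    except FileNotFoundError:
        print("SMILA.log not found; run this from the SMILA directory")
```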

Configure crawling jobs.

Now that the SMILA engine is up and running, we can start the crawling jobs. Crawling jobs are managed over the JMX protocol, which means that you can connect to SMILA with a JMX client of your choice. We will use JConsole for this purpose, since this JMX client is included by default in the Sun JDK.

Start the JConsole executable in your JDK distribution (<JAVA_HOME>/bin/jconsole). If the client is up and running, connect to localhost:9004.

Next, switch to the MBeans tab, expand the SMILA node in the MBeans tree on the left side of the window, and click the CrawlerController node. This node is used to manage and monitor all crawling activities.

Start the file system crawler.

To start the file system crawler, open the Operations tab in the right pane, type "file" into the text field next to the startCrawl button, and click the startCrawl button.

You should receive a message similar to the following, indicating that the crawler has been successfully started:

Now we can check the log file to see what happened:

By default, the file system crawler is configured to crawl the folder c:\data. Therefore, unless you happen to have a folder c:\data on your system that the crawler can find and index, you will most likely not see the above results indicating successful indexing, but rather an error message similar to the following:

... ERROR impl.CrawlThread - org.eclipse.smila.connectivity.framework.CrawlerCriticalException: Folder "c:\data" is not found

The error message above states that the crawler tried to index the folder c:\data but was not able to find it. To solve this, either create the folder c:\data and put some dummy text files into it, or put the dummy files into a folder of your choice and configure the file system crawler to index that folder, as described in the next section.
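Creating the sample folder can also be scripted. The Python sketch below writes a few small text files that all contain the word "data", which the first search example in this tutorial looks for. The file names sample1.txt, sample2.txt, and so on are hypothetical; any plain text files will do.

```python
from pathlib import Path


def create_sample_data(base_dir, count=3):
    """Create base_dir (if needed) and write `count` small .txt files into it.

    Each file contains the word "data" once, so a full-text search for
    that term should find every file after indexing.
    """
    base = Path(base_dir)
    base.mkdir(parents=True, exist_ok=True)
    created = []
    for i in range(1, count + 1):
        path = base / f"sample{i}.txt"  # hypothetical file names
        path.write_text(
            f"Sample document {i} with some test data for SMILA.\n", encoding="utf-8"
        )
        created.append(path)
    return created
```

For example, create_sample_data(r"c:\data") creates the crawler's default folder, so the file system crawler finds it without any configuration change.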

Configure the file system crawler.

To configure the crawler to index other directories, open the crawler's configuration file at configuration/org.eclipse.smila.connectivity.framework/file.xml. Set the content of the BaseDir element to an absolute path that points to your new directory, and don't forget to save the file:

<Process>
  <BaseDir>/home/johndoe/mydata</BaseDir>
  ...
</Process>

Then start the File System Crawler again and check SMILA.log for the result.
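If you prefer to change BaseDir from a script, for example when setting up several machines, here is a minimal Python sketch using the standard xml.etree.ElementTree module. It matches the element by its local name in case the file declares a default namespace. Note that ElementTree drops XML comments and may rewrite namespace prefixes when it serializes the document, so review the result before overwriting file.xml.

```python
import xml.etree.ElementTree as ET


def local_name(tag):
    """Strip an optional '{namespace}' prefix from an ElementTree tag."""
    return tag.rsplit("}", 1)[-1]


def set_base_dir(xml_text, new_dir):
    """Return xml_text with the content of every <BaseDir> element replaced."""
    root = ET.fromstring(xml_text)
    changed = 0
    for element in root.iter():
        if local_name(element.tag) == "BaseDir":
            element.text = new_dir
            changed += 1
    if changed == 0:
        raise ValueError("no <BaseDir> element found")
    return ET.tostring(root, encoding="unicode")
```

To use it, read file.xml, pass its content together with your absolute directory path, and write the returned text back to the file.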

Note: Currently, only plain text and HTML files are crawled and indexed correctly by SMILA crawlers.
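To see in advance which of your sample files fall outside the supported types, you can approximate the check by file name. This Python sketch relies on the standard mimetypes module, which guesses the type from the file extension; SMILA determines the MIME type on its own, so treat the result as a hint only.

```python
import mimetypes
from pathlib import Path

# Types the note above says are handled correctly.
SUPPORTED_MIME_TYPES = {"text/plain", "text/html"}


def is_supported(filename):
    """Guess the MIME type from the file name and compare it with the note above."""
    mime, _encoding = mimetypes.guess_type(filename)
    return mime in SUPPORTED_MIME_TYPES


def unsupported_files(base_dir):
    """List files below base_dir that will probably not be indexed correctly."""
    return sorted(
        str(p) for p in Path(base_dir).rglob("*")
        if p.is_file() and not is_supported(p.name)
    )
```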

Searching on the indices.

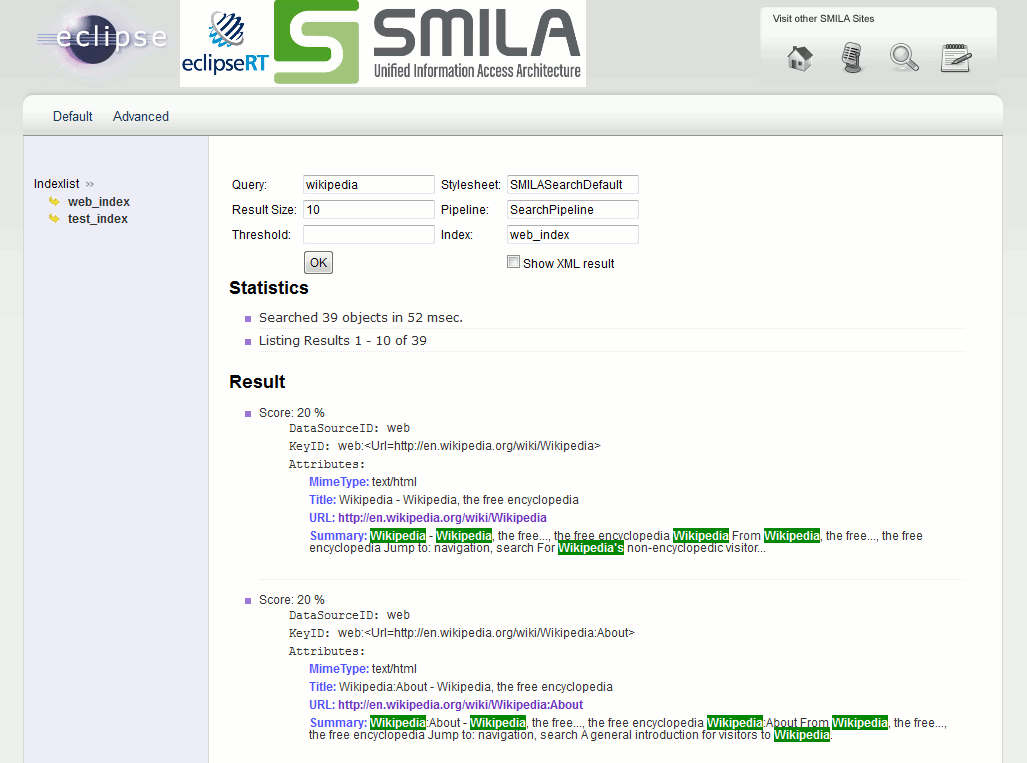

To search the index created by the crawlers, point your browser to http://localhost:8080/SMILA/search. There are currently two stylesheets, which you can select by clicking the respective links in the upper left corner of the header bar: the Default stylesheet shows a reduced search form with text fields like Query, Result Size, and Index Name, suitable for querying the full-text content of the indexed documents. The Advanced stylesheet provides a more detailed search form with text fields for metadata search, for example Path, MimeType, Filename, and other document attributes.
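Before opening the browser, you can check from a script that the search page is being served. The Python sketch below only requests http://localhost:8080/SMILA/search and checks for an HTTP 200 response; it does not submit a query, because the names of the form parameters are not described in this tutorial.

```python
import urllib.request

SEARCH_URL = "http://localhost:8080/SMILA/search"


def search_page_available(url=SEARCH_URL, timeout=5.0):
    """Return True if the search page answers with HTTP 200."""
    try:
        with urllib.request.urlopen(url, timeout=timeout) as response:
            return response.status == 200
    except OSError:
        # URLError, HTTPError and socket timeouts are all OSError subclasses.
        return False
```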

Now, let's try the Default stylesheet and enter our first simple search using a word that you expect to be contained in your dummy files. In this tutorial, we assume that there is a match for the term "data" in the indexed documents. First, select the index on which you want to search from the Indexlist column on the left-hand side. Currently, there should be only one in the list, namely an index called "test_index". Note that the selected index name will appear in the Index Name text field of the search form. Then enter the desired term into the Query text field. And finally, click OK to send your query to SMILA. Your result could be similar to the following:

Now, let's use the Advanced stylesheet and search for the name of one of the files contained in the indexed folder to check whether it was properly indexed. In our example, we are going to search for a file named glossary.html. Click Advanced to switch to the detailed search form, enter the desired file name into the Filename text field, then click OK to submit your search. Your result could be similar to the following:

Configure and run the web crawler.

Now that we know how to start and configure the file system crawler and how to search the indices, configuring and running the web crawler is straightforward.

The configuration file of the web crawler is located in the configuration/org.eclipse.smila.connectivity.framework directory and is named web.xml:

By default, the web crawler is configured to index the URL http://wiki.eclipse.org/SMILA. To change this, open the file in an editor of your choice and set the content of the <Seed> element to the desired web site. Detailed information on the configuration of the web crawler is available on the Web crawler configuration page. For example, the following excerpt uses the Wikipedia main page as the seed:

...
<Seeds FollowLinks="NoFollow">
  <Seed>http://en.wikipedia.org/wiki/Main_Page</Seed>
</Seeds>
<Filters>
  <Filter Type="RegExp" Value=".*action=edit.*" WorkType="Unselect"/>
</Filters>
...
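The Filter element in this configuration excludes (WorkType="Unselect") every URL that matches the regular expression .*action=edit.*, which keeps wiki edit pages out of the index. The following Python sketch reproduces that test so you can try a pattern against sample URLs before restarting a crawl. It assumes that the crawler matches the expression against the whole URL, as Java's String.matches() does; with the leading and trailing .* the result is the same as a simple "contains" check.

```python
import re

# Value of the <Filter> element shown above.
EDIT_FILTER = re.compile(r".*action=edit.*")


def is_unselected(url):
    """True if the Unselect filter above would exclude this URL.

    Assumption: the pattern must match the entire URL (full match).
    """
    return EDIT_FILTER.fullmatch(url) is not None
```

For example, an edit link such as http://en.wikipedia.org/w/index.php?title=Main_Page&action=edit is excluded, while http://en.wikipedia.org/wiki/Main_Page is kept.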

To start the crawling process, save the configuration file, open the Operations tab in JConsole again, type "web" into the text field next to the startCrawl button, and click the button.

Note: The Operations tab in JConsole also provides buttons to stop a crawler, to get the list of active crawlers, and to get the current status of a particular crawling job.

The default limit for downloaded documents is set to 1000 in the web crawler configuration, so it can take a while for the web crawling job to finish. As an example, the following screenshot shows the result after the getActiveCrawlsStatus button has been clicked while the web crawler is running:

If you do not want to wait, you can stop the crawling job manually: type "web" into the text field next to the stopCrawl button, then click this button.

When the web crawler's job is finished, you can search the generated index using the search form, as described in the section Searching on the indices.

Manage CrawlerController using the JMX Client

In addition to managing crawling jobs with JConsole, you can also use the jmxclient application included in the SMILA distribution. jmxclient is a console application that lets you manage crawl jobs and write scripts for batch crawler execution. It is located in the jmxclient directory; use the appropriate run script (run.bat or run.sh) to start it. For example, to start the file system crawler, use the following command:

run crawl file
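Since jmxclient is meant for scripted and batch use, it can also be driven from another program. The following Python sketch starts several crawls one after another by calling the run script with the arguments crawl <data source>, exactly like the command above. It assumes that the web crawler can be started the same way with "crawl web" (the data source ID used in JConsole) and that the script should be executed from within the jmxclient directory.

```python
import os
import subprocess
from pathlib import Path


def jmxclient_command(jmx_dir, *args, windows=None):
    """Build the command line for the jmxclient run script, e.g. ("crawl", "file")."""
    if windows is None:
        windows = os.name == "nt"
    # Use an absolute path so the script is found regardless of the cwd rules.
    script = Path(jmx_dir).resolve() / ("run.bat" if windows else "run.sh")
    return [str(script), *args]


def run_crawls(data_sources, smila_home="."):
    """Start one crawl per data source ID, in order, via jmxclient.

    Stops with CalledProcessError at the first command that exits non-zero.
    """
    jmx_dir = Path(smila_home) / "jmxclient"
    for source in data_sources:
        cmd = jmxclient_command(jmx_dir, "crawl", source)
        print("running:", " ".join(cmd))
        subprocess.run(cmd, cwd=jmx_dir, check=True)
```

For example, run_crawls(["file", "web"], smila_home="/opt/SMILA") would start both crawlers, where /opt/SMILA is a hypothetical installation directory.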

For more information please check the JMX Client documentation.

5 Minutes for changing the workflow.

In the previous sections, all data collected by the crawlers was processed with the same workflow and indexed into the same index, test_index. SMILA can also be configured so that data from different data sources goes through different workflows and is indexed into different indices. This requires more advanced configuration than before, but is still quite simple.

Let's create an additional workflow for web crawler records so that the web crawler data is indexed into a separate index, say "web_index".

Modify Listener rules

The first step is to modify and extend the Listener rules so that web crawler records are processed by their own BPEL workflow. For more information about the Listener, please see the Listener section of the QueueWorker documentation.

Open the Listener configuration file configuration/org.eclipse.smila.connectivity.queue.worker.jms/QueueWorkerListenerConfig.xml and edit the <Condition> tag of the existing ADD Rule so that it skips web crawler data. The result should be as follows:

<Rule Name="ADD Rule" WaitMessageTimeout="10" Threads="4" MaxMessageBlockSize="20">
  <Source BrokerId="broker1" Queue="SMILA.connectivity"/>
  <Condition>Operation='ADD' and NOT(DataSourceID LIKE '%feeds%') and NOT(DataSourceID LIKE '%xmldump%') and NOT (DataSourceID LIKE 'web%')</Condition>
  <Task>
    <Process Workflow="AddPipeline"/>
  </Task>
</Rule>

Now add the following new rule to this file:

<Rule Name="Web ADD Rule" WaitMessageTimeout="10" Threads="2">
  <Source BrokerId="broker1" Queue="SMILA.connectivity"/>
  <Condition>Operation='ADD' and DataSourceID LIKE 'web%'</Condition>
  <Task>
    <Process Workflow="AddWebPipeline"/>
  </Task>
</Rule>

This rule defines that web crawler data will be processed by the AddWebPipeline workflow, which we will create in the next step.
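The two conditions are JMS message selectors. The Python sketch below evaluates them for a given operation and data source ID, which makes it easy to confirm that every ADD record matches at most one of the two rules: the ADD Rule excludes exactly the IDs that the Web ADD Rule accepts. The LIKE translation follows JMS selector semantics (% matches any sequence of characters, _ exactly one character); ESCAPE clauses and the other rules in the file are not modeled.

```python
import re


def like(value, pattern):
    """Evaluate a JMS selector LIKE: '%' = any sequence, '_' = one character."""
    regex = "".join(
        ".*" if ch == "%" else "." if ch == "_" else re.escape(ch) for ch in pattern
    )
    return re.fullmatch(regex, value, flags=re.DOTALL) is not None


def route(operation, data_source_id):
    """Return the workflow chosen by the two ADD rules above, or None."""
    if operation != "ADD":
        return None  # handled by other rules in the file (not modeled here)
    if like(data_source_id, "web%"):
        return "AddWebPipeline"  # "Web ADD Rule"
    if not (like(data_source_id, "%feeds%") or like(data_source_id, "%xmldump%")):
        return "AddPipeline"  # "ADD Rule"
    return None
```

With these rules, route("ADD", "file") gives AddPipeline and route("ADD", "web") gives AddWebPipeline, so web records are no longer processed by the default workflow.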

Create workflow for the BPEL WorkflowProcessor

We need to add the AddWebPipeline workflow to the BPEL WorkflowProcessor. For more information about the BPEL WorkflowProcessor, please check the BPEL WorkflowProcessor documentation. The BPEL WorkflowProcessor configuration files are located in the configuration/org.eclipse.smila.processing.bpel/pipelines directory. There is a file called addpipeline.bpel, which defines the "AddPipeline" process. Let's create the file addwebpipeline.bpel, which will define the "AddWebPipeline" process, and put the following code into it:

<?xml version="1.0" encoding="utf-8" ?>
<process name="AddWebPipeline" targetNamespace="http://www.eclipse.org/smila/processor"
    xmlns="http://docs.oasis-open.org/wsbpel/2.0/process/executable"
    xmlns:xsd="http://www.w3.org/2001/XMLSchema"
    xmlns:proc="http://www.eclipse.org/smila/processor"
    xmlns:rec="http://www.eclipse.org/smila/record">

  <import location="processor.wsdl" namespace="http://www.eclipse.org/smila/processor"
      importType="http://schemas.xmlsoap.org/wsdl/" />

  <partnerLinks>
    <partnerLink name="Pipeline" partnerLinkType="proc:ProcessorPartnerLinkType" myRole="service" />
  </partnerLinks>

  <extensions>
    <extension namespace="http://www.eclipse.org/smila/processor" mustUnderstand="no" />
  </extensions>

  <variables>
    <variable name="request" messageType="proc:ProcessorMessage" />
  </variables>

  <sequence>
    <receive name="start" partnerLink="Pipeline" portType="proc:ProcessorPortType"
        operation="process" variable="request" createInstance="yes" />

    <!-- only process text based content, skip everything else -->
    <if name="conditionIsText">
      <condition>starts-with($request.records/rec:Record[1]/rec:Val[@key="MimeType"],"text/")</condition>
      <sequence name="processTextBasedContent">

        <!-- extract txt from html files -->
        <if name="conditionIsHtml">
          <condition>starts-with($request.records/rec:Record[1]/rec:Val[@key="MimeType"],"text/html")
            or starts-with($request.records/rec:Record[1]/rec:Val[@key="MimeType"],"text/xml")
          </condition>
          <extensionActivity>
            <proc:invokePipelet name="invokeHtml2Txt">
              <proc:pipelet class="org.eclipse.smila.processing.pipelets.HtmlToTextPipelet" />
              <proc:variables input="request" output="request" />
              <proc:configuration>
                <rec:Val key="inputType">ATTACHMENT</rec:Val>
                <rec:Val key="outputType">ATTACHMENT</rec:Val>
                <rec:Val key="inputName">Content</rec:Val>
                <rec:Val key="outputName">Content</rec:Val>
                <rec:Val key="meta:title">Title</rec:Val>
              </proc:configuration>
            </proc:invokePipelet>
          </extensionActivity>
        </if>

        <extensionActivity>
          <proc:invokePipelet name="invokeLucenePipelet">
            <proc:pipelet class="org.eclipse.smila.lucene.pipelets.LuceneIndexPipelet" />
            <proc:variables input="request" output="request" />
            <proc:configuration>
              <rec:Map key="_indexing">
                <rec:Val key="indexname">web_index</rec:Val>
                <rec:Val key="executionMode">ADD</rec:Val>
              </rec:Map>
            </proc:configuration>
          </proc:invokePipelet>
        </extensionActivity>
      </sequence>
    </if>

    <reply name="end" partnerLink="Pipeline" portType="proc:ProcessorPortType"
        operation="process" variable="request" />
    <exit />
  </sequence>
</process>

Note that the code above uses the index name "web_index" in the configuration of the LuceneIndexPipelet:

<proc:configuration>
  <rec:Map key="_indexing">
    <rec:Val key="indexname">web_index</rec:Val>
    <rec:Val key="executionMode">ADD</rec:Val>
  </rec:Map>
</proc:configuration>
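The two if conditions in the pipeline decide what happens to each record based on its MimeType attribute. The following Python sketch restates that intent, as described by the comments in the BPEL listing: only text/* records are processed, HTML and XML content is converted to plain text first, and every text record is then passed to the Lucene pipelet. The step names in the returned list are labels for illustration, not SMILA identifiers.

```python
def pipeline_steps(mime_type):
    """List the processing steps a record would go through in AddWebPipeline,
    mirroring the starts-with() conditions on the MimeType attribute."""
    # XPath starts-with() on a missing attribute is false, so None is skipped.
    if mime_type is None or not mime_type.startswith("text/"):
        return []  # "only process text based content, skip everything else"
    steps = []
    if mime_type.startswith(("text/html", "text/xml")):
        steps.append("HtmlToTextPipelet")  # "extract txt from html files"
    steps.append("LuceneIndexPipelet (web_index)")
    return steps
```

For instance, a record with MimeType text/plain is indexed directly, while application/pdf content is skipped, which matches the earlier note that only plain text and HTML files are handled correctly.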

We need to add our pipeline description to the deploy.xml file placed in the same directory. Add the following code to the end of deploy.xml before the closing </deploy> tag:

<process name="proc:AddWebPipeline">
  <in-memory>true</in-memory>
  <provide partnerLink="Pipeline">
    <service name="proc:AddWebPipeline" port="ProcessorPort" />
  </provide>
</process>
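This step, and the "add before the closing tag" steps for DataDictionary.xml and Mappings.xml below, can be automated with a small text-based helper. The Python sketch inserts a snippet just before the last occurrence of the closing tag, skips the insertion if the snippet is already present, and keeps a .bak copy of the file. It deliberately works on plain text rather than parsing XML, so the formatting and comments of the original file stay untouched.

```python
from pathlib import Path


def insert_before_closing_tag(text, closing_tag, snippet):
    """Insert snippet just before the last occurrence of closing_tag.

    Returns text unchanged if the snippet is already present.
    """
    if snippet.strip() in text:
        return text
    position = text.rfind(closing_tag)
    if position == -1:
        raise ValueError(f"{closing_tag} not found")
    return text[:position] + snippet.rstrip() + "\n" + text[position:]


def add_to_file(path, closing_tag, snippet):
    """Apply insert_before_closing_tag to a file in place, keeping a .bak copy."""
    file = Path(path)
    original = file.read_text(encoding="utf-8")
    file.with_name(file.name + ".bak").write_text(original, encoding="utf-8")
    file.write_text(
        insert_before_closing_tag(original, closing_tag, snippet), encoding="utf-8"
    )
```

For example, add_to_file("configuration/org.eclipse.smila.processing.bpel/pipelines/deploy.xml", "</deploy>", snippet), where snippet holds the <process> element above.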

Now we need to add our "web_index" to the LuceneIndexService configuration.

Configure LuceneIndexService

For more information about the LuceneIndexService, please see the LuceneIndexService documentation.

Let's configure our "web_index" index structure and search template. Add the following code to the end of configuration/org.eclipse.smila.search.datadictionary/DataDictionary.xml file before the closing </AnyFinderDataDictionary> tag:

<Index Name="web_index">
  <Connection xmlns="http://www.anyfinder.de/DataDictionary/Connection" MaxConnections="5"/>
  <IndexStructure xmlns="http://www.anyfinder.de/IndexStructure" Name="web_index">
    <Analyzer ClassName="org.apache.lucene.analysis.standard.StandardAnalyzer"/>
    <IndexField FieldNo="8" IndexValue="true" Name="MimeType" StoreText="true" Tokenize="true" Type="Text"/>
    <IndexField FieldNo="7" IndexValue="true" Name="Size" StoreText="true" Tokenize="true" Type="Text"/>
    <IndexField FieldNo="6" IndexValue="true" Name="Extension" StoreText="true" Tokenize="true" Type="Text"/>
    <IndexField FieldNo="5" IndexValue="true" Name="Title" StoreText="true" Tokenize="true" Type="Text"/>
    <IndexField FieldNo="4" IndexValue="true" Name="Url" StoreText="true" Tokenize="false" Type="Text">
      <Analyzer ClassName="org.apache.lucene.analysis.WhitespaceAnalyzer"/>
    </IndexField>
    <IndexField FieldNo="3" IndexValue="true" Name="LastModifiedDate" StoreText="true" Tokenize="false" Type="Text"/>
    <IndexField FieldNo="2" IndexValue="true" Name="Path" StoreText="true" Tokenize="true" Type="Text"/>
    <IndexField FieldNo="1" IndexValue="true" Name="Filename" StoreText="true" Tokenize="true" Type="Text"/>
    <IndexField FieldNo="0" IndexValue="true" Name="Content" StoreText="true" Tokenize="true" Type="Text"/>
  </IndexStructure>
  <Configuration xmlns="http://www.anyfinder.de/DataDictionary/Configuration" xmlns:xsi="http://www.w3.org/2001/XMLSchema-instance" xsi:schemaLocation="http://www.anyfinder.de/DataDictionary/Configuration ../xml/DataDictionaryConfiguration.xsd">
    <DefaultConfig>
      <Field FieldNo="8">
        <FieldConfig Constraint="optional" Weight="1" xsi:type="FTText">
          <Parameter xmlns="http://www.anyfinder.de/Search/TextField" Operator="OR" Tolerance="exact"/>
        </FieldConfig>
      </Field>
      <Field FieldNo="7">
        <FieldConfig Constraint="optional" Weight="1" xsi:type="FTText">
          <Parameter xmlns="http://www.anyfinder.de/Search/TextField" Operator="OR" Tolerance="exact"/>
        </FieldConfig>
      </Field>
      <Field FieldNo="6">
        <FieldConfig Constraint="optional" Weight="1" xsi:type="FTText">
          <Parameter xmlns="http://www.anyfinder.de/Search/TextField" Operator="OR" Tolerance="exact"/>
        </FieldConfig>
      </Field>
      <Field FieldNo="5">
        <FieldConfig Constraint="optional" Weight="1" xsi:type="FTText">
          <Parameter xmlns="http://www.anyfinder.de/Search/TextField" Operator="OR" Tolerance="exact"/>
        </FieldConfig>
      </Field>
      <Field FieldNo="4">
        <FieldConfig Constraint="optional" Weight="1" xsi:type="FTText">
          <Parameter xmlns="http://www.anyfinder.de/Search/TextField" Operator="OR" Tolerance="exact"/>
        </FieldConfig>
      </Field>
      <Field FieldNo="3">
        <FieldConfig Constraint="optional" Weight="1" xsi:type="FTText">
          <Parameter xmlns="http://www.anyfinder.de/Search/TextField" Operator="OR" Tolerance="exact"/>
        </FieldConfig>
      </Field>
      <Field FieldNo="2">
        <FieldConfig Constraint="optional" Weight="1" xsi:type="FTText">
          <Parameter xmlns="http://www.anyfinder.de/Search/TextField" Operator="OR" Tolerance="exact"/>
        </FieldConfig>
      </Field>
      <Field FieldNo="1">
        <FieldConfig Constraint="optional" Weight="1" xsi:type="FTText">
          <Parameter xmlns="http://www.anyfinder.de/Search/TextField" Operator="OR" Tolerance="exact"/>
        </FieldConfig>
      </Field>
      <Field FieldNo="0">
        <FieldConfig Constraint="required" Weight="1" xsi:type="FTText">
          <NodeTransformer xmlns="http://www.anyfinder.de/Search/ParameterObjects" Name="urn:ExtendedNodeTransformer">
            <ParameterSet xmlns="http://www.brox.de/ParameterSet"/>
          </NodeTransformer>
          <Parameter xmlns="http://www.anyfinder.de/Search/TextField" Operator="AND" Tolerance="exact"/>
        </FieldConfig>
      </Field>
    </DefaultConfig>
  </Configuration>
</Index>
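The definition has two halves that are linked only by FieldNo: IndexStructure says how each field is stored and analyzed, and DefaultConfig says how it is used at search time. As a reading aid, here is a minimal Python sketch (standard library only) that joins the two halves by field number. It is not part of SMILA; the function name summarize_index is hypothetical, and the namespace URIs are copied from the fragment above.

```python
import xml.etree.ElementTree as ET

# Namespace URIs copied from the web_index definition above.
IS_NS = "{http://www.anyfinder.de/IndexStructure}"
CFG_NS = "{http://www.anyfinder.de/DataDictionary/Configuration}"
TEXT_NS = "{http://www.anyfinder.de/Search/TextField}"


def summarize_index(index_xml):
    """Join each IndexField with its DefaultConfig <Field> entry by FieldNo."""
    root = ET.fromstring(index_xml)
    summary = {}

    def entry(no):
        # One record per field number, filled from both halves of the definition.
        return summary.setdefault(
            no, {"name": None, "tokenize": None, "constraint": None, "operator": None}
        )

    # IndexStructure: how each field is stored and analyzed.
    for field in root.iter(IS_NS + "IndexField"):
        info = entry(int(field.get("FieldNo")))
        info["name"] = field.get("Name")
        info["tokenize"] = field.get("Tokenize") == "true"

    # DefaultConfig: how each field is used at search time.
    for field in root.iter(CFG_NS + "Field"):
        info = entry(int(field.get("FieldNo")))
        config = field.find(CFG_NS + "FieldConfig")
        if config is None:
            continue
        info["constraint"] = config.get("Constraint")
        param = config.find(TEXT_NS + "Parameter")
        if param is not None:
            info["operator"] = param.get("Operator")
    return summary


if __name__ == "__main__":
    # A trimmed copy of the definition above: only the Url and Content fields.
    sample = """<Index Name="web_index">
  <IndexStructure xmlns="http://www.anyfinder.de/IndexStructure" Name="web_index">
    <IndexField FieldNo="4" Name="Url" Tokenize="false" Type="Text"/>
    <IndexField FieldNo="0" Name="Content" Tokenize="true" Type="Text"/>
  </IndexStructure>
  <Configuration xmlns="http://www.anyfinder.de/DataDictionary/Configuration">
    <DefaultConfig>
      <Field FieldNo="4"><FieldConfig Constraint="optional" Weight="1">
        <Parameter xmlns="http://www.anyfinder.de/Search/TextField" Operator="OR" Tolerance="exact"/>
      </FieldConfig></Field>
      <Field FieldNo="0"><FieldConfig Constraint="required" Weight="1">
        <Parameter xmlns="http://www.anyfinder.de/Search/TextField" Operator="AND" Tolerance="exact"/>
      </FieldConfig></Field>
    </DefaultConfig>
  </Configuration>
</Index>"""
    for no, info in sorted(summarize_index(sample).items()):
        print(no, info)
```

Run against the full web_index definition, this makes it easy to see that Content (FieldNo 0) is the only required field and the only one searched with the AND operator.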

Now we need to map the attribute and attachment names to the Lucene "FieldNo" values defined in DataDictionary.xml. Open the file configuration/org.eclipse.smila.lucene/Mappings.xml and add the following code at the end of the file, just before the closing </Mappings> tag:

<Mapping indexName="web_index">
  <Attributes>
    <Attribute name="Filename" fieldNo="1" />
    <Attribute name="Path" fieldNo="2" />
    <Attribute name="LastModifiedDate" fieldNo="3" />
    <Attribute name="Url" fieldNo="4" />
    <Attribute name="Title" fieldNo="5" />
    <Attribute name="Extension" fieldNo="6" />
    <Attribute name="Size" fieldNo="7" />
    <Attribute name="MimeType" fieldNo="8" />
  </Attributes>
  <Attachments>
    <Attachment name="Content" fieldNo="0" />
  </Attachments>
</Mapping>
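Because the mapping refers to the index only through field numbers, a typo in a fieldNo is easy to miss. The following Python sketch (standard library only; check_mapping is a hypothetical helper, not part of SMILA) cross-checks a <Mapping> element against the matching <Index> element. It reports field numbers that are not declared, numbers mapped twice, declared fields left unmapped, and names that differ from the declared field name. Treating a name difference as something to review is an assumption based on this tutorial, where the names are identical.

```python
import xml.etree.ElementTree as ET

IS_NS = "{http://www.anyfinder.de/IndexStructure}"


def check_mapping(index_xml, mapping_xml):
    """Compare a <Mapping> element with an <Index> element and return a list of findings."""
    index = ET.fromstring(index_xml)
    mapping = ET.fromstring(mapping_xml)
    findings = []

    if index.get("Name") != mapping.get("indexName"):
        findings.append(
            f"index name mismatch: {index.get('Name')!r} vs {mapping.get('indexName')!r}"
        )

    # FieldNo -> field name, as declared in the IndexStructure.
    declared = {
        int(f.get("FieldNo")): f.get("Name") for f in index.iter(IS_NS + "IndexField")
    }

    used = set()
    # Assumes <Attribute>/<Attachment> carry no namespace, as in the fragment above.
    for tag in ("Attribute", "Attachment"):
        for item in mapping.iter(tag):
            no, name = int(item.get("fieldNo")), item.get("name")
            if no in used:
                findings.append(f"fieldNo {no} is mapped more than once")
            used.add(no)
            if no not in declared:
                findings.append(f"{tag} {name}: fieldNo {no} is not declared in the index")
            elif declared[no] != name:
                # The tutorial uses identical names; a difference is worth a second look.
                findings.append(f"{tag} {name}: fieldNo {no} is declared as {declared[no]}")

    # Declared fields with no mapping presumably stay empty in the index.
    for no in sorted(set(declared) - used):
        findings.append(f"fieldNo {no} ({declared[no]}) has no mapping")
    return findings
```

Pass it the text of the web_index <Index> definition and of the <Mapping> above; an empty list means the sketch found no inconsistencies between the two.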

Put it all together

OK, we have now finished configuring SMILA to use separate workflows for file system and web crawling, and to index the data from these crawlers into different indices. Here is what we have done so far:

- Modified the Listener rules in order to use different workflows for web and file system crawling.

- Created a new BPEL workflow for the web crawler data.

- Added the web_index index to the Lucene configuration (DataDictionary.xml and Mappings.xml).

Now we can start SMILA again and observe what happens when the web crawler is started.

It is very important to shut down the SMILA engine and restart it afterwards, because modified configurations are loaded only on startup.
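Since the configuration is read only at startup, a mistake in one of the edited XML files will surface only after the restart. A minimal pre-restart check, sketched here in Python with the standard library, parses the edited Lucene configuration files and reports any that are missing or not well-formed. It does not validate the content against SMILA's schemas; the helper name check_well_formed is hypothetical, and the location of DataDictionary.xml next to Mappings.xml is an assumption. Other XML files edited earlier, such as the BPEL workflow, can be appended to EDITED_FILES in the same way.

```python
import sys
import xml.etree.ElementTree as ET
from pathlib import Path

# Files edited in this part of the tutorial, relative to the SMILA application folder.
# DataDictionary.xml is assumed to live next to Mappings.xml; adjust if yours differs.
EDITED_FILES = [
    "configuration/org.eclipse.smila.lucene/DataDictionary.xml",
    "configuration/org.eclipse.smila.lucene/Mappings.xml",
]


def check_well_formed(smila_home, files=EDITED_FILES):
    """Return {relative path: problem} for files that are missing or not well-formed XML."""
    problems = {}
    for rel in files:
        try:
            ET.parse(Path(smila_home) / rel)  # raises on missing files or syntax errors
        except FileNotFoundError:
            problems[rel] = "file not found"
        except ET.ParseError as exc:
            problems[rel] = f"not well-formed: {exc}"
    return problems


if __name__ == "__main__":
    # Usage: python check_config.py <path to the SMILA application folder>
    home = sys.argv[1] if len(sys.argv) > 1 else "."
    problems = check_well_formed(home)
    for rel, msg in problems.items():
        print(f"{rel}: {msg}")
    print("OK" if not problems else f"{len(problems)} file(s) need attention")
```

The ParseError message includes the line and column of the first syntax error, which is usually enough to find an unclosed tag left over from copying the fragments above.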

Now you can search the new index "web_index" using your browser:

Configuration overview

SMILA configuration files are located in the configuration directory of the SMILA application. The following figure shows the configuration files relevant to this tutorial, in relation to the SMILA components and the data lifecycle. SMILA component names are shown in black; directories containing configuration files, and filenames, are shown in blue.