|

|

| (38 intermediate revisions by the same user not shown) |

| Line 1: |

Line 1: |

| − | NOTE: THIS DOCUMENT IS A DRAFT CURRENTLY UNDER DEVELOPMENT. THERE MAY BE SUBSTANTIAL CHANGES IN THE NEAR FUTURE.

| |

| − |

| |

| | == Overview == | | == Overview == |

| | | | |

| Line 21: |

Line 19: |

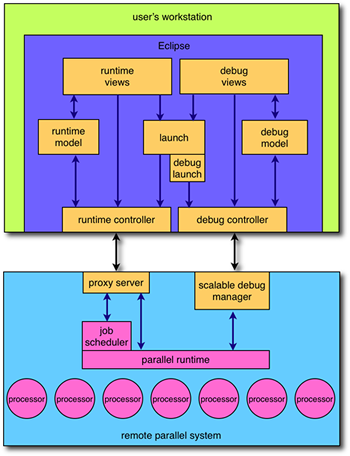

| | The architecture can be roughly divided into three major components: the ''runtime platform'', the ''debug platform'', and ''tool integration services''. These components are defined in more detail in the following sections. | | The architecture can be roughly divided into three major components: the ''runtime platform'', the ''debug platform'', and ''tool integration services''. These components are defined in more detail in the following sections. |

| | | | |

| − | == Runtime Platform == | + | == [[PTP/designs/2.x/runtime_platform | Runtime Platform]] == |

| − | | + | |

| − | The runtime platform comprises the elements that launch, control, and monitor parallel applications. In the architecture diagram, it consists of the following elements:

| + | |

| − | | + | |

| − | * runtime model

| + | |

| − | * runtime views

| + | |

| − | * runtime controller

| + | |

| − | * proxy server

| + | |

| − | * launch

| + | |

| − | | + | |

| − | PTP follows a model-view-controller (MVC) design pattern. The heart of the architecture is the ''runtime model'', which provides an abstract representation of the parallel computer system and the running applications. ''Runtime views'' provide the user with visual feedback of the state of the model, and provide the user interface elements that allow jobs to be launched and controlled. The ''runtime controller'' is responsible for communicating with the underlying parallel computer system, translating user commands into actions, and keeping the model updated. All of these elements are Eclipse plugins.

| + | |

| − | | + | |

| − | The ''proxy server'' is a small program that typically runs on the remote system front-end, and is responsible for performing local actions in response to commands sent by the proxy client. The results of the actions are returned to the client in the form of events. The proxy server is usually written in C.

| + | |

| − | | + | |

| − | The final element is ''launch'', which is responsible for managing Eclipse launch configurations, and translating these into the appropriate actions required to initiate the launch of an application on the remote parallel machine.

| + | |

| − | | + | |

| − | Each of these elements is described in more detail below.

| + | |

| − | | + | |

| − | === Runtime Model ===

| + | |

| − | | + | |

| − | The PTP runtime model is a hierarchical, attributed parallel system model that represents the state of a remote parallel system at any particular time. It is the ''model'' part of the MVC design pattern. The model is ''attributed'' because each model element can contain an arbitrary list of attributes that represent characteristics of the real object. For example, an element representing a compute node could contain attributes describing the hardware configuration of the node. The structure of the runtime model is shown in the diagram below.

| + | |

| − | | + | |

| − | [[Image:model20.png|center]]

| + | |

| − | | + | |

| − | Each of the model elements in the hierarchy is a logical representation of a system component. Particular operations and views provided by PTP are associated with each type of model element. Since machine hardware and architectures can vary widely, the model does not attempt to define any particular physical arrangement. It is left to the implementor to decide how the model elements map to the physical machine characteristics.

| + | |

| − | | + | |

| − | ==== Elements ====

| + | |

| − | | + | |

| − | The only model element that is provided when PTP is first initialized is the universe. Users must manually create resource manager elements and associate each with a real host and resource management system. The remainder of the model hierarchy is then populated and updated by the ''resource manager'' implementation.

| + | |

| − | | + | |

| − | ===== Universe =====

| + | |

| − | | + | |

| − | This is the top-level model element, and does not correspond to a physical object. It is used as the entry point into the model.

| + | |

| − | | + | |

| − | ===== Resource Manager =====

| + | |

| − | | + | |

| − | This model element corresponds to an instance of a resource management system on a remote computer system. Since a computer system may provide more than one resource management system, there may be multiple resource managers associated with a particular physical computer system. For example, host A may allow interactive jobs to be run directly using the MPICH2 runtime system, or batched via the LSF job scheduler. In this case, there would be two logical resource managers: an MPICH2 resource manager running on host A, and an LSF resource manager running on host A.

| + | |

| − | | + | |

| − | Resource managers have an attribute that reflects the current state of the resource manager. The possible states and their meanings are:

| + | |

| − | | + | |

| − | ; '''STOPPED''' : The resource manager is not available for status reporting or job submission. Resource manager configuration settings can be changed.

| + | |

| − | | + | |

| − | ; '''STARTING''' : The resource manager proxy server is being started on the (remote) parallel machine.

| + | |

| − | | + | |

| − | ; '''STARTED''' : The resource manager is operational and jobs can be submitted.

| + | |

| − | | + | |

| − | ; '''STOPPING''' : The resource manager is in the process of shutting down. Monitoring and job submission are disabled.

| + | |

| − | | + | |

| − | ; '''ERROR''' : An error has occurred while starting or communicating with the resource manager.

| + | |

| − | | + | |

| − | The resource manager implementation is responsible for ensuring that the correct state transitions are observed. '''Failure to observe the correct state transitions can lead to undefined behavior in the user interface.''' The resource manager state transitions are shown below.

| + | |

| − | | + | |

| − | [[Image:rm_state.png|center]]
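The state meanings listed above can be sketched as a small Java guard. This is only an illustration: <tt>RmState</tt>, <tt>JobSubmitter</tt>, and their methods are hypothetical names introduced here, not PTP types, and the sketch encodes only the one rule the text states directly (jobs can be submitted only in the STARTED state). It does not attempt to reproduce the transition diagram.

```java
/** Hypothetical mirror of the resource manager states listed above; not PTP's own type. */
enum RmState {
    STOPPED, STARTING, STARTED, STOPPING, ERROR;

    /** Per the state descriptions, jobs can be submitted only when STARTED. */
    boolean acceptsJobs() {
        return this == STARTED;
    }
}

/** Hypothetical client-side guard that refuses submissions in any other state. */
final class JobSubmitter {
    private RmState state = RmState.STOPPED;
    private int submitted = 0;

    void setState(RmState newState) {
        state = newState;
    }

    int submittedCount() {
        return submitted;
    }

    void submit(String jobName) {
        if (!state.acceptsJobs()) {
            throw new IllegalStateException(
                "cannot submit " + jobName + " while resource manager is " + state);
        }
        submitted++; // a real implementation would forward the job to the proxy server here
    }
}
```

Checking the state before acting, rather than relying on the user interface to disable controls, keeps a misbehaving implementation from submitting work to a resource manager that is still starting or already shutting down.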

| + | |

| − | | + | |

| − | ===== Machine =====

| + | |

| − | | + | |

| − | This model element provides a grouping of computing resources, and typically corresponds to the host from which the resource management system is accessed, such as the machine where a user would normally log in. Many configurations provide such a front-end machine.

| + | |

| − | | + | |

| − | Machine elements have a state attribute that can be used by the resource manager implementation to provide visual feedback to the user. Each state is represented by a different icon in the user interface. Allowable states and suggested meanings are as follows:

| + | |

| − | | + | |

| − | ; '''UP''' : Machine is available for running user jobs

| + | |

| − | | + | |

| − | ; '''DOWN''' : Machine is physically powered down, or otherwise unavailable to run user jobs

| + | |

| − | | + | |

| − | ; '''ALERT''' : An alert condition has been raised. This might be used to indicate the machine is shutting down, or there is some other unusual condition.

| + | |

| − | | + | |

| − | ; '''ERROR''' : The machine has failed.

| + | |

| − | | + | |

| − | ; '''UNKNOWN''' : The state of the machine is unknown.

| + | |

| − | | + | |

| − | It is recommended that resource managers use these states when displaying machines in the user interface.

| + | |

| − | | + | |

| − | ===== Queue =====

| + | |

| − | | + | |

| − | This model element represents a logical queue of jobs waiting to be executed on a machine. There will typically be a one-to-one mapping between a queue model element and a resource management system queue. Systems that don't have the notion of queues should map all jobs on to a single ''default'' queue.

| + | |

| − | | + | |

| − | Queues have a state attribute that can be used by the resource manager to indicate allowable actions for the queue. Each state will display a different icon in the user interface. The following states and suggested meanings are available:

| + | |

| − | | + | |

| − | ; '''NORMAL''' : The queue is available for job submissions

| + | |

| − | | + | |

| − | ; '''COLLECTING''' : The queue is available for job submissions, but jobs will not be dispatched from the queue.

| + | |

| − | | + | |

| − | ; '''DRAINING''' : The queue will not accept new job submissions. Existing jobs will continue to be dispatched

| + | |

| − | | + | |

| − | ; '''STOPPED''' : The queue will not accept new job submissions, and any existing jobs will not be dispatched.

| + | |

| − | | + | |

| − | It is recommended that resource manager implementations use these states.
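The four queue states above differ along two independent axes: whether the queue accepts new submissions, and whether it dispatches queued jobs. A minimal Java sketch of that reading follows; <tt>QueueState</tt> and its accessor names are hypothetical and chosen for illustration, not taken from the PTP sources.

```java
/** Hypothetical encoding of the queue states as two independent flags. */
enum QueueState {
    NORMAL(true, true),       // accepts submissions, dispatches jobs
    COLLECTING(true, false),  // accepts submissions, holds jobs in the queue
    DRAINING(false, true),    // rejects submissions, keeps dispatching existing jobs
    STOPPED(false, false);    // rejects submissions, dispatches nothing

    private final boolean acceptsSubmissions;
    private final boolean dispatchesJobs;

    QueueState(boolean acceptsSubmissions, boolean dispatchesJobs) {
        this.acceptsSubmissions = acceptsSubmissions;
        this.dispatchesJobs = dispatchesJobs;
    }

    boolean acceptsSubmissions() {
        return acceptsSubmissions;
    }

    boolean dispatchesJobs() {
        return dispatchesJobs;
    }
}
```

A resource manager that maps a scheduler's native queue status onto these states only needs to answer the two questions separately, which is usually easier than matching status strings one by one.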

| + | |

| − | | + | |

| − | ===== Node =====

| + | |

| − | | + | |

| − | This model element represents some form of computational resource that is responsible for executing an application program, but where it is not necessary to provide any finer level of granularity. For example, a cluster node with multiple processors would normally be represented as a node element. For an SMP machine, each physical processor would be represented as a node element.

| + | |

| − | | + | |

| − | Nodes have two state attributes that can be used by the resource manager to indicate node availability to the user. Each combination of attributes is represented by a different icon in the user interface.

| + | |

| − | | + | |

| − | The first node state attribute represents the gross state of the node. Recommended meanings are as follows:

| + | |

| − | | + | |

| − | ; '''UP''' : The node is operating normally.

| + | |

| − | | + | |

| − | ; '''DOWN''' : The node has been shut down or disabled.

| + | |

| − | | + | |

| − | ; '''ERROR''' : An error has occurred and the node is no longer available

| + | |

| − | | + | |

| − | ; '''UNKNOWN''' : The node is in an unknown state

| + | |

| − | | + | |

| − | The second node state attribute (the "extended node state") represents additional information about the node. The recommended states and meanings are as follows:

| + | |

| − | | + | |

| − | ; '''USER_ALLOC_EXCL''' : The node is allocated to the user for exclusive use

| + | |

| − | | + | |

| − | ; '''USER_ALLOC_SHARED''' : The node is allocated to the user, but is available for shared use

| + | |

| − | | + | |

| − | ; '''OTHER_ALLOC_EXCL''' : The node is allocated to another user for exclusive use

| + | |

| − | | + | |

| − | ; '''OTHER_ALLOC_SHARED''' : The node is allocated to another user, but is available for shared use

| + | |

| − | | + | |

| − | ; '''RUNNING_PROCESS''' : A process is running on the node (internally generated state)

| + | |

| − |

| + | |

| − | ; '''EXITED_PROCESS''' : A process that was running on the node has now exited (internally generated state)

| + | |

| − | | + | |

| − | ; '''NONE''' : The node has no extended node state

| + | |

| − | | + | |

| − | ===== Job =====

| + | |

| − | | + | |

| − | This model element represents an instance of an application that is to be executed. A job typically comprises one or more processes (execution contexts). Jobs also have a state that reflects the progress of the job from submission to termination. Job states are as follows:

| + | |

| − | | + | |

| − | ; '''PENDING''' : The job has been submitted for execution, but is queued waiting for required resources to become available

| + | |

| − | | + | |

| − | ; '''STARTED''' : The required resources are available and the job has been scheduled for execution

| + | |

| − | | + | |

| − | ; '''RUNNING''' : The process(es) associated with the job have been started

| + | |

| − | | + | |

| − | ; '''TERMINATED''' : The process(es) associated with the job have exited

| + | |

| − | | + | |

| − | ; '''SUSPENDED''' : Execution of the job has been temporarily suspended

| + | |

| − | | + | |

| − | ; '''ERROR''' : The job failed to start

| + | |

| − | | + | |

| − | ; '''UNKNOWN''' : The job is in an unknown state

| + | |

| − | | + | |

| − | It is the responsibility of the proxy server to ensure that the correct state transitions are followed. '''Failure to follow the correct state transitions can result in undefined behavior in the user interface.''' Required state transitions are shown in the following diagram.

| + | |

| − | | + | |

| − | [[Image:job_state.png|center]]

| + | |

| − | | + | |

| − | ===== Process =====

| + | |

| − | | + | |

| − | This model element represents an execution unit using some computational resource. There is an implicit one-to-many relationship between a node and a process. For example, a process would be used to represent a Unix process. Finer granularity (i.e. threads of execution) is managed by the debug model.

| + | |

| − | | + | |

| − | Processes have a state that reflects the progress of the process from execution to termination. Process states are as follows:

| + | |

| − | | + | |

| − | ; '''STARTING''' : The process is being launched by the operating system

| + | |

| − | | + | |

| − | ; '''RUNNING''' : The process has begun executing (including library code execution)

| + | |

| − | | + | |

| − | ; '''EXITED''' : The process has finished executing

| + | |

| − | | + | |

| − | ; '''EXITED_SIGNALLED''' : The process finished executing as the result of receiving a signal

| + | |

| − | | + | |

| − | ; '''SUSPENDED''' : The process has been suspended by a debugger

| + | |

| − | | + | |

| − | ; '''ERROR''' : The process failed to execute

| + | |

| − | | + | |

| − | ; '''UNKNOWN''' : The process is in an unknown state

| + | |

| − | | + | |

| − | It is the responsibility of the proxy server to ensure that the correct state transitions are followed. '''Failure to follow the correct state transitions can result in undefined behavior in the user interface.''' Required state transitions are shown in the following diagram.

| + | |

| − | | + | |

| − | [[Image:process_state.png|center]]

| + | |

| − | | + | |

| − | ==== Attributes ====

| + | |

| − | | + | |

| − | Each element in the model can contain an arbitrary number of attributes. An attribute is used to provide additional information about the model element. An attribute consists of a ''key'' and a ''value''. The key is a unique ID that refers to an ''attribute definition'', which can be thought of as the type of the attribute. The value is the value of the attribute.

| + | |

| − | | + | |

| − | An attribute definition contains meta-data about the attribute, and is primarily used for data validation and displaying attributes in the user interface. This meta-data includes:

| + | |

| − | | + | |

| − | ; ID : The attribute definition ID.

| + | |

| − | | + | |

| − | ; type : The type of the attribute. Currently supported types are ARRAY, BOOLEAN, DATE, DOUBLE, ENUMERATED, INTEGER, and STRING.

| + | |

| − | | + | |

| − | ; name : The short name of the attribute. This is the name that is displayed in UI property views.

| + | |

| − | | + | |

| − | ; description : A description of the attribute that is displayed when more information about the attribute is requested (e.g. tooltip popups.)

| + | |

| − | | + | |

| − | ; default : The default value of the attribute. This is the value assigned to the attribute when it is first created.

| + | |

| − | | + | |

| − | ; display : A flag indicating if this attribute is for display purposes. This provides a hint to the UI in order to determine if the attribute should be displayed.
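The relationship between an attribute and its definition can be sketched in Java as follows. All names here (<tt>AttrType</tt>, <tt>AttrDefinition</tt>, <tt>Attr</tt>) are hypothetical, and storing every value as a string is a simplifying assumption; the sketch only mirrors the meta-data fields listed above and the rule that a new attribute starts with the definition's default value.

```java
import java.util.Objects;

/** The attribute types named in the text. */
enum AttrType { ARRAY, BOOLEAN, DATE, DOUBLE, ENUMERATED, INTEGER, STRING }

/** Hypothetical attribute definition holding the meta-data fields listed above. */
final class AttrDefinition {
    private final String id;
    private final AttrType type;
    private final String name;
    private final String description;
    private final String defaultValue; // assumption: values kept as strings for simplicity
    private final boolean display;

    AttrDefinition(String id, AttrType type, String name, String description,
                   String defaultValue, boolean display) {
        this.id = Objects.requireNonNull(id);
        this.type = Objects.requireNonNull(type);
        this.name = name;
        this.description = description;
        this.defaultValue = defaultValue;
        this.display = display;
    }

    String getId() { return id; }
    AttrType getType() { return type; }
    String getName() { return name; }
    String getDescription() { return description; }
    boolean isDisplayable() { return display; }

    /** A newly created attribute starts out with the definition's default value. */
    Attr create() {
        return new Attr(this, defaultValue);
    }
}

/** Hypothetical attribute: a value paired with the definition that types it. */
final class Attr {
    private final AttrDefinition definition;
    private String value;

    Attr(AttrDefinition definition, String value) {
        this.definition = definition;
        this.value = value;
    }

    AttrDefinition getDefinition() { return definition; }
    String getValue() { return value; }
    void setValue(String value) { this.value = value; }
}
```

Keeping the meta-data on the definition rather than on each attribute means a user interface can decide how to label, validate, or hide a value without inspecting the value itself.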

| + | |

| − | | + | |

| − | ==== Pre-defined Attributes ====

| + | |

| − | | + | |

| − | All model elements have at least two mandatory attributes. These attributes are:

| + | |

| − | | + | |

| − | ; '''id''' : This is a unique ID for the model element.

| + | |

| − | | + | |

| − | ; '''name''' : This is a distinguishing name for the model element. It is primarily used for display purposes and does not need to be unique.

| + | |

| − | | + | |

| − | In addition, model elements have different sets of optional attributes. These attributes are shown in the following table:

| + | |

| − | | + | |

| − | {| border="1" cellpadding="2"

| + | |

| − | ! width="120" | model element !! width="140" | attribute definition ID !! width="100" | attribute type !! description

| + | |

| − | |-

| + | |

| − | | rowspan="4" | resource manager

| + | |

| − | | rmState || enum || Enumeration representing the state of the resource manager. Possible values are: STARTING, STARTED, STOPPING, STOPPED, SUSPENDED, ERROR

| + | |

| − | |-

| + | |

| − | | rmDescription || string || Text describing this resource manager

| + | |

| − | |-

| + | |

| − | | rmType || string || Resource manager class

| + | |

| − | |-

| + | |

| − | | rmID || string || Unique identifier for this resource manager. Used for persistence

| + | |

| − | |-

| + | |

| − | | rowspan="2" | machine

| + | |

| − | | machineState || enum || Enumeration representing the state of the machine. Possible values are: UP, DOWN, ALERT, ERROR, UNKNOWN

| + | |

| − | |-

| + | |

| − | | numNodes || int || Number of nodes known by this machine

| + | |

| − | |-

| + | |

| − | | queue || queueState || enum || Enumeration representing the state of the queue. Possible values are: NORMAL, COLLECTING, DRAINING, STOPPED

| + | |

| − | |-

| + | |

| − | | rowspan="3" | node

| + | |

| − | | nodeState || enum || Enumeration representing the state of the node. Possible values are: UP, DOWN, ERROR, UNKNOWN

| + | |

| − | |-

| + | |

| − | | nodeExtraState || enum || Enumeration representing additional state of the node. This is used to reflect control of the node by a resource management system. Possible values are: USER_ALLOC_EXCL, USER_ALLOC_SHARED, OTHER_ALLOC_EXCL, OTHER_ALLOC_SHARED, RUNNING_PROCESS, EXITED_PROCESS, NONE

| + | |

| − | |-

| + | |

| − | | nodeNumber || int || Zero-based index of the node

| + | |

| − | |-

| + | |

| − | | rowspan="14" | job

| + | |

| − | | jobState || enum || Enumeration representing the state of the job. Possible values are: PENDING, STARTED, RUNNING, TERMINATED, SUSPENDED, ERROR, UNKNOWN

| + | |

| − | |-

| + | |

| − | | jobSubId || string || Job submission ID

| + | |

| − | |-

| + | |

| − | | jobId || string || ID of job. Used in commands that need to refer to jobs.

| + | |

| − | |-

| + | |

| − | | queueId || string || Queue ID

| + | |

| − | |-

| + | |

| − | | jobNumProcs || int || Number of processes

| + | |

| − | |-

| + | |

| − | | execName || string || Name of executable

| + | |

| − | |-

| + | |

| − | | execPath || string || Path to executable

| + | |

| − | |-

| + | |

| − | | workingDir || string || Working directory

| + | |

| − | |-

| + | |

| − | | progArgs || array || Array of program arguments

| + | |

| − | |-

| + | |

| − | | env || array || Array containing environment variables

| + | |

| − | |-

| + | |

| − | | debugExecName || string || Debugger executable name

| + | |

| − | |-

| + | |

| − | | debugExecPath || string || Debugger executable path

| + | |

| − | |-

| + | |

| − | | debugArgs || array || Array containing debugger arguments

| + | |

| − | |-

| + | |

| − | | debug || bool || Debug flag

| + | |

| − | |-

| + | |

| − | | rowspan="8" | process

| + | |

| − | | processState || enum || Enumeration representing the state of the process. Possible values are: STARTING, RUNNING, EXITED, EXITED_SIGNALLED, STOPPED, ERROR, UNKNOWN

| + | |

| − | |-

| + | |

| − | | processPID || int || Process ID of the process

| + | |

| − | |-

| + | |

| − | | processExitCode || int || Exit code of the process on termination

| + | |

| − | |-

| + | |

| − | | processSignalName || string || Name of the signal that caused process termination

| + | |

| − | |-

| + | |

| − | | processIndex || int || Zero-based index of the process

| + | |

| − | |-

| + | |

| − | | processStdout || string || Standard output from the process

| + | |

| − | |-

| + | |

| − | | processStderr || string || Error output from the process

| + | |

| − | |-

| + | |

| − | | processNodeId || string || ID of the node that this process is running on

| + | |

| − | |-

| + | |

| − | |}

| + | |

| − | | + | |

| − | ==== Events ====

| + | |

| − | | + | |

| − | The runtime model provides an event notification mechanism to allow other components or tools to receive notification whenever changes occur to the model. Each model element provides two sets of event interfaces:

| + | |

| − | | + | |

| − | ; element events : These events relate to the model element itself. Two main types of events are provided: a ''change'' event, which is triggered when an attribute on the element is added, removed, or changed; and on some elements, an ''error'' event that indicates some kind of error has occurred.

| + | |

| − | | + | |

| − | ; child events : These events relate to children of the model element. Three main types of events are provided: a ''change'' event, which mirrors each child element's change event; a ''new'' event that is triggered when a new child element is added; and a ''remove'' event that is triggered when a child element is removed. Elements that have more than one type of child (e.g. a resource manager has machine and queue children) will provide separate interfaces for each type of child. These events are known as <i>bulk</i> events because they allow for the notification of multiple events simultaneously.

| + | |

| − | | + | |

| − | Event notification is activated by registering either a child listener or an element listener on the model element. Once the listener has been registered, events will begin to be received immediately.
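The register-then-receive behavior described above can be illustrated with a small, self-contained Java sketch. The types below (<tt>ChangeEvent</tt>, <tt>ElementListener</tt>, <tt>ModelElement</tt>) are hypothetical stand-ins written for this example and are not the PTP interfaces; the sketch covers element change events only and keeps attribute values as plain strings.

```java
import java.util.ArrayList;
import java.util.HashMap;
import java.util.List;
import java.util.Map;

/** Hypothetical change event: the source element plus the attributes that changed. */
final class ChangeEvent {
    private final ModelElement source;
    private final Map<String, String> attributes;

    ChangeEvent(ModelElement source, Map<String, String> attributes) {
        this.source = source;
        this.attributes = attributes;
    }

    ModelElement getSource() { return source; }
    Map<String, String> getAttributes() { return attributes; }
}

/** Hypothetical element listener with a single callback. */
interface ElementListener {
    void handleEvent(ChangeEvent event);
}

/** Hypothetical model element that notifies listeners whenever an attribute is set. */
class ModelElement {
    private final Map<String, String> attributes = new HashMap<>();
    private final List<ElementListener> listeners = new ArrayList<>();

    void addElementListener(ElementListener listener) {
        listeners.add(listener);
    }

    void removeElementListener(ElementListener listener) {
        listeners.remove(listener);
    }

    void setAttribute(String key, String value) {
        attributes.put(key, value);
        Map<String, String> changed = new HashMap<>();
        changed.put(key, value);
        ChangeEvent event = new ChangeEvent(this, changed);
        // Iterate over a copy so a listener may unregister itself during the callback.
        for (ElementListener listener : new ArrayList<>(listeners)) {
            listener.handleEvent(event);
        }
    }
}
```

Changes made before a listener is registered are not replayed in this sketch, so a tool that needs the current state would read the element's attributes once at registration time and then rely on events.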

| + | |

| − | | + | |

| − | ==== Implementation Details ====

| + | |

| − | | + | |

| − | '''Element events''' are always named <tt>I<i>Element</i>ChangedEvent</tt> and <tt>I<i>Element</i>ErrorEvent</tt>, where <tt><i>Element</i></tt> is the name of the element (e.g. <tt>Machine</tt> or <tt>Queue</tt>.) Currently the only error event is <tt>IResourceManagerErrorEvent</tt>. Element events provide two methods for obtaining more information about the event:

| + | |

| − | | + | |

| − | ; <tt>public Map<IAttributeDefinition<?,?,?>, IAttribute<?,?,?>> getAttributes();</tt> : This method returns the attributes that have changed on the element. The attributes are returned as a map, with the key being the attribute definition, and the value the attribute. This allows particular attributes to be accessed efficiently.

| + | |

| − | | + | |

| − | ; <tt>public IP<i>Element</i> getSource();</tt> : This method can be used to get the source of the event, which is the element itself.

| + | |

| − | | + | |

| − | '''Child events''' are always named <tt>I<i>Action</i><i>Element</i>Event</tt>, where <tt><i>Action</i></tt> is one of <tt>Changed</tt>, <tt>New</tt>, or <tt>Remove</tt>, and <tt><i>Element</i></tt> is the name of the element. For example, <tt>IChangedJobEvent</tt> will notify child listeners when one or more jobs have been changed. Child events provide three methods for obtaining more information about the event:

| + | |

| − | | + | |

| − | ; <tt>public Collection get<i>Elements</i>();</tt> : This method is used to retrieve the collection of child elements associated with the event.

| + | |

| − | | + | |

| − | ; <tt>public IP<i>Element</i> getSource();</tt> : This method can be used to get the source of the event, which is the element whose children caused the event to be sent.

| + | |

| − | | + | |

| − | Notice that child <tt><i>Change</i></tt> events do not provide access to the changed attributes. They only provide notification of the child elements that have changed. A listener must be registered on the element event <i>for each element</i> if you wish to be notified of the attributes that have changed.

| + | |

| − | | + | |

| − | '''Listener interfaces''' follow the same naming scheme. Element listeners are always named <tt>I<i>Element</i>Listener</tt>, and child listeners are always named <tt>I<i>Element</i>ChildListener</tt>.

| + | |

| − | | + | |

| − | The following table shows each model element along with its associated events and listeners.

| + | |

| − | | + | |

| − | {| border="1"

| + | |

| − | |-

| + | |

| − | ! Model Element !! Element Event !! Element Listener !! Child Event !! Child Listener

| + | |

| − | |-

| + | |

| − | | rowspan="3" | IPJob || rowspan="3" | IJobChangeEvent || rowspan="3" | IJobListener || IChangeProcessEvent || rowspan="3" | IJobChildListener

| + | |

| − | |-

| + | |

| − | | INewProcessEvent

| + | |

| − | |-

| + | |

| − | | IRemoveProcessEvent

| + | |

| − | |-

| + | |

| − | | rowspan="3" | IPMachine || rowspan="3" | IMachineChangeEvent || rowspan="3" | IMachineListener || IChangeNodeEvent || rowspan="3" | IMachineChildListener

| + | |

| − | |-

| + | |

| − | | INewNodeEvent

| + | |

| − | |-

| + | |

| − | | IRemovedNodeEvent

| + | |

| − | |-

| + | |

| − | | rowspan="3" | IPNode || rowspan="3" | INodeChangeEvent || rowspan="3" | INodeListener || IChangeProcessEvent || rowspan="3" | INodeChildListener

| + | |

| − | |-

| + | |

| − | | INewProcessEvent

| + | |

| − | |-

| + | |

| − | | IRemovedProcessEvent

| + | |

| − | |-

| + | |

| − | | IPProcess || IProcessChangeEvent || IProcessListener || ||

| + | |

| − | |-

| + | |

| − | | rowspan="3" | IPQueue || rowspan="3" | IQueueChangeEvent || rowspan="3" | IQueueListener || IChangeJobEvent || rowspan="3" | IQueueChildListener

| + | |

| − | |-

| + | |

| − | | INewJobEvent

| + | |

| − | |-

| + | |

| − | | IRemovedJobEvent

| + | |

| − | |-

| + | |

| − | | rowspan="6" | IResourceManager || rowspan="6" | IResourceManagerChangeEvent || rowspan="6" | IResourceManagerListener || IChangeMachineEvent || rowspan="6" | IResourceManagerChildListener

| + | |

| − | |-

| + | |

| − | | INewMachineEvent

| + | |

| − | |-

| + | |

| − | | IRemovedMachineEvent

| + | |

| − | |-

| + | |

| − | | IChangeQueueEvent

| + | |

| − | |-

| + | |

| − | | INewQueueEvent

| + | |

| − | |-

| + | |

| − | | IRemovedQueueEvent

| + | |

| − | |-

| + | |

| − | |}

| + | |

| − | | + | |

| − | === Runtime Views ===

| + | |

| − | | + | |

| − | The runtime views serve two functions: they provide a means of observing the state of the runtime model, and they allow the user to interact with the parallel environment. Access to the views is managed using an Eclipse ''perspective''. A perspective is a means of grouping views together so that they can provide a cohesive user interface. The perspective used by the runtime platform is called the '''PTP Runtime''' perspective.

| + | |

| − | | + | |

| − | There are currently four main views provided by the runtime platform:

| + | |

| − | | + | |

| − | ; '''resource manager view''' : This view is used to manage the lifecycle of resource managers. Each resource manager model element is displayed in this view. A user can add, delete, start, stop, and edit resource managers using this view. A different color icon is used to display the different states of a resource manager.

| + | |

| − | | + | |

| − | ; '''machines view''' : This view shows information about the machine and node elements in the model. The left part of the view displays all the machine elements in the model (regardless of resource manager). When a machine element is selected, the right part of the view displays the node elements associated with that machine. Different machine and node element icons are used to reflect the different state attributes of the elements.

| + | |

| − | | + | |

| − | ; '''jobs view''' : This view shows information about the job and process elements in the model. The left part of the view displays all the job elements in the model (regardless of queue or resource manager). When a job element is selected, the right part of the view displays the process elements associated with that job. Different job and process element icons are used to reflect the different state attributes of the elements.

| + | |

| − | | + | |

| − | ; '''process detail view''' : This view shows more detailed information about individual processes in the model. Various parts of the view are used to display attributes that are obtained from the process model element.

| + | |

| − | | + | |

| − | ==== Implementation Details ====

| + | |

| − | | + | |

| − | === Runtime Controller ===

| + | |

| − | | + | |

| − | The runtime controller is the ''controller'' part of the MVC design pattern, and is implemented using a layered architecture, where each layer communicates with the layers above and below using a set of defined APIs. The following diagram shows the layers of the runtime controller in more detail.

| + | |

| − | | + | |

| − | [[Image:runtime_controller.png|center]]

| + | |

| − | | + | |

| − | The ''resource manager'' is an abstraction of a resource management system, such as a job scheduler, which is typically responsible for controlling user access to compute resources via queues. The resource manager is responsible for updating the runtime model. The resource manager communicates with the ''runtime system'', which is an abstraction of a parallel computer system's runtime system (that part of the software stack that is responsible for process allocation and mapping, application launch, and other communication services.) The runtime system, in turn, communicates with the ''remote proxy runtime'', which manages proxy communication via some form of remote service. The ''proxy runtime client'' is used to map the runtime system interface onto a set of commands and events that are used to communicate with a physically remote system. The proxy runtime client makes use of the ''proxy client'' to provide client-side communication services for managing proxy communication.

| + | |

| − | | + | |

| − | Each of these layers is described in more detail in the following sections.

| + | |

| − | | + | |

| − | ==== Resource Manager ====

| + | |

| − | | + | |

| − | A ''resource manager'' is the main point of control between the runtime model, runtime views, and the underlying parallel computer system. The resource manager is responsible for maintaining the state of the model, and allowing the user to interact with the parallel system.

| + | |

| − | | + | |

| − | There are a number of pre-defined ''types'' of resource managers that are known to PTP. These resource manager types are provided as plugins that understand how to communicate with a particular kind of resource management system. When a user creates a new resource manager (through the resource manager view), an ''instance'' of one of these types is created. There is a one-to-one correspondence between a resource manager and a resource manager model element. When a new resource manager is created, a new resource manager model element is also created.

| + | |

| − | | + | |

| − | A resource manager only maintains the part of the runtime model below its corresponding resource manager model element. It has no knowledge of other parts of the model.

| + | |

| − | | + | |

| − | ===== Implementation Details =====

| + | |

| − | | + | |

| − | ==== Runtime System ====

| + | |

| − | | + | |

| − | A ''runtime system'' is an abstraction layer that is designed to allow implementers more flexibility in how the plugin communicates with the underlying hardware. When using the implementation that is provided with PTP, this layer does little more than pass commands and events between the layers above and below.

| + | |

| − | | + | |

| − | ===== Implementation Details =====

| + | |

| − | | + | |

| − | ==== Remote Proxy Runtime ====

| + | |

| − | | + | |

| − | The ''remote proxy runtime'' is used when communicating with a proxy server that is remote from the host running Eclipse. This component provides a number of services that relate to defining and establishing remote connections, and launching the proxy server on a remote computer system. It does not participate in actual ''communication'' between the proxy client and proxy server.

| + | |

| − | | + | |

| − | ===== Implementation Details =====

| + | |

| − | | + | |

| − | ==== Proxy Runtime Client ====

| + | |

| − | | + | |

| − | The ''proxy runtime client'' layer is used to define the commands and events that are specific to runtime communication (such as job submission, termination, etc.) with the proxy server. The details of this protocol are defined in [[PTP/designs/rm_proxy|Resource Manager Proxy Protocol]].

| + | |

| − | | + | |

| − | ===== Implementation Details =====

| + | |

| − | | + | |

| − | ==== Proxy Client ====

| + | |

| − | | + | |

| − | The ''proxy client'' provides a range of low level proxy communication services that can be used to implement higher level proxy protocols. These services include:

| + | |

| − | | + | |

| − | * An abstract command that can be extended to provide additional commands.

| + | |

| − | * An abstract event that can be extended to provide additional events.

| + | |

| − | * Connection lifecycle management.

| + | |

| − | * Concrete commands and events for protocol initialization and finalization.

| + | |

| − | * Command/event serialization and de-serialization.

| + | |

| − | | + | |

| − | The proxy client is currently used by both the proxy runtime client and the proxy debug client.
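
As a rough illustration of the services listed above, the following sketch shows an abstract command that a concrete command extends, together with a serialization round trip. The class names (<tt>AbstractCommandSketch</tt>, <tt>SubmitJobCommandSketch</tt>, <tt>ProxyCodecSketch</tt>), the command number, and the length-prefixed text encoding are all assumptions made for this example; they are not the PTP classes or the actual proxy wire format.

```java
import java.util.ArrayList;
import java.util.Arrays;
import java.util.List;

// Hypothetical base class: a command ID, a transaction ID, and string arguments.
abstract class AbstractCommandSketch {
    private final int commandId;
    private final int transactionId;
    private final List<String> args;

    protected AbstractCommandSketch(int commandId, int transactionId, List<String> args) {
        this.commandId = commandId;
        this.transactionId = transactionId;
        this.args = new ArrayList<>(args);
    }

    int getCommandId() { return commandId; }
    int getTransactionId() { return transactionId; }
    List<String> getArgs() { return args; }

    // Illustrative encoding only: "id:tid:argc" followed by ":len;arg" per argument.
    String serialize() {
        StringBuilder sb = new StringBuilder();
        sb.append(commandId).append(':').append(transactionId).append(':').append(args.size());
        for (String arg : args) {
            sb.append(':').append(arg.length()).append(';').append(arg);
        }
        return sb.toString();
    }
}

// A concrete command is created by extending the abstract one.
final class SubmitJobCommandSketch extends AbstractCommandSketch {
    static final int ID = 1; // made-up command number

    SubmitJobCommandSketch(int transactionId, String... args) {
        super(ID, transactionId, Arrays.asList(args));
    }
}

final class ProxyCodecSketch {
    // Reverses serialize(); the length prefixes let arguments contain ':' or ';'.
    static AbstractCommandSketch deserialize(String wire) {
        int pos = 0;
        int[] header = new int[3];
        for (int i = 0; i < header.length; i++) {
            int end = wire.indexOf(':', pos);
            if (end < 0) {
                end = wire.length();
            }
            header[i] = Integer.parseInt(wire.substring(pos, end));
            pos = end + 1;
        }
        List<String> args = new ArrayList<>();
        for (int i = 0; i < header[2]; i++) {
            int semi = wire.indexOf(';', pos);
            int len = Integer.parseInt(wire.substring(pos, semi));
            int start = semi + 1;
            args.add(wire.substring(start, start + len));
            pos = start + len + 1; // skip the ':' separator
        }
        return new AbstractCommandSketch(header[0], header[1], args) { };
    }
}
```

Keeping serialization in the base class means that a higher-level protocol only has to supply command numbers and arguments, which is the kind of reuse the runtime and debug clients rely on.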

| + | |

| − | | + | |

| − | ===== Implementation Details =====

| + | |

| − | | + | |

| − | === Proxy Server ===

| + | |

| − | | + | |

| − | The ''proxy server'' is the only part of the PTP runtime architecture that runs outside the Eclipse JVM. It has two main jobs: to receive commands from the runtime controller and perform actions in response to those commands, and to keep the runtime controller informed of activities that are taking place on the parallel computer system.

| + | |

| − | | + | |

| − | The commands supported by the proxy server are the same as those defined in the [[PTP/designs/rm_proxy|Resource Manager Proxy Protocol]]. Once an action has been performed, the results (if any) are collected into one or more events, and these are transmitted back to the runtime controller. The proxy server must also monitor the status of the system (hardware, configuration, queues, running jobs, etc.) and report "interesting events" back to the runtime controller.

| + | |

| − | | + | |

| − | ==== Implementation Details ====

| + | |

| − | | + | |

| − | ==== Known Implementations ====

| + | |

| − | | + | |

| − | The following table lists the known implementations of proxy servers.

| + | |

| − | | + | |

| − | {| border="1"

| + | |

| − | |-

| + | |

| − | ! PTP Name !! Description !! Batch !! Language !! Operating Systems !! Architectures

| + | |

| − | |-

| + | |

| − | | ORTE || Open Runtime Environment (part of Open MPI) || No || C99 || Linux, MacOS X || x86, x86_64, ppc

| + | |

| − | |-

| + | |

| − | | MPICH2 || MPICH2 mpd runtime || No || Python || Linux, MacOS X || x86, x86_64, ppc

| + | |

| − | |-

| + | |

| − | | PE || IBM Parallel Environment || No || C99 || Linux, AIX || ppc

| + | |

| − | |-

| + | |

| − | | LoadLeveler || IBM LoadLeveler job-management system || Yes || C99 || Linux, AIX || ppc

| + | |

| − | |-

| + | |

| − | |}

| + | |

| − | | + | |

| − | === Launch ===

| + | |

| − | | + | |

| − | The ''launch'' component is responsible for collecting the information required to launch an application, and passing this to the runtime controller to initiate the launch. Both normal and debug launches are handled by this component. Since the parallel computer system may be employing a batch job system, the launch may not result in immediate execution of the application. Instead, the application may be placed in a queue and only executed when the requisite resources become available.

| + | |

| − | | + | |

| − | The Eclipse launch configuration system provides a user interface that allows the user to create launch configurations that encapsulate the parameters and environment necessary to run or debug a program. The PTP launch component has extended this functionality to allow specification of the resources necessary for job ''submission''. The resources available depend on the particular resource management system in place on the parallel computer system. A customizable launch page is available to allow any type of resource to be selected by the user. These resources are passed to the runtime controller in the form of attributes, which are in turn passed on to the resource management system.

| + | |

| − | | + | |

| − | ==== Normal Launch ====

| + | |

| − | | + | |

| − | [[Image:launch.png|center]]

| + | |

| − | | + | |

| − | ==== Debug Launch ====

| + | |

| − | | + | |

| − | [[Image:launch_debug.png|center]]

| + | |

| − | | + | |

| − | ==== Implementation Details ====

| + | |

| − | | + | |

| − | The launch configuration is implemented in package <tt>org.eclipse.ptp.launch</tt>, which is built on top of Eclipse's launch configuration framework. It doesn't matter whether the launch is started from "Run as ..." or "Debug ..."; the launch logic begins here. What distinguishes a <b>debugger</b> launch from a <b>normal</b> launch is a special debug flag, the launch mode.

| + | |

| − | | + | |

| − | <pre>

| + | |

| − | if (mode.equals(ILaunchManager.DEBUG_MODE))

| + | |

| − | // debugger launch

| + | |

| − | else

| + | |

| − | // normal launch

| + | |

| − | </pre>

| + | |

| − | | + | |

| − | | + | |

| − | We sketch the steps following the launch operation.

| + | |

| − | | + | |

| − | First, a set of parameters and attributes is collected. For example, debugger-related parameters such as the host, port, and debugger path are collected into the array <tt>dbgArgs</tt>.

| + | |

| − | | + | |

| − | Then the resource manager is called upon to submit the job:

| + | |

| − | | + | |

| − | <pre>

| + | |

| − | final IResourceManager rm = getResourceManager(configuration);

| + | |

| − | IPJob job = rm.submitJob(attrMgr, monitor);

| + | |

| − | </pre>

| + | |

| − | | + | |

| − | Please refer to <tt>AbstractParallelLaunchConfigurationDelegates</tt> for more details.
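
The two steps above (collect attributes, then hand them to the resource manager) can be sketched in a self-contained form. This is a simplified model, not the PTP delegate: <tt>LaunchSketch</tt>, <tt>JobSubmitter</tt>, and the attribute keys are hypothetical names chosen for the example. The only value taken from Eclipse is the mode string <tt>"debug"</tt>, which matches <tt>ILaunchManager.DEBUG_MODE</tt>.

```java
import java.util.LinkedHashMap;
import java.util.Map;

final class LaunchSketch {
    // Same string value as ILaunchManager.DEBUG_MODE in the Eclipse debug framework.
    static final String DEBUG_MODE = "debug";

    // Hypothetical stand-in for the resource manager's job submission entry point.
    interface JobSubmitter {
        String submitJob(Map<String, String> attributes);
    }

    // Collects launch attributes, adds debugger settings for a debug launch,
    // and passes everything to the submitter. Attribute keys are made up.
    static String launch(String mode, Map<String, String> configuration,
                         String debugHost, int debugPort, String debuggerPath,
                         JobSubmitter resourceManager) {
        Map<String, String> attrs = new LinkedHashMap<>(configuration);
        if (DEBUG_MODE.equals(mode)) {
            attrs.put("debug", "true");
            attrs.put("debugHost", debugHost);
            attrs.put("debugPort", Integer.toString(debugPort));
            attrs.put("debuggerPath", debuggerPath);
        }
        return resourceManager.submitJob(attrs);
    }
}
```

Because the debugger settings travel as ordinary attributes, the submitter does not need a separate code path for debug launches in this model.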

| + | |

| − | | + | |

| − | | + | |

| − | The resource manager will eventually contact the backend proxy server and pass in all the attributes necessary to submit the job. As detailed elsewhere, a proxy server usually provides a set of routines for submitting a job, cancelling a job, monitoring a job, and so on, for a particular parallel computing environment. The ORTE proxy server is used here as an example to continue the flow.

| + | |

| − | | + | |

| − | The ORTE resource manager at the front end (Eclipse side) establishes a connection with the ORTE proxy server (implemented by <tt>ptp_orte_proxy.c</tt> in package <tt>org.eclipse.ptp.orte.proxy</tt>), and the two communicate through a wire protocol (version 2.0 at this point). Control then passes to the function <tt>ORTE_SubmitJob</tt>.

| + | |

| − | | + | |

| − | ORTE packs the relevant information into a "context" structure, which is created by invoking <tt>OBJ_NEW()</tt>. Launching a job with ORTE is a two-step process: first it allocates the job, then it launches the job. The call <tt>ORTE_SPAWN</tt> combines these two steps, which is simpler and is sufficient for a normal job launch. For a debug job, the process is more complicated and is discussed separately.

| + | |

| − | | + | |

| − | <pre>

| + | |

| − | apps = OBJ_NEW(orte_app_context_t);

| + | |

| − | apps->num_procs = num_procs;

| + | |

| − | apps->app = full_path;

| + | |

| − | apps->cwd = strdup(cwd);

| + | |

| − | apps->env = env;

| + | |

| − | ...

| + | |

| − | if (debug) {

| + | |

| − | rc = debug_spawn(debug_full_path, debug_argc, debug_args, &apps, 1, &ortejobid, &debug_jobid);

| + | |

| − | } else {

| + | |

| − | rc = ORTE_SPAWN(&apps, 1, &ortejobid, job_state_callback);

| + | |

| − | }

| + | |

| − | </pre>

| + | |

| − | | + | |

| − | | + | |

| − | The <tt>job_state_callback</tt> is a callback function registered with ORTE, and it is invoked when there is a job state change. Within this callback function, <tt>sendProcessChangeStateEvent</tt> is invoked to notify the Eclipse front end so that it can update the UI if necessary.

| + | |

| − |

| + | |

| − | Now, let's turn our attention to the first case: how a debug job is launched through ORTE, and some of the complications involved.

| + | |

| − | | + | |

| − | The general launch sequence is as follows: for an N-process job, ORTE launches N+1 SDM processes. The first N processes are called <u>SDM servers</u>; the (N+1)th process is called the <u>SDM client</u> (or master), because it coordinates communication with the SDM servers and connects back to the Eclipse front end. Each SDM server in turn starts a real application process; in other words, there is a one-to-one mapping between SDM servers and real application processes.
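
The process layout can be captured in a few lines. The sketch below assumes that SDM processes are ranked from 0 to N and that the client is the last rank; the text only says the client is the (N+1)th process, so the zero-based numbering and the names <tt>SdmLayoutSketch</tt> and <tt>Role</tt> are assumptions of this example.

```java
final class SdmLayoutSketch {
    enum Role { SERVER, CLIENT }

    // An N-process application needs N servers plus one client.
    static int sdmProcessCount(int appProcs) {
        if (appProcs < 1) {
            throw new IllegalArgumentException("appProcs must be positive");
        }
        return appProcs + 1;
    }

    // Ranks 0..N-1 are servers (one per application process); rank N is the client.
    static Role roleOf(int sdmRank, int appProcs) {
        if (sdmRank < 0 || sdmRank >= sdmProcessCount(appProcs)) {
            throw new IllegalArgumentException("rank out of range: " + sdmRank);
        }
        return sdmRank == appProcs ? Role.CLIENT : Role.SERVER;
    }
}
```

For a 4-process job this gives five SDM processes: ranks 0 to 3 are servers and rank 4 is the client.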

| + | |

| − | | + | |

| − | So there are two sets of processes (and two jobs) that ORTE needs to be aware of: one set for the SDM servers, the other for the real application. Inside the <tt>debug_spawn()</tt> function:

| + | |

| − | <code><pre>

| + | |

| − | rc = ORTE_ALLOCATE_JOB(app_context, num_context, &jid1, debug_app_job_state_callback);

| + | |

| − | </pre></code>

| + | |

| − | First, the job is allocated without actually being launched; the real purpose is to obtain job id #1, which is for the application.

| + | |

| − | | + | |

| − | Next, the debugger job must be allocated, but before doing so, a debugger job context has to be created, just as was done for the application.

| + | |

| − | <code><pre>

| + | |

| − | debug_context = OBJ_NEW(orte_app_context_t);

| + | |

| − | debug_context->num_procs = app_context[0]->num_procs + 1;

| + | |

| − | debug_context->app = debug_path;

| + | |

| − | debug_context->cwd = strdup(app_context[0]->cwd);

| + | |

| − | ...

| + | |

| − | asprintf(&debug_context->argv[i++], "--jobid=%d", jid1);

| + | |

| − | debug_context->argv[i++] = NULL;

| + | |

| − | ...

| + | |

| − | </pre></code>

| + | |

| − | | + | |

| − | Note that the appropriate job id must be passed to the debugger: this is the application job id obtained earlier from the allocation. The debugger needs this job id in order to attach to the application.

| + | |

| − | Once the debugger context is ready, the debugger job is allocated by:

| + | |

| − | <code><pre>

| + | |

| − | rc = ORTE_ALLOCATE_JOB(&debug_context, 1, &jid2, debug_job_state_callback);

| + | |

| − | </pre></code>

| + | |

| − | Note that job id #2 is for the debugger. Finally, the debugger is launched by invoking <tt>ORTE_LAUNCH_JOB()</tt>:

| + | |

| − | | + | |

| − | <code><pre>

| + | |

| − | if (ORTE_SUCCESS != (rc = ORTE_LAUNCH_JOB(jid2))) {

| + | |

| − | OBJ_RELEASE(debug_context);

| + | |

| − | ORTE_ERROR_LOG(rc);

| + | |

| − | return rc;

| + | |

| − | }

| + | |

| − | </pre></code>
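
The ordering of the steps in <tt>debug_spawn()</tt> can be modelled outside of ORTE. The following sketch is a plain Java simulation of that ordering, not a binding to the ORTE API: <tt>FakeRuntime</tt>, <tt>AppContext</tt>, and the job numbering are hypothetical. It mirrors three details from the C fragments: the debugger context asks for one more process than the application, it reuses the application's working directory, and it receives the application job id through a <tt>--jobid=</tt> argument.

```java
import java.util.ArrayList;
import java.util.List;

final class DebugSpawnSketch {
    // Minimal analogue of the fields set on the context structure in the C code.
    static final class AppContext {
        final String app;
        final String cwd;
        final int numProcs;
        final List<String> argv;

        AppContext(String app, String cwd, int numProcs, List<String> argv) {
            this.app = app;
            this.cwd = cwd;
            this.numProcs = numProcs;
            this.argv = new ArrayList<>(argv);
        }
    }

    // Hypothetical stand-in for the runtime: hands out job ids and records launches.
    static final class FakeRuntime {
        private int nextJobId = 1;
        final List<Integer> launched = new ArrayList<>();

        int allocate(AppContext context) { return nextJobId++; }
        void launch(int jobId) { launched.add(jobId); }
    }

    static AppContext debuggerContext(AppContext app, String debuggerPath,
                                      List<String> debuggerArgs, int appJobId) {
        List<String> argv = new ArrayList<>(debuggerArgs);
        argv.add("--jobid=" + appJobId); // tells the debugger which job to attach to
        return new AppContext(debuggerPath, app.cwd, app.numProcs + 1, argv);
    }

    // Returns {application job id, debugger job id}; only the debugger job is launched.
    static int[] debugSpawn(FakeRuntime runtime, AppContext app,
                            String debuggerPath, List<String> debuggerArgs) {
        int appJobId = runtime.allocate(app); // allocated, not launched
        AppContext debug = debuggerContext(app, debuggerPath, debuggerArgs, appJobId);
        int debugJobId = runtime.allocate(debug);
        runtime.launch(debugJobId);
        return new int[] { appJobId, debugJobId };
    }
}
```

In this model the application job is never launched directly, which matches the description that each SDM server starts its own application process.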

| + | |

| − | | + | |

| − | == Debug Platform ==

| + | |

| − | | + | |

| − | The debug platform comprises those elements relating to the debugging of parallel applications. The debug platform is comprised of the following elements in the architecture diagram:

| + | |

| − | | + | |

| − | * debug model

| + | |

| − | * debug views

| + | |

| − | * debug controller

| + | |

| − | * debug launch

| + | |

| − | * scalable debug manager

| + | |

| − | | + | |

| − | The ''debug model'' provides a representation of a job and its associated processes being debugged. The ''debug views'' allow the user to interact with the debug model, and control the operation of the debugger. The ''debug controller'' interacts with the job being debugged and is responsible for updating the state of the debug model. The debug model and controller are collectively known as the ''parallel debug interface (PDI)'', which is a set of abstract interfaces that can be implemented by different types of debuggers to provide the concrete classes necessary to support a variety of architectures and systems. PTP provides an implementation of PDI that communicates via a proxy running on a remote machine. The Java side of the implementation provides a set of commands and events that are used for communication with a remote debug agent. The proxy protocol is built on top of the same protocol used by the runtime services. The ''scalable debug manager'' is an external program that runs on the remote system and implements the server side of the proxy protocol. It manages the debugger communication between the proxy client and low-level debug engines that control the debug operations on the application processes.

| + | |

| − | | + | |

| − | === Debug Model ===

| + | |

| − | | + | |

| − | The debug model represents objects in the target program that are of interest to the debugger. Model objects are updated to reflect the states of the corresponding objects in the target, and can be displayed in views in the user interface. They form the core of a model-view-controller design pattern. PDI provides a broad range of model objects, and these are summarized in the following table.

| + | |

| − | | + | |

| − | {| border="1"

| + | |

| − | |-

| + | |

| − | ! Model Object

| + | |

| − | ! Description

| + | |

| − | |-

| + | |

| − | | IPDIAddressBreakpoint

| + | |

| − | | A breakpoint that will suspend execution when a particular address is reached

| + | |

| − | |-

| + | |

| − | | IPDIArgument

| + | |

| − | | A function or procedure argument

| + | |

| − | |-

| + | |

| − | | IPDIArgumentDescriptor

| + | |

| − | | Detailed information about an argument

| + | |

| − | |-

| + | |

| − | | IPDIExceptionpoint

| + | |

| − | | A breakpoint that will suspend execution when a particular exception is raised

| + | |

| − | |-

| + | |

| − | | IPDIExpression

| + | |

| − | | An expression that can be evaluated to produce a value

| + | |

| − | |-

| + | |

| − | | IPDIFunctionBreakpoint

| + | |

| − | | A breakpoint that will suspend execution when a particular function is called

| + | |

| − | |-

| + | |

| − | | IPDIGlobalVariable

| + | |

| − | | A variable that has global scope within the execution context

| + | |

| − | |-

| + | |

| − | | IPDIGlobalVariableDescriptor

| + | |

| − | | Detailed information about a global variable

| + | |

| − | |-

| + | |

| − | | IPDIInstruction

| + | |

| − | | A machine instruction

| + | |

| − | |-

| + | |

| − | | IPDILineBreakpoint

| + | |

| − | | A breakpoint that will suspend execution when a particular source line number is reached

| + | |

| − | |-

| + | |

| − | | IPDILocalVariable

| + | |

| − | | A variable with scope local to the current stack frame

| + | |

| − | |-

| + | |

| − | | IPDILocalVariableDescriptor

| + | |

| − | | Detailed information about a local variable

| + | |

| − | |-

| + | |

| − | | IPDIMemory

| + | |

| − | | An object representing a single memory location in an execution context

| + | |

| − | |-

| + | |

| − | | IPDIMemoryBlock

| + | |

| − | | A contiguous segment of memory in an execution context

| + | |

| − | |-

| + | |

| − | | IPDIMixedInstruction

| + | |

| − | | A machine instruction with source context information

| + | |

| − | |-

| + | |

| − | | IPDIMultiExpressions

| + | |

| − | | Expressions in multiple execution contexts

| + | |

| − | |-

| + | |

| − | | IPDIRegister

| + | |

| − | | A special purpose variable

| + | |

| − | |-

| + | |

| − | | IPDIRegisterDescriptor

| + | |

| − | | Detailed information about a register

| + | |

| − | |-

| + | |

| − | | IPDIRegisterGroup

| + | |

| − | | A group of registers in a target execution context

| + | |

| − | |-

| + | |

| − | | IPDIRuntimeOptions

| + | |

| − | | Configuration information about a debug session

| + | |

| − | |-

| + | |

| − | | IPDISharedLibrary

| + | |

| − | | A shared library that has been loaded into the debug target

| + | |

| − | |-

| + | |

| − | | IPDISharedLibraryManagement

| + | |

| − | | A shared library manager

| + | |

| − | |-

| + | |

| − | | IPDISignal

| + | |

| − | | A POSIX signal

| + | |

| − | |-

| + | |

| − | | IPDISignalDescriptor

| + | |

| − | | Detailed information about a signal

| + | |

| − | |-

| + | |

| − | | IPDISourceManagement

| + | |

| − | | Manages information about the source code

| + | |

| − | |-

| + | |

| − | | IPDIStackFrame

| + | |

| − | | A stack frame in a suspended execution context (thread)

| + | |

| − | |-

| + | |

| − | | IPDIStackFrameDescriptor

| + | |

| − | | Detailed information about a stack frame

| + | |

| − | |-

| + | |

| − | | IPDITarget

| + | |

| − | | A debuggable program. This is the root of the PDI model

| + | |

| − | |-

| + | |

| − | | IPDITargetExpression

| + | |

| − | | An expression with an associated variable

| + | |

| − | |-

| + | |

| − | | IPDIThread

| + | |

| − | | An execution context

| + | |

| − | |-

| + | |

| − | | IPDIThreadGroup

| + | |

| − | | A group of threads

| + | |

| − | |-

| + | |

| − | | IPDIThreadStorage

| + | |

| − | | Storage associated with a thread

| + | |

| − | |-

| + | |

| − | | IPDIThreadStorageDescriptor

| + | |

| − | | Detailed information about thread storage

| + | |

| − | |-

| + | |

| − | | IPDITracepoint

| + | |

| − | | A point in the program execution at which data will be collected

| + | |

| − | |-

| + | |

| − | | IPDIVariable

| + | |

| − | | A data structure in the program

| + | |

| − | |-

| + | |

| − | | IPDIVariableDescriptor

| + | |

| − | | Detailed information about a variable

| + | |

| − | |-

| + | |

| − | | IPDIWatchpoint

| + | |

| − | | A breakpoint that will suspend execution when a particular data structure is accessed

| + | |

| − | |}

| + | |

| − | | + | |

| − | === Debug Views ===

| + | |

| − | | + | |

| − | The debugger provides a number of views that display the state of objects in the debug model. The views provided by the PTP debugger are summarized in the following table.

| + | |

| − | | + | |

| − | {| border="1"

| + | |

| − | |-

| + | |

| − | ! View Name

| + | |

| − | ! Description

| + | |

| − | |-

| + | |

| − | | Breakpoints View

| + | |

| − | | Displays the breakpoints for the program, and whether each breakpoint is active or inactive. Active breakpoints will trigger suspension of the execution context.

| + | |

| − | |-

| + | |

| − | | Debug View

| + | |

| − | | Displays the threads and stack frames associated with a process. It is used to navigate to different stack frame locations.

| + | |

| − | |-

| + | |

| − | | Parallel Debug View

| + | |

| − | | Displays the parallel job and processes associated with the job. Allows the user to define groupings of processes for control and viewing purposes.

| + | |

| − | |-

| + | |

| − | | PTP Variable View

| + | |

| − | | Registers variables that will be displayed in tooltip popups in the Parallel Debug View.

| + | |

| − | |-

| + | |

| − | | Signals View

| + | |

| − | | Displays the state of signal handling in the debugger, and allows the user to change how signals will be handled.

| + | |

| − | |-

| + | |

| − | | Variable View

| + | |

| − | | Displays the values of all local variables in the current execution context.

| + | |

| − | |-

| + | |

| − | |}

| + | |

| − | | + | |

| − | === Debug Controller ===

| + | |

| − | | + | |

| − | The debug controller is responsible for maintaining the state of the debug model, and for communicating with the backend debug engine. Commands to the debug engine are initiated by the user via interaction with views in the user interface, and cause the debug controller to invoke the appropriate method on the PDI implementation. PDI then translates the commands into the format required by the backend debug engine. Events that are generated by the backend debug engine are converted by the PDI debugger implementation into PDI model events. The controller then uses these events to update the debug model.

| + | |

| − | | + | |

| − | === Debug API ===

| + | |

| − | | + | |

| − | The debug controller uses an API known as the parallel debug interface (PDI) to communicate with a backend debug engine. A new PDI implementation is only required to support a different debug protocol than that used by the scalable debug manager (SDM).

| + | |

| − | | + | |

| − | ==== Addressing ====

| + | |

| − | | + | |

| − | Most debugger methods and events require knowledge of which processes they are directed to, or where they have originated from. The BitList class is used for this purpose. Debugger processes are numbered from 0 to N-1 (where N is the total number) and correspond to bits in the BitList. For example, to send a command to processes 15 through 30, bits 15-30 would be set in the BitList. The PDI makes no assumptions about how the BitList is represented once it leaves the PDI classes.
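
The addressing scheme can be tried out with the standard <tt>java.util.BitSet</tt> class, which is used here only as a stand-in; the PTP BitList class has its own API, which is not shown in this document. The helper name <tt>AddressingSketch.processes</tt> is hypothetical.

```java
import java.util.BitSet;

final class AddressingSketch {
    // Builds the address set for processes first..last (inclusive) out of `total` processes.
    static BitSet processes(int total, int first, int last) {
        if (first < 0 || last >= total || first > last) {
            throw new IllegalArgumentException("invalid range " + first + ".." + last);
        }
        BitSet bits = new BitSet(total);
        bits.set(first, last + 1); // BitSet.set(from, to) treats `to` as exclusive
        return bits;
    }
}
```

Calling <tt>AddressingSketch.processes(64, 15, 30)</tt> sets the sixteen bits 15 through 30 and leaves all others clear, which corresponds to the example in the text.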

| + | |

| − | | + | |

| − | ==== Data Representation ====

| + | |

| − | | + | |

| − | Unlike most debuggers, a PDI debugger does not use strings to represent data values that have been obtained from the target processes. Instead, PDI provides a first-class data type that represents both the type and value of the target data in an architecture independent manner. This allows Eclipse to manipulate data that originates from any target architecture, regardless of word length, byte ordering, or other architectural issues. The following table shows the classes that are available for representing both simple and compound data types.

| + | |

| − | | + | |

| − | {| border="1"

| + | |

| − | ! Type Class

| + | |

| − | ! Value Class

| + | |

| − | ! Description

| + | |

| − | |-

| + | |

| − | | IAIFTypeAddress

| + | |

| − | | IAIFValueAddress

| + | |

| − | | Represents a machine address.

| + | |

| − | |-

| + | |

| − | | IAIFTypeArray

| + | |

| − | | IAIFValueArray

| + | |

| − | | Represents a multi-dimensional array.

| + | |

| − | |-

| + | |

| − | | IAIFTypeBool

| + | |

| − | | IAIFValueBool

| + | |

| − | | Represents a boolean value.

| + | |

| − | |-

| + | |

| − | | IAIFTypeChar

| + | |

| − | | IAIFValueChar

| + | |

| − | | Represents a single character.

| + | |

| − | |-

| + | |

| − | | IAIFTypeCharPointer

| + | |

| − | | IAIFValueCharPointer

| + | |

| − | | Represents a pointer to a character string (i.e. a C string type).

| + | |

| − | |-

| + | |

| − | | IAIFTypeClass

| + | |

| − | | IAIFValueClass

| + | |

| − | | Represents a class.

| + | |

| − | |-

| + | |

| − | | IAIFTypeEnum

| + | |

| − | | IAIFValueEnum

| + | |

| − | | Represents an enumerated value.

| + | |

| − | |-

| + | |

| − | | IAIFTypeFloat

| + | |

| − | | IAIFValueFloat

| + | |

| − | | Represents a floating point number.

| + | |

| − | |-

| + | |

| − | | IAIFTypeFunction

| + | |

| − | | IAIFValueFunction

| + | |

| − | | Represents a function object.

| + | |

| − | |-

| + | |

| − | | IAIFTypeInt

| + | |

| − | | IAIFValueInt

| + | |

| − | | Represents an integer.

| + | |

| − | |-

| + | |

| − | | IAIFTypeLong

| + | |

| − | | IAIFValueLong

| + | |

| − | | Represents a long integer.

| + | |

| − | |-

| + | |

| − | | IAIFTypeLongLong

| + | |

| − | | IAIFValueLongLong

| + | |

| − | | Represents a long long integer.

| + | |

| − | |-

| + | |

| − | | IAIFTypeNamed

| + | |

| − | | IAIFValueNamed

| + | |

| − | | Represents a named object.

| + | |

| − | |-

| + | |

| − | | IAIFTypePointer

| + | |

| − | | IAIFValuePointer

| + | |

| − | | Represents a pointer to an object.

| + | |

| − | |-

| + | |

| − | | IAIFTypeRange

| + | |

| − | | IAIFValueRange

| + | |

| − | | Represents a value range.

| + | |

| − | |-

| + | |

| − | | IAIFTypeReference

| + | |

| − | | IAIFValueReference

| + | |

| − | | Represents a reference to a named object.

| + | |

| − | |-

| + | |

| − | | IAIFTypeShort

| + | |

| − | | IAIFValueShort

| + | |

| − | | Represents a short integer.

| + | |

| − | |-

| + | |

| − | | IAIFTypeString

| + | |

| − | | IAIFValueString

| + | |

| − | | Represents a string (not C string).

| + | |

| − | |-

| + | |

| − | | IAIFTypeStruct

| + | |

| − | | IAIFValueStruct

| + | |

| − | | Represents a structure.

| + | |

| − | |-

| + | |

| − | | IAIFTypeUnion

| + | |

| − | | IAIFValueUnion

| + | |

| − | | Represents a union.

| + | |

| − | |-

| + | |

| − | | IAIFTypeVoid

| + | |

| − | | IAIFValueVoid

| + | |

| − | | Represents a void type.

| + | |

| − | |}
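
To illustrate why pairing a type description with the raw value avoids architecture problems, the sketch below decodes the same integer from big-endian and little-endian targets. It is not the AIF encoding and does not use the classes in the table; <tt>TypedValueSketch</tt>, its <tt>Kind</tt> enum, and the idea of carrying the byte order alongside the bytes are assumptions made for this example.

```java
import java.nio.ByteBuffer;
import java.nio.ByteOrder;

final class TypedValueSketch {
    enum Kind {
        INT32(4), INT64(8);

        final int size;
        Kind(int size) { this.size = size; }
    }

    private final Kind kind;
    private final ByteOrder order;
    private final byte[] raw;

    // The value keeps its type and the target's byte order together with the raw bytes.
    TypedValueSketch(Kind kind, ByteOrder order, byte[] raw) {
        if (raw.length != kind.size) {
            throw new IllegalArgumentException("expected " + kind.size + " bytes");
        }
        this.kind = kind;
        this.order = order;
        this.raw = raw.clone();
    }

    // Decodes the value on the host, independent of the host's own word size or byte order.
    long asLong() {
        ByteBuffer buffer = ByteBuffer.wrap(raw).order(order);
        switch (kind) {
            case INT32:
                return buffer.getInt();
            case INT64:
                return buffer.getLong();
            default:
                throw new IllegalStateException("unhandled kind: " + kind);
        }
    }
}
```

A string representation would force the front end to parse target-specific formatting; a typed value like this can be compared, converted, or displayed without knowing which machine produced it.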

| + | |

| − | | + | |

| − | ==== Command Requests ====

| + | |

| − | | + | |

| − | The PDI specifies a number of commands that are sent from the UI to the backend debug engine in order to perform debug operations. Commands are addressed to destination debug processes using the BitList class. The following table lists the interfaces that must be implemented to support debug commands.

| + | |

| − | | + | |

| − | {| border="1"

| + | |

| − | |-

| + | |

| − | ! Interface

| + | |

| − | ! Description

| + | |

| − | |-

| + | |

| − | | IPDIDebugger

| + | |

| − | | This is the main interface for implementing a new debugger. The concrete implementation of this class must provide methods for each debugger command, as well as some utility methods for controlling debugger operation.

| + | |

| − | |-

| + | |

| − | | IPDIBreakpointManagement

| + | |

| − | | This interface provides methods for managing all types of breakpoints, including line, function, and address breakpoints, watchpoints, and exceptions.

| + | |

| − | |-

| + | |

| − | | IPDIExecuteManagement

| + | |

| − | | This interface provides methods for controlling the execution of the program being debugged, such as resuming, stepping, and termination.

| + | |

| − | |-

| + | |

| − | | IPDIMemoryBlockManagement

| + | |

| − | | This interface provides methods for managing direct access to process memory.

| + | |

| − | |-

| + | |

| − | | IPDISignalManagement

| + | |

| − | | This interface provides methods for managing signals.

| + | |

| − | |-

| + | |

| − | | IPDIStackframeManagement

| + | |

| − | | This interface provides methods for managing access to process stack frames.

| + | |

| − | |-

| + | |

| − | | IPDIThreadManagement

| + | |

| − | | This interface provides methods for managing process threads.

| + | |

| − | |-

| + | |

| − | | IPDIVariableManagement

| + | |

| − | | This interface provides methods for managing all types of variables (local, global, etc.) and expression evaluation.

| + | |

| − | |}

| + | |

| − | | + | |

| − | ==== Events ====

| + | |

| − | | + | |

| − | Every PDI command results in one or more events. An event contains a list of source addresses that are represented by a BitList class. Each event may also contain additional data that provides more detailed information about the event. The data in an event with multiple sources is assumed to be identical for each source. The following table provides a list of the available events.

| + | |

| − | | + | |

| − | {| border="1"

| + | |

| − | |-

| + | |

| − | ! Event

| + | |

| − | ! Description

| + | |

| − | |-

| + | |

| − | | IPDIChangedEvent

| + | |

| − | | Notification that a PDI model object has changed.

| + | |

| − | |-

| + | |

| − | | IPDIConnectedEvent

| + | |

| − | | Notification that the debugger has started successfully.

| + | |

| − | |-

| + | |

| − | | IPDICreatedEvent

| + | |

| − | | Notification that a new PDI model object has been created.

| + | |

| − | |-

| + | |

| − | | IPDIDestroyedEvent

| + | |

| − | | Notification that a PDI model object has been destroyed.

| + | |

| − | |-

| + | |

| − | | IPDIDisconnectedEvent

| + | |

| − | | Notification that the debugger session has terminated.

| + | |

| − | |-

| + | |

| − | | IPDIErrorEvent

| + | |

| − | | Notification that an error condition has occurred.

| + | |

| − | |-

| + | |

| − | | IPDIRestartedEvent

| + | |

| − | | Not currently used.

| + | |

| − | |-

| + | |

| − | | IPDIResumedEvent

| + | |

| − | | Notification that the debugger target has resumed execution.

| + | |

| − | |-

| + | |

| − | | IPDIStartedEvent

| + | |

| − | | Notification that the debugger has successfully started.

| + | |

| − | |-

| + | |

| − | | IPDISuspendedEvent

| + | |

| − | | Notification that the debugger target has been suspended.

| + | |

| − | |}

| + | |

| − | | + | |

| − | Many events also provide additional information associated with the event result. The following table provides a list of these interfaces.

| + | |

| − | | + | |

| − | {| border="1"

| + | |

| − | |-

| + | |

| − | ! Interface

| + | |

| − | ! Description

| + | |

| − | |-

| + | |

| − | | IPDIBreakpointInfo

| + | |

| − | | Information about a process when it stops at a breakpoint.

| + | |

| − | |-

| + | |

| − | | IPDIDataReadMemoryInfo

| + | |

| − | | Result of an IPDIDataReadMemoryRequest

| + | |

| − | |-

| + | |

| − | | IPDIEndSteppingRangeInfo

| + | |

| − | | Information about the process when a step command is completed.

| + | |

| − | |-

| + | |

| − | | IPDIErrorInfo

| + | |

| − | | Additional information about the cause of an error condition.

| + | |

| − | |-

| + | |

| − | | IPDIExitInfo

| + | |

| − | | Information about the reason that a target process exited.

| + | |

| − | |-

| + | |

| − | | IPDIFunctionFinishedInfo

| + | |

| − | | Not currently implemented.

| + | |

| − | |-

| + | |

| − | | IPDILocationReachedInfo

| + | |

| − | | Not currently implemented.

| + | |

| − | |-

| + | |

| − | | IPDIMemoryBlockInfo

| + | |

| − | | Represents a block of memory in the target process.

| + | |

| − | |-

| + | |

| − | | IPDIRegisterInfo

| + | |

| − | | Not currently implemented.

| + | |

| − | |-

| + | |

| − | | IPDISharedLibraryInfo

| + | |

| − | | Not currently implemented.

| + | |

| − | |-

| + | |

| − | | IPDISignalInfo

| + | |

| − | | Information about a signal on the target system.

| + | |

| − | |-

| + | |

| − | | IPDIThreadInfo

| + | |

| − | | Represents information about a thread.

| + | |

| − | |-

| + | |

| − | | IPDIVariableInfo

| + | |

| − | | Represents information about a variable.

| + | |

| − | |-

| + | |

| − | | IPDIWatchpointScopeInfo

| + | |

| − | | Not currently implemented.

| + | |

| − | |-

| + | |

| − | | IPDIWatchpointTriggerInfo

| + | |

| − | | Not currently implemented.

| + | |

| − | |}
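
The relationship between events, their source addresses, and the debug model can be sketched as follows. This is a simplified model under stated assumptions: <tt>java.util.BitSet</tt> stands in for BitList, and <tt>DebugEventSketch</tt>, <tt>Event</tt>, <tt>Model</tt>, and the two event kinds are hypothetical names that only loosely correspond to the suspended and resumed events in the tables above.

```java
import java.util.BitSet;
import java.util.HashMap;
import java.util.Map;

final class DebugEventSketch {
    enum Kind { SUSPENDED, RESUMED }

    // One event may originate from many processes; the attached info is shared by all of them.
    static final class Event {
        final Kind kind;
        final BitSet sources;
        final String info;

        Event(Kind kind, BitSet sources, String info) {
            this.kind = kind;
            this.sources = (BitSet) sources.clone();
            this.info = info;
        }
    }

    // Toy model: remembers the last reported state and info of each process.
    static final class Model {
        private final Map<Integer, Kind> state = new HashMap<>();
        private final Map<Integer, String> lastInfo = new HashMap<>();

        // The controller's job in miniature: apply one event to every source process.
        void apply(Event event) {
            for (int p = event.sources.nextSetBit(0); p >= 0; p = event.sources.nextSetBit(p + 1)) {
                state.put(p, event.kind);
                lastInfo.put(p, event.info);
            }
        }

        Kind stateOf(int process) { return state.get(process); }
        String infoOf(int process) { return lastInfo.get(process); }
    }
}
```

Grouping sources into a single event keeps the number of messages independent of the number of processes when they all report the same thing, for example when every process stops at the same breakpoint.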

| + | |

| − | | + | |

| − | === Scalable Debug Manager ===

| + | |

| − | | + | |

| − | == Remote Services ==

| + | |

| − | | + | |

| − | === Overview ===

| + | |

| − | | + | |

| − | Although some users are lucky enough to have a parallel computer under their desk, most users will be developing and running parallel applications on remote systems. This means that PTP must support monitoring, running and debugging applications on computer systems that are geographically distant from the user's desktop. The traditional approach taken to this problem was to require the users to log into a remote machine using a terminal emulation program, and then run commands directly on the remote system. Early versions of PTP also took this approach, requiring the use of the remote X11 protocol to display an Eclipse session that was actually running on the remote machine. However, this technique suffers from a number of performance and usability problems that are difficult to overcome. The approach taken in later versions of PTP (2.0 and higher) is to run Eclipse locally on the user's desktop, and provide a proxy protocol to communicate with a lightweight agent running on the remote system, as described in the preceding sections. In addition to the proxy protocol, there are a number of other remote activities that must also take place to transparently support remote development. PTP support for these activities is described in the following sections.

| + | |

| − | | + | |

| − | === Remote Services Abstraction ===

| + | |

| − | | + | |

| − | PTP takes the approach that the user should be spared from needing to know about specific details of how to interact with a remote computer system. That is, the user should only need to supply enough information to establish a connection to a remote machine (typically the name of the system, and a username and password), then everything else should be taken care of automatically. Details such as the protocol used for communication, how files are accessed, or commands initiated, do not need to be exposed to the user.

| + | |

| − | | + | |

| − | An additional requirement is that PTP should not be dependent on other Eclipse projects (apart from the platform). Unfortunately, while the Eclipse File System (EFS) provides an abstraction for accessing non-local resources, it does not support other kinds of services, such as remote command execution. The only alternative to EFS is the RSE project, but introducing such a dependency into PTP is not desirable at this time.

| + | |

| − | | + | |

| − | In order to address these requirements, PTP provides a remote services abstraction layer that allows uniform access to local and remote resources using arbitrary remote service providers. The following diagram shows the architecture of the remote services abstraction.

| + | |

| − | | + | |

| − | [[Image:remote_services.png|center]]

| + | |

| − | | + | |

| − | The top two layers (shown in orange) comprise the abstraction layer. The lower (green) layer contains the actual service provider implementations. By providing this separation between the abstraction and the service providers, no additional dependencies are introduced into PTP.

| + | |

| − | | + | |

| − | Three primary service types are provided:

| + | |

| − | | + | |

| − | ; Connection management : Create and manage connections to the remote system. Once a connection has been established, it can be used to support additional activities.

| + | |

| − | | + | |

| − | ; File management : Provides services that allow browsing for and performing operations on remote resources, either files or directories.

| + | |

| − | | + | |

| − | ; Process management : Provides services for running commands on a remote system.

| + | |

| − | | + | |

| − | There is also support available for discovering and managing service providers.

| + | |

| − | | + | |

| − | === Implementation Details === | + | |

| − | | + | |

| − | The main plugin for remote services is <tt>org.eclipse.ptp.remote</tt>. This plugin must be included in order to access the remote abstraction layer. The plugin provides an extension point for adding remote services implementations. Each remote services implementation supplies a <i>remote services ID</i> which is used to identify the particular implementation. The following table lists the current remote services implementations and their IDs.

| + | |

| − | | + | |

| − | {| border="1"

| + | |

| − | |-

| + | |

| − | ! Plugin Name !! ID !! Remote Service Provider

| + | |

| − | |-

| + | |

| − | | <tt>org.eclipse.ptp.remote</tt> || <tt>org.eclipse.ptp.remote.LocalServices</tt> || Local filesystem and process services

| + | |

| − | |-

| + | |

| − | | <tt>org.eclipse.ptp.remote.rse</tt> || <tt>org.eclipse.ptp.remote.RSERemoteServices</tt> || Remote System Explorer

| + | |

| − | |-

| + | |

| − | |}

| + | |

| − | | + | |

| − | In addition to providing a remote services ID, each plugin must provide implementations for the three main service types: connection management, file management, and process management. The plugin is also responsible for ensuring that it is initialized prior to any of the services being invoked.

| + | |

| − | | + | |

| − | ==== Entry Point: PTPRemotePlugin ====

| + | |

| − | | + | |

| − | The activation class for the main remote services plugin is <tt>PTPRemotePlugin</tt>. This class provides two main methods for accessing the remote services:

| + | |

| − | | + | |

| − | ; <tt>IRemoteServices[] PTPRemotePlugin.getAllRemoteServices()</tt> : This method returns an array of all remote service providers that are currently available. A service provider for accessing local services is guaranteed to be available, so this method will always return an array containing at least one element.

| + | |

| − | | + | |

| − | ; <tt>IRemoteServices PTPRemotePlugin.getRemoteServices(String id)</tt> : This method returns the remote services provider that corresponds to the ID given by the <code>id</code> argument.

| + | |

| − | | + | |

| − | Typically, the remote service providers returned by <tt>getAllRemoteServices()</tt> will be used to populate a dropdown that allows the user to select the service they want to use. Once a provider has been selected, its ID can be stored and passed to <tt>getRemoteServices()</tt> to retrieve the same remote services later.
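The lookup pattern described above can be sketched roughly as follows. This is a minimal, self-contained illustration rather than the actual PTP code: <tt>RemoteServicesStub</tt> and <tt>RemoteRegistrySketch</tt> are hypothetical stand-ins for <tt>IRemoteServices</tt> and <tt>PTPRemotePlugin</tt>, the <tt>getId()</tt> and <tt>getName()</tt> accessors are assumptions, and returning <code>null</code> for an unknown ID is also an assumption. Only the two provider IDs are taken from the table above.

```java
import java.util.ArrayList;
import java.util.List;

// Hypothetical stand-in for IRemoteServices; getId() and getName() are
// assumed accessors used only for this illustration.
interface RemoteServicesStub {
    String getId();
    String getName();
}

// Hypothetical stand-in for the two lookup methods on PTPRemotePlugin.
final class RemoteRegistrySketch {
    // Provider IDs as listed in the table of remote services implementations.
    static final String LOCAL_ID = "org.eclipse.ptp.remote.LocalServices";
    static final String RSE_ID = "org.eclipse.ptp.remote.RSERemoteServices";

    private static final List<RemoteServicesStub> PROVIDERS = new ArrayList<>();

    static {
        // The local provider is always registered, so the list is never empty.
        PROVIDERS.add(provider(LOCAL_ID, "Local"));
        PROVIDERS.add(provider(RSE_ID, "Remote System Explorer"));
    }

    private static RemoteServicesStub provider(final String id, final String name) {
        return new RemoteServicesStub() {
            public String getId() { return id; }
            public String getName() { return name; }
        };
    }

    // Mirrors getAllRemoteServices(): every available provider.
    static RemoteServicesStub[] getAllRemoteServices() {
        return PROVIDERS.toArray(new RemoteServicesStub[0]);
    }

    // Mirrors getRemoteServices(String id); returning null for an unknown ID
    // is an assumption of this sketch.
    static RemoteServicesStub getRemoteServices(String id) {
        for (RemoteServicesStub services : PROVIDERS) {
            if (services.getId().equals(id)) {
                return services;
            }
        }
        return null;
    }

    public static void main(String[] args) {
        // Step 1: list the providers, as a dropdown would.
        for (RemoteServicesStub services : getAllRemoteServices()) {
            System.out.println(services.getName() + " (" + services.getId() + ")");
        }
        // Step 2: remember only the chosen ID, then look the provider up again.
        String savedId = RSE_ID;
        RemoteServicesStub chosen = getRemoteServices(savedId);
        System.out.println("Selected: " + chosen.getName());
    }
}
```

Storing the ID rather than the provider object keeps the saved selection independent of any particular provider instance, which is one plausible reason for the two-method design.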

| + | |

| − | | + | |

| − | ==== Obtaining Services: IRemoteServices ====

| + | |

| − | | + | |

| − | The <tt>IRemoteServices</tt> interface represents a particular set of remote services, and is the main interface for interacting with the remote service provider. There are four main methods available:

| + | |

| − | | + | |

| − | ; <tt>boolean isInitialized()</tt> : This should be called to check that the service has been initialized. Other methods should only be called if this returns true.

| + | |

| − | | + | |

| − | ; <tt>IRemoteConnectionManager getConnectionManager()</tt> : Returns a connection manager. This is used for creating and managing connections.

| + | |

| − | | + | |

| − | ; <tt>IRemoteFileManager getFileManager()</tt> : Returns a file manager for a given connection. A file manager is responsible for managing operations on files and directories.

| + | |

| − | | + | |

| − | ; <tt>IRemoteProcessBuilder getProcessBuilder()</tt> : Returns a process builder for a given connection. A process builder is responsible for running commands on a remote system.
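The rule that other methods should only be called once <tt>isInitialized()</tt> returns true can be captured in a small guard. The sketch below is an illustration under stated assumptions: <tt>ServicesStub</tt> and <tt>ConnectionManagerStub</tt> are hypothetical stand-ins for <tt>IRemoteServices</tt> and <tt>IRemoteConnectionManager</tt>, and returning <code>null</code> for an uninitialized provider is a choice made for this example, not documented PTP behaviour.

```java
// Hypothetical stand-in for IRemoteConnectionManager (no methods needed here).
interface ConnectionManagerStub {
}

// Hypothetical stand-in for the part of IRemoteServices used by the guard.
interface ServicesStub {
    boolean isInitialized();
    ConnectionManagerStub getConnectionManager();
}

final class InitGuardSketch {
    // Returns the connection manager only when the provider reports that it
    // is initialized; otherwise returns null so the caller can report an error.
    static ConnectionManagerStub connectionManagerOrNull(ServicesStub services) {
        if (services == null || !services.isInitialized()) {
            return null;
        }
        return services.getConnectionManager();
    }

    // Builds a fake provider with a fixed initialization state, for demonstration.
    static ServicesStub fake(final boolean initialized) {
        return new ServicesStub() {
            public boolean isInitialized() { return initialized; }
            public ConnectionManagerStub getConnectionManager() {
                return new ConnectionManagerStub() { };
            }
        };
    }

    public static void main(String[] args) {
        System.out.println(connectionManagerOrNull(fake(true)) != null);
        System.out.println(connectionManagerOrNull(fake(false)) != null);
    }
}
```

The same guard would apply before requesting a file manager or process builder.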

| + | |

| − | | + | |

| − | ==== Connection Management: IRemoteConnectionManager ====

| + | |

| − | | + | |