PDS Client 2.0 Design

DESIGN PROPOSAL

The PDS Client is packaged as a code library and run by a separate operating system process with the permissions of the currently logged-in user, so each user on the system has their own instance of the PDS Client.

Architecture

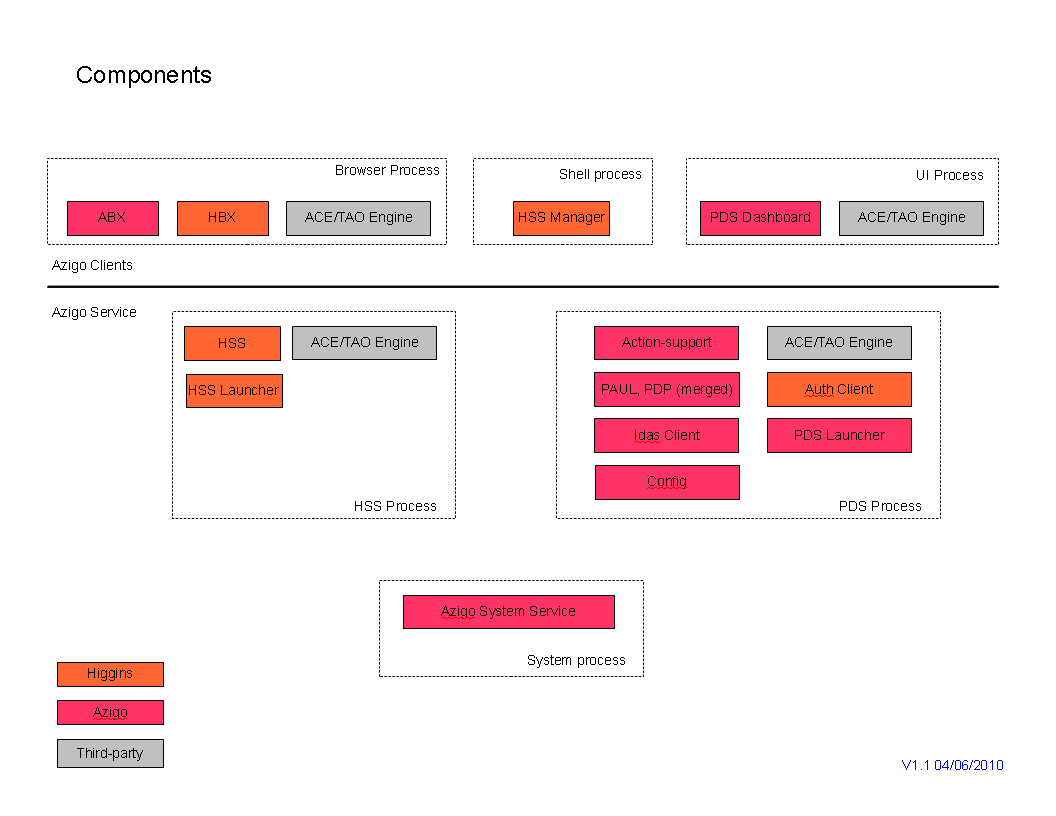

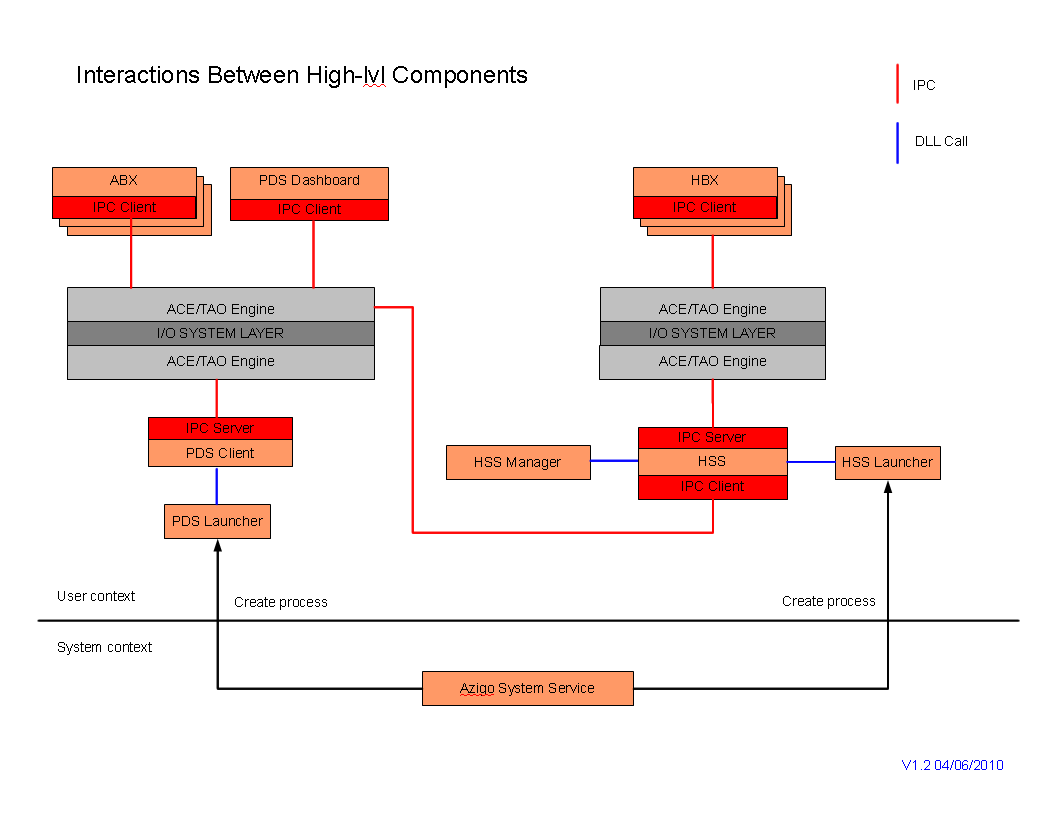

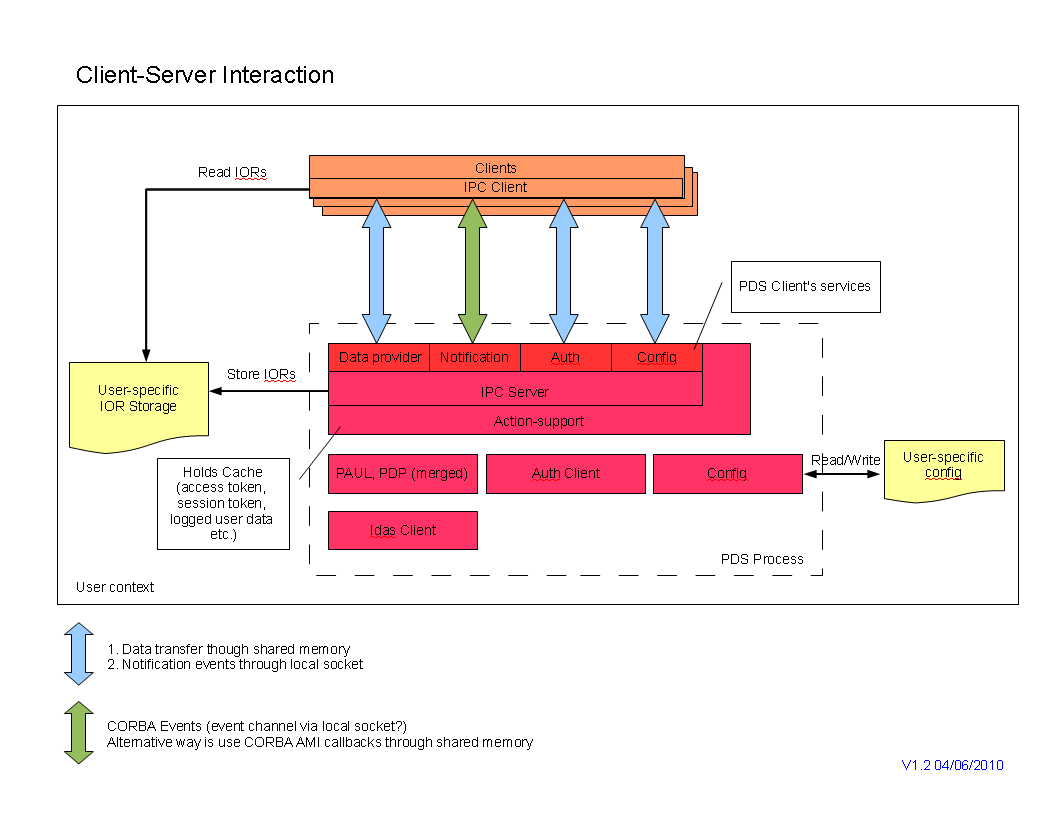

The following three pictures show a new/revised design for the PDS Client 2.0. The architecture includes some new components. Communication with remote servers is the same as shown on the old PDS Client 2.0 architecture as well as internal communication between PDS Client low-level components.

1) First picture shows all components grouped 'by process' on user's machine:

2) On the second picture interactions between high-level components is represented:

3) As a result of overview of current Azigo3 APIs it has been discovered that PDS Client provides few logical groups of APIs and we can realize them as PDS Client's services as shown below.

The IPC Server endpoint is configured by the ORB engine (ACE/TAO) during IPC Server initialization. This endpoint information, together with the server object references and POA configuration, is stored in the IOR Storage. This might be a user-specific storage or, for example, the TAO Naming Service (but run in the system context).

IOR data is updated after each start of the IPC Server. Using the IOR data, all clients of the PDS Client (browser extensions, PDS Dashboard, etc., running under one system user) can configure their IPC clients and access the IPC Server.

Hence, when several users are logged in, each user's IPC clients know the correct IPC Server endpoint (because IOR data is user-specific), which prevents the clients of one user from accessing the server of another user on the system.
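The per-user lookup described above can be illustrated with a minimal sketch. It assumes a hypothetical `IorStore` class that keeps one stringified IOR per user in memory; the class name, its methods, and the placeholder IOR values are illustrative only and are not part of the PDS Client or of ACE/TAO. A real deployment would back this with user-specific storage or the TAO Naming Service.

```cpp
#include <cassert>
#include <map>
#include <string>

// Hypothetical in-memory stand-in for the IOR Storage (assumption: one
// stringified IOR per system user). Not an ACE/TAO API.
class IorStore {
public:
    // Called by the IPC Server after every start: overwrites the IOR
    // previously recorded for this user.
    void publish(const std::string& user, const std::string& ior) {
        assert(!user.empty() && !ior.empty());
        iors_[user] = ior;
    }

    // Called by an IPC client: copies the IOR that belongs to `user` into
    // `ior_out` and returns true, or returns false if that user's server
    // has not published an IOR yet.
    bool lookup(const std::string& user, std::string& ior_out) const {
        std::map<std::string, std::string>::const_iterator it = iors_.find(user);
        if (it == iors_.end()) {
            return false;
        }
        ior_out = it->second;
        return true;
    }

private:
    std::map<std::string, std::string> iors_;  // user name -> stringified IOR
};
```

Because a client only ever asks for the entry keyed by its own user, it cannot obtain the endpoint of another user's IPC Server from this store; republishing after a restart simply replaces the stale entry.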

Work progress

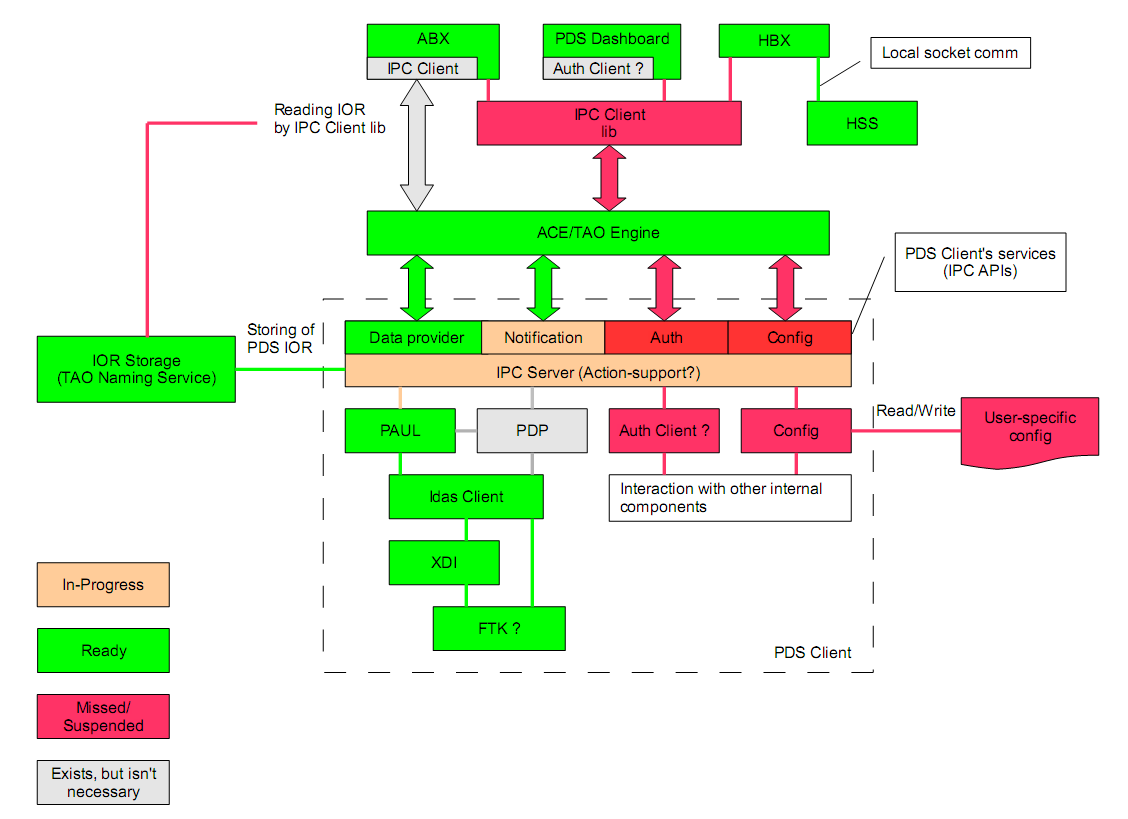

The following picture shows state of the PDS Client for 5/10/2010:

Comments:

- Due to the large size of the TAO COS Events Service dependencies (1.6 MB), this service is not used. Instead, the Notification Service implementation is based on a prototype CORBA-based distributed notification mechanism. This mechanism implements a "publish/subscribe" communication protocol: it allows Suppliers to pass messages containing event data to a dynamically managed group of Consumers. This is similar to the OMG COS Events Service, though not as sophisticated.

- The TAO Naming Service has been chosen as the store for the PDS IORs.
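The publish/subscribe pattern mentioned in the first comment can be sketched as follows. This is an in-process illustration only: the `EventChannel` class and its method names are hypothetical, events are plain strings, and no CORBA calls are made, whereas the actual prototype delivers events between processes through the ORB.

```cpp
#include <cassert>
#include <cstddef>
#include <functional>
#include <map>
#include <string>
#include <utility>

// Hypothetical in-process event channel (assumption: events are strings).
// The real mechanism is CORBA-based and crosses process boundaries.
class EventChannel {
public:
    typedef std::function<void(const std::string&)> Consumer;
    typedef int ConsumerId;

    EventChannel() : next_id_(0) {}

    // Adds a consumer to the group and returns a handle for unsubscribing.
    ConsumerId subscribe(Consumer consumer) {
        assert(consumer != nullptr);
        const ConsumerId id = next_id_++;
        consumers_[id] = std::move(consumer);
        return id;
    }

    // Removes a consumer; returns false if the handle is unknown.
    bool unsubscribe(ConsumerId id) {
        return consumers_.erase(id) == 1;
    }

    // Called by a supplier: pushes the event data to every consumer that is
    // currently subscribed and returns how many consumers received it.
    std::size_t push(const std::string& event) const {
        // Iterate over a copy so that a consumer may unsubscribe while
        // it is handling an event.
        const std::map<ConsumerId, Consumer> snapshot = consumers_;
        for (std::map<ConsumerId, Consumer>::const_iterator it = snapshot.begin();
             it != snapshot.end(); ++it) {
            it->second(event);
        }
        return snapshot.size();
    }

private:
    std::map<ConsumerId, Consumer> consumers_;
    ConsumerId next_id_;
};
```

The supplier never needs to know who the consumers are; the group can grow or shrink between calls to `push`, which is the "dynamically managed group" property described above.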

The PDS Client consists of the following C++ components:

- Components 2.0#PAUL - Personal Agent Utility Layer (PAUL) - the higher level logic of the PDS Client 2.0

- Components 2.0#Persona Data Provider - an API for the Persona Data Model 2.0

- Components 2.0#ACE/TAO Engine - a set of libraries that implements IPC communication.

- Components 2.0#Auth Client

- Components 2.0#IdAS Client C++ - communicates with Personal Data Store 2.0

- Components 2.0#Azigo System Service - planned. A system-dependent component that manages the PDS and HSS processes and runs them for each user who logs in, with that user's permissions.

- Components 2.0#Config - planned. Manages user configuration (sync interval, settings, etc.).

Security

Open issue: we still have to figure out how to identify which clients are allowed to access the PDS Client.