
Revision as of 11:51, 27 October 2010

Cloud Enabled EclipseLink : CEE

DISCLAIMER: This page reflects investigation into how EclipseLink technology can be used as part of, or benefit from integration with, other projects. It does NOT imply any formal certification from EclipseLink on these technologies or make any assumptions on the direction of the API. This page is purely experimental speculation.

Scope

- This page, started 20101020, details thoughts, initiatives or projects surrounding performance optimization through the use of Cloud Computing hardware running EclipseLink as a layer of its software stack.

- Multitenancy may enter into the discussion here - where everything is shared (OS, software stack, application and database) except that data is kept separate between different customers. The complexity in this case moves to the developer in the form of separate data models.

- Virtualization may be discussed here - where everything is logically separated into virtual machines. A valid use case is one where virtualization is used as a means to offer multiple release versions of a multitenancy application.

Analysis

- The move from a single server hosting single-customer applications to a cloud server hosting multiple applications, some or all of them multitenant, will involve many design decisions because of the increased complexity of simultaneously dealing with concurrent customers, applications and hardware.

- In some ways we are already dealing with most of these issues separately.

- Hardware concurrency is dealt with in clusters and grid systems

- Software concurrency is experienced when multiple threads synchronize their access to resources such as memory and persistent storage on the system or network

- Customer concurrency is an issue in multitenant systems where the data model and its use are the only non-shared resources

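As a rough illustration of the software and customer concurrency points above, the following minimal sketch keeps each customer's data in its own thread-safe map while the rest of the application is shared. The class name `TenantStore` and its methods are hypothetical and are not part of EclipseLink or any project discussed here.

```java
import java.util.Map;
import java.util.concurrent.ConcurrentHashMap;

/** Hypothetical illustration: shared application code, per-tenant data. */
final class TenantStore {
    // One inner map per tenant; both levels are safe for concurrent access.
    private final Map<String, Map<String, String>> dataByTenant = new ConcurrentHashMap<>();

    /** Stores a value that is visible only to the given tenant. */
    void put(String tenantId, String key, String value) {
        dataByTenant.computeIfAbsent(tenantId, id -> new ConcurrentHashMap<>()).put(key, value);
    }

    /** Returns the tenant's value for the key, or null if the tenant has none. */
    String get(String tenantId, String key) {
        Map<String, String> data = dataByTenant.get(tenantId);
        return data == null ? null : data.get(key);
    }
}
```

Two customers can then use the same key without seeing each other's data; a real multitenant application would more likely enforce this separation in the data model or the database rather than in an in-memory map.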

Recommendations

Software

- We will investigate various levels of networking scenarios that link our distributed JVM nodes.

Base Case 1: Custom Distributed RMI

EE Distributed Session Bean

- Deploy this on each of your WebLogic servers

    @Stateful(mappedName="ejb/Node")
    @Local
    public class Node implements NodeRemote, NodeLocal {
        private int transition;
        private int state;

        public void setState(int aState) {
            System.out.println("_Distributed: new state: " + aState);
            state = aState;
        }

        public int getState() {
            return state;
        }

        public int getAdjacent(int offsetX, int offsetY) {
            return 1;
        }
    }

    @Remote
    public interface NodeRemote {
        //####<15-Sep-2010 1:21:15 o'clock PM EDT> <Info> <EJB> <xps435> <AdminServer> <[STANDBY] ExecuteThread: '5' for queue: 'weblogic.kernel.Default (self-tuning)'> <<WLS Kernel>> <> <> <1284571275760> <BEA-010009>
        //<EJB Deployed EJB with JNDI name org_eclipse_persistence_example_distributed_ClientEARorg_eclipse_persistence_example_distributed_ClientEJB_jarNode_Home.>
        public void setState(int state);
        public int getState();
        public int getAdjacent(int offsetX, int offsetY);
    }

SE client

- Run this on one of the servers or a separate SE JVM

    package org.eclipse.persistence.jmx;

    import java.util.ArrayList;
    import java.util.HashMap;
    import java.util.Hashtable;
    import java.util.List;
    import java.util.Map;

    import javax.naming.Context;
    import javax.naming.InitialContext;
    import javax.rmi.PortableRemoteObject;

    import org.eclipse.persistence.example.distributed.NodeRemote;

    /**
     * This class tests RMI connections to multiple WebLogic servers running on
     * remote JVM's. It is intended for experimental concurrency investigations only.
     * @author mfobrien 20100916
     *
     */
    public class JNDIClient {
        public static final List<String> serverNames = new ArrayList<String>();
        private Map<String, Hashtable<String, String>> contextMap = new HashMap<String, Hashtable<String, String>>();
        private Map<String, Context> rmiContextMap = new HashMap<String, Context>();
        private Map<String, NodeRemote> remoteObjects = new HashMap<String, NodeRemote>();
        private Map<String, Integer> stateToSet = new HashMap<String, Integer>();
        private static Map<String, String> serverIPMap = new HashMap<String, String>();
        private static final String CONTEXT_FACTORY_NAME = "weblogic.jndi.WLInitialContextFactory";
        private static final String SESSION_BEAN_REMOTE_NAME = "ejb/Node#org.eclipse.persistence.example.distributed.NodeRemote";
        //private String sessionBeanRemoteName = "java:comp/env/ejb/Node"; // EE only
        //private String sessionBeanRemoteName = "org_eclipse_persistence_example_distributed_ClientEARorg_eclipse_persistence_example_distributed_ClientEJB_jarNode_Home" ;

        /**
         * For each server add the name key and corresponding RMI URL
         */
        static {
            // Beowulf5 on Cat6 1Gb/Sec
            serverNames.add("beowulf5");
            serverIPMap.put("beowulf5", "t3://192.168.0.165:7001");
            // Beowulf6 on Cat6 1Gb/Sec
            serverNames.add("beowulf6");
            serverIPMap.put("beowulf6", "t3://192.168.0.166:7001");
        }

        public JNDIClient() {
        }

        public void connect() {
            /**
             * Note: only import weblogic.jar and naming.jar
             */
            // For JNDI we are forced to use Hashtable instead of HashMap
            // populate the hashtables
            for(String aServer : serverNames) {
                Hashtable<String, String> aTable = new Hashtable<String, String>();
                contextMap.put(aServer,aTable);
                aTable.put(Context.INITIAL_CONTEXT_FACTORY,CONTEXT_FACTORY_NAME);
                aTable.put(Context.PROVIDER_URL, serverIPMap.get(aServer));
            }

            /**
             * Setup and connect to RMI Objects
             */
            try {
                // Establish RMI connections to the session beans
                for(String aServer : serverNames) {
                    Context aContext = new InitialContext(contextMap.get(aServer));
                    rmiContextMap.put(aServer, aContext);
                    System.out.println("Context for " + aServer + " : " + aContext);
                    // For qualified name look for weblogic log "EJB Deployed EJB with JNDI name"
                    Object aRemoteReference = aContext.lookup(SESSION_BEAN_REMOTE_NAME);
                    System.out.println("Remote Object: " + aRemoteReference);
                    NodeRemote aNode = (NodeRemote) PortableRemoteObject.narrow(aRemoteReference, NodeRemote.class);
                    remoteObjects.put(aServer, aNode);
                    System.out.println("Narrowed Session Bean: " + aNode);
                    // initialize state list
                    stateToSet.put(aServer, new Integer(0));
                }

                NodeRemote aNode;
                StringBuffer aBuffer = new StringBuffer();
                // Endlessly generate RMI requests
                for(;;) {
                    // Send messages to entire grid in parallel
                    for(String remoteServer : remoteObjects.keySet()) {
                        aNode = remoteObjects.get(remoteServer);
                        // increment server's pending state
                        stateToSet.put(remoteServer, stateToSet.get(remoteServer).intValue() + 1);
                        aNode.setState(stateToSet.get(remoteServer));
                        aBuffer = new StringBuffer("State from: ");
                        aBuffer.append(remoteServer);
                        aBuffer.append(" = ");
                        aBuffer.append(aNode.getState());
                        System.out.println(aBuffer.toString());
                    }
                }
            } catch (Exception e) {
                e.printStackTrace();
            }
        }

        public static void main(String[] args) {
            JNDIClient client = new JNDIClient();
            client.connect();
        }
    }
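The inner loop of the client calls each server in turn, although its comment mentions sending to the grid in parallel. The following is a minimal sketch of one way the calls could be fanned out concurrently with a thread pool. `StateNode`, `InMemoryNode` and `ParallelGridClient` are hypothetical names introduced only for this illustration: `StateNode` stands in for `NodeRemote` so that the sketch runs without a WebLogic server.

```java
import java.util.ArrayList;
import java.util.LinkedHashMap;
import java.util.List;
import java.util.Map;
import java.util.concurrent.Callable;
import java.util.concurrent.ExecutionException;
import java.util.concurrent.ExecutorService;
import java.util.concurrent.Executors;
import java.util.concurrent.Future;

/** Hypothetical stand-in for NodeRemote so the sketch runs without a server. */
interface StateNode {
    void setState(int state);
    int getState();
}

/** In-memory node used only for illustration. */
final class InMemoryNode implements StateNode {
    private volatile int state;
    public void setState(int aState) { state = aState; }
    public int getState() { return state; }
}

final class ParallelGridClient {
    /** Sends newState to every node concurrently and returns the state each node reports back. */
    static Map<String, Integer> broadcast(Map<String, StateNode> nodes, int newState) {
        Map<String, Integer> result = new LinkedHashMap<>();
        if (nodes.isEmpty()) {
            return result;
        }
        // One thread per node, so a slow server does not delay the others.
        ExecutorService pool = Executors.newFixedThreadPool(nodes.size());
        try {
            List<String> names = new ArrayList<>(nodes.keySet());
            List<Callable<Integer>> calls = new ArrayList<>();
            for (String name : names) {
                StateNode node = nodes.get(name);
                calls.add(() -> {
                    node.setState(newState);
                    return node.getState();
                });
            }
            // invokeAll blocks until every call has completed and returns
            // the futures in the same order as the submitted tasks.
            List<Future<Integer>> futures = pool.invokeAll(calls);
            for (int i = 0; i < names.size(); i++) {
                result.put(names.get(i), futures.get(i).get());
            }
            return result;
        } catch (InterruptedException e) {
            Thread.currentThread().interrupt();
            throw new IllegalStateException(e);
        } catch (ExecutionException e) {
            throw new IllegalStateException(e.getCause());
        } finally {
            pool.shutdown();
        }
    }
}
```

In the real client, the values of the `remoteObjects` map would take the place of the in-memory nodes. Each task talks to a different session bean, so no single bean reference is shared between threads.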

OTS 1: EclipseLink Cache Coordination

OTS 2: TopLink Grid (uses Coherence)

- We will investigate using Oracle Coherence as a distributed 2nd level cache. Before using it from our JPA application, we will verify the Coherence install that ships with certain versions of WebLogic.

Coherence Quickstart

- Here we verify Coherence 3.6 by installing and running the Coherence example that is available as an option in the WebLogic Server 11gR1 install.

Distributed Coherence Cache Example - 4 caches

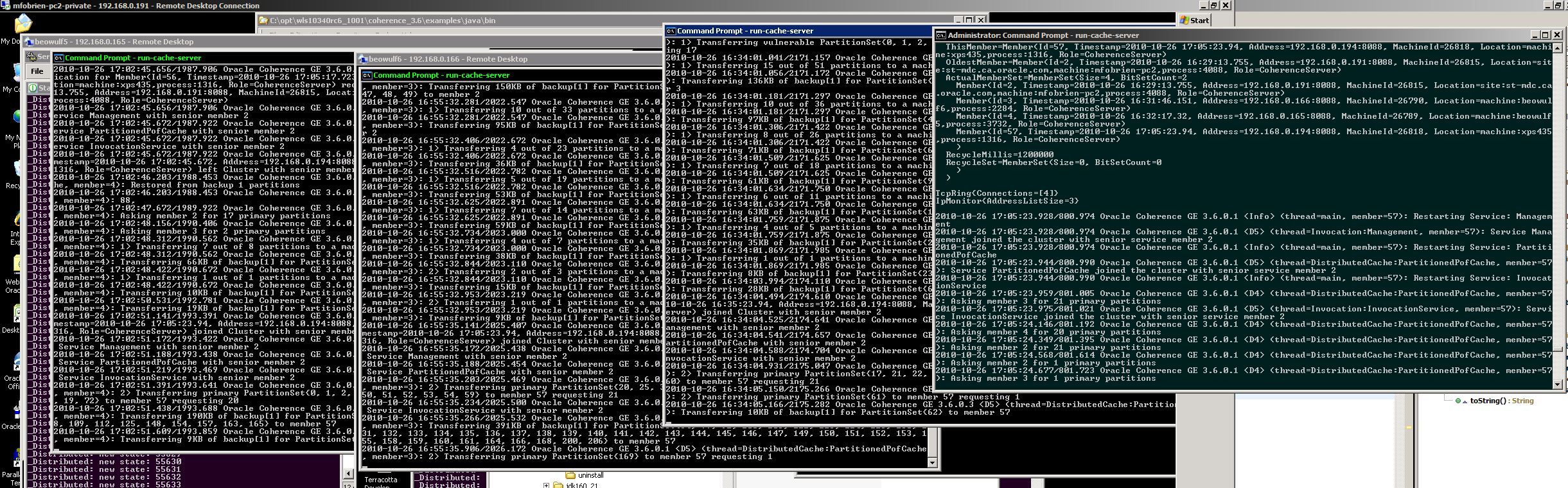

- The image above shows the messaging that occurs out of the box via cache discovery when running Coherence on multiple distributed JVMs on different machines.

- We start the nodes in the following order: 192.168.0.194, 192.168.0.191, 192.168.0.166, 192.168.0.165.

- See $WEBLOGIC_HOME\coherence_3.6\examples\java\bin

Hardware

4 Machines

- Our cluster simulations will run on an existing set of 4 machines of varying capability on a private subnet, accessible via remote desktop from the dual-networked PC1.

PC1:xps435: Windows 7 64-bit 8 core Intel i7-920

- 192.168.0.194

PC2:mfobrien-pc2: Windows XP 32-bit 2 core Intel E8400

- 192.168.0.191

PC3:beowulf5: Windows XP 32-bit 2 core Intel P630

- 192.168.0.165

PC4:beowulf6: Windows XP 32-bit 2 core Intel P630

- 192.168.0.166