ATL/User Guide

Revision as of 05:54, 3 June 2009

Contents

- 1 Introduction

- 2 Installation

- 3 Overview of the Atlas Transformation Language

- 4 The ATL Language

- 4.1 Data types

- 4.2 ATL Comments

- 4.3 OCL Declarative Expressions

- 4.4 ATL Helpers

- 4.5 ATL Rules

- 4.6 ATL Queries

- 4.7 ATL Keywords

- 4.8 ATL Tips & Tricks

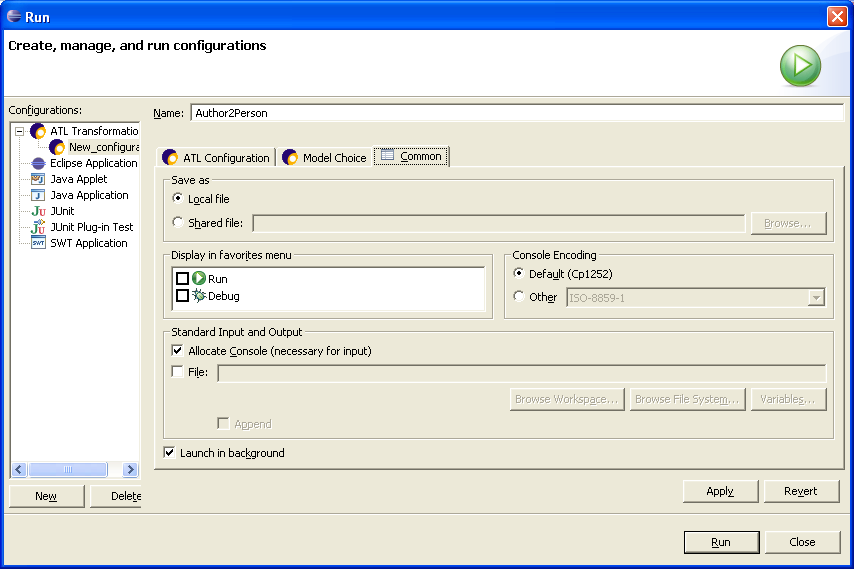

- 5 The ATL Tools

- 5.1 Perspectives

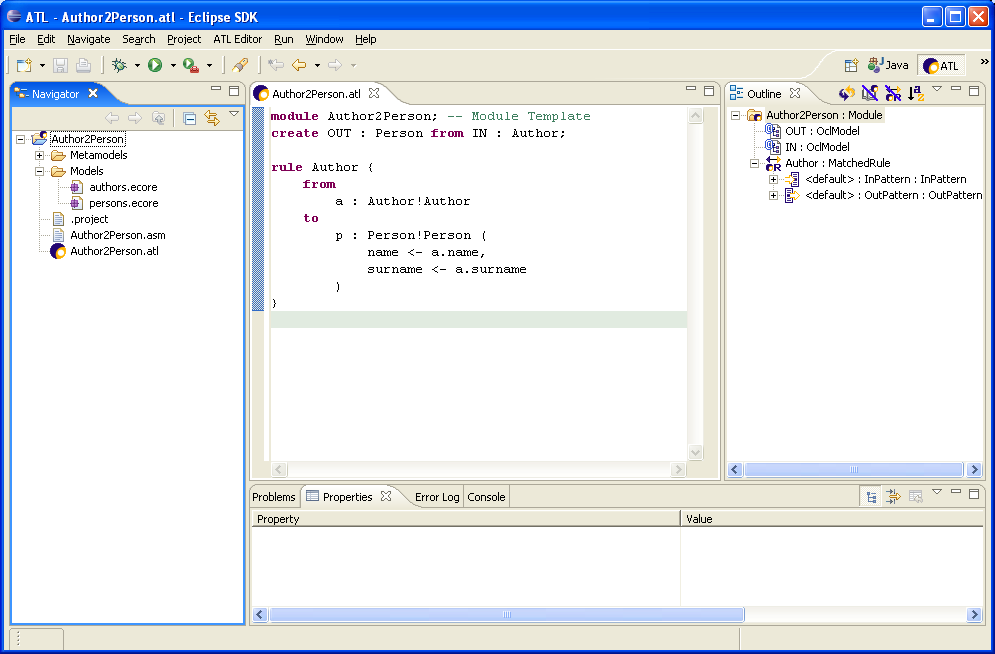

- 5.2 Programming ATL

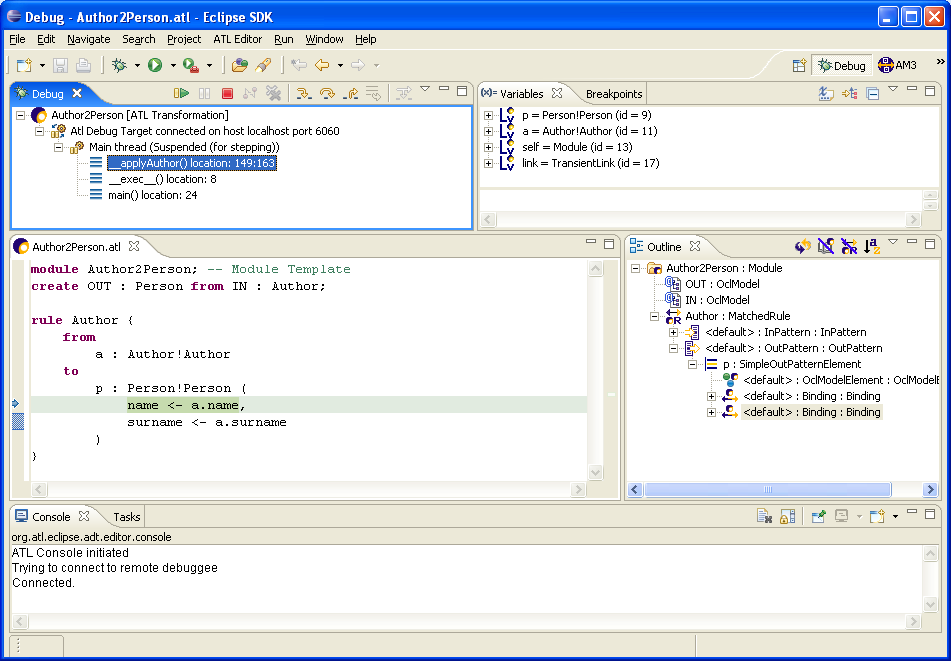

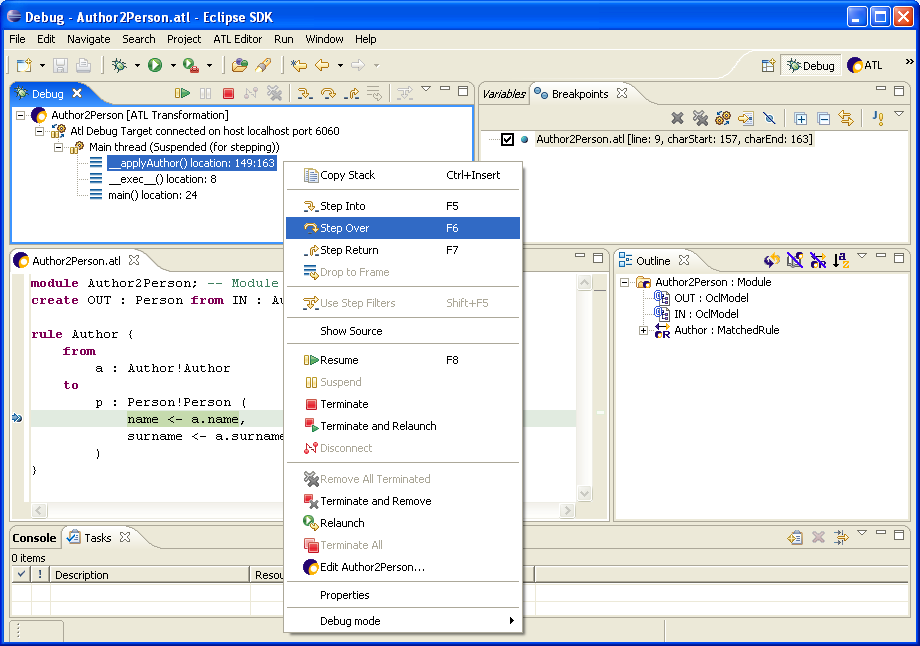

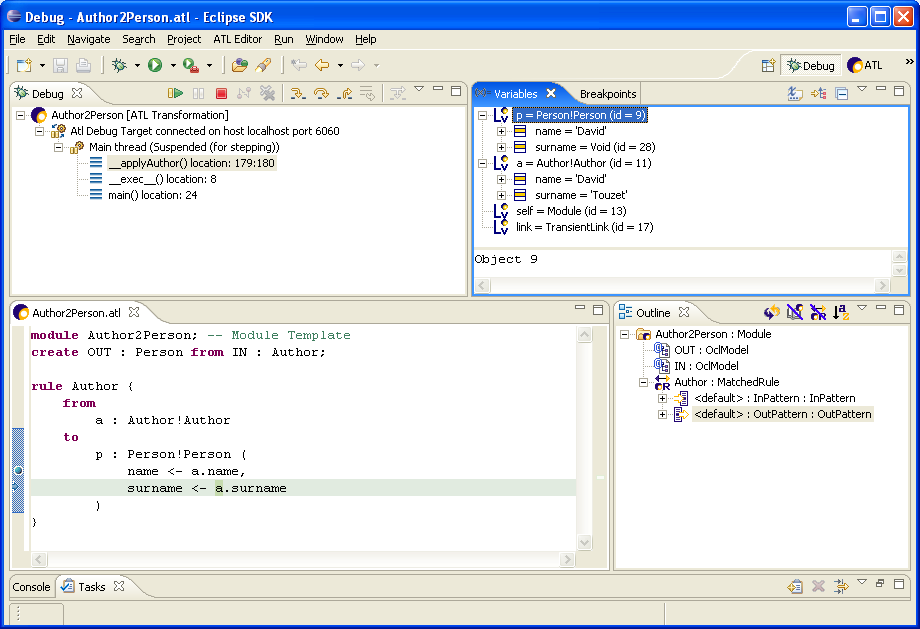

- 5.3 Debugging ATL

Introduction

ATL, the Atlas Transformation Language, is the ATLAS INRIA & LINA research group's answer to the OMG MOF/QVT RFP. It is a model transformation language specified as both a metamodel and a textual concrete syntax. In the field of Model-Driven Engineering (MDE), ATL provides developers with a means to specify how to produce a number of target models from a set of source models.

The ATL language is a hybrid of declarative and imperative programming. The preferred style of transformation writing is the declarative one: it makes it possible to express mappings between source and target model elements simply. However, ATL also provides imperative constructs in order to ease the specification of mappings that can hardly be expressed declaratively.

An ATL transformation program is composed of rules that define how source model elements are matched and navigated to create and initialize the elements of the target models. Besides basic model transformations, ATL defines an additional model querying facility that makes it possible to specify requests on models. ATL also allows code factorization through the definition of ATL libraries.

Developed on top of the Eclipse platform, the ATL Integrated Development Environment (IDE) provides a number of standard development tools (syntax highlighting, debugger, etc.) that aim to ease the design of ATL transformations. The ATL development environment also offers a number of additional facilities dedicated to the handling of models and metamodels. These features include a simple textual notation dedicated to the specification of metamodels, as well as a number of standard bridges between common textual syntaxes and their corresponding model representations.

Installation

Prerequisites

Java

- Download and install a JVM (JRE or JDK), if one is not already installed.

- for ATL 2.0 or older, JDK 1.4 will work.

- for ATL 3.0+, you need JDK 5.0 or later

Eclipse

- Download and install Eclipse.

EMF

- Install EMF.

Install ATL

There are two ways to install ATL:

- via update site

- via zip: see ATL Downloads. Once your zip is downloaded, just unpack it into the following location:

- with Eclipse 3.3, 3.2: ~/eclipse/features/ and ~/eclipse/plugins/ folders

- with Eclipse 3.4+: ~/eclipse/dropins/ folder

Finally, restart Eclipse.

The ATL SDK build makes it possible to:

- consult documentation offline

- access ATL examples

- access the source code of installed ATL plugins

To install ATL from CVS, please refer to the Developer Guide.

Overview of the Atlas Transformation Language

The ATL language lets ATL developers design different kinds of ATL units. An ATL unit, whatever its type, is defined in its own distinct ATL file. ATL files are characterized by the .atl extension.

As an answer to the OMG MOF/QVT RFP, ATL mainly focuses on model to model transformations. Such model operations can be specified by means of ATL modules. Besides modules, the ATL transformation language also enables developers to create model to primitive data type programs. These units are called ATL queries. The aim of a query is to compute a primitive value, such as a string or an integer, from source models. Finally, the ATL language also offers the possibility to develop independent ATL libraries that can be imported from the different types of ATL units, including libraries themselves. This provides a convenient way to factorize ATL code that is used in multiple ATL units. Note that the three ATL unit kinds share the same .atl extension.

These different ATL units are detailed in the following subsections. This section explains what each kind of unit should be used for, and provides an overview of the content of these different units.

Example metamodels

This section provides two simple metamodels which will be used throughout this guide to demonstrate ATL syntax and use.

Author metamodel

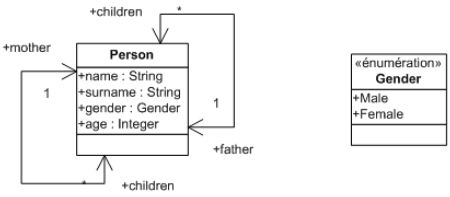

Person metamodel

Biblio metamodel

ATL module

An ATL module corresponds to a model to model transformation. This kind of ATL unit enables ATL developers to specify the way to produce a set of target models from a set of source models. Both source and target models of an ATL module must be "typed" by their respective metamodels. Moreover, an ATL module accepts a fixed number of models as input, and returns a fixed number of target models. As a consequence, an ATL module cannot generate an unknown number of similar target models (e.g. models that conform to the same metamodel).

Structure of an ATL module

An ATL module defines a model to model transformation. It is composed of the following elements:

- A header section that defines some attributes that are relative to the transformation module;

- An optional import section that makes it possible to import some existing ATL libraries;

- A set of helpers that can be viewed as an ATL equivalent to Java methods;

- A set of rules that defines the way target models are generated from source ones.

Helpers and rules do not belong to specific sections in an ATL transformation. They may be declared in any order with respect to certain conditions (see ATL Helpers section for further details). These four distinct element types are now detailed in the following subsections.

Header section

The header section defines the name of the transformation module and the name of the variables corresponding to the source and target models. It also encodes the execution mode of the module. The syntax for the header section is defined as follows:

module module_name;
create output_models [from|refining] input_models;

The keyword module introduces the name of the module. Note that the name of the ATL file containing the code of the module has to correspond to the name of this module. For instance, a ModelA2ModelB transformation module has to be defined in the ModelA2ModelB.atl file. The target models declaration is introduced by the create keyword, whereas the source models are introduced either by the keyword from (in normal mode) or refining (in case of a refining transformation). The declaration of a model, either a source or a target one, must conform to the scheme model_name : metamodel_name. It is possible to declare more than one input or output model by simply separating the declared models with a comma. Note that the names of the declared models will be used to identify them. As a consequence, each declared model name has to be unique within the set of declared models (both input and output ones).

The following ATL source code represents the header of the Book2Publication.atl file, i.e. the ATL header for the transformation from the Book metamodel to the Publication metamodel:

module Book2Publication;
create OUT : Publication from IN : Book;
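
As a further sketch of the header scheme, a module with several models separates the declarations with commas. The module name Authors2Persons and the model names IN1, IN2, OUT and ARCHIVE are illustrative assumptions; only the metamodel names come from the example metamodels of this guide:

```atl
-- Hypothetical header: two source models and two target models.
-- Every model name (IN1, IN2, OUT, ARCHIVE) is unique within the header.
module Authors2Persons;
create OUT : MMPerson, ARCHIVE : MMPerson from IN1 : MMAuthor, IN2 : MMAuthor;
```

Following the file naming constraint above, such a module would be stored in a file named Authors2Persons.atl.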

Import section

The optional import section makes it possible to declare which ATL libraries have to be imported. The declaration of an ATL library is achieved as follows:

uses extensionless_library_file_name;

For instance, to import the strings library, one would write:

uses strings;

Note that it is possible to declare several distinct libraries by using several successive uses instructions.

Helpers

ATL helpers can be viewed as the ATL equivalent to Java methods. They make it possible to define factorized ATL code that can be called from different points of an ATL transformation. An ATL helper is defined by the following elements:

- a name (which corresponds to the name of the method);

- a context type. The context type defines the context in which this helper is defined (in the same way a method is defined in the context of a given class in object-oriented programming);

- a return value type. Note that, in ATL, each helper must have a return value;

- an ATL expression that represents the code of the ATL helper;

- an optional set of parameters, in which each parameter is identified by a pair (parameter name, parameter type).

As an example, it is possible to consider a helper that returns the maximum of two integer values: the contextual integer and an additional integer value which is passed as parameter. The declaration of such a helper will look like (detail of the helper code is not interesting at this stage, please refer to ATL Helpers section for further details):

helper context Integer def : max(x : Integer) : Integer = ...;

It is also possible to declare a helper that accepts no parameter. This is, for instance, the case for a helper that just multiplies an integer value by two:

helper context Integer def : double() : Integer = self * 2;

In some cases, it may be useful to declare an ATL helper without any particular context. Strictly speaking, this is not possible in ATL since each helper must be associated with a given context. However, the ATL language allows ATL developers to declare helpers within a default context (which corresponds to the ATL module). This is achieved by simply omitting the context part of the helper definition. It is possible, by this means, to provide a new version of the max helper defined above:

helper def : max(x1 : Integer, x2 : Integer) : Integer = ...;
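
The bodies of the two max helpers are deliberately elided in this overview. As a minimal sketch of what they could look like (one possible implementation, not necessarily the one intended by the authors), both can be written with an OCL if-then-else expression. The helper clampLow and the attribute largest are hypothetical and only illustrate the two calling styles:

```atl
-- Contextual version: compares the contextual integer (self) with x.
helper context Integer def : max(x : Integer) : Integer =
    if self > x then self else x endif;

-- Module-context version: compares its two parameters.
helper def : max(x1 : Integer, x2 : Integer) : Integer =
    if x1 > x2 then x1 else x2 endif;

-- Hypothetical caller of the contextual version (dot notation on a value).
helper context Integer def : clampLow(floor : Integer) : Integer =
    self.max(floor);

-- Hypothetical caller of the module-context version (through thisModule).
helper def : largest : Integer = thisModule.max(3, 5);
```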

Note that several helpers may have the same name in a single transformation. However, helpers with the same name must have distinct signatures to be distinguishable by the ATL engine (see ATL Helpers section for further details). The ATL language also makes it possible to define attributes. An attribute helper is a specific kind of helper that accepts no parameters, and that is defined either in the context of the ATL module or of a model element. In the remainder of the present document, the term attribute will be specifically used to refer to attribute helpers, whereas the generic term of helper will refer to a functional helper. Thus, the attribute version of the double helper defined above will be declared as follows:

helper context Integer def : double : Integer = self * 2;

Declaring a functional helper with no parameter or an attribute may appear to be equivalent. They are indeed equivalent from a functional point of view. However, there exists a significant difference between these two approaches when considering the execution semantics. Whereas the result of a functional helper is calculated each time the helper is called, the return value of an ATL attribute is computed only once, when the value is required for the first time. As a consequence, declaring an ATL attribute is more efficient than defining an ATL helper that will be executed as many times as it is called.

Note that the ATL attributes that are defined in the context of the ATL module are initialized (during the initialization phase) in the order they have been declared in the ATL file. This implies that the order of declaration of this kind of attribute matters: an attribute defined in the context of the ATL module has to be declared after the other ATL module attributes it depends on for its initialization. A wrong order in the declaration of the ATL module attributes will raise an error during the initialization phase of the ATL program execution.
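
The ordering constraint on module attributes can be illustrated with a small sketch. The attribute names separator and columnHeader are hypothetical; the point is only that the attribute being used is declared before the attribute that depends on it:

```atl
-- Declared first: it does not depend on any other module attribute.
helper def : separator : String = ', ';

-- Declared second: its initialization reads thisModule.separator,
-- so it has to appear after the declaration of separator.
helper def : columnHeader : String =
    'name' + thisModule.separator + 'surname';
```

Swapping the two declarations would, according to the rule stated above, lead to an error during the initialization phase.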

Rules

In ATL, there exist three different kinds of rules that correspond to the two different programming modes provided by ATL (i.e. declarative and imperative programming): the matched rules (declarative programming), the lazy rules, and the called rules (imperative programming).

Matched rules. The matched rules constitute the core of an ATL declarative transformation since they make it possible to specify:

1) for which kinds of source elements target elements must be generated,

2) the way the generated target elements have to be initialized.

A matched rule is identified by its name. It matches a given type of source model element, and generates one or more kinds of target model elements. The rule specifies the way generated target model elements must be initialized from each matched source model element. A matched rule is introduced by the keyword rule. It is composed of two mandatory sections (the source and the target patterns) and two optional sections (the local variables and the imperative sections). When defined, the local variables section is introduced by the keyword using. It makes it possible to declare and initialize a number of local variables (that will only be visible in the scope of the current rule).

The source pattern of a matched rule is defined after the keyword from. It makes it possible to specify a model element variable that corresponds to the type of source elements the rule has to match. This type corresponds to an entity of a source metamodel of the transformation. This means that the rule will generate target elements for each source model element that conforms to this matching type.

In many cases, the developer will be interested in matching only a subset of the source elements that conform to the matching type. This is simply achieved by specifying an optional condition (expressed as an ATL expression, see OCL Declarative Expressions section for further details) within the rule source pattern. By this means, the rule will only generate target elements for the source model elements that both conform to the matching type and verify the specified condition.

The target pattern of a matched rule is introduced by the keyword to. It aims to specify the elements to be generated when the source pattern of the rule is matched, and how these generated elements are initialized. Thus, the target pattern of a matched rule specifies a distinct target pattern element for each target model element the rule has to generate when its source pattern is matched. A target pattern element corresponds to a model element variable declaration associated with its corresponding set of initialization bindings. This model element variable declaration has to correspond to an entity of the target metamodels of the transformation.

Finally, the optional imperative section, introduced by the keyword do, makes it possible to specify some imperative code that will be executed after the initialization of the target elements generated by the rule. As an example, consider the following simple ATL matched rule between two metamodels, MMAuthor and MMPerson:

rule Author {
    from
        a : MMAuthor!Author
    to
        p : MMPerson!Person (
            name <- a.name,
            surname <- a.surname
        )
}

This rule, called Author, aims to transform Author source model elements (from the MMAuthor source model) to Person target model elements in the MMPerson target model. This rule only contains the mandatory source and target patterns. The source pattern defines no filter, which means that all Author classes of the source MMAuthor model will be matched by the rule. The rule target pattern contains a single simple target pattern element (called p). This target pattern element aims to allocate a Person class of the MMPerson target model for each source model element matched by the source pattern. The features of the generated model element are initialized with the corresponding features of the matched source model element. Note that a source model element of an ATL transformation should not be matched by more than one ATL matched rule. This means that the source patterns of matched rules must be designed carefully in order to respect this constraint. Moreover, an ATL matched rule cannot generate ATL primitive type values.
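
As a sketch of the optional sections of a matched rule, the following variant adds a filter to the source pattern, a local variables section and an imperative section. The rule name AuthorWithSurname and the local variable fullName are illustrative assumptions. The rule is meant as an alternative to the unfiltered Author rule, not as an addition to the same module, since a source element should not be matched by more than one matched rule:

```atl
rule AuthorWithSurname {
    from
        -- Filter: only authors whose surname is defined are matched.
        a : MMAuthor!Author (not a.surname.oclIsUndefined())
    using {
        -- Local variable, visible only within this rule.
        fullName : String = a.name + ' ' + a.surname;
    }
    to
        p : MMPerson!Person (
            surname <- a.surname
        )
    do {
        -- Imperative section: runs after the bindings of p.
        p.name <- fullName;
    }
}
```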

Lazy rules.

TODO: write lazy rules documentation overview

Called rules. The called rules provide ATL developers with convenient imperative programming facilities. Called rules can be seen as a particular type of helpers: they have to be explicitly called to be executed and they can accept parameters. However, as opposed to helpers, called rules can generate target model elements as matched rules do. A called rule has to be called from an imperative code section, either from a matched rule or another called rule.

Like a matched rule, a called rule is introduced by the keyword rule. Like matched rules, called rules may include an optional local variables section. However, since it does not have to match source model elements, a called rule does not include a source pattern. Moreover, its target pattern, which makes it possible to generate target model elements, is also optional. Note that, since the called rule does not match any source model element, the initialization of the target model elements that are generated by the target pattern has to be based on a combination of local variables, parameters and module attributes. The target pattern of a called rule is defined in the same way as the target pattern of a matched rule. It is also introduced by the keyword to. A called rule can also have an imperative section, which is similar to the ones that can be defined within matched rules. Note that this imperative code section is not mandatory: it is possible to specify a called rule that only contains either a target pattern section or an imperative code section. In order to illustrate the called rule structure, consider the following simple example:

rule NewPerson (na: String, s_na: String) {
    to
        p : MMPerson!Person (
            name <- na
        )
    do {
        p.surname <- s_na
    }
}

This called rule, named NewPerson, aims to generate Person target model elements. The rule accepts two parameters that correspond to the name and the surname of the Person model element that will be created by the rule execution. The rule has both a target pattern (with a single element called p) and an imperative code section. The target pattern allocates a Person class each time the rule is called, and initializes the name attribute of the allocated model element. The imperative code section is executed after the initialization of the allocated element (see Default mode execution semantics section for further details on execution semantics). In this example, the imperative code sets the surname attribute of the generated Person model element to the value of the parameter s_na.
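
Since a called rule only runs when it is explicitly invoked, a sketch of a possible call site may help. The matched rule below is hypothetical (its name AuthorWithCopy and the ' (copy)' suffix are assumptions); it invokes NewPerson through thisModule from its imperative section:

```atl
rule AuthorWithCopy {
    from
        a : MMAuthor!Author
    to
        p : MMPerson!Person (
            name <- a.name,
            surname <- a.surname
        )
    do {
        -- Explicit call: creates one additional Person per matched Author.
        thisModule.NewPerson(a.name + ' (copy)', a.surname);
    }
}
```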

Module execution modes

The ATL execution engine defines two different execution modes for ATL modules. With the default execution mode, the ATL developer has to explicitly specify the way target model elements must be generated from source model elements. In this scope, the design of a transformation which aims to copy its source model with only a few modifications may prove to be very tiresome. Designing this transformation in default execution mode therefore requires the developer to specify the rules that will generate the modified model elements, but also all the rules that will only copy, without any modification, source to target model elements. The refining execution mode has been designed for this kind of situation: it enables ATL developers to only specify the modifications that have to be performed between the transformation source and target models. These two execution modes are described in the following subsections.

Normal execution mode

The normal execution mode is the ATL module default execution mode. It is associated with the keyword from in the module header. In default execution mode, the ATL developer has to specify, either by matched or called rules, the way to generate each of the expected target model elements. This execution mode suits most ATL transformations where target models differ from the source ones.

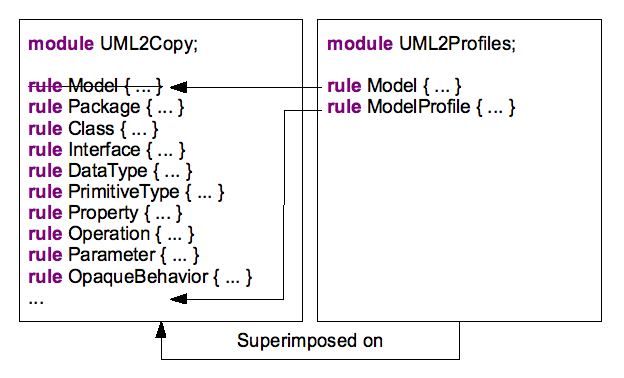

Refining execution mode

The refining execution mode has been introduced to ease the programming of refining transformations between similar source and target models. With the refining mode, ATL developers can focus on the ATL code dedicated to the generation of modified target elements. Other model elements (i.e. those that remain unchanged between the source and the target model) are implicitly copied from the source to the target model by the ATL engine. The refining mode is associated with the keyword refining in the header of the ATL module. Granularity of the refining mode is defined at the model element level. This means that the developer will have to specify how to generate a model element as soon as the transformation modifies one of its features (either an attribute or a reference). On the other hand, the developer is not required to specify the ATL code that corresponds to the copy of unchanged model elements. This feature may result in significant savings of ATL code, which, in the end, makes the programming of refining ATL transformations simpler and easier. Currently, the refining mode can only be used to transform a single source model into a single target model. Both source and target models must conform to the same metamodel.
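
A refining module could be sketched as follows, under the description given above. The module name AuthorCleanup and the rule name UpperCaseName are hypothetical, and the sketch assumes that Author only has the name and surname features used elsewhere in this guide. Because granularity is at the model element level, the rule specifies the whole Author element, including the unchanged surname:

```atl
-- Hypothetical refining module: source and target share the same metamodel.
module AuthorCleanup;
create OUT : MMAuthor refining IN : MMAuthor;

rule UpperCaseName {
    from
        a : MMAuthor!Author
    to
        a2 : MMAuthor!Author (
            name <- a.name.toUpper(),   -- the modified feature
            surname <- a.surname        -- unchanged, but still specified
        )
}
```

Elements not matched by any rule are expected to be copied implicitly by the engine; the exact behaviour depends on the refining mode semantics of the ATL version in use.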

Module execution semantics

This section introduces the basics of the ATL execution semantics. Although designing ATL transformations does not require any particular knowledge of the ATL execution semantics, understanding the way an ATL transformation is processed by the ATL engine can prove to be helpful in certain cases (in particular, when debugging a transformation).

The semantics of the two available ATL execution modes, the normal and the refining modes, are introduced in the following subsections.

Default mode execution semantics

The execution of an ATL module is organized into three successive phases:

- a module initialization phase,

- a matching phase of the source model elements,

- a target model elements initialization phase.

The module initialization step corresponds to the first phase of the execution of an ATL module. In this phase, the attributes defined in the context of the transformation module are initialized. Note that the initialization of these module attributes may make use of attributes that are defined in the context of source model elements. This implies that these attributes are also initialized during the module initialization phase. If an entry point called rule has been defined in the scope of the ATL module, the code of this rule (including target model elements generation) is executed after the initialization of the ATL module attributes.
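
A minimal sketch of an entry point called rule, assuming the entrypoint keyword and using hypothetical names (Init, defaultName). It relies on a module attribute, which is initialized before the rule is executed:

```atl
-- Module attribute: initialized first, during the initialization phase.
helper def : defaultName : String = 'Unknown';

-- Entry point called rule: executed once, after the module attributes
-- have been initialized and before the matching phase.
entrypoint rule Init() {
    to
        p : MMPerson!Person (
            name <- thisModule.defaultName
        )
    do {
        p.surname <- thisModule.defaultName;
    }
}
```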

During the source model elements matching phase, the matching conditions of the declared matched rules are tested against the model elements of the module source models. When the matching condition of a matched rule is fulfilled, the ATL engine allocates the set of target model elements that correspond to the target pattern elements declared in the rule. Note that, at this stage, the target model elements are simply allocated: they are initialized during the target model elements initialization phase.

The last phase of the execution of an ATL module corresponds to the initialization of the target model elements that have been generated during the previous step. At this stage, each allocated target model element is initialized by executing the code of the bindings that are associated with the target pattern element the element comes from. Note that this phase allows invocations of the resolveTemp() operation that is defined in the context of the ATL module. The imperative code section that can be specified in the scope of a matched rule is executed once the rule initialization step has completed. This imperative code can trigger the execution of some of the called rules that have been defined in the scope of the ATL module.

Refining mode execution semantics

TODO: update with the current refining mode execution semantics

ATL Query

An ATL query is a model to primitive type value transformation. An ATL query can be viewed as an operation that computes a primitive value from a set of source models. The most common use of ATL queries is the generation of a textual output (encoded into a string value) from a set of source models. However, ATL queries are not limited to the computation of string values and can also return a numerical or a boolean value.

The following subsections respectively describe the structure and the execution semantics of an ATL query.

Structure of an ATL query

After an optional import section, an ATL query must define a query instantiation. A query instantiation is introduced by the keyword query and specifies the way its result must be computed by means of an ATL expression:

query query_name = exp;

Beside the query instantiation, an ATL query may include a number of helper or attribute definitions. Note that, although an ATL query is not strictly a module, it defines its own kind of default module context. It is therefore possible, for ATL developers, to declare helpers and attributes defined in the context of the module in the scope of an ATL query.
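
As an illustration of this structure, here is a sketch of a query that returns a string. The query name AuthorReport and the attribute title are hypothetical; the attribute is declared in the default module context of the query, as described above:

```atl
-- Computes a short textual report from the source model.
query AuthorReport =
    thisModule.title + ': ' +
    (MMAuthor!Author.allInstances()->size()).toString();

-- Attribute defined in the default module context of the query.
helper def : title : String = 'Number of authors';
```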

Query execution semantics

As with an ATL module, the execution of an ATL query is organized in several successive phases. The first phase is the initialization phase. It corresponds to the initialization phase of the ATL modules and is dedicated to the initialization of the attributes that are defined in the context of the ATL module.

The second phase of the execution of an ATL query is the computation phase. During this phase, the return value of the query is calculated by executing the declarative code of the query element of the ATL query. Note that the helpers that have been defined within the query file can be called at both the initialization and the computation phases.

ATL Library

The last type of ATL unit is the ATL library. Developing an ATL library makes it possible to define a set of ATL helpers that can be called from different ATL units (modules, but also queries and libraries).

Like the other kinds of ATL units, an ATL library can include an optional import section. Besides this import section, an ATL library defines a number of ATL helpers that will be made available in the ATL units that will import the library.

Compared to an ATL module, there exists no default module element for ATL libraries. As a consequence, it is impossible, in libraries, to declare helpers that are defined in the default context of the module. This means that all the helpers defined within an ATL library must be explicitly associated with a given context.

Compared to both modules and queries, an ATL library cannot be executed independently. This currently means that a library is not associated with any initialization step at execution time (as described in Module execution semantics). Due to this lack of initialization step, attribute helpers cannot be defined within an ATL library.
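
A library respecting these constraints could look like the following sketch. The library name NameUtils and its helpers are hypothetical. Every helper has an explicit context and takes the functional form (with parentheses), since neither default-context helpers nor attribute helpers are available in libraries:

```atl
-- File NameUtils.atl (hypothetical library)
library NameUtils;

-- Functional helper with an explicit context (String).
helper context String def : quoted() : String =
    '"' + self + '"';

-- Functional helper with a parameter, also in an explicit context.
helper context String def : withPrefix(prefix : String) : String =
    prefix + self;
```

A module or query would then import it with uses NameUtils; and call, for instance, a.name.quoted().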

The ATL Language

This section is dedicated to the description of the ATL language. As introduced in Overview of the Atlas Transformation Language, the language makes it possible to define three kinds of ATL units: the ATL transformation modules, the ATL queries and the ATL libraries. According to their type, these different kinds of units may be composed of a combination of ATL helpers, attributes, matched and called rules. This section aims to detail the syntax of these different ATL elements. For this purpose, the ATL language is based on the OMG OCL (Object Constraint Language) standard for both its data types and its declarative expressions. There exist a few differences between the OCL definition and the current ATL implementation. They will be pointed out in this section by specific remarks.

Data types

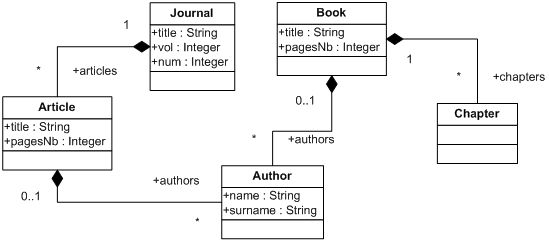

The ATL data type scheme is very close, but not identical, to the one defined by OCL. The following schema provides an overview of the data type structure considered in ATL. The different data types presented in this schema represent the possible instances of the OclType class.

The root element of the OclType instances structure is the abstract OclAny type, from which all other considered types directly or indirectly inherit. ATL considers six main kinds of data types: the primitive data types, the collection data types, the tuple type, the map type, the enumeration type and the model element type. Note that the map data type is implemented by ATL as an additional facility, but does not appear in the OCL specification.

The class OclType can be considered as the definition of a type in the scope of the ATL language. The different elements appearing in the schema represent the type instances that are defined by OCL (except the map and the ATL module data types), and implemented within the ATL engine.

The OCL primitive data types correspond to the basic data types of the language (the string, boolean and numerical types). The set of collection types introduced by OCL provides ATL developers with different semantics for the handling of collections of elements. Additional data types include the enumerations, a tuple data type, a map data type and the model element data type. The latter corresponds to the type of the entities that may be declared within the models handled by the ATL engine. Finally, the ATL module data type, which is specific to the ATL language, is associated with the running ATL units (either modules or queries).

Before going further in the description of these data types, it must be noted that each OCL expression, including the operations associated with each kind of data type (that are presented along with their respective data type), is defined in the context of an instance of a specific type. In ATL as in OCL, the reserved keyword self is used to refer to this contextual instance.

OclType operations

The class OclType corresponds to the definition of the type instances specified by OCL. It is associated with a specific OCL operation: allInstances(). This operation, which accepts no parameter, returns a set containing all the currently existing instances of the type self.

The ATL implementation provides an additional operation that makes it possible to get all the instances of a given type that belong to a given model. Thus, the allInstancesFrom(metamodel : String) operation returns a set containing the instances of type self that are defined within the model whose name is given by the metamodel parameter.
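
Both operations can be sketched with two module attributes. The attribute names are hypothetical, and 'IN' is assumed to be the name given to a source model in the module header:

```atl
-- All Author instances currently known to the engine.
helper def : allAuthors : Set(MMAuthor!Author) =
    MMAuthor!Author.allInstances();

-- Only the Author instances contained in the model named IN.
helper def : authorsOfIN : Set(MMAuthor!Author) =
    MMAuthor!Author.allInstancesFrom('IN');
```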

OclAny operations

This section describes a set of operations that are common to all existing data types. The syntax used to call an operation from a variable in ATL follows the classical dot notation:

self.operation_name(parameters)

ATL currently provides support for the following OCL-defined operations:

- comparison operators: =, <>;

- oclIsUndefined() returns a boolean value stating whether self is undefined;

- oclIsKindOf(t : oclType) returns a boolean value stating whether self is either an instance of t or of one of its subtypes;

- oclIsTypeOf(t : oclType) returns a boolean value stating whether self is an instance of t.

The operations oclIsNew() and oclAsType() defined by OCL are currently not supported by the ATL engine. ATL however implements a number of additional operations:

- toString() returns a string representation of self. Note that the operation may return irrelevant string values for a few remaining types;

- oclType() returns the oclType of self;

- asSequence(), asSet(), asBag() respectively return a sequence, a set or a bag containing self. These operations are redefined for the collection types;

- output(s : String) writes the string s to the Eclipse console. Since the operation has no return value, it shall only be used in ATL imperative blocks;

- debug(s : String) returns the self value and writes the "s : self_value" string to the Eclipse console;

- refSetValue(name : String, val : oclAny) is a reflective operation that sets the self feature identified by name to the value val. It returns self;

- refGetValue(name : String) is a reflective operation that returns the value of the self feature identified by name;

- refImmediateComposite() is a reflective operation that returns the immediate composite (i.e. the immediate container) of self;

- refInvokeOperation(opName : String, args : Sequence) is a reflective operation that invokes the self operation named opName with the sequence of parameters contained in args.
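The difference between oclIsKindOf() and oclIsTypeOf() can be illustrated with a rough Python analogy. This is only a sketch in Python, not ATL code: the helper names ocl_is_kind_of and ocl_is_type_of are hypothetical, and Python's object type merely stands in for OclAny as the common supertype.

```python
# Python analogy only; the helper names are hypothetical, not part of ATL.

def ocl_is_kind_of(value, t):
    """True if value is an instance of t or of one of its subtypes."""
    return isinstance(value, t)


def ocl_is_type_of(value, t):
    """True only if t is the exact type of value."""
    return type(value) is t


# 'object' plays the role of OclAny, the common supertype.
print(ocl_is_kind_of("test", object))  # True
print(ocl_is_type_of("test", object))  # False
print(ocl_is_type_of("test", str))     # True
```

The first two calls mirror the 'test'.oclIsKindOf(OclAny) and 'test'.oclIsTypeOf(OclAny) examples given later for the primitive data types.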

The ATL Module data type

The ATL Module data type is specific to the ATL language. This internal data type aims to represent the ATL unit (either a module or a query) that is currently run by the ATL engine. There exists a single instance of this data type, and developers can refer to it (in their ATL code) using the variable thisModule. The thisModule variable makes it possible to access the helpers and the attributes that have been declared in the context of the ATL module.

The ATL Module data type also provides the resolveTemp operation. This specific operation makes it possible to point, from an ATL rule, to any of the target model elements (including non-default ones) that will be generated from a given source model element by an ATL matched rule.

The operation resolveTemp has the following declaration:

resolveTemp(var, target_pattern_name)

The parameter var corresponds to an ATL variable that contains the source model element from which the searched target model element is produced. The parameter target_pattern_name is a string value that encodes the name of the target pattern element that maps the provided source model element (contained by var) into the searched target model element.

Note that, as it is defined in the scope of the ATL module, this operation must be called from the variable thisModule. The resolveTemp operation must not be called before the completion of the matching phase. This means that the operation can be called from:

- the target pattern and do sections of any matched rule;

- the target pattern and do sections of a called rule, provided that this called rule is executed after the matching phase (i.e. is not called from a transformation entrypoint).

ATL developers may note that the operation call does not specify the matched rule that generates the target model element. However, as explained in the Rules section, a source model element should not be matched by more than one matched rule. As a consequence, the concerned matched rule can be derived from the specified source model element.
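One way to picture why the matched rule does not need to be named is to imagine a lookup table filled during the matching phase. The Python sketch below is a conceptual model only and makes no claim about how the ATL engine is actually implemented: the traces table, record_match and resolve_temp are hypothetical names, and the element values are plain strings chosen for illustration.

```python
# Conceptual model only: this is NOT the ATL engine's implementation.
# Hypothetical trace table filled during the matching phase:
#   source element -> {target pattern element name -> target element}
traces = {}


def record_match(source, targets):
    """Register the target elements that a matched rule creates for source."""
    if source in traces:
        # A source element should be matched by at most one matched rule.
        raise ValueError(f"{source!r} is matched by more than one rule")
    traces[source] = dict(targets)


def resolve_temp(source, target_pattern_name):
    """Return the target element created for source under the given name."""
    return traces[source][target_pattern_name]


# Matching phase: one rule matched 'author1' and declares two target
# pattern elements, 'p' (the default one) and 'addr' (a non-default one).
record_match("author1", {"p": "Person#1", "addr": "Address#1"})

# Once matching is complete, either element can be looked up by name.
print(resolve_temp("author1", "addr"))  # Address#1
```

Because each source element appears at most once in the table, the pair (source element, target pattern element name) is enough to identify the target element.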

Primitive data types

OCL defines four basic primitive data types:

- the Boolean data type, for which possible values are true or false;

- the Integer data type which is associated with the integer numerical values (1, -5, 2, 34, 26524, ...);

- the Real data type which is associated with the floating numerical values (1.5, 3.14, ...);

- the String data type ('To be or not to be', ...). A string literal is delimited by single quotes ('). The escape character '\' makes it possible to include ' characters within string values. Note that, in OCL:

- a character is encoded as a one-character string;

- the characters composing a string are numbered from 1 to the size of the string.

According to the considered data type (string, numerical values and boolean values), OCL defines a number of specific operations. They are detailed in the following sections along with some additional functions provided by the ATL engine.

Boolean data type operations

The set of OCL operations defined for the boolean data type is the following:

- logical operators: and, or, xor, not;

- implies(b : Boolean) returns false if self is true and b is false, and returns true otherwise.

Boolean expressions evaluation

Consider the following expression:

if (exp1 and exp2) then ... else ... endif

exp2 will always be evaluated, regardless of the result of the first expression. ATL evaluates it like this:

if (exp1.and(exp2)) then ... else ... endif

Keep this in mind when writing a condition such as:

if (self.attributes->size() > 0

and self.attributes->first().attr)

Even when the first operand is false, the attr property may still be accessed on an undefined element, which causes an error.
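The evaluation order described above can be modelled in Python. This is a sketch under stated assumptions, not ATL code: eager_and, first_attr_eager and first_attr_guarded are hypothetical names, a Python list stands in for the attributes collection, and Python raises IndexError where ATL would fail on an undefined element.

```python
# Python model of the evaluation order; all names are hypothetical.

def eager_and(left, right):
    """Both arguments are already evaluated when the call happens,
    which mirrors exp1.and(exp2)."""
    return left and right


def first_attr_eager(attributes):
    # attributes[0] is evaluated even when the list is empty.
    return eager_and(len(attributes) > 0, attributes[0]["attr"])


def first_attr_guarded(attributes):
    # Conditional expression: the element is accessed only in the chosen branch.
    return attributes[0]["attr"] if len(attributes) > 0 else False


try:
    first_attr_eager([])
except IndexError:
    print("eager evaluation failed on an empty collection")

print(first_attr_guarded([]))                # False
print(first_attr_guarded([{"attr": True}]))  # True
```

A commonly used way to obtain the guarded behaviour in ATL is to nest a second "if" expression inside the "then" branch of the first one, assuming that only the selected branch of an "if" expression is evaluated.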

String data type operations

OCL defines the following operations for the string data type:

- size() returns the number of characters contained by the string self;

- concat(s : String) returns a string in which the specified string s is concatenated to the end of self;

- substring(lower : Integer, upper : Integer) returns the substring of self starting from character lower to character upper;

- toInteger() and toReal() respectively convert self into an integer or a real value.
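Since characters are numbered from 1 and both bounds of substring() are included, the indexing differs from that of most programming languages. The Python sketch below models this convention; ocl_substring and ocl_concat are hypothetical helper names, not ATL operations.

```python
# Python model of 1-based, inclusive string indexing; names are hypothetical.

def ocl_substring(s, lower, upper):
    """Characters lower..upper of s, counted from 1, both bounds included."""
    return s[lower - 1:upper]


def ocl_concat(s, other):
    """Return s with other appended at the end."""
    return s + other


print(ocl_substring("test", 2, 3))   # es
print(ocl_substring("test", 1, 4))   # test
print(ocl_concat("ATL ", "Manual"))  # ATL Manual
print(len("test"))                   # 4, the value size() would return
```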

Besides the OCL-defined operations, ATL implements a number of additional operations for the string data type:

- comparison operators: <, >, >=, <=;

- the string concatenation operator (+) can be used as a shortcut for the string concat() function;

- toUpper(), toLower() respectively return an upper/lower case copy of self;

- toSequence() returns the sequence of characters (i.e. of one-character strings) corresponding to self;

- trim() returns a copy of self with leading and trailing white spaces (' ', '\t', '\n', '\f', '\r') omitted;

- startsWith(s : String), endsWith(s : String) return a boolean value respectively stating whether self starts/ends with s;

- indexOf(s : String), lastIndexOf(s : String) respectively return the index (an integer value) within self of the first/last occurrence of the specified substring s;

- split(regex : String) splits the self string around matches of the regular expression regex. The regular expression must follow the Java regular expression syntax. The result is returned as a sequence of strings;

- replaceAll(c1 : String, c2 : String) returns a copy of self in which each occurrence of the character c1 is replaced with the character c2. Note that both c1 and c2 are specified as OCL strings. However, the function only considers the first character of each of the provided strings;

- regexReplaceAll(regex : String, replacement : String) returns a copy of self in which each substring that matches the regular expression regex is replaced with the given replacement. The regular expression must follow the Java regular expression syntax.

As a last point, ATL currently defines two additional functions that make it possible to write strings to outputs. These functions are useful for redirecting the result of ATL queries, but they may also be used for debugging purposes:

- writeTo(fileName : String) writes the self string into the file identified by the string fileName. Note that this string may encode either a full or a relative path to the file. In the latter case, the path is relative to the \eclipse directory from which the ATL toolkit is run. If the identified file already exists, the function overwrites its content;

- println() writes the self string onto the default output, that is the Eclipse console.

Note that these two functions are provided as temporary solutions, since the ATL toolkit still does not provide any integrated solution for redirecting the result of ATL queries. They are likely to be removed from future releases of the ATL toolkit.

Numerical data type operations

The following OCL operations are defined for both OCL numerical data types (integer and real):

- comparison operators: <, >, >=, <=;

- binary operators: *, +, -, /, div(), max(), min();

- unary operator: abs().

Note that the - unary operator defined by OCL (that returns the negative value of self) is not implemented in the current version of ATL. As a consequence, a negative numerical value -x has to be declared as the result of a call to the - binary operator: 0-x.

OCL also defines some operations that are specific to the integer and the real data types:

- integer operation: mod();

- real operations: floor(), round().

Besides the OCL-defined operations, ATL provides a set of additional functions. The toString() operation, available for both the integer and real data types, returns a string representing the integer/real value of self. There also exists a set of ATL operations specific to the real data type:

- cos(), sin(), tan(), acos(), asin();

- toDegrees(), toRadians();

- exp(), log(), sqrt().

Examples

In the following, some usage examples of OCL operations on primitive data types are illustrated:

- testing whether a string is of type OclAny:

'test'.oclIsTypeOf(OclAny)

evaluates to false

- testing whether a string is of kind OclAny:

'test'.oclIsKindOf(OclAny)

evaluates to true

- boolean operations:

true or false

evaluates to true

- computing a substring of a given string:

'test'.substring(2, 3)

evaluates to 'es'

- casting a string into upper case:

'test'.toUpper()

evaluates to 'TEST'

- casting a string into a sequence:

'test'.toSequence()

evaluates to Sequence{'t', 'e', 's', 't'}

- checking whether a string ends with a given substring:

'test'.endsWith('ast')

evaluates to false

- getting the last index of character "t" in string "test":

'test'.lastIndexOf('t')

evaluates to 4

- replacing character "t" by character "o" in string "test":

'test'.replaceAll('t', 'o')

evaluates to 'oeso'

- replacing occurrences of regular expression "a*" by string "A" in string "aaabaftaap":

'aaabaftaap'.regexReplaceAll('a*', 'A')

evaluates to 'AbAftAp'

- integer division:

23 div 2 or 23."div"(2)

evaluates to 11

- real division:

23/2

evaluates to 11.5

Collection data types

OCL defines a number of collection data types that provide developers with different ways to handle collections of elements. The provided collection types are Set, OrderedSet, Bag and Sequence. Collection is the common abstract superclass of these different types of collections.

The existing collection classes have the following characteristics:

- Set is a collection without duplicates. Set has no order;

- OrderedSet is a collection without duplicates. OrderedSet is ordered;

- Bag is a collection in which duplicates are allowed. Bag has no order;

- Sequence is a collection in which duplicates are allowed. Sequence is ordered.

A collection can be seen as a template data type. This means that the declaration of a collection data type has to include the type of the elements that will be contained by the type instances. Whatever the type of the contained elements, the declaration of a collection data type has to conform to the following scheme:

collection_type(element_datatype)

The supported collection data types are Set, OrderedSet, Sequence and Bag. The element data type can be any supported oclType, including another collection type.

The definition of a collection variable is achieved as follows:

collection_type{elements}

Please note that the parentheses used in the type definition must here be replaced by curly brackets. Examples of collection type definitions and instantiations can be found in the Examples at the end of this section.

Operations on collections

ATL provides a large number of operations in the context of the different supported collection types. Note that there exists a specific syntax for invoking an operation on a collection:

self->operation_name(parameters)

The different kinds of existing OCL collections share a number of common operations:

- size() returns the number of elements in the collection self;

- includes(o : oclAny) returns a boolean stating whether the object o is part of the collection self;

- excludes(o : oclAny) returns a boolean stating whether the object o is not part of the collection self;

- count(o : oclAny) returns the number of times the object o occurs in the collection self;

- includesAll(c : Collection) returns a boolean stating whether all the objects contained by the collection c are part of the self collection;

- excludesAll(c : Collection) returns a boolean stating whether none of the objects contained by the collection c are part of the self collection;

- isEmpty() returns a boolean stating whether the collection self is empty;

- notEmpty() returns a boolean stating whether the collection self is not empty;

- sum() returns a value that corresponds to the addition of all elements in self. These elements must be of a type that supports the + operation.

Note that the product() operation defined by OCL is unsupported by the current ATL implementation. However, ATL defines three additional operations in the context of a collection (OCL defines similar operations in the context of each collection type):

- asBag() returns a bag containing the elements of the self collection. Order is lost from a sequence or an ordered set. Has no effect in the context of a bag;

- asSequence() returns a sequence containing the elements of the self collection. Introduces an order from a bag or a set. Has no effect in the context of a sequence;

- asSet() returns a set containing the elements of the self collection. Order is lost from a sequence or an ordered set. Duplicates are removed from a bag or a sequence. Has no effect in the context of a set.

Note that, in the current ATL version, the casting operation asOrderedSet() defined by OCL is not implemented for any of the collection types.

Sequence data type operations

The sequence type supports all the collection operations. OCL defines a number of additional operations that are specific to sequences:

- union(c : Collection) returns a sequence composed of all elements of self followed by the elements of c;

- flatten() returns a sequence directly containing the children of the nested subordinate collections contained by self;

- append(o : oclAny) returns a copy of self with the element o added at the end of the sequence;

- prepend(o : oclAny) returns a copy of self with the element o added at the beginning of the sequence;

- insertAt(n : Integer, o : oclAny) returns a copy of self with the element o added at rank n of the sequence;

- subSequence(lower : Integer, upper : Integer) returns a subsequence of self starting from rank lower to rank upper (both bounds being included);

- at(n : Integer) returns the element located at rank n in self;

- indexOf(o : oclAny) returns the rank of first occurrence of o in self;

- first() returns the first element of self (oclUndefined if self is empty);

- last() returns the last element of self (oclUndefined if self is empty);

- including(o : oclAny) returns a copy of self with the element o added at the end of the sequence;

- excluding(o : oclAny) returns a copy of self with all occurrences of element o removed.
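Two points in the list above are easy to overlook: ranks start at 1, and every operation returns a copy and leaves self unchanged. The following Python sketch models a few of these operations with lists; the function names are hypothetical stand-ins, not ATL code.

```python
# Python model using lists; ranks start at 1 as in OCL. Names are hypothetical.

def insert_at(seq, n, o):
    """Copy of seq with o inserted at rank n."""
    return seq[:n - 1] + [o] + seq[n - 1:]


def sub_sequence(seq, lower, upper):
    """Elements from rank lower to rank upper, both included."""
    return seq[lower - 1:upper]


def at(seq, n):
    """Element located at rank n."""
    return seq[n - 1]


def excluding(seq, o):
    """Copy of seq with all occurrences of o removed."""
    return [e for e in seq if e != o]


original = [5, 15, 20]
print(insert_at(original, 2, 10))        # [5, 10, 15, 20]
print(original)                          # [5, 15, 20], unchanged
print(sub_sequence([1, 2, 3, 4], 2, 3))  # [2, 3]
print(at([7, 8, 9], 1))                  # 7
print(excluding([1, 2, 1, 3], 1))        # [2, 3]
```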

Set data type operations

Set supports all collection operations and some specific ones:

- union(c : Collection) returns a set composed of the elements of self and the elements of c with duplicates removed (they may appear within c, and between c and self elements);

- intersection(c : Collection) returns a set composed of the elements that appear both in self and c;

- operator - (s : Set) returns a set composed of the elements of self that are not in s;

- including(o : oclAny) returns a copy of self with the element o added if it is not already present in self;

- excluding(o : oclAny) returns a copy of self with the element o removed from the set;

- symetricDifference(s : Set) returns a set composed of the elements that are in self or s, but not in both.

Note that the flatten() operation defined by OCL is not implemented in the current version of ATL.
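The set operations listed above behave like their mathematical counterparts. As a rough illustration, the Python sketch below uses the built-in set type as a stand-in for the OCL Set type (an analogy only, not ATL code) and reuses the values of the examples given at the end of this section.

```python
# Python's built-in set is used as a stand-in for the OCL Set type.
a = {1, 2, 3}
b = {7, 3, 5}

union = a | b                 # union(c)
intersection = a & b          # intersection(c)
difference = a - b            # operator -
symmetric_difference = a ^ b  # symetricDifference(s)
including_4 = a | {4}         # including(4)
excluding_3 = a - {3}         # excluding(3)

print(sorted(union))                 # [1, 2, 3, 5, 7]
print(sorted(intersection))          # [3]
print(sorted(difference))            # [1, 2]
print(sorted(symmetric_difference))  # [1, 2, 5, 7]
print(sorted(including_4))           # [1, 2, 3, 4]
print(sorted(excluding_3))           # [1, 2]
```

The results are sorted only for display, since a set has no order.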

OrderedSet data type operations

The ordered set type supports all the collection operations. OCL defines a number of additional operations that are specific to ordered sets:

- append(o : oclAny) returns a copy of self with the element o added at the end of the ordered set if it does not already appear in self;

- prepend(o : oclAny) returns a copy of self with the element o added at the beginning of the ordered set if it does not already appear in self;

- insertAt(n : Integer, o : oclAny) returns a copy of self with the element o added at rank n of the ordered set if it does not already appear in self;

- subOrderedSet(lower : Integer, upper : Integer) returns the part of self that starts at rank lower and ends at rank upper (both bounds being included);

- at(n : Integer) returns the element located at rank n in self;

- indexOf(o : oclAny) returns the rank of first occurrence of o in self;

- first() returns the first element of self (oclUndefined if self is empty);

- last() returns the last element of self (oclUndefined if self is empty).

Besides this set of operations specified by OCL, ATL implements the following additional functions:

- union(c : Collection) returns an ordered set composed of the elements of self followed by the elements of c with duplicates removed (they may appear within c, and between c and self elements);

- flatten() returns an ordered set directly containing the children of the nested subordinate collections contained by self;

- including(o : oclAny) returns a copy of self with the element o added at the end of the ordered set if it does not already appear in self;

- excluding(o : oclAny) returns a copy of self with the element o removed.

Bag data type operations

The bag operations defined by the OCL specification are not available with the current ATL implementation.

Iterating over collections

The OCL specification defines a number of iterative operations, also called iterative expressions, on the collection types. The main difference between a classical operation and an iterative expression on a collection is that the iterative expression accepts an expression as parameter, whereas operations only deal with data. The definition of an iterative expression includes:

- the iterated collection, which is referred to as the source collection;

- the iterator variables declared in iterative expressions, which are referred to as the iterators;

- the expression passed as parameter to the operation, which is referred to as the iterator body.

The syntax used to call an iterative expression is the following:

source->operation_name(iterators | body)

ATL currently provides support for the following set of defined iterative expressions:

- exists(body) returns a boolean value stating whether body evaluates to true for at least one element of the source collection;

- forAll(body) returns a boolean value stating whether body evaluates to true for all elements of the source collection;

- isUnique(body) returns a boolean value stating whether body evaluates to a different value for each element of the source collection;

- any(body) returns one element of the source collection for which body evaluates to true. If body never evaluates to true, the operation returns OclUndefined;

- one(body) returns a boolean value stating whether there is exactly one element of the source collection for which body evaluates to true;

- collect(body) returns a collection of elements which results from applying body to each element of the source collection;

- select(body) returns the subset of the source collection for which body evaluates to true;

- reject(body) returns the subset of the source collection for which body evaluates to false (is equivalent to select(not body));

- sortedBy(body) returns a collection ordered according to body from the lowest to the highest value. Elements of the source collection must have the < operator defined.

Note that the collect() operation provided by ATL implements the semantics of the collectNested() operation defined in the OCL specification. Getting the semantics of the collect() operation as defined by OCL can simply be achieved with ATL by calling the flatten() operation onto the result provided by the ATL collect() iterative expression, as follows:

source->collect(iterator | body)->flatten()
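The difference between the two semantics can be sketched in Python. This is a model, not ATL code: collect and flatten are hypothetical Python functions, the classes data is invented for illustration, and the recursive flattening is an assumption of this sketch (the text above only involves one level of nesting).

```python
# Python model; the helper names and the sample data are hypothetical.

def collect(source, body):
    """ATL-style collect: one result per element, nesting preserved."""
    return [body(e) for e in source]


def flatten(nested):
    """Replace each nested list by its elements (recursively, by assumption)."""
    flat = []
    for item in nested:
        if isinstance(item, list):
            flat.extend(flatten(item))
        else:
            flat.append(item)
    return flat


# Each class is associated with the list of its attribute names.
classes = [{"attrs": ["id", "name"]}, {"attrs": ["title"]}]

nested = collect(classes, lambda c: c["attrs"])
print(nested)           # [['id', 'name'], ['title']]
print(flatten(nested))  # ['id', 'name', 'title']
```

The first result corresponds to what the ATL collect() returns when body evaluates to a collection; the second corresponds to the collect() semantics defined by OCL.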

The ATL language introduces another constraint compared to the OCL specification. The specification indeed allows declaring multiple iterators in the scope of the exists() and the forAll() iterative expressions. This feature is not supported by the current ATL implementation, in which the number of iterators is limited to one, whatever the considered iterative expression.

Besides these predefined iterative operations, OCL specifies a more generic collection iterator, named iterate(). This iterative expression has an iterator, an accumulator and a body. The accumulator corresponds to an initialized variable declaration. The body of an iterate() expression is an expression that should make use of both the declared iterator and accumulator. The value returned by an iterate() expression corresponds to the value of the accumulator variable once the last iteration has been performed. An iterate() expression is defined with the following syntax:

source->iterate(iterator; variable_declaration = init_exp | body)
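An iterate() expression is what many languages call a fold or reduce. The Python sketch below models it with functools.reduce; the iterate function defined here is a hypothetical Python helper, not ATL code, and its body takes the iterator and the accumulator as two explicit arguments.

```python
from functools import reduce


def iterate(source, init, body):
    """Fold body(element, accumulator) over source, starting from init."""
    return reduce(lambda acc, e: body(e, acc), source, init)


# Sum of the positive integers of a sequence.
total = iterate([8, -1, 2, 2, -3], 0, lambda e, res: res + e if e > 0 else res)
print(total)  # 12

# The same result with a select-then-sum formulation.
print(sum(e for e in [8, -1, 2, 2, -3] if e > 0))  # 12
```

The value of the accumulator after the last element is the result of the whole expression, which is why the body must return the new accumulator value at each step.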

Examples

In the following, some operations on collections are illustrated:

- declaring the sequence of integer type:

Sequence(Integer)

- specifying a sequence of integers:

Sequence{1, 2, 3}

- declaring the set of sequences of string type:

Set(Sequence(String))

- specifying a set of sequences of strings:

Set{Sequence{'monday'}, Sequence{'march', 'april', 'may'}}

- testing whether a bag is empty:

Bag{1, 2, 3}->isEmpty()

evaluates to false

- testing whether a set contains an element:

Set{1, 2, 3}->includes(1)

evaluates to true

- testing whether a set contains all the elements of another set:

Set{1, 2, 3}->includesAll(Set{3, 2})

evaluates to true

- getting the size of a sequence:

Sequence{1, 2, 3}->size()

evaluates to 3

note that Set{3, 3, 3}->size() evaluates to 1 since the set data type eliminates duplicates

- getting the first element of an ordered set:

OrderedSet{1, 2, 3}->first()

evaluates to 1

- computing the union of two sequences:

Sequence{1, 2, 3}->union(Sequence{7, 3, 5})

evaluates to Sequence{1, 2, 3, 7, 3, 5}

- computing the union of two sets:

Set{1, 2, 3}->union(Set{7, 3, 5})

evaluates to Set{1, 2, 3, 7, 5}

- flattening a sequence of sequences:

Sequence{Sequence{1, 2}, Sequence{3, 5, 2}, Sequence{1}}->flatten()

evaluates to Sequence{1, 2, 3, 5, 2, 1}

- computing a subsequence of a sequence:

Sequence{Sequence{1, 2}, Sequence{3, 5, 2}, Sequence{1}}->subSequence(2, 3)

evaluates to Sequence{Sequence{3, 5, 2}, Sequence{1}}

- inserting an element at a given position into a sequence:

Sequence{5, 15, 20}->insertAt(2, 10)

evaluates to Sequence{5, 10, 15, 20}

- computing the intersection of two sets:

Set{1, 2, 3}->intersection(Set{7, 3, 5})

evaluates to Set{3}

- computing the symmetric difference of two sets:

Set{1, 2, 3}->symetricDifference(Set{7, 3, 5})

evaluates to Set{1, 2, 7, 5}

- selecting all elements of a sequence that are smaller than or equal to 3:

Sequence{1, 2, 3, 4, 5, 6}->select(i | i <= 3)

evaluates to Sequence{1, 2, 3}

- collecting the names of all MOF classes:

MOF!Class.allInstances()->collect(e | e.name)

- checking whether all the numbers in a sequence are greater than 2:

Sequence{12, 13, 12}->forAll(i | i > 2)

evaluates to true

- checking whether there is only one element of the sequence that is greater than 2:

Sequence{12, 13, 12}->one(i | i > 2)

evaluates to false

- checking whether there exists a number in the sequence that is greater than 2:

Sequence{12, 13, 12}->exists(i | i > 2)

evaluates to true

- computing the sum of the positive integers of a sequence using the iterate instruction:

Sequence{8, -1, 2, 2, -3}->iterate(e; res : Integer = 0 |

if e > 0

then res + e

else res

endif

)

evaluates to 12 and is equivalent to

Sequence{8, -1, 2, 2, -3}->select(e | e > 0)->sum()

Enumeration data types

An enumeration is an OclType. It has a name just as any other data type. However, compared to the data types presented up to now, the enumerations have to be defined within the source and target metamodels of a transformation.

In the OCL specification, referring to an enumeration literal (i.e. a value defined by an enumeration) is achieved by specifying the enumeration type (i.e. the name of the enumeration), followed by two colons and the enumeration value. Consider, as an example, an enumeration named Gender that defines two possible values, male and female. Accessing the female value of this enumeration type in OCL is achieved as follows: Gender::female.

The current ATL implementation differs from the OCL specification. Access to enumeration values is simply achieved by prefixing the enumeration value with a hash character (the enumeration type is no longer required): #female. No specific operation is associated with the enumeration data type.

Tuple data type

The tuple data type makes it possible to compose several values into a single variable. A tuple consists of a number of named parts that may each have a distinct type. Note that a tuple type is not named. As a consequence, a declared tuple type has to be identified by its full declaration each time it is required.

Each part of a tuple type is associated with an OclType and is identified by a unique name. The declaration of a tuple data type must conform to the following syntax:

TupleType(var_name1 : var_type1, ..., var_nameN : var_typeN)

Note that the order in which the different parts are declared is not significant. As an example, it is possible to consider the declaration of a tuple type associating an Author model element from the MMAuthor metamodel with a couple of strings encoding the title of a book and the name of the editor of this book:

TupleType(a : MMAuthor!Author, title : String, editor : String)

The instantiation of a declared tuple variable has to respect the following syntax:

Tuple{var_name1 [: var_type1]? = init_exp1, ..., var_namen [: var_typen]? = init_expn}

When declaring a tuple instance, the types of the tuple parts can be omitted. As a consequence, the two following tuple instantiations corresponding to the tuple type defined above are equivalent:

Tuple{editor : String = 'ATL Eds.', title : String = 'ATL Manual', a : MMAuthor!Author = anAuthor}

Tuple{title = 'ATL Manual', a = anAuthor, editor = 'ATL Eds.'}

As for the declaration of a tuple type, the instantiation of the different parts of a tuple variable may be performed in any order. The different parts of a tuple structure can be accessed using the same dot notation that is used for the invocation of operations or the access to model element attributes. Thus, the expression

Tuple{title = 'ATL Manual', a = anAuthor, editor = 'ATL Eds.'}.title

provides access to the title part of the tuple.

Besides the set of common operations, the current ATL implementation defines an additional casting operation in the context of the tuple data type: the asMap() operation returns a map variable in which the names of the tuple parts are associated with their respective values.

Map data type

Provided as an additional facility in the ATL implementation, the map data type does not belong to the OCL specification. This data type makes it possible to manage a structure in which each value is associated with a unique key that is used to access it (see the Java Map interface for further details).

The declaration of a map type has to conform to the following syntax:

Map(key_type, value_type)

Note that, like a tuple type, a map type is not named, which again means that the full type declaration has to be specified when required. The following map declaration associates some Author model element values with integer keys:

Map(Integer, MMAuthor!Author)

Instantiating a map variable is achieved according to the following syntax:

Map{(key1, value1), ..., (keyn, valuen)}

As an example, the following expression instantiates a two-entry map corresponding to the map type declared above:

Map{(0, anAuthor1), (1, anAuthor2)}

Besides the set of common operations, the ATL implementation provides the following operations on map data:

- get(key : oclAny) returns the value associated with key within the self map (or OclUndefined if key is not a key of self);

- including(key : oclAny, val : oclAny) returns a copy of self in which the couple (key, val) has been inserted if key is not already a key of self;

- union(m : Map) returns a map containing all self elements to which are added those elements of m whose key does not appear in self;

- getKeys() returns a set containing all the keys of self;

- getValues() returns a bag containing all the values of self.
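The map operations above never overwrite an existing key: including() and union() both keep the entries of self when a key is already present. The Python sketch below models this behaviour with dictionaries; map_get, map_including and map_union are hypothetical helper names, Python's None stands in for OclUndefined, and the author values are plain strings chosen for illustration.

```python
# Python dicts as a stand-in for the ATL Map type; names are hypothetical.

def map_get(m, key):
    """Value for key, or None (standing in for OclUndefined)."""
    return m.get(key)


def map_including(m, key, val):
    """Copy of m with (key, val) inserted unless key is already present."""
    result = dict(m)
    result.setdefault(key, val)
    return result


def map_union(m, other):
    """Copy of m plus the entries of other whose key is not in m."""
    result = dict(other)
    result.update(m)  # entries of m take precedence
    return result


authors = {0: "anAuthor1", 1: "anAuthor2"}

print(map_get(authors, 2))                 # None
print(map_including(authors, 1, "other"))  # {0: 'anAuthor1', 1: 'anAuthor2'}
print(map_including(authors, 2, "anAuthor3") == {0: "anAuthor1", 1: "anAuthor2", 2: "anAuthor3"})  # True
print(map_union(authors, {1: "x", 3: "y"}) == {0: "anAuthor1", 1: "anAuthor2", 3: "y"})  # True
print(authors)                             # {0: 'anAuthor1', 1: 'anAuthor2'}, unchanged
```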

Model element data type

The last kind of data type introduced by the OCL specification corresponds to the model elements. These are defined within the source and target metamodels of an ATL transformation. Metamodels usually define a number of different model elements (also called classes).

In ATL, model element variables are referred to by means of the notation metamodel!class in which metamodel identifies (through its name) one of the metamodels handled by the transformation, and class points to a given model element (i.e. class) of this metamodel. Note that, as opposed to the OCL notation, which does not specify the metamodel a given class comes from, the ATL notation makes it possible to handle several metamodels at once.

A model element has a number of features that can be either attributes or references. Both are accessed through the dot notation self.feature. Thus, in the context of the MMAuthor metamodel, the expression anAuthor.name accesses the attribute name of the instance anAuthor of the Author class.

In ATL, the model elements can only be generated by means of the ATL rules (either matched or called rules). Initializing a newly generated model element consists in initializing its different features. Such assignments are performed by means of the bindings of the rules' target pattern elements.

Please note that the operation oclIsUndefined(), defined for the OclAny data type, tests whether the value of an expression is undefined. This operation is useful when applied on an attribute with a multiplicity zero to one (which is void or not). However, attributes with the multiplicity n are usually represented as collections that may be empty and not void.

Full name reference to metamodel classes

It is also possible to include the full path using the following scheme:

<Package1Name>::<Package2Name>::<ClassifierName>

Because the ATL parser does not deal well with "::", the path must be enclosed in double quotes (").

For instance, using the metamodel excerpt given above, we could write:

MM!"P1::C1"

MM!"P1::P2::C2"

MM!"P3::C3"

In some cases, full name reference is required to avoid ambiguity due to name collision. Let us consider the following metamodel:

package P1 {
    class C1 {}
    package P2 {
        class C1 {}
    }
}
package P3 {
    class C1 {}
}

Using MM!C1 is incorrect because it cannot reliably be mapped to a specific class. If you try to do this, a warning will be reported in the ATL console. In this case, it is mandatory to write:

MM!"P1::C1"

MM!"P1::P2::C1"

MM!"P3::C1"

Examples

Here is a sample of OCL expressions using features of model elements. They are defined in the context of the MOF metamodel:

- collect the names of all MOF classes:

MOF!Class.allInstances()->collect(e | e.name)

- getting the names of all primitive MOF types by filtering:

MOF!DataType.allInstances()

->select(e | e.oclIsTypeOf(MOF!PrimitiveType))

->collect(e| e.name)

- getting the names of all primitive MOF types the simple way:

MOF!PrimitiveType.allInstances()->collect(e| e.name)

- an enumeration instance in MOF:

MOF!VisibilityKind.labels

- getting the names of all classes inheriting from more than one class:

MOF!Class.allInstances()

->select(e | e.supertypes->size() > 1)

->collect(e | e.name)

ATL Comments

In ATL, as in the OCL standard, comments start with two consecutive hyphens "--" and end at the end of the line. The ATL editor in Eclipse colours comments with dark green, if the standard configuration is used:

-- this is an example of a comment

OCL Declarative Expressions

Besides the declarative expressions that correspond to instances of the supported data types and to the invocation of operations on these data types, OCL defines additional declarative expressions that enable developers to structure OCL code. This section describes these expressions. There are two kinds of advanced declarative expressions: the "if" and the "let" expressions. The "if" expression provides an alternative expression facility. The "let" expression makes it possible to define and initialize new OCL variables.

If expression

An OCL "if" expression is expressed with an if-then-else-endif structure. As an expression, an "if" expression must evaluate to a value in all cases. This means that the "else" clause of an "if" expression cannot be omitted. All "if" expressions must conform to the following syntax:

if condition

then

exp1

else

exp2

endif

The condition of the "if" expression is a boolean expression. According to the evaluation of this boolean expression, the "if" expression will return the value corresponding to either exp1 (in case condition is evaluated to true) or exp2 (in case condition is evaluated to false). This is illustrated by the following simple "if" expression:

if 3 > 2 then 'three is greater than two' else 'this case should never occur' endif

Note that the different parts of an "if" expression can, in turn, include other composed OCL expressions, including operation invocations, "let" expressions or nested "if" expressions, as in the following example:

if mySequence->notEmpty() then
	if mySequence->includes(myElement) then
		'the element is at position ' + mySequence->indexOf(myElement).toString()
	else
		'the sequence does not contain the element'
	endif
else
	'the sequence is empty'
endif
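As a further sketch, a nested "if" expression can serve as the body of a helper. The helper name sign is hypothetical. Note that each "if" carries its own "else" clause and its own closing endif:

```atl
-- Hypothetical helper returning a textual description of the sign of an integer.
helper context Integer def : sign() : String =
	if self > 0 then
		'positive'
	else
		if self < 0 then
			'negative'
		else
			'zero'
		endif
	endif;
```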

Let expression

The OCL "let" expression enables the definition of variables. A "let" expression has to conform to the following syntax:

let var_name : var_type = var_init_exp in exp

The identifier var_name corresponds to the name of the declared variable. var_type identifies the type of the declared variable. A variable declared by means of a "let" expression must be initialized with the var_init_exp. The initialization expression can be of any available OCL expression type, including nested "let" expressions. Finally, the in keyword introduces the expression in which the newly declared variable can be used. Again, this expression can be of any existing OCL expression type. This is illustrated by the following simple example:

let a : Integer = 1 in a + 1

Several "let" expressions can be chained in order to declare several variables, as in the following example:

let x : Real =
	if aNumber > 0 then
		aNumber.sqrt()
	else
		aNumber.square()
	endif
in let y : Real = 2 in x/y

An OCL variable is visible from its declaration to the end of the OCL expression it belongs to. Note that, although it is not advised, OCL allows developers to declare several variables with the same name within a single expression. In such a case, the most recently declared variable hides the other variables having the same name.
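The hiding rule can be sketched as follows. The variable names and values are arbitrary:

```atl
let x : Integer = 1 in
	let x : Integer = 2 in
		-- 'x' refers to the inner declaration here; the outer 'x' is hidden
		x + 1
```

Giving each variable a distinct name avoids this ambiguity altogether.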


In order to illustrate this point, consider the following expression:

aSequence->first().square()

It is assumed here that the collection aSequence is a sequence of Real elements. If this sequence is empty, the invocation of the operation first() will return the value OclUndefined. Invoked on OclUndefined, the operation square() will raise an error at runtime. In such a case, it may be useful to be able to check, at the debugging stage, whether the first element exists or is undefined by storing its value in a dedicated variable. This is the purpose of the following expression:

let firstElt : Real = aSequence->first() in firstElt.square()
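Once the intermediate value is held in a variable, it can also be tested before use. The sketch below guards against the undefined case. The fallback value 0.0 is an arbitrary choice made for illustration, and the multiplication stands in for square():

```atl
let firstElt : Real = aSequence->first() in
	if firstElt.oclIsUndefined() then
		0.0                    -- arbitrary fallback for an empty sequence
	else
		firstElt * firstElt
	endif
```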

Other expressions

Besides the "if" and "let" structural expressions, OCL makes it possible to define different kinds of expressions whose syntax has been introduced in the Data Types section. These expressions include:

- the constant expressions, which correspond to a constant value of any supported data type;

- the helper/attribute call expressions, which correspond to the call of a helper/attribute defined in the context of either the ATL module or any source model element. The expression is resolved into the value returned by the helper/attribute;

- the operation call expressions, which correspond to the call of a standard operation defined for a supported data type. The expression is resolved into the value returned by the operation;

- the collection iterative expressions, which correspond to the call of an iterative expression on a supported collection data type. The expression is resolved into the value returned by the called iterative operation.
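The four kinds listed above can be sketched with one standalone expression each. These are separate expressions rather than a compilable unit, and anAuthor is assumed to be an instance of the Author class of the MMAuthor metamodel:

```atl
-- constant expression
42

-- attribute call expression on a source model element
anAuthor.name

-- operation call expression on a String value
'ATL'.size()

-- collection iterative expression on a Sequence
Sequence{1, 2, 3}->select(i | i > 1)
```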

Expressions tips & tricks

A number of errors made while designing OCL expressions in ATL come from the evaluation mode of these OCL expressions. Indeed, many languages, such as C++ and Java, use short-circuit evaluation: the evaluation of a logical expression stops as soon as a true value is found as the left operand of the "or" logical operator, or a false value as the left operand of the "and" logical operator. Even if the rest of the expression would result in an error or an exception, the expression is successfully evaluated.

As opposed to these common programming languages, the semantics of composed expressions, as defined by OCL, are such that each expression has to be fully evaluated. As a consequence, some expressions that usually appear to be correct will raise errors in ATL, as illustrated by the following example:

not person.oclIsUndefined() and person.name = 'Isabel'

This expression will therefore raise an error for an undefined person model element when evaluating the expression person.name. An error-free way to express an equivalent logical expression is:

if person.oclIsUndefined() then false else person.name = 'Isabel' endif

The same remark can be applied similarly to the logical expressions that use the logical "or" operator, such as:

person.oclIsUndefined() or person.name = 'Isabel'

The correct way to express this logical expression is:

if person.oclIsUndefined() then true else person.name = 'Isabel' endif

Note that the logical expressions that are likely to raise this kind of error may be embedded in more complex OCL expressions:

collection->select(person | not person.oclIsUndefined() and person.name = 'Isabel')

Using the same rewriting rule, this expression can be transformed into the following correct expression:

collection->select(person | if person.oclIsUndefined() then false else person.name = 'Isabel' endif )

There may exist several ways to rewrite an incorrect expression. Thus, the following expression will compute the same result:

collection
	->select(person | not person.oclIsUndefined())
	->select(person | person.name = 'Isabel')

Note that the first solution should be preferred to this one for efficiency reasons: the first solution iterates over the collection only once.
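When the same guarded test is needed in several places, one option is to factor it into a module helper. The sketch below is only an illustration: the helper name hasName is hypothetical, and the Person class with its name attribute is assumed to belong to the MMPerson metamodel:

```atl
-- Hypothetical helper: true only if 'p' is defined and its name equals 'n'.
helper def : hasName(p : MMPerson!Person, n : String) : Boolean =
	if p.oclIsUndefined() then
		false
	else
		p.name = n
	endif;
```

The selection could then be written as collection->select(person | thisModule.hasName(person, 'Isabel')), which still iterates over the collection only once.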

ATL Helpers

ATL enables developers to define methods within the different kinds of ATL units. In the ATL context, these methods are called helpers. They make it possible to define factorized ATL code that can then be called from different points of an ATL program. There are two kinds of helpers, which differ in semantics but have very similar syntax: the functional helpers and the attribute helpers. Both kinds of helpers must be defined in the context of a given data type. However, unlike an attribute helper, which is commonly referred to as an attribute, a functional helper, referred to as a helper, can accept parameters. This difference implies some differences in the execution semantics of the two helper kinds.

Helpers

ATL helpers can be viewed as the ATL equivalent to methods. They make it possible to define factorized ATL code that can be called from different points of an ATL transformation. An ATL helper is defined according to the following scheme:

helper [context context_type]? def : helper_name(parameters) : return_type = exp;

Each helper is characterized by its context (context_type), its name (helper_name), its set of parameters (parameters) and its return type (return_type). The context of a helper is introduced by the keyword context. It defines the kind of elements the helper applies to, that is, the type of the elements from which it will be possible to invoke it. Note that the context may be omitted in a helper definition. In such a case, the helper is associated with the global context of the ATL module. This means that, in the scope of such a helper, the variable self refers to the running module/query itself.

The name of a helper is introduced by the keyword def. Like its context, it is part of the signature of the helper (along with the parameters and the return_type). A helper accepts a set of parameters that is specified between parentheses after the helper's name. A parameter definition includes both the parameter name and the parameter type, as specified by the following scheme:

parameter_name : parameter_type

Several parameters can be declared by separating them with a comma (","). The name of the parameter (parameter_name) is a variable identifier within the helper. This means that, within a given helper definition, each parameter name must be unique. Note that the specified context type, as well as the parameter types and the return type, may be any of the data types supported by ATL. The body of a helper is specified as an OCL expression. This expression can be of any of the supported expression types. Consider the following helper:

helper def : averageLowerThan(s : Sequence(Integer), value : Real) : Boolean =
	let avg : Real = s->sum()/s->size() in avg < value;

This helper, named averageLowerThan, is defined in the context of the ATL module (since no context is explicitly specified). It aims to compute a boolean value stating whether the average of the values contained by an integer sequence (the s parameter) is strictly lower than a given real value (the value parameter). The body of the helper consists in a "let" expression which defines and initializes the avg variable. This variable is then compared to the reference value.
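For comparison, here is a sketch of a helper defined with an explicit context. The helper name isOlderThan is hypothetical, and an age attribute on the Person class of the MMPerson metamodel is assumed:

```atl
-- Hypothetical context helper; assumes MMPerson!Person has an 'age' attribute.
helper context MMPerson!Person def : isOlderThan(other : MMPerson!Person) : Boolean =
	-- 'self' is the Person on which the helper is invoked
	self.age > other.age;
```

A module helper such as averageLowerThan is invoked through thisModule, for example thisModule.averageLowerThan(Sequence{1, 2, 3}, 2.5), whereas a context helper is invoked on an element of its context type, for example aPerson.isOlderThan(anotherPerson).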

Note that several helpers may have the same name in a single transformation. However, helpers with the same name must have distinct signatures to be distinguishable by the ATL engine (see Limitations).
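As a sketch, the two helpers below share a name but are defined in different contexts. The name describe is hypothetical, and this example assumes that a difference in context is enough for the engine to tell the helpers apart; the Limitations section remains the reference on this point:

```atl
-- Same name, different contexts (assumed to be sufficient to distinguish them).
helper context Integer def : describe() : String =
	'integer ' + self.toString();

helper context String def : describe() : String =
	'string ' + self;
```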

Calling super helpers

The super keyword lets you call helpers with the same name defined on a super type of the current type.

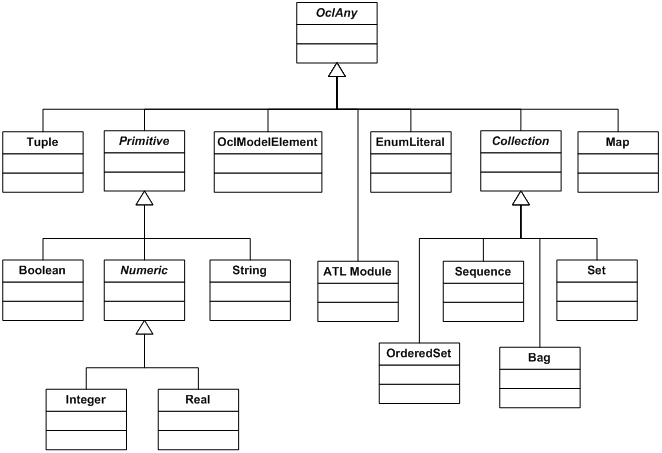

Suppose you have the following metamodel:

class A {}

class B extends A {}

Then you can write:

helper context A def: test() : Integer = 1;
helper context B def: test() : Integer = super.test() + 1;

Attributes

Besides helpers, the ATL language makes it possible to define attributes. Compared to a helper, an attribute can be viewed as a constant that is specified within a specific context. The major difference between a helper and an attribute definition is that the attribute accepts no parameter.

The syntax used to define an ATL attribute is very close to that of functional helpers. The only difference is that the attribute syntax does not allow any parameter to be defined:

helper [context context_type]? def : attribute_name : return_type = exp;

As for a helper, the context definition can be omitted in the declaration of an attribute. In this case, the attribute will be associated with the ATL module context. Consider the following attribute, which is related to the MMPerson metamodel:

helper def : getYoungest : MMPerson!Person =
	let allPersons : Sequence(MMPerson!Person) =
		MMPerson!Person.allInstances()->asSequence() in
	allPersons->iterate(p; y : MMPerson!Person = allPersons->first() |
		if p.age < y.age then
			p
		else
			y
		endif
	);
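An attribute can also be given an explicit context. The sketch below is hypothetical: the name isAdult and the threshold of 18 are arbitrary, and age is assumed to be an Integer attribute of the Person class:

```atl
-- Hypothetical attribute; assumes 'age' is an Integer attribute of MMPerson!Person.
helper context MMPerson!Person def : isAdult : Boolean =
	self.age >= 18;
```

Since attributes take no parameters, they are accessed without parentheses: thisModule.getYoungest for the module attribute above, and aPerson.isAdult for the context attribute.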